Cooperating Reasoning Processes: More Than Just the Sum of Their Parts


Recommended publications

Notices of The

Mathematics People

2012–2013 AMS Centennial Fellowship Awarded

The AMS has awarded its Centennial Fellowship for 2012–2013 to Karin Melnick of the University of Maryland. The fellowship carries a stipend of US$80,000, an expense allowance of US$8,000, and a complimentary Society membership for one year.

Karin Melnick was born and raised in Marin County, California. She attended Reed College in Portland, Oregon, and completed her Ph.D. at the University of Chicago in 2006 under the direction of Benson Farb. With a Postdoctoral Research Fellowship from the National Science Foundation, she went to Yale University as a Gibbs Assistant Professor. She received a Junior Research Fellowship from the Erwin Schrödinger Institute in spring 2009.

[Photo: Karin Melnick. Photo by Riza Falk.]

His research addresses a variety of topics, including the information content of quantum states, the physical resources needed for quantum computers to surpass classical computers, and the barriers to solving computer science's vexing P versus NP question, that is, whether every problem whose solution can be quickly verified by a computer can also be quickly solved by a computer.

The Waterman Award is given annually to one or more outstanding researchers under the age of thirty-five in any field of science or engineering supported by the NSF. The prize consists of a medal and a US$1 million grant to be used over a five-year period for further advanced study in the awardee's field.

(From an NSF announcement)

Kalai Awarded Rothschild Prize

Gil Kalai of the Einstein Institute of Mathematics, The Hebrew University of Jerusalem, has been named a recipient of the 2012 Rothschild Prize for his groundbreaking ...

Curriculum Vitae

Thomas Andreas Meyer

25 August 2019

Address: Room 312, Computer Science Building, University of Cape Town, University Avenue, Rondebosch

Tel: +27 12 650 5519

e-mail: [email protected]

WWW: http://cs.uct.ac.za/~tmeyer

Biographical information

Date of birth: 14 January 1964, Randburg, South Africa.

Marital status: Married to Louise Leenen. We have two children.

Citizenship: Australian and South African.

Qualifications

1. PhD (Computer Science), University of South Africa, Pretoria, South Africa, 1999.
2. MSc (Computer Science), Rand Afrikaans University (now the University of Johannesburg), Johannesburg, South Africa, 1986.
3. BSc Honours (Computer Science) with distinction, Rand Afrikaans University (now the University of Johannesburg), Johannesburg, South Africa, 1985.
4. BSc (Computer Science, Mathematical Statistics), Rand Afrikaans University (now the University of Johannesburg), Johannesburg, South Africa, 1984.

Employment and positions held

1. July 2015 to date: Professor, Department of Computer Science, University of Cape Town, Cape Town, South Africa.
2. July 2015 to June 2020: Director, Centre for Artificial Intelligence Research (CAIR), CSIR, Pretoria, South Africa.
3. July 2015 to June 2020: UCT-CSIR Chair in Artificial Intelligence, University of Cape Town, Cape Town, South Africa.
4. August 2011 to June 2015: Chief Scientist, CSIR Meraka Institute, Pretoria, South Africa.
5. May 2011 to June 2015: Director of the UKZN/CSIR Meraka Centre for Artificial Intelligence Research, CSIR and University of KwaZulu-Natal, South Africa.
6. August 2007 to July 2011: Principal Researcher, CSIR Meraka Institute, Pretoria, South Africa.
7. August 2007 to June 2015: Research Group Leader of the Knowledge Representation and Reasoning group (KRR) at the CSIR Meraka Institute, Pretoria, South Africa.

Membership of Sectional Committees 2015

The main responsibility of the Sectional Committees is to select a short list of candidates for consideration by Council for election to the Fellowship. The Committees meet twice a year, in January and March.

SECTIONAL COMMITTEE 1 [1963]: Mathematics

Chair: Professor Keith Ball

Members: Professor Philip Candelas, Professor Ben Green, Professor John Hinch, Professor Christopher Hull, Professor Richard Kerswell, Professor Chandrashekhar Khare, Professor Steffen Lauritzen, Professor David MacKay, Professor Robert MacKay, Professor James McKernan, Professor Michael Paterson, Professor Mary Rees, Professor John Toland, Professor Srinivasa Varadhan, Professor Alex Wilkie

SECTIONAL COMMITTEE 2 [1963]: Astronomy and physics

Chair: Professor Simon White

Members: Professor Girish Agarwal, Professor Michael Coey, Professor Jack Connor, Professor Laurence Eaves, Professor Nigel Glover, ...

SECTIONAL COMMITTEE 3 [1963]: Chemistry

Chair: Professor Anthony Stace

Members: Professor Varinder Aggarwal, Professor Harry Anderson, Professor Steven Armes, Professor Paul Attfield, Professor Shankar Balasubramanian, Professor Philip Bartlett, Professor Geoffrey Cloke, Professor Peter Edwards, Professor Malcolm Levitt, Professor John Maier, Professor Stephen Mann, Professor David Manolopoulos, Professor Paul O’Brien, Professor David Parker, Professor Stephen Withers

SECTIONAL COMMITTEE 4 [1990]: Engineering

Chair: Professor Hywel Thomas

Members: Professor Ross Anderson, Professor Alan Bundy, Professor Michael Burdekin, Professor Russell Cowburn, Professor John Crowcroft, ...

EU/UK Open Letter

Open Letter to the President of the European Parliament; the President of the European Council; the President of the European Commission; the Prime Minister of the United Kingdom; the governments of the member states of the European Union; the government of Norway and the government of Switzerland

Artificial Intelligence is a field of research and technology that is already having an important impact on the citizens and nations across Europe, an impact that is certain to increase significantly in the future. Europe is also home to many of the world's best researchers and innovators in Artificial Intelligence, and many of these are based in the UK, a country with a rich and proud tradition in computing science and Artificial Intelligence.

We, the researchers and stakeholders comprising CLAIRE (the Confederation of Laboratories for Artificial Intelligence Research in Europe; see also below), believe that, regardless of the future relationship between the UK and the EU, it is of great importance that all European nations, including the UK, closely collaborate on research and innovation in Artificial Intelligence. This is an area that is likely to bring fundamental change to the way we live and work in the short, medium and long term. It will also play an important role in addressing the grand challenges we face as individuals and as societies.

In addition, as a general-purpose technology, Artificial Intelligence is a global “game changer” and a major driver of innovation, future growth, and competitiveness; however, to fully realise the benefits and responsibly manage the risks associated with Artificial Intelligence, cooperation across Europe (and beyond) is essential.

Membership of Sectional Committees 2014

The main responsibility of the Sectional Committees is to select a short list of candidates for consideration by Council for election to the Fellowship. The Committees meet twice a year, in January and March.

SECTIONAL COMMITTEE 1 [1963]: Mathematics

Chair: Professor Peter McCullagh

Members: Professor Keith Ball, Professor Philip Candelas, Professor Michael Duff, Professor Georg Gottlob, Professor Ben Green, Professor Richard Kerswell, Professor Robert MacKay, Professor James McKernan, Professor Michael Paterson, Professor Mary Rees, Professor Andrew Soward, Professor John Toland, Professor Srinivasa Varadhan, Professor Alex Wilkie, Professor Trevor Wooley

SECTIONAL COMMITTEE 2 [1963]: Astronomy and physics

Chair: Professor Simon White

Members: Professor Gabriel Aeppli, Professor Girish Agarwal, Professor Michael Coey, Professor Jack Connor, Professor Stan Cowley, ...

SECTIONAL COMMITTEE 3 [1963]: Chemistry

Chair: Professor Anthony Stace

Members: Professor Varinder Aggarwal, Professor Shankar Balasubramanian, Professor Philip Bartlett, Professor Peter Bruce, Professor Eleanor Campbell, Professor Geoffrey Cloke, Professor Peter Edwards, Professor Craig Hawker, Professor Philip Kocienski, Professor Malcolm Levitt, Professor David Manolopoulos, Professor Paul O’Brien, Professor David Parker, Professor Ezio Rizzardo, Professor Stephen Withers

SECTIONAL COMMITTEE 4 [1990]: Engineering

Chair: Professor Keith Bowen

Members: Professor Ross Anderson, Professor Alan Bundy, Professor John Burland, Professor Russell Cowburn, Dame Ann Dowling, ...

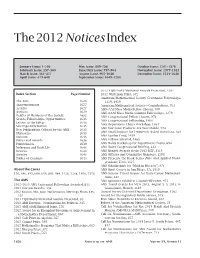

The 2012 Notices Index

Issue page ranges

- January issue: 1–256
- February issue: 257–360
- March issue: 361–472
- April issue: 473–608
- May issue: 609–736
- June/July issue: 737–904
- August issue: 905–1048
- September issue: 1049–1200
- October issue: 1201–1376
- November issue: 1377–1512
- December issue: 1513–1648

Index Section, Page Number

- The AMS, 1626
- Announcements, 1627
- Articles, 1627
- Authors, 1629
- Deaths of Members of the Society, 1632
- Grants, Fellowships, Opportunities, 1633
- Letters to the Editor, 1635
- Meetings Information, 1635
- New Publications Offered by the AMS, 1635
- Obituaries, 1635
- Opinion, 1636
- Prizes and Awards, 1636
- Prizewinners, 1639
- Reference and Book List, 1643
- Reviews, 1643
- Surveys, 1643
- Tables of Contents, 1644

About the Cover: 110, 346, ...

Index entries

- 2012 Trjitzinsky Memorial Awards Presented, 1581
- 2012 Whiteman Prize, 572
- American Mathematical Society Centennial Fellowships, 1120, 1459
- American Mathematical Society—Contributions, 701
- AMS–AAS Mass Media Fellow Chosen, 850
- AMS-AAAS Mass Media Summer Fellowships, 1279
- AMS Congressional Fellow Chosen, 974
- AMS Congressional Fellowship, 1460
- AMS Department Chairs Workshop, 1461
- AMS Electronic Products Are Now Mobile, 974
- AMS Email Support for Frequently Asked Questions, 328
- AMS Epsilon Fund, 1459
- AMS Fellows Selected, 1465
- AMS Holds Workshop for Department Chairs, 694
- AMS Hosts Congressional Briefing, 442
- AMS Menger Awards at the 2012 ISEF, 1118
- AMS Officers and Committee Members, 1290
- AMS Presents the Book Series Pure and Applied Undergraduate Texts, 1125
- AMS Scholarships for “Math in Moscow”, 971
- AMS Short Course in San Diego, CA, 1319

Computer Workshop Speaker Biographies (PDF)

Troy Astarte

Troy Kaighin Astarte is a research assistant in computing science at Newcastle University. Their PhD research was on the history of programming language semantics and they are now working with Cliff Jones on a research project on the history of concurrency in programming and computing systems, funded by the Leverhulme Trust.

Alan Bundy CBE FREng FRS

Following a PhD in Mathematical Logic at the University of Leicester, Alan Bundy came to Edinburgh in June 1971 to join Bernard Meltzer’s Meta-Mathematics Unit (MMU), and has been at the University of Edinburgh ever since. In the mid-1960s, Donald Michie had attracted to Edinburgh Christopher Longuet-Higgins and Richard Gregory. These three founded the Department of Machine Intelligence and Perception (DMIP), to which MMU was closely connected. Alan learnt about the many pioneering AI projects conducted at MMU and DMIP during their history, and those in the successor Department of Artificial Intelligence, and contributed to them himself.

Chris Burton

Chris Burton joined Ferranti Ltd Computer Department in 1957 and worked on the last of the large vacuum tube computers made by that firm. His career extended through hardware project management on the 1900 and 2900 series of mainframes, then into exploitation of microprocessors and finally into AI in the UK Alvey Project. He was one of the founder members of the Computer Conservation Society and from 1989 has served in various roles including leading the Pegasus and Elliott 401 Restoration Working Parties. In 1994 he started and led the major project to create a working replica of the Manchester ‘Baby’ computer, the world's first stored program computer.