Computing Skills for Biologists

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

AFS - a Secure Distributed File System

SLAC-PUB-11152 Part III: AFS - A Secure Distributed File System. Alf Wachsmann. Submitted to Linux Journal issue #132/April 2005. Stanford Linear Accelerator Center, Stanford University, Stanford, CA 94309. Work supported by Department of Energy contract DE–AC02–76SF00515.

III.1 Introduction: AFS is a secure distributed global file system providing location independence, scalability and transparent migration capabilities for data. AFS works across a multitude of Unix and non-Unix operating systems and has been used in production at many large sites for many years. AFS still provides unique features that are not available with other distributed file systems even though AFS is almost 20 years old. This age might make it less appealing to some, but with IBM making AFS available as open source in 2000, new interest in use and development was sparked. When talking about AFS, people often mention other file systems as potential alternatives. Coda (http://www.coda.cs.cmu.edu/) with its disconnected mode will always be a research project and never have production quality. Intermezzo (http://www.inter-mezzo.org/) is now in the Linux kernel but not available for any other operating systems. NFSv4 (http://www.nfsv4.org/), which picked up many ideas from AFS and Coda, is not mature enough yet to be used in serious production mode. This article presents the rich features of AFS and invites readers to play with it.

III.2 Features and Benefits of AFS: AFS client software is available for many Unix flavors: HP, Compaq, IBM, SUN, SGI, Linux. -

Introduction to Ggplot2

Introduction to ggplot2. Dawn Koffman, Office of Population Research, Princeton University, January 2014

Part 1: Concepts and Terminology

R Package: ggplot2
Used to produce statistical graphics; author: Hadley Wickham. "attempt to take the good things about base and lattice graphics and improve on them with a strong, underlying model"
Based on The Grammar of Graphics by Leland Wilkinson, 2005: "... describes the meaning of what we do when we construct statistical graphics ... More than a taxonomy ... Computational system based on the underlying mathematics of representing statistical functions of data."
- does not limit developer to a set of pre-specified graphics
- adds some concepts to grammar which allow it to work well with R

qplot()
ggplot2 provides two ways to produce plot objects:
qplot(): quick plot, not covered in this workshop
- uses some concepts of The Grammar of Graphics, but doesn't provide full capability
- designed to be very similar to plot() and simple to use
- may make it easy to produce basic graphs but may delay understanding philosophy of ggplot2
ggplot(): grammar of graphics plot, focus of this workshop
- provides fuller implementation of The Grammar of Graphics
- may have steeper learning curve but allows much more flexibility when building graphs

Grammar Defines Components of Graphics
- data: in ggplot2, data must be stored as an R data frame
- coordinate system: describes 2-D space that data is projected onto, for example, Cartesian coordinates, polar coordinates, map projections, ...
- geoms: describe type of geometric objects that represent data, for example, points, lines, polygons, ...
- aesthetics: describe visual characteristics that represent data, for example, position, size, color, shape, transparency, fill
- scales: for each aesthetic, describe how visual characteristic is converted to display values, for example, log scales, color scales, size scales, shape scales, .. -

Written & Oral Presentation: Computer Tools

Written & Oral Presentation: Computer Tools. Aleksandar Donev, Courant Institute, NYU, [email protected]. Course MATH-GA.2840-004, Spring 2018. February 7th, 2018. A. Donev (Courant Institute) Tools 2/7/2018 1 / 13

Outline: 1 LaTeX

What is LaTeX? Some content taken from Wikipedia. TeX is a typesetting system: "allow anybody to produce high-quality books using minimal effort, and to provide a system that would give exactly the same results on all computers, at any point in time." Knuth had the idea to use mathematics to typeset mathematics! LaTeX is a markup language for technical writing, with special emphasis on math-heavy writing, built on top of TeX: "TeX handles the layout side, while LaTeX handles the content side for document processing." What's a markup language and how does it differ from WYSIWYG ("what you see is what you get") word processors like Microsoft Word? Compare to HTML, and contrast interpreted versus compiled languages. Using LaTeX: write-format-preview (compare to code-compile-execute).

Why LaTeX? Advantages of LaTeX (interactive): Abstract: Separate presentation from content: focus on the content and not visual appearance. Portable: LaTeX files are simple text files so perfectly portable and easy to open/edit/share/diff. Flexible: Change appearance/format by changing one word, e.g., the document class. Extensible: macros allow one to add new functionality. Any advantages of WYSIWYG? (interactive) LyX is a combination of the two: Focus on content but also see it on your screen! (LyX demo, including change tracking). -

How to Write a Paper and Format It Using LATEX

How To Write a Paper and Format it Using LaTeX. Jennifer E. Hoffman (1, 2, ∗). (1) Department of Physics, Harvard University, Cambridge, Massachusetts 02138, USA. (2) School of Engineering & Applied Sciences, Harvard University, Cambridge, Massachusetts 02138, USA. (Dated: July 30, 2020)

The goal of this document is to demonstrate how to write a paper. We walk through the process of outlining, writing, formatting in LaTeX, making figures, referencing, and checking style and content. Source files are available at: http://hoffman.physics.harvard.edu/example-paper/.

I. GETTING STARTED

You should start writing your paper while you are working on your experiment. Prof. George Whitesides says: "A paper is not just an archival device for storing a completed research program; it is also a structure for planning your research in progress. If you clearly understand the purpose and form of a paper, it can be immensely useful to you in organizing and conducting your research. A good outline for the paper is also a good plan for the research program. You should write and rewrite these plans/outlines throughout the course of the research.

8. Rewrite your abstract, taking into account what you have learned from the process of writing the paper. As you fine-tune your abstract, you may find it helpful to refer to Nature's instructions for writing an abstract [2] and for clear communication more generally [3].

As you contemplate the paper you have just written, put yourself in the shoes of the reviewers (including your collaborators). You already work many, many hours/week, and you don't really want to spend more time reading this paper. So you're going to be very happy if the figures are pretty, the text flows logically, the ref-

Regression Models by Gretl and R Statistical Packages for Data Analysis in Marine Geology Polina Lemenkova

Regression Models by Gretl and R Statistical Packages for Data Analysis in Marine Geology. Polina Lemenkova.

To cite this version: Polina Lemenkova. Regression Models by Gretl and R Statistical Packages for Data Analysis in Marine Geology. International Journal of Environmental Trends (IJENT), 2019, 3 (1), pp.39 - 59. hal-02163671

HAL Id: hal-02163671, https://hal.archives-ouvertes.fr/hal-02163671. Submitted on 3 Jul 2019.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Distributed under a Creative Commons Attribution 4.0 International License.

International Journal of Environmental Trends (IJENT) 2019: 3 (1), 39-59. ISSN: 2602-4160. Research Article. Polina Lemenkova (ORCID ID number: 0000-0002-5759-1089), Ocean University of China, College of Marine Geo-sciences, 238 Songling Rd., 266100, Qingdao, Shandong, P. R. C. Tel.: +86-1768-554-1605. Received 3 May 2018.

Abstract: The Gretl and R statistical libraries make it possible to perform data analysis using various algorithms, modules and functions. The case study of this research is a geospatial analysis of the Mariana Trench, a hadal trench located in the Pacific Ocean. -

Complete Issue 40:3 As One

TUGBOAT Volume 40, Number 3 / 2019

General Delivery
211 From the president / Boris Veytsman
212 Editorial comments / Barbara Beeton: TeX Users Group 2019 sponsors; Kerning between lowercase+uppercase; Differential "d"; Bibliographic archives in BibTeX form
213 Ukraine at BachoTeX 2019: Thoughts and impressions / Yevhen Strakhov
Publishing
215 An experience of trying to submit a paper in LaTeX in an XML-first world / David Walden
217 Studying the histories of computerizing publishing and desktop publishing, 2017–19 / David Walden
Resources
229 TeX services at texlive.info / Norbert Preining
231 Providing Docker images for TeX Live and ConTeXt / Island of TeX
232 TeX on the Raspberry Pi / Hans Hagen
Software & Tools
234 MuPDF tools / Taco Hoekwater
236 LaTeX on the road / Piet van Oostrum
Graphics
247 A Brazilian Portuguese work on MetaPost, and how mathematics is embedded in it / Estevão Vinícius Candia
LaTeX
251 LaTeX news, issue 30, October 2019 / LaTeX Project Team
Methods
255 Understanding scientific documents with synthetic analysis on mathematical expressions and natural language / Takuto Asakura
Fonts
257 Modern Type 3 fonts / Hans Hagen
Multilingual Document Processing
263 Typesetting the Bangla script in Unicode TeX engines—experiences and insights / Md Qutub Uddin Sajib
Typography
270 Typographers' Inn / Peter Flynn
Book Reviews
272 Book review: Hermann Zapf and the World He Designed: A Biography by Jerry Kelly / Barbara Beeton
274 Book review: Carol Twombly: Her brief but brilliant career in type design by Nancy Stock-Allen / Karl -

Linux Kernal II 9.1 Architecture

Linux Kernal II 9.1 Architecture: The Linux kernel is a Unix-like operating system kernel used by a variety of operating systems based on it, which are usually in the form of Linux distributions. The Linux kernel is a prominent example of free and open source software.

Programming language: The Linux kernel is written in the version of the C programming language supported by GCC (which has introduced a number of extensions and changes to standard C), together with a number of short sections of code written in the assembly language (in GCC's "AT&T-style" syntax) of the target architecture. Because of the extensions to C it supports, GCC was for a long time the only compiler capable of correctly building the Linux kernel.

Compiler compatibility: GCC is the default compiler for the Linux kernel source. In 2004, Intel claimed to have modified the kernel so that its C compiler was also capable of compiling it. There was another such reported success in 2009 with a modified 2.6.22 version of the kernel. Since 2010, effort has been underway to build the Linux kernel with Clang, an alternative compiler for the C language; as of 12 April 2014, the official kernel could almost be compiled by Clang. The project dedicated to this effort is named LLVMLinux after the LLVM compiler infrastructure upon which Clang is built. LLVMLinux does not aim to fork either the Linux kernel or LLVM; it is therefore a meta-project composed of patches that are eventually submitted to the upstream projects. -

Filesystems HOWTO

Filesystems HOWTO
Martin Hinner <[email protected]>, http://martin.hinner.info

Table of Contents
1. Introduction
2. Volumes
3. DOS FAT 12/16/32, VFAT
4. High Performance FileSystem (HPFS)
5. New Technology FileSystem (NTFS)
6. Extended filesystems (Ext, Ext2, Ext3)
7. Macintosh Hierarchical Filesystem - HFS
8. ISO 9660 - CD-ROM filesystem
9. Other filesystems -

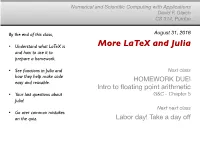

More Latex and Julia and How to Use It to Prepare a Homework

Numerical and Scientific Computing with Applications. David F. Gleich, CS 314, Purdue. August 31, 2016

More LaTeX and Julia

By the end of this class
• Understand what LaTeX is and how to use it to prepare a homework.
• See functions in Julia and how they help make code easy and reusable.
• Your last questions about Julia!
• Go over common mistakes on the quiz.

Next class
• HOMEWORK DUE!
• Intro to floating point arithmetic
• G&C - Chapter 5

Next next class
• Labor day! Take a day off

Logistics
1. Final exam: We will have the final EARLY (December 2) based on the results of the poll which overwhelmingly picked this option.

Course Survey
Matlab only 33; Numpy/Scipy only 5; Both 16; Latex 9; Taylor series 23
Topics: Monte Carlo, ODEs, Matrices
Stuff: Julia & Mandelbrot sets!

Quiz Results … at end of class ...

LaTeX
• A document typesetting system designed for beautiful mathematical documents.
• TeX was designed by Donald Knuth
• LaTeX was designed by Leslie Lamport
• Both won Turing awards - Nobel prize of CS
• You need to "compile" your documents. pdflatex myfile.tex # produces myfile.pdf

Recommended packages + editors
Windows • MiKTeX and TeXstudio
Mac • MacTeX and TeXstudio or Texmaker
Linux • TeX Live (or apt-get / yum package) + Kile or TeXstudio
Online • Overleaf • Juliabox Notebooks

Making a simple document
\documentclass{article}
\usepackage[margin=1in]{geometry}
\title{My document}
\author{David and Collaborators}
\begin{document}
\maketitle
\section{Problem 1}
\section*{Solution}
\section{Problem 1}
\section*{Solution}
\end{document}
demo

Editing a homework: demo

Back to Julia! -

Julia: A Fresh Approach to Numerical Computing

SIAM REVIEW, Vol. 59, No. 1, pp. 65–98. © 2017 Society for Industrial and Applied Mathematics

Julia: A Fresh Approach to Numerical Computing∗
Jeff Bezanson†, Alan Edelman‡, Stefan Karpinski§, Viral B. Shah†

Abstract. Bridging cultures that have often been distant, Julia combines expertise from the diverse fields of computer science and computational science to create a new approach to numerical computing. Julia is designed to be easy and fast and questions notions generally held to be "laws of nature" by practitioners of numerical computing:
1. High-level dynamic programs have to be slow.
2. One must prototype in one language and then rewrite in another language for speed or deployment.
3. There are parts of a system appropriate for the programmer, and other parts that are best left untouched as they have been built by the experts.
We introduce the Julia programming language and its design, a dance between specialization and abstraction. Specialization allows for custom treatment. Multiple dispatch, a technique from computer science, picks the right algorithm for the right circumstance. Abstraction, which is what good computation is really about, recognizes what remains the same after differences are stripped away. Abstractions in mathematics are captured as code through another technique from computer science, generic programming. Julia shows that one can achieve machine performance without sacrificing human convenience.

Key words. Julia, numerical, scientific computing, parallel
AMS subject classifications. 68N15, 65Y05, 97P40
DOI. 10.1137/141000671

Contents: 1 Scientific Computing Languages: The Julia Innovation; 1.1 Julia Architecture and Language Design Philosophy

∗Received by the editors December 18, 2014; accepted for publication (in revised form) December 16, 2015; published electronically February 7, 2017. -
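
The abstract above describes multiple dispatch as the technique that picks the right algorithm for the right circumstance. As a rough illustration only, the following Python sketch selects an implementation from the runtime types of all arguments. Python has no built-in multiple dispatch, so the `dispatch` decorator, the `_registry` table and the `combine` function are hypothetical names invented for this example. The lookup here matches exact argument types only, which is much simpler than Julia's subtype-aware method selection.

```python
from typing import Any, Callable, Dict, Tuple

# Hypothetical registry: (function name, argument types) -> implementation.
_registry: Dict[Tuple[str, Tuple[type, ...]], Callable[..., Any]] = {}


def dispatch(*types: type):
    """Register an implementation for one combination of argument types."""

    def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
        name = func.__name__
        _registry[(name, types)] = func

        def caller(*args: Any) -> Any:
            # Choose the implementation from the types of *all* arguments,
            # not just the first one (exact type match, an assumption of
            # this sketch).
            key = (name, tuple(type(a) for a in args))
            if key not in _registry:
                raise TypeError(f"no method {name} for argument types {key[1]}")
            return _registry[key](*args)

        return caller

    return decorator


@dispatch(int, int)
def combine(a, b):
    # Two integers: add them.
    return a + b


@dispatch(str, int)
def combine(a, b):
    # A string and a count: repeat the string.
    return a * b


@dispatch(int, str)
def combine(a, b):
    # Same operation with the argument order swapped.
    return b * a


print(combine(2, 3))      # 5
print(combine("ab", 2))   # abab
print(combine(2, "ab"))   # abab
```

Calling `combine` with a type combination that was never registered, such as `combine(1.0, 2)`, raises `TypeError` in this sketch.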

Introduction to Latex & Overleaf

Introduction to LaTeX & Overleaf. Ricky Patterson, UVa Library, 27 Sep 2018 (View source: https://www.overleaf.com/read/vshrryxvbhjw)

Outline
Introduction
Features
A Basic LaTeX Document: Example Document, Preamble, \documentclass, Packages, Body, Typing Text, Caveats, Formatting
Some LaTeX Examples: Tables and Figures, Mathematics
Installing and Using LaTeX
References

Introduction
• LaTeX is a document preparation system for high quality typesetting
• Not a Word Processor - Allows authors to focus on content rather than appearance.
• Frequently used in the preparation of scientific or technical documents, but can be used to create letters, dissertations, music scores, calendars, presentations, etc., etc., etc.
However...
• Steep learning curve
• Not WYSIWYG
• Can't easily convert to and from Word, etc. -

Revolution R Enterprise™ 7.1 Getting Started Guide

Revolution R Enterprise™ 7.1 Getting Started Guide

The correct bibliographic citation for this manual is as follows: Revolution Analytics, Inc. 2014. Revolution R Enterprise 7.1 Getting Started Guide. Revolution Analytics, Inc., Mountain View, CA.

Revolution R Enterprise 7.1 Getting Started Guide. Copyright © 2014 Revolution Analytics, Inc. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior written permission of Revolution Analytics.

U.S. Government Restricted Rights Notice: Use, duplication, or disclosure of this software and related documentation by the Government is subject to restrictions as set forth in subdivision (c) (1) (ii) of The Rights in Technical Data and Computer Software clause at 52.227-7013.

Revolution R, Revolution R Enterprise, RPE, RevoScaleR, RevoDeployR, RevoTreeView, and Revolution Analytics are trademarks of Revolution Analytics. Other product names mentioned herein are used for identification purposes only and may be trademarks of their respective owners.

Revolution Analytics. 2570 W. El Camino Real, Suite 222, Mountain View, CA 94040 USA. Revised on March 3, 2014.

We want our documentation to be useful, and we want it to address your needs. If you have comments on this or any Revolution document, send e-mail to [email protected]. We'd love to hear from you.

Contents: Chapter 1. What Is Revolution R Enterprise?