What Is Algorithmic Composition? Let Us Begin at the Beginning

Recommended publications

Computer-Assisted Composition a Short Historical Review

MUMT 303 New Media Production II, Charalampos Saitis, Winter 2010. Computer-Assisted Composition: A short historical review. Computer-assisted composition is considered among the major musical developments that characterized the twentieth century. The quest for ‘new music’ started with Erik Satie and the early electronic instruments (Telharmonium, Theremin), explored the use of electricity, moved into magnetic tape recording (Stockhausen, Varèse, Cage), and soon arrived at the computer era. Computers, science, and technology promised new perspectives into sound, music, and composition. In this context computer-assisted composition soon became a creative challenge, if not a necessity. After all, composers were the first artists to make substantive use of computers. The first traces of computer-assisted composition are found at Bell Labs in the U.S.A. in the late 1950s. It was Max Mathews, an engineer there, who saw the possibilities of computer music while experimenting with the digital transmission of telephone calls. In 1957, the first ever computer programme to create sounds was built. It was named Music I. This first attempt had many limitations: for example, it was monophonic and had no attack or decay. Max Mathews went on improving the programme, introducing a series of programmes named Music II, Music III, and so on up to Music V. The idea of unit generators that could be put together to form bigger blocks was introduced in Music III. Meanwhile, Lejaren Hiller was creating the first ever computer-composed musical work: the Illiac Suite for String Quartet. This also marked a first attempt at algorithmic composition. A binary code was processed on the ILLIAC computer at the University of Illinois, producing the very first computer algorithmic composition.

Products of Interest

Universal Audio Apollo Audio Interface

The Apollo, from Universal Audio, is a high-resolution 18 × 24 digital audio interface designed to deliver the sound of analog recordings (see Figure 1). The interface is available with two or four processors, which allow the audio to be recorded through UAD-2 powered plug-ins with less than 2 msec latency. The user can also mix and master using these processors, without drawing from the host computer processor. The microphone […]

[…] from the company’s 2192 interface is used here and can be viewed at almost any angle. The Apollo interface supports Core Audio and ASIO drivers, and is compatible with all well-known DAWs on Macintosh and Windows operating systems. A Console application and Console Recall plug-in allow the user to control and recall the settings for the interface and plug-in for individual sessions. The Apollo has a 19-in., 1U rack space chassis. The Apollo DUO Core model is listed for US$ 1,999 and the QUAD […]

The front panel of the interface features two combination balanced XLR/jack inputs; two 1/4-in. Hi-Z, instrument/line, balanced inputs; and a stereo headphone output. A further four channels of analog inputs and six outputs, all on balanced TRS jack ports, are located on the rear panel. The microphone pre-amplifiers are taken from the UFX interface and offer 64 dB gain and overload protection. The convertors have a low latency design, with 14- and 7-sample latency reported for the A-D and D-A convertors respectively.

Digital Performer Plug-Ins Guide

Digital Performer® 10 Plug-in Guide. 1280 Massachusetts Avenue, Cambridge, MA 02138. Business voice: (617) 576-2760. Business fax: (617) 576-3609. Technical support: (617) 576-3066. Tech support web: www.motu.com/support. Web site: www.motu.com

ABOUT THE MARK OF THE UNICORN LICENSE AGREEMENT AND LIMITED WARRANTY ON SOFTWARE

TO PERSONS WHO PURCHASE OR USE THIS PRODUCT: carefully read all the terms and conditions of the “click-wrap” license agreement presented to you when you install the software. Using the software or this documentation indicates your acceptance of the terms and conditions of that license agreement.

Mark of the Unicorn, Inc. (“MOTU”) owns both this program and its documentation. Both the program and the documentation are protected under applicable copyright, trademark, and trade-secret laws. Your right to use the program and the documentation are limited to the terms and conditions described in the license agreement.

[…] receipt. If failure of the disk has resulted from accident, abuse or misapplication of the product, then MOTU shall have no responsibility to replace the disk(s) under this Limited Warranty.

THIS LIMITED WARRANTY AND RIGHT OF REPLACEMENT IS IN LIEU OF, AND YOU HEREBY WAIVE, ANY AND ALL OTHER WARRANTIES, BOTH EXPRESS AND IMPLIED, INCLUDING BUT NOT LIMITED TO WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. THE LIABILITY OF MOTU PURSUANT TO THIS LIMITED WARRANTY SHALL BE LIMITED TO THE REPLACEMENT OF THE DEFECTIVE DISK(S), AND IN NO EVENT SHALL MOTU OR ITS SUPPLIERS, LICENSORS, OR AFFILIATES BE LIABLE FOR INCIDENTAL OR CONSEQUENTIAL DAMAGES, INCLUDING BUT NOT LIMITED TO […]

ChucK: A Strongly Timed Computer Music Language

Ge Wang,∗ Perry R. Cook,† and Spencer Salazar∗. ChucK: A Strongly Timed Computer Music Language. ∗Center for Computer Research in Music and Acoustics (CCRMA), Stanford University, 660 Lomita Drive, Stanford, California 94306, USA {ge, spencer}@ccrma.stanford.edu †Department of Computer Science, Princeton University, 35 Olden Street, Princeton, New Jersey 08540, USA [email protected]

Abstract: ChucK is a programming language designed for computer music. It aims to be expressive and straightforward to read and write with respect to time and concurrency, and to provide a platform for precise audio synthesis and analysis and for rapid experimentation in computer music. In particular, ChucK defines the notion of a strongly timed audio programming language, comprising a versatile time-based programming model that allows programmers to flexibly and precisely control the flow of time in code and use the keyword now as a time-aware control construct, and gives programmers the ability to use the timing mechanism to realize sample-accurate concurrent programming. Several case studies are presented that illustrate the workings, properties, and personality of the language. We also discuss applications of ChucK in laptop orchestras, computer music pedagogy, and mobile music instruments. Properties and affordances of the language and its future directions are outlined.

What Is ChucK? ChucK (Wang 2008) is a computer music programming language. First released in 2003, it is designed to support a wide array of real-time and interactive tasks such as sound synthesis, physical modeling, gesture mapping, algorithmic composition, sonification, audio analysis, and live performance. […] form the notion of a strongly timed computer music programming language.

Two Observations about Audio Programming. Time is intimately connected with sound and is […]

Chunking: a New Approach to Algorithmic Composition of Rhythm and Metre for Csound

Chunking: A new Approach to Algorithmic Composition of Rhythm and Metre for Csound. Georg Boenn, University of Lethbridge, Faculty of Fine Arts, Music Department [email protected]

Abstract. A new concept for generating non-isochronous musical metres is introduced, which produces complete rhythmic sequences on the basis of integer partitions and combinatorics. It was realized as a command-line tool called chunking, written in C++ and published under the GPL licence. Chunking produces scores for Csound and standard notation output using Lilypond. A new shorthand notation for rhythm is presented as intermediate data that can be sent to different backends. The algorithm uses a musical hierarchy of sentences, phrases, patterns and rhythmic chunks. The design of the algorithms was influenced by recent studies in music phenomenology, and makes references to psychology and cognition as well.

Keywords: Rhythm, NI-Metre, Musical Sentence, Algorithmic Composition, Symmetry, Csound Score Generators.

1 Introduction. There are a large number of powerful tools that enable algorithmic composition with Csound: CsoundAC [8], blue [11], or Common Music [9], for example. For a good overview of the subject, the reader is also referred to the book by Nierhaus [7]. This paper focuses on a specific algorithm written in C++ to produce musical sentences and to generate input files for Csound and Lilypond. Non-isochronous metres (NI metres) are metres that have different beat lengths, which alternate in very specific patterns. They have been analyzed by London [5], who defines them with a series of well-formedness rules. With the software presented in this paper (from now on called chunking) it is possible to generate NI metres.
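The link between integer partitions and NI metres mentioned in the excerpt above can be illustrated with a minimal Python sketch. It is not the chunking tool's algorithm, which the excerpt does not describe in detail. The function names `ni_metres` and `is_non_isochronous` are hypothetical, and restricting beats to lengths of 2 and 3 pulses is an assumption made here for illustration only.

```python
from typing import List, Sequence


def ni_metres(pulses: int, beat_lengths: Sequence[int] = (2, 3)) -> List[List[int]]:
    """Return every ordered sequence of beat lengths that sums to `pulses`.

    These are ordered integer partitions (compositions) of `pulses`
    whose parts are drawn from `beat_lengths` (assumed to be 2 and 3 here).
    """
    if pulses == 0:
        return [[]]  # exactly one way to fill an empty bar
    results: List[List[int]] = []
    for beat in beat_lengths:
        if beat <= pulses:
            for rest in ni_metres(pulses - beat, beat_lengths):
                results.append([beat] + rest)
    return results


def is_non_isochronous(metre: Sequence[int]) -> bool:
    """A metre is non-isochronous when it mixes different beat lengths."""
    return len(set(metre)) > 1


# List the non-isochronous groupings of a seven-pulse bar.
for metre in ni_metres(7):
    if is_non_isochronous(metre):
        print("+".join(str(beat) for beat in metre))
```

For a seven-pulse bar this lists the familiar groupings 2+2+3, 2+3+2 and 3+2+2. A fuller treatment would also apply well-formedness rules of the kind the excerpt attributes to London, which this sketch does not attempt.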

Contributors to This Issue

Contributors to this Issue

Stuart I. Feldman received an A.B. from Princeton in Astrophysical Sciences in 1968 and a Ph.D. from MIT in Applied Mathematics in 1973. He was a member of technical staff from 1973-1983 in the Computing Science Research Center at Bell Laboratories. He has been at Bellcore in Morristown, New Jersey since 1984; he is now division manager of Computer Systems Research. He is Vice Chair of ACM SIGPLAN and a member of the Technical Policy Board of the Numerical Algorithms Group. Feldman is best known for having written several important UNIX utilities, including the MAKE program for maintaining computer programs and the first portable Fortran 77 compiler (F77). His main technical interests are programming languages and compilers, software configuration management, software development environments, and program debugging. He has worked in many computing areas, including algebraic manipulation (the portable Altran system), operating systems (the venerable Multics system), and silicon compilation.

W. Morven Gentleman is a Principal Research Officer in the Computing Technology Section of the National Research Council of Canada, the main research laboratory of the Canadian government. He has a B.Sc. (Hon. Mathematical Physics) from McGill University (1963) and a Ph.D. (Mathematics) from Princeton University (1966). His experience includes 15 years in the Computer Science Department at the University of Waterloo, five years at Bell Laboratories, and time at the National Physical Laboratories in England. His interests include software engineering, embedded systems, computer architecture, numerical analysis, and symbolic algebraic computation. He has had a long term involvement with program portability, going back to the Altran symbolic algebra system, the Bell Laboratories Library One, and earlier.

Multi–Channel, 24Bit/192Khz Audio Interface for the Macintosh User's

Multi–Channel, 24bit/192kHz Audio Interface for the Macintosh. User’s Guide v1.1 – October 2006

Table of Contents

Owners Record
Introduction
Getting Started Quickly
  1. Installing software
  2. Hardware connections
  3. OS X configuration
  4. iTunes playback
  5. DAW configuration
  6. Recording
General Operation
  Making Settings with Software Control Panels
  Making Settings with Ensemble’s Front Panel Encoder Knobs
  Setting Sample Rate

Pro Audio for Print Layout 1 9/14/11 12:04 AM Page 356

PRO AUDIO: Large Diaphragm Microphones (www.BandH.com)

C414 XLS: Accurate, beautifully detailed pickup of any acoustic instrument. Nine pickup patterns. Controls can be disabled for trouble-free use in live-sound applications and permanent installations. Three switchable different bass cut filters and three pre-attenuation levels. Peak Hold LED displays even shortest overload peaks. Dynamic range of 152 dB. Includes case, pop filter, windscreen, and shockmount. #AKC414XLS: 949.99

C214: Cost-effective alternative to the dual-diaphragm C414, delivers the pristine sound reproduction of the classic condenser mic, in a single-pattern cardioid design. Features low-cut filter switch, 20dB pad switch and dynamic range of 152 dB. Includes case, pop filter, windscreen, and shockmount. #AKC214: 399.00. #AKC214MP (Matched Stereo Pair): 899.00

C414 XLII: Unrivaled up-front sound is well-known for classic music recording or drum ambience miking. Nine pickup patterns enable the perfect setting for every application. Three switchable bass cut filters and three pre-attenuation levels. All controls can be easily disabled. Dynamic range of 152 dB. Includes case, pop filter, windscreen, and shockmount. #AKC414XLII: 999.00. #AKC414XLIIST (Matched Stereo Pair): 2099.00

Perception Series: True condenser mics, they deliver clear sound with accurate sonic detail. Switchable 20dB and switchable bass cut filter.

C2000B: High quality recording mic with elegantly styled die-cast metal housing and silver-gray finish, the C2000B has an almost ruler-flat response that […]

AT2020: Effectively isolates source signals while providing a fast transient response and high 144dB SPL […]

Kitcore Guide Version

AU, RTAS, and VST plug-in, Version 2.0 for Windows XP and Vista and Mac OS X. Submersible Music, 505 Fifth Avenue South, Suite 900, 505 Union Station, Seattle, WA 98104. www.submersiblemusic.com

Copyright © 2008 Submersible Music Inc. All rights reserved. This guide may not be reproduced or transmitted in whole or in part in any form or by any means without the prior written consent of Submersible Music Inc.

KitCore™, DrumCore®, and Gabrielizer™ are trademarks or registered trademarks of Submersible Music Inc. All other trademarks found herein are the property of their respective owners. Pentium is a registered trademark of Intel Corporation. AMD and Athlon are trademarks of Advanced Micro Devices, Inc. Windows and DirectSound are registered trademarks of Microsoft Corporation in the United States and other countries. Mac, Power Mac, PowerBook, MacBook, and the Mac and Audio Units logos are trademarks of Apple Computer, Inc., registered in the U.S. and other countries. ACID, ACID Music Studio, and ACID Pro are trademarks or registered trademarks of Madison Media Software, Inc., a subsidiary of Sony Corporation of America or its affiliates in the United States and other countries. Digital Performer is a registered trademark of Mark of the Unicorn, Inc. Samplitude is a registered trademark of Magix AG. Sonar is a registered trademark of Twelve Tone Systems, Inc. ASIO is a trademark of Steinberg Soft- und Hardware GmbH. ReWire™ and REX™ by Propellerhead, © Propellerhead Software AB. All trademarks contained herein are the property of their respective owners.

All features and specifications of this guide or the DrumCore product are subject to change without notice.

Users Manual

Users Manual. DRAFT COPY © 2007

Contents

1 Introduction
  1.1 What is Schism Tracker
  1.2 What is Impulse Tracker
  1.3 About Schism Tracker
  1.4 Where can I get Schism Tracker
  1.5 Compiling Schism Tracker
  1.6 Running Schism Tracker
2 Using Schism Tracker
  2.1 Basic user interface
  2.2 Playing songs
  2.3 Pattern editor - F2
  2.4 Order List, Channel settings - F11
  2.5 Samples - F3
  2.6 Instruments - F4
  2.7 Song Settings - F12
  2.8 Info Page - F5
  2.9 MIDI Configuration - ⇑ Shift + F1
  2.10 Song Message - ⇑ Shift + F9
  2.11 Load Module - F9
  2.12 Save Module - F10
  2.13 Player Settings - ⇑ Shift + F5
  2.14 Tracker Settings - Ctrl - F1
3 Practical Schism Tracker
  3.1 Finding the perfect loop
  3.2 Modal theory
  3.3 Chord theory
  3.4 Tuning samples
  3.5 Multi-Sample Instruments
  3.6 Easy flanging
  3.7 Reverb-like echoes

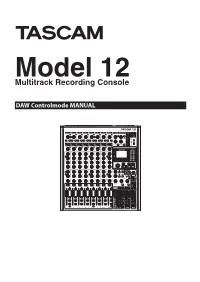

DAW Control Manual

Model 12 Multitrack Recording Console. DAW Control mode MANUAL

Contents

Introduction
  Overview
  Trademarks
Model 12 operations
  Preparing the unit
    Connecting with a Computer
    Starting DAW control mode
    Ending DAW control mode
    MTR/USB SEND POINT screen settings
  Mixer controls that can be used when in DAW control mode
  USB audio input and output when in DAW control mode

Introduction

Overview. The Model 12 has DAW control functions. By setting it to DAW control mode, its controls can be used for basic operation of the DAW application. This includes fader operation, muting, panning, soloing, recording, playing, stopping and other transport functions. Mackie Control and HUI protocol emulation are supported, so Cubase, Digital Performer, Logic, Live, Pro Tools, Cakewalk and other major DAW applications can be controlled.

Trademarks. TASCAM is a registered trademark of TEAC Corporation. […]

A New Music Composition Technique Using Natural Science Data

A NEW MUSIC COMPOSITION TECHNIQUE USING NATURAL SCIENCE DATA. D.M.A. Document. Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Musical Arts in the Graduate School of The Ohio State University. By Joungmin Lee, B.A., M.M. Graduate Program in Music, The Ohio State University, 2019. D.M.A. Document Committee: Dr. Thomas Wells, Advisor; Dr. Jan Radzynski; Dr. Arved Ashby. Copyrighted by Joungmin Lee, 2019.

ABSTRACT. The relationship between music and mathematics has been well documented since the time of ancient Greece, and this relationship is evidenced in the mathematical or quasi-mathematical nature of compositional approaches by composers such as Xenakis, Schoenberg, Charles Dodge, and composers who employ computer-assisted-composition techniques in their work. This study is an attempt to create a composition with data collected over the course of 32 years from melting glaciers in seven areas in Greenland, and at the same time produce a work that is expressive and expands my compositional palette. To begin with, numeric values from the data were rounded to four digits and converted into frequencies in Hz. The other data were rounded to two-digit values that determine note durations. Using these transformations, a prototype composition was developed, with data from each of the seven Greenland-glacier areas used to compose individual instrument parts in a septet. The composition Contrast and Conflict is a pilot study based on 20 data sets. It serves as a practical example of the methods the author used to develop and transform data. One of the author’s significant findings is that data analysis, albeit sometimes painful and time-consuming, reduced his overall composing time.
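The rounding step described in the abstract can be sketched in Python under stated assumptions. The abstract does not say how the digits are scaled, so reading "four digits" as four significant digits taken directly as a frequency in Hz, and "two digits" as two significant digits taken as a duration value, is an assumption made here. The function names are hypothetical, and the input readings are placeholders, not the glacier data used in the study. Only the frequency-to-MIDI formula (A4 = 440 Hz = note 69) is standard.

```python
import math


def significant_digits(value: float, digits: int) -> int:
    """Scale |value| to an integer with `digits` significant digits.

    Assumed interpretation of "rounded to four digits" / "two-digit values".
    Values that round up across a power of ten (e.g. 9999.6) gain a digit.
    """
    if value == 0:
        return 0
    magnitude = math.floor(math.log10(abs(value)))
    return round(abs(value) / 10 ** (magnitude - digits + 1))


def nearest_midi_note(freq_hz: float) -> int:
    """Nearest equal-tempered MIDI note number for a frequency in Hz."""
    return round(69 + 12 * math.log2(freq_hz / 440.0))


# Placeholder readings (NOT real measurements): (pitch source, duration source).
readings = [(1234.567, 87.3), (0.0456789, 0.412)]

for pitch_source, duration_source in readings:
    freq = significant_digits(pitch_source, 4)         # read as Hz (assumption)
    duration = significant_digits(duration_source, 2)  # unit left to the composer
    print(freq, "Hz", "MIDI", nearest_midi_note(freq), "duration value", duration)
```

The MIDI conversion is included only to show one way such frequencies could be brought toward notated pitch. The abstract does not state whether the author quantized frequencies in this way.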