The Coordicide

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

IOTA: a Cryptographic Perspective

IOTA: A Cryptographic Perspective. Bryan Baek, Harvard University; Jason Lin, Georgia Institute of Technology.

1 Introduction. IOTA is an open-source distributed ledger protocol that presents a unique juxtaposition to the blockchain-based Bitcoin. Instead of using the traditional blockchain approach, IOTA stores individual transactions in a directed acyclic graph (DAG) called the Tangle. IOTA claims that this offers several benefits over its blockchain-based predecessors, such as quantum-proof properties, no transaction fees, fast transactions, secure data transfer, and infinite scalability. Currently ranked 16th on CoinMarketCap, it peaked at 7th during the height of the bullish period of late 2017. Given these claims and attractions, this paper seeks to analyze the benefits and how they create vulnerabilities and/or disadvantages compared to the standard blockchain approach. Based upon our findings, we will discuss the future of IOTA and its potential to be a viable competitor to blockchain technology for transactions.

2 Background. IOTA, which stands for Internet of Things Application [1], is a crypto technology in the form of a distributed ledger that facilitates transactions between Internet of Things (IoT) devices. IoT encompasses smart home devices, cameras, sensors, and other devices that monitor conditions in commercial, industrial, and domestic settings [2]. As IoT devices become integrated with different facets of our lives, their computational power and storage often go to waste when idle. David Sonstebo, Sergey Ivancheglo, Dominik Schiener, and Serguei Popov founded the IOTA Foundation in June 2015 after working in the IoT industry because they saw the need to create an ecosystem where IoT devices share and allocate resources efficiently [3].

Wallance, an Alternative to Blockchain for IoT. Loïc Dalmasso, Florent Bruguier, Achraf Lamlih, Pascal Benoit

Wallance, an Alternative to Blockchain for IoT. Loïc Dalmasso, Florent Bruguier, Achraf Lamlih, Pascal Benoit. LIRMM, University of Montpellier, CNRS, Montpellier, France.

To cite this version: Loïc Dalmasso, Florent Bruguier, Achraf Lamlih, Pascal Benoit. Wallance, an Alternative to Blockchain for IoT. IEEE 6th World Forum on Internet of Things (WF-IoT 2020), Jun 2020, New Orleans, LA, United States. 10.1109/WF-IoT48130.2020.9221474. hal-02893953v2. HAL Id: hal-02893953, https://hal.archives-ouvertes.fr/hal-02893953v2. Submitted on 1 Oct 2020.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Abstract: Since the expansion of the Internet of Things (IoT), connected devices became smart and autonomous. Their exponentially increasing number and their use in many application domains result in a huge potential of cybersecurity threats. Taking into account the evolution of the IoT, security [...]

[...] a decentralized Machine-to-Machine (M2M) communication protocol. A security mechanism has to ensure the trust between devices, securing all interactions without a central authority like Cloud. In this context, the blockchain system brings many benefits in terms of reliability and security.

Cryptocurrency Speculation and Blockchain Stocks (December 16, 2017 by Dafang Wu; PDF Version)

Cryptocurrency Speculation and Blockchain Stocks (December 16, 2017, by Dafang Wu; PDF version)

After the Chicago Board Options Exchange began trading Bitcoin futures contracts last week, cryptocurrency ("coin") is becoming mainstream. We are quite late to this game, as many coins have appreciated more than 100 times so far this year, but it is never too late. This situation is similar to the early era of internet development, so we will need to understand the situation and cautiously catch up. Similar to many articles I wrote on airport finance, this article provides information rather than recommendations. Many coins are almost scams; many publicly traded companies claiming to be in blockchain technology, especially those traded over-the-counter (OTC), are also scams. Therefore, further research is required to make investment decisions, if we call coin speculation an "investment."

Background

Instead of discussing the history of coins or technology, here are the basics that we need to know:

- Coins are digital currencies issued by organizations or teams; ownership and transactions are recorded in a public ledger
- There are more than 1,000 coins, with Bitcoin having the highest market capitalization of nearly $300 billion
- Each coin has a name, such as Bitcoin, and a trading ticker like a stock, such as BTC
- There are more than 7,000 coin exchanges
- Some exchanges allow us to purchase coins using real-life money, which they refer to as fiat money
- Some exchanges are not regulated, and only allow us to trade among coins. For this type of exchange, Bitcoin is typically the currency. To switch between two other coins, we will need to sell the first one and receive Bitcoin, and use the Bitcoin to purchase the second one
- There is no customer service for any coin: if we accidentally lose it or send it to a wrong party, there is no way to get it back
- Security threats and fraud always exist for coin trading: search Mt.

Analyzing the DarkNetMarkets Subreddit for Evolutions of Tools and Trends Using LDA Topic Modeling

DIGITAL FORENSIC RESEARCH CONFERENCE. Analyzing the DarkNetMarkets Subreddit for Evolutions of Tools and Trends Using LDA Topic Modeling. By Kyle Porter. From the proceedings of The Digital Forensic Research Conference, DFRWS 2018 USA, Providence, RI (July 15th - 18th).

DFRWS is dedicated to the sharing of knowledge and ideas about digital forensics research. Ever since it organized the first open workshop devoted to digital forensics in 2001, DFRWS continues to bring academics and practitioners together in an informal environment. As a non-profit, volunteer organization, DFRWS sponsors technical working groups, annual conferences and forensic challenges to help drive the direction of research and development. https://dfrws.org

Digital Investigation 26 (2018) S87-S97. Contents lists available at ScienceDirect. Journal homepage: www.elsevier.com/locate/diin. DFRWS 2018 USA - Proceedings of the Eighteenth Annual DFRWS USA.

Analyzing the DarkNetMarkets subreddit for evolutions of tools and trends using LDA topic modeling. Kyle Porter, Department of Information Security and Communication Technology, NTNU, Gjøvik, Norway.

Keywords: Topic modeling; Latent Dirichlet allocation; Web crawling; Datamining; Semantic analysis; Digital forensics.

Abstract: Darknet markets, which can be considered as online black markets, in general sell illegal items such as drugs, firearms, and malware. In July 2017, significant law enforcement operations compromised or completely took down multiple international darknet markets. To quickly understand how this affected the markets and the choice of tools utilized by users of darknet markets, we use unsupervised topic modeling techniques on the DarkNetMarkets subreddit in order to determine prominent topics and terms, and how they have changed over a year's time.

The Open Legal Challenges of Pursuing AML/CFT Accountability Within Privacy-Enhanced IoM Ecosystems

The open legal challenges of pursuing AML/CFT accountability within privacy-enhanced IoM ecosystems. Nadia Pocher, PhD Candidate, Universitat Autònoma de Barcelona • K.U. Leuven • Università di Bologna. Law, Science and Technology: Rights of Internet of Everything Joint Doctorate, LaST-JD-RIoE MSCA ITN EJD n. 814177.

Abstract: This research paper focuses on the interconnections between traditional and cutting-edge technological features of virtual currencies and the EU legal framework to prevent the misuse of the financial system for money laundering and terrorist financing purposes. It highlights a set of Anti-Money Laundering and Counter-Terrorist Financing (AML/CFT) challenges brought about in the Internet of Money (IoM) landscape by the double-edged nature of Distributed Ledger Technologies (DLTs) as both transparency and privacy oriented. Special attention is paid to inferences from concepts such as pseudonymity and traceability; this contribution explores these notions by relating them to privacy enhancing mechanisms and blockchain intelligence strategies, while heeding both core elements of the present AML/CFT obliged entities' framework and possible new conceptualizations. Finally, it identifies key controversies and open questions as to the actual feasibility of effectively applying the "active cooperation" AML/CFT approach to the crypto ecosystems.

1 The Internet of Money and underlying technologies. The onset and subsequent development of the so-called "crypto economy" significantly and ubiquitously transformed the global financial landscape. The latter has been extensively confronted with non-traditional forms of currencies since the Bitcoin launch in January 2009. Relevant industry-altering effects, both ongoing and prospective, have been outlined more clearly by ideas such as Initial Coin Offerings (ICOs) or the recently unveiled Facebook-led Libra initiative.

arXiv:1808.03380v1 [cs.DC] 10 Aug 2018

A Survey of Data Transfer and Storage Techniques in Prevalent Cryptocurrencies and Suggested Improvements. By Sunny Katkuri. A thesis submitted in partial fulfillment of the requirements for the degree of Master of Science. Major subject: Computer Science. Examining committee: Prof. Brian Levine, Thesis Adviser; Prof. Phillipa Gill, Reader. University of Massachusetts Amherst, May 2018. arXiv:1808.03380v1 [cs.DC] 10 Aug 2018.

Contents

List of Tables
List of Figures
Acknowledgment
Abstract
1. Introduction
1.1 Background
1.1.1 Graphene
1.2 Contributions
1.2.1 Documentation and Improvements
1.2.1.1 Nano
1.2.1.2 Ethereum
1.2.1.3 IOTA
1.2.2 Network Analysis
1.2.3 Transaction ordering in Graphene
1.2.3.1 Evaluation
1.2.4 Evaluating Graphene for unsynchronized mempools
2. Nano
2.1 Node Discovery
2.2 Block Synchronization
2.2.1 Bootstrapping
2.3 Block Propagation
2.3.1 Representative crawling
2.4 Block Storage
2.5 Network Analysis
2.6 Networking Protocol
2.6.1 Messages
2.7 Experiments
2.7.1 Keepalive messages
2.7.2 Frontier requests
3. Ethereum
3.1 Node Discovery
3.2 Block Synchronization
3.3 Block Propagation
3.4 Block Storage
3.5 Network Analysis
3.6 Networking Protocol
3.7 Experiments
3.7.1 Blockchain Analysis
3.7.2 Graphene in Geth

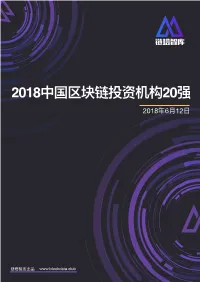

Top 20 Blockchain Investment Institutions in China 2018 (June 12, 2018)

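Top 20 Blockchain Investment Institutions in China 2018. June 12, 2018. Produced by 链塔智库, www.blockdata.club

Foreword

In recent years, the rapid development of China's blockchain industry has increasingly drawn the attention of investment institutions, and annual growth in investment in China's blockchain industry has exceeded 100% for several consecutive years. Investment institutions focused on the blockchain industry are growing rapidly, actively positioning themselves across the sector and building out their own territory, while traditional investment institutions with an open attitude are also rushing to enter.

链塔智库 analyzed 120 investment institutions active in the blockchain field and the projects they invested in, and selected the twenty best-performing institutions for in-depth study based on blockchain industry investment influence, number of investment targets, and total investment amount. The conclusions are as follows:

- By project category, these institutions are generally bullish on blockchain platform companies.
- By geography of investment targets, these institutions invest more in overseas projects.
- Among domestic projects, those in the Beijing area account for the highest share.
- By investment round, most investments occurred at the angel and Series A rounds, reflecting that the industry is still at an early stage.

Contents

PART 1: Top 20 Blockchain Investment Institutions in China 2018, the list
PART 2: Top 20 Blockchain Investment Institutions in China 2018, analysis of the list
PART 3: Sub-rankings by influence, number of investments, and investment amount
PART 4: Map of blockchain investment institutions in China 2018

PART 1: Top 20 Blockchain Investment Institutions in China 2018, the list

Based on data from the 链塔 database, supplemented by compiled information on more than 120 investment institutions active in the blockchain field and the projects they invested in, 链塔智库 scored each institution along dimensions including investment influence, number of projects invested in, total investment amount, returns, and exits (maximum of 10 points per dimension), and selected the Top 20 Blockchain Investment Institutions in China 2018.

[Figure: scoring dimension model for blockchain investment institutions. Labels: investment amount; investment influence (influence of key projects, endorsement capability, media influence); number of investments (total number of investments, number of invested projects that have issued tokens); returns; exits. Prepared by 链塔智库.]

Top 20 Blockchain Investment Institutions in China 2018

| Rank | Institution | Founder | Overall score | Influence score | Quantity score | Amount score |
|---|---|---|---|---|---|---|
| 1 | 分布式资本 | 沈波 | 9.1 | 9 | 10 | 9 |
| 2 | 硬币资本 | 李笑来 | 9.0 | 10 | 9 | 8 |
| 3 | 连接资本 | 林嘉鹏 | 8.7 | 7 | 10 | 7 |
| 4 | Dfund | 赵东 | 8.1 | 7 | 6 | 9 |
| 5 | IDG资本 | 熊晓鸽 | 7.7 | 9 | 4 | 9 |
| 6 | 泛城资本 | 陈伟星 | 7.5 | 9 | 5 | 6 |
| 7 | 真格基金 | 徐小平 | 7.4 | 7 | 5 | 7 |
| 8 | 节点资本 | 杜均 | 7.1 | 6 | 10 | 7 |
| 9 | 丹华资本 | 张首晟 | 6.8 | 8 | 6 | 5 |
| 10 | 维京资本 | 张宇文 | 6.5 | 8 | 5 | 5 |
| 11 | 了得资本 | 易理华 | 6.4 | 8 | 6 | 4 |
| 12 | FBG Capital | 周硕基 | 6.3 | 6 | 6 | 8 |
| 13 | 千方基金 | 张银海 | 6.2 | 8 | 6 | 5 |
| 14 | 红杉资本中国 | 沈南鹏 | 6.1 | 10 | 4 | 5 |
| 15 | 创世资本 | 朱怀阳、孙泽宇 | 6.0 | 6 | 6 | 6 |
| 16 | Pre Angel | 王利杰 | 5.9 | 6 | 8 | 5 |
| 17 | 蛮子基金 | 薛蛮子 | 5.8 | 8 | 5 | 3 |
| 18 | 隆领资本 | 蔡文胜 | 5.5 | 7 | 5 | 3 |
| 19 | 八维资本 | 傅哲飞、阮宇博 | 5.2 | 6 | 5 | 5 |
| 20 | BlockVC | 徐英凯 | 5.1 | 6 | 5 | 4 |

Data source: 链塔 database and compiled public information.

PART 2: Top 20 Blockchain Investment Institutions in China 2018, analysis of the list

2.1 Blockchain platform projects are the most popular, accounting for 44%

Based on the projects invested in by the top 20 institutions in its records, together with public information, 链塔智库 classified the invested projects by industry.

[Figure: industry distribution of projects invested in by the top 20 institutions. Blockchain platform 44%; finance 29%; culture and entertainment 11%; lifestyle 8%; social 6%; other 2%.]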

Parasite Chain Detection in the IOTA Protocol

Parasite Chain Detection in the IOTA Protocol. Andreas Penzkofer, IOTA Foundation, Berlin, Germany; Bartosz Kusmierz, Department of Theoretical Physics, Wroclaw University of Science and Technology, Poland; Angelo Capossele, IOTA Foundation, Berlin, Germany; William Sanders, IOTA Foundation, Berlin, Germany; Olivia Saa, Department of Applied Mathematics, Institute of Mathematics and Statistics, University of São Paulo, Brazil.

Abstract: In recent years several distributed ledger technologies based on directed acyclic graphs (DAGs) have appeared on the market. Similar to blockchain technologies, DAG-based systems aim to build an immutable ledger and are faced with security concerns regarding the irreversibility of the ledger state. However, due to their more complex nature and recent popularity, the study of adversarial actions has received little attention so far. In this paper we are concerned with a particular type of attack on the IOTA cryptocurrency, more specifically a Parasite Chain attack that attempts to revert the history stored in the DAG structure, also called the Tangle. In order to improve the security of the Tangle, we present a detection mechanism for this type of attack. In this mechanism, we embrace the complexity of the DAG structure by sampling certain aspects of it, more particularly the distribution of the number of approvers. We initially describe models that predict the distribution that should be expected for a Tangle without any malicious actors. We then introduce metrics that compare this reference distribution with the measured distribution. Upon detection, measures can then be taken to render the attack unsuccessful. We show that due to a form of the Parasite Chain that is different from the main Tangle it is possible to detect certain types of malicious chains.
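The idea of comparing a measured approver-count distribution against a reference can be illustrated with a minimal sketch. The excerpt does not say which metrics the paper uses, so total variation distance is an assumption here, and the reference distribution, the sample data, and the threshold are all hypothetical values chosen for illustration.

```python
from collections import Counter


def approver_distribution(approvers_per_tx):
    """Empirical distribution of the number of approvers per sampled transaction."""
    counts = Counter(approvers_per_tx)
    total = len(approvers_per_tx)
    return {k: v / total for k, v in counts.items()}


def total_variation(p, q):
    """Total variation distance between two discrete distributions (assumed metric)."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def is_suspicious(sample, reference, threshold=0.3):
    """Flag a sample whose distribution deviates strongly from the reference.

    The threshold is a hypothetical value, not one taken from the paper.
    """
    return total_variation(approver_distribution(sample), reference) > threshold


# Hypothetical reference distribution for a Tangle without malicious actors.
reference = {0: 0.1, 1: 0.3, 2: 0.4, 3: 0.2}

# Hypothetical samples: one matching the reference, one chain-like
# (almost every transaction has exactly one approver).
honest_sample = [0, 1, 1, 1, 2, 2, 2, 2, 3, 3]
chain_like_sample = [1, 1, 1, 1, 1, 1, 1, 1, 1, 0]
```

With these made-up numbers, `is_suspicious(chain_like_sample, reference)` flags the chain-like sample while the honest sample passes. Any real detector would need the reference models and metrics described in the paper itself.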

Blockchain-Based Sensor Data Validation for Security in the Future Electric Grid

Blockchain-based Sensor Data Validation for Security in the Future Electric Grid. A. G. Colaço∗, K. G. Nagananda∗, R. S. Blum∗, H. F. Korth†. ∗Department of ECE, †Department of CSE, Lehigh University, Bethlehem PA 18015, USA.

Abstract: This paper explores the feasibility of using blockchain technology to validate that measured sensor data approximately follows a known accepted model to enhance sensor data security in electricity grid systems. This provides a more robust information infrastructure that can be secured against not only failures but also malicious attacks. Such robustness is valuable in envisioned electricity grids that are distributed at a global scale including both small and large nodes. While this may be valuable, blockchain's security benefits come at the cost of computation of cryptographic functions and the cost of reaching distributed consensus. We report experimental results showing that, for the proposed application and assumptions, the time for these computations is small enough to not negatively impact the overall system operation.

[...] blocks) linked via cryptographic hash pointers to the previous block. Each block contains a timestamp and transaction data. The central feature of blockchains is their resistance to modification of data (immutability), thereby providing "security by design". Although blockchains are immutable by design, the data they contain are visible to all nodes participating in the system except for data items that are specifically encrypted. Transactions are signed cryptographically using public-key encryption [5] by the submitter, thus making transactions irrefutable. Blocks are added to the chain via distributed consensus over a network of computers (nodes) that verify [...]
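The hash-pointer linkage described in the excerpt can be sketched in a few lines. This is a toy illustration under stated assumptions, not the paper's system: the block layout, the JSON serialization, the use of SHA-256, and the function names are all hypothetical, and signatures and distributed consensus are left out entirely.

```python
import hashlib
import json


def block_hash(block):
    """SHA-256 over a canonical JSON encoding of the block (assumed encoding)."""
    payload = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_block(chain, timestamp, data):
    """Append a block holding a timestamp, data, and a hash pointer to the previous block."""
    prev_hash = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"timestamp": timestamp, "data": data, "prev_hash": prev_hash})


def verify_chain(chain):
    """Check that every block's hash pointer matches the hash of its predecessor."""
    return all(
        chain[i]["prev_hash"] == block_hash(chain[i - 1])
        for i in range(1, len(chain))
    )


# Hypothetical sensor readings recorded as blocks.
chain = []
append_block(chain, 1, {"sensor": "s1", "value": 0.98})
append_block(chain, 2, {"sensor": "s1", "value": 1.01})
append_block(chain, 3, {"sensor": "s1", "value": 1.00})
```

Changing the data in an earlier block changes its hash, so the pointer stored in the next block no longer matches and `verify_chain` returns `False`. In this sketch a change to the final block goes unnoticed until another block is appended, which is one reason real systems combine hash pointers with signatures and consensus.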

Blockchain Development Trends 2021

Blockchain Development Trends 2021

This report analyzes key development trends in core blockchain, DeFi and NFT projects over the course of the past 12 months. The full methodology, data sources and code used for the analysis are open source and available on the Outlier Ventures GitHub repository. The codebase is managed by Mudit Marda, and the report is compiled by him with tremendous support of Aron van Ammers.

The last 12 months: Executive summary

* Ethereum is still the most actively developed blockchain protocol, followed by Cardano and Bitcoin.
* Multi-chain protocols like Polkadot, Cosmos and Avalanche are seeing a consistent rise in core development and developer contribution.
* In the year of its public launch, decentralized file storage project Filecoin jumped straight into the top 5 of most actively developed projects.
* Ethereum killers Tron, EOS, Komodo, and Qtum are seeing a decrease in core development metrics.
* DeFi protocols took the space by storm with Ethereum being the choice of the underlying blockchain and smart contracts platform. They saw an increase in core development and developer contribution activity over the year which was all open sourced. The most active projects are Maker, Gnosis and Synthetix, with Aave and Bancor showing the most growth. SushiSwap and Yearn Finance, both launched in 2020, quickly grew toward and beyond the development activity and size of most other DeFi protocols.
* NFT and Metaverse projects like collectibles, gaming, crypto art and virtual worlds saw a market-wide increase in interest, but mostly follow a closed source development approach. A notable exception is Decentraland, which has development activity on the levels of some major blockchain technologies like Stellar and Algorand, and ahead of some of the most popular DeFi protocols like Uniswap and Compound.

Feasibility of Cryptocurrency on Mobile Devices

University of Amsterdam. Feasibility of Cryptocurrency on Mobile Devices. MSc System and Network Engineering, Research Project 1. Sander Lentink & Anas Younis.

Abstract: This research focuses on the use of cryptocurrencies on mobile devices, which is a subset of possible applications of a distributed ledger. This paper gives an overview of consensus mechanisms available when designing a practical cryptocurrency for mobile devices. The focus is on permissionless designs to reach consensus and keep in sync with the distributed ledger. For this we looked into chain linking, SPV (Simple Payment Verification), SCP (Stellar Consensus Protocol), Skipchains and the tangle. The first three are consensus mechanisms for blockchain ledgers and the last is a consensus mechanism designed with a DAG (Directed Acyclic Graph) ledger. These consensus mechanisms were explored as options since they enable faster transaction times than traditional payment processes, which take around 30 seconds [1]. Besides the transaction time, we look at techniques that allow a device to determine the balance of a wallet. These synchronization techniques are dependent on the consensus mechanisms. We conclude that acceptable transaction times and efficient usage of a mobile device's power resource can be achieved with the researched techniques. Future work notes that this is a non-exhaustive list and mentions other requirements for mobile cryptocurrencies, such as privacy.

Contents

1 Introduction
2 Related work
3 Research question
4 Cryptocurrency attributes
5 Techniques
5.1 Sidechain
5.2 Simple Payment Verification
5.3 Stellar Consensus Protocol

IOTA Use Cases

What is IOTA? A non-profit open-source technology organization and inventor of the Tangle, a distributed ledger and protocol for the Internet of Things.

How is it different from blockchain? IOTA solves Blockchain's problems of scalability, energy requirements, data security, and transaction fees.

The technology advantage: The Tangle is a directed acyclic graph (DAG), meaning no blocks, no miners, and no fees!

MOBILITY

ABI Research predicts 8 million consumer vehicles with self-driving capabilities shipping in 2025.

Video: Earn as you drive with Jaguar Land Rover.

Cars can Communicate: Car data can be securely transmitted via the Tangle to other cars or machines.

Cars can Pay: Autonomously for parking, battery charging, tolls, and other services.

Car Digital Twins: Tracks vehicle usage and ownership, enabling fraud prevention, pay-per-use and usage-based insurances.

Platooning: Allows trucks to drive semi-autonomously, reducing distance between each other, creating improved fuel efficiency and safety. With IOTA, trucks can compensate each other for the differences in fuel savings.

Over-the-Air Update: Cars are safer/more reliable via real-time software updates.

Cars can Earn: Transacting data about potholes, traffic congestion, weather conditions and more.

SUPPLY CHAIN & GLOBAL TRADE

Roughly 10% of supply chain goods are fraudulent. The supply chain lacks traceability, transparency and trust.

Immutable audit trail keeps encrypted hash of logistical updates.

Data integrity: Original documents and events reported to authorized actors provide process transparency and access.

Track & trace: Includes geo-location, transfer of ownership/custody, and real-time data about conditions such as temperature, humidity, etc.