City, University of London Institutional Repository

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Racker News Outlets Spreadsheet.Xlsx

RADIO Station Contact Person Email/Website/Phone Cayuga Radio Group (95.9; 94.1; 95.5; 96.7; 103.7; 99.9; 97.3; 107.7; 96.3; 97.7 FM) Online Form https://cyradiogroup.com/advertise/ WDWN (89.1 FM) Steve Keeler, Telcom Dept. Chairperson (315) 255-1743 x [email protected] WSKG (89.3 FM) Online Form // https://wskg.org/about-us/contact-us/ (607) 729-0100 WXHC (101.5 FM) PSA Email (must be received two weeks in advance) [email protected] WPIE -- ESPN Ithaca https://www.espnithaca.com/advertise-with-us/ (107.1 FM; 1160 AM) Stephen Kimball, Business Development Manager [email protected], (607) 533-0057 WICB (91.7 FM) Molli Michalik, Director of Public Relations [email protected], (607) 274-1040 x extension 7 For Programming questions or comments, you can email WITH (90.1 FM) Audience Services [email protected], (607) 330-4373 WVBR (93.5 FM) Trevor Bacchi, WVBR Sales Manager https://www.wvbr.com/advertise, [email protected] WEOS (89.5 FM) Greg Cotterill, Station Manager (315) 781-3456, [email protected] WRFI (88.1 FM) Online Form // https://www.wrfi.org/contact/ (607) 319-5445 DIGITAL News Site Contact Person Email/Website/Phone CNY Central (WSTM) News Desk [email protected], (315) 477-9446 WSYR Events Calendar [email protected] WICZ (Fox 40) News Desk [email protected], (607) 798-0070 WENY Online Form // https://www.weny.com/events#!/ Advertising: [email protected], (607) 739-3636 WETM James Carl, Digital Media and Operations Manager [email protected], (607) 733-5518 WIVT (Newschannel34) John Scott, Local Sales Manager (607) 771-3434 ex.1704 WBNG Jennifer Volpe, Account Executive [email protected], (607) 584-7215 www.syracuse.com/ Online Form // https://www.syracuse.com/placead/ Submit an event: http://myevent.syracuse.com/web/event.php PRINT Newspaper Contact Person Email/Website/Phone Tompkins Weekly Todd Mallinson, Advertising Director [email protected], (607) 533-0057 Ithaca Times Jim Bilinski, Advertising Director [email protected], (607) 277-7000 ext. -

115 Jackson MS GM: Thomas Dumey GSM: Dennis Logsdon Shirk Inc

PD -FM2: Sam McGuire CE: Max Turner Rep: D &R Rep: Major Market MyStar Communications Corp. WVBR -FM AOR Susquehanna Radio Corp. (grp) acq 1989, $11M Stn 1:93.5mHz 3kw @250' acq. WGRL from Butler U. 12- 17 -93, est. 3135 N. Meridian St.; 46208 GM: Andrew Ettinger GSM: Mike Crandall $7 -7.5M, RBR 5 -17 -93 317 -925 -1079 Fax: 317-921-3676 PD: Kelly Roth CE: John B. Hill Box 502950; 46250 Rep: Katz & Powell Net: NBC, AP 317-842 -9550 Fax: 317-577-3361 WXLW/WHHH Rel- Talk- Sprt/CHR Cornell Radio Guild Stn 1: 950 kHz 5 kw -D, DAD 227 Linden Ave.; 14850 WIBC/WKLR News -Talk/Oldies Stn 2: 96.3 mHz 640 w @ 715' 607 -273 -4000 Fax: 607-273-4069 Stn 1: 1070 kHz 50 kw-D, 10 kw -N, DA2 GM: Bill Shirk (pres) GSM: Mike Davidson Stn 2: 93.1 mHz 12.6 kw @ 1,023' PD: Scott Walker CE: Kim Hurst #115 Jackson MS GM: Thomas Dumey GSM: Dennis Logsdon Shirk Inc. See Market Profile, page 3 -75 PD -AM: Ed Lennon PD -FM: Roy Laurence 6264 La Pas Trail; 46268 Station Follows Station Follows WIIN CE: Norm Beaty 317 -293 -9600 Fax: 317-328-3870 -FM - WLRM WJDS WMGO - Rep: Christal WJDX -FM WSLI WMSI -FM WJDS Sconnix Broadcasting Co. (grp) WXTZ -FM Easy WJMI -FM WOAD WOAD WJNT - WSLI 9292 N. Meridian St.; 46260 Stn 1: 93.9 mHz 2.75 kw @ 492' WKTF -FM WJDS WSTZ -FM WZRX 317 -844-7200 Fax: 317-846-1081 GM: Mary Weiss GSM: John Coleman WKXI - WTYX -FM WKXI PD: Bill Fundsmann CE: Kim Hurst WKXI -FM WKXI WZRX - Duopoly Weiss Broadcasting of Noblesville Inc. -

ORANGE SLICES Greg Robinson Third 6-23 33-43 6-23 Seventh 2-14 Dick Macpherson Chris Gedney ‘93 33-43 12-30 Brian Higgins ‘04 and Former Orange All- Seventh Third

2007 SYRACUSE FOOTBALL S SYRACUSE (1-5 overall, 1-1 BIG EAST) vs. RUTGERS (3-2 overall, 0-1 BIG EAST) • October 13, 2007 (12:00 p.m. • ESPN Regional) • Carrier Dome • Syracuse, N.Y. • ORANGE SLICES ORANGE AND RUTGERS CLASH IN BIG EAST BATTLE ORANGE PRIDE The SU football team will host Rutgers at 12:00 p.m. on Saturday, Oct. 13 at the Carrier Dome. On the Air For the Orange, it's the third straight conference game against a team that entered 2007 ranked in the national polls. SU is 1-1 in the BIG EAST, defeating then-#18/19 Louisville on Television the road, 38-35, on Sept. 22 and losing at home to the #13/12 Mountaineers on Oct. 6. Syracuse’s game versus Rutgers will be televised by ESPN Regional. Dave Sims, John The Orange owns a dominating 28-8-1 lead in the all-time series, including a 14-4-1 record in Congemi and Sarah Kustok will have the call. games played in Syracuse and will look to snap a two-game losing streak to RU. Casey Carter is the producer. Both Syracuse and Rutgers are looking to get back on the winning track after losses in each of the last two weeks. Syracuse is coming off losses at Miami (OH) and at home to #13/12 West Radio Virginia. Rutgers lost a non-league encounter with Maryland two weeks ago and lost at home Syracuse ISP Sports Network to Cincinnati in its BIG EAST opener on Oct. 6. The flagship station for the Syracuse ISP Saturday’s game will be televised live on ESPN Regional. -

Tompkins Weekly

March 7, 2016 Keeping You Connected TOMPKINS WEEKLY Locally Owned & Operated TompkinsWeekly.com Vol. 11, No. 2 Water testing guidance issued By Jay Wrolstad The discovery of drinking water tainted by lead in local schools has grabbed the attention of parents, school officials, public health authorities and even U.S. Senator Charles Schumer. It has also prompted concerns about the water quality in homes among area residents. The good news is that Ithaca is not Flint, Michigan; there is little evidence of lead contamination in local water systems, either public or private. But those with older plumbing in their homes may want to take a closer look at their pipes and fixtures. Theresa Lyczko, director of the Health Promotion Program and Public Information officer at the Tompkins County Health Department, says that the Health Department has recently received inquiries from homeowners regarding the possible presence of lead in their water. In response, the department has updated its website that includes a page listing resources for residents that supplements information about the situation in local schools (http://tompkinscountyny.gov/health/ Photo provided: Water with elevated levels of lead in area homes is most likely caused by pipes and fixtures inside the residence. The water can be tested by local labs. ed blood levels due to drinking water. Lead typically enters drinking water as a result of corrosion, or ty reports. “In our area that is Cornell University, the City of Ithaca and the Bolton Point water plant. ALSO IN THIS ISSUE Survival Guide has cold, hard facts page 2 2 N. Main Street, Cortland, New York | 607-756-2805 78 North Street, Dryden, New York | 607-844-8626 2428 N. Triphammer Rd, Ithaca, New York | 607-319-0094 baileyplace.com -

SPORTS MEDICINE Down...You Won't Recognize the Inside

March 14, 2016 Keeping You Connected TOMPKINS WEEKLY Locally Owned & Operated TompkinsWeekly.com Vol. 11, No. 3 State needs more organ donors By Jay Wrolstad Ali Brown has a message for those considering organ donation: “If you want to give the gift of life, talk to your family about your intentions, be proactive, and make the decision to register as an organ donor.” It’s advice that often goes unheeded in New York State, where individuals register as organ and tissue donors at less than half the rate of people nationwide. Brown, 24, of Trumansburg, received the gift of life in August 2002, when she underwent a liver transplant. She was born with progressive familial intrahepatic cholestasis type II, a genetic disease that progresses to cirrhosis. “I was very sick from day one; it progressed over time up until transplantation,” she says. “My doctors knew early on that something was wrong with my liver but they could not pinpoint it. Although it is a genetic disease, there is no history of it in our family. Both of my parents happened to be carriers.” She was diagnosed at age nine at Cincinnati Children’s Hospital, by the doctor Photo provided: Ali Brown, right, and her mother, Molly deRoos, attended the Donate Life Gala during the 2014 Rose Parade festivities in Pasadena, Calif. Brown received a donated liver in 2002. states in the registration of eligible organ donors at 25 percent of the state population. part to a more highly educated community, good medical care facilities ALSO IN THIS ISSUE Cornell community mourns -

Exhibit 2181

Exhibit 2181 Case 1:18-cv-04420-LLS Document 131 Filed 03/23/20 Page 1 of 4 Electronically Filed Docket: 19-CRB-0005-WR (2021-2025) Filing Date: 08/24/2020 10:54:36 AM EDT NAB Trial Ex. 2181.1 Exhibit 2181 Case 1:18-cv-04420-LLS Document 131 Filed 03/23/20 Page 2 of 4 NAB Trial Ex. 2181.2 Exhibit 2181 Case 1:18-cv-04420-LLS Document 131 Filed 03/23/20 Page 3 of 4 NAB Trial Ex. 2181.3 Exhibit 2181 Case 1:18-cv-04420-LLS Document 131 Filed 03/23/20 Page 4 of 4 NAB Trial Ex. 2181.4 Exhibit 2181 Case 1:18-cv-04420-LLS Document 132 Filed 03/23/20 Page 1 of 1 NAB Trial Ex. 2181.5 Exhibit 2181 Case 1:18-cv-04420-LLS Document 133 Filed 04/15/20 Page 1 of 4 ATARA MILLER Partner 55 Hudson Yards | New York, NY 10001-2163 T: 212.530.5421 [email protected] | milbank.com April 15, 2020 VIA ECF Honorable Louis L. Stanton Daniel Patrick Moynihan United States Courthouse 500 Pearl St. New York, NY 10007-1312 Re: Radio Music License Comm., Inc. v. Broad. Music, Inc., 18 Civ. 4420 (LLS) Dear Judge Stanton: We write on behalf of Respondent Broadcast Music, Inc. (“BMI”) to update the Court on the status of BMI’s efforts to implement its agreement with the Radio Music License Committee, Inc. (“RMLC”) and to request that the Court unseal the Exhibits attached to the Order (see Dkt. -

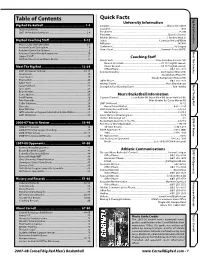

0708 MBKB Guide

Table of Contents Quick Facts Information Big Red University Information Big Red Basketball: 1-4; Location: Ithaca, N.Y. 14853; Table of Contents: 1; Founded: 1865; 2007-08 Media Information: 2-4; Enrollment: 13,700; President: David J. Skorton; Athletic Director: J. Andrew Noel Jr.; Big Red Coaching Staff: 5-12; Colors: Carnelian Red and White; Head Coach Steve Donahue: 6-7; Affiliation: NCAA I; Assistant Coach Zach Spiker -

EEO Public File Report Vizella Media, LCC WPIE (AM), Trumansburg, NY W296CP W296DH Ithaca, NY February 1, 2017 – January 31, 2018

EEO Public File Report Vizella Media, LCC WPIE (AM), Trumansburg, NY W296CP W296DH Ithaca, NY February 1, 2017 – January 31, 2018 Equal Opportunity Employer Information: Vizella Media, Incorporated, the licensee of WPIE(AM) in Trumansburg, NY, is an equal opportunity employer. We are currently looking for organizations that regularly distribute information about employment opportunities to job applicants or have job applicants to refer. If your organization would like to receive notification of job vacancies at our radio station, call Todd Mallinson at (607) 533-0057 or contact by email at [email protected]. At WPIE (AM), we are committed to recruiting and retaining diverse talent by creating an environment that integrates diversity and inclusion in all aspects of our business. Our company is enriched and made more effective by representation of diverse experience, backgrounds, ethnicity, education, sexual orientation and regional and cultural orientation. WPIE(AM) is an Equal Opportunity Employer where women and minorities are encouraged to apply. I. VACANCY LIST See Section II, the “Master Recruitment Source List” (“MRSL”) for recruitment source data Job Title Date of Hire RS Referring Hire No. Of Interviews Marketing Manager 6/1/17 21 6 Account Manager 6/5/17 27 3 Account Manager 8/1/17 21 8 Operations Manager 8/11/17 20 4 II. Master Recruitment Source List (“MRSL”) RS Number RS Information Source No. of Entitled to Interviewees Vacancy Referred by Notification? RS over reporting period 1 Elmira College Careers and Job Placement Services No 0 1 Park Place Elmira, NY 14901 Email: [email protected] Phone: (607) 735-1830 2 Corning Community College Careers and Job Placement No 0 Services 1 Academic Drive Corning, NY 14830-3749 Contact: Loretta Hendrickson Email: [email protected] Phone: (607) 778-5207 3 Broome Community College Careers and Job Placement No 0 Services 901 Upper Front Street Binghamton, NY 13905 Contact: Lawrence T. -

Emergency Restoration Plan

FINGER LAKES COMMUNICATIONS GROUP, INC. EMERGENCY RESTORATION PLAN Emergency Restoration Plan Index Finger Lakes Communications Group, Inc Statement of Purpose, 1; Preliminary Plan Objectives, 2; Emergency Response Levels, 3; Roles and Responsibilities, 4; Special Circuit Trouble/CO Alarm Call Out, 5 (Procedure, instructions, and personnel to notify for special circuit trouble and CO Alarm); Emergency Internet Contact Numbers, 6 (Trouble Repair for Internet Access for Phelps, Clifton Springs, Trumansburg, Ovid, Interlaken); Emergency Phone Numbers to Be Called Before and After Work is Completed, Trumansburg, Ovid, Interlaken, 7; Emergency Phone Numbers to be Called Before and After Work is Completed, Phelps, Clifton Springs, 8; Emergency Service Contacts, Trumansburg, -

BROADCAST MAXIMIZATION COMMITTEE John J. Mullaney

Before the Federal Communications Commission Washington, D.C. 20554 In the Matter of: Promoting Diversification of Ownership in the Broadcasting Services (MB Docket No. 07-294); 2006 Quadrennial Regulatory Review – Review of the Commission’s Broadcast Ownership Rules and Other Rules Adopted Pursuant to Section 202 of the Telecommunications Act of 1996 (MB Docket No. 06-121); 2002 Biennial Regulatory Review – Review of the Commission’s Broadcast Ownership Rules and Other Rules Adopted Pursuant to Section 202 of the Telecommunications Act of 1996 (MB Docket No. 02-277); Cross-Ownership of Broadcast Stations and Newspapers (MM Docket No. 01-235); Rules and Policies Concerning Multiple Ownership of Radio Broadcast Stations in Local Markets (MM Docket No. 01-317); Definition of Radio Markets (MM Docket No. 00-244); Ways to Further Section 257 Mandate and To Build on Earlier Studies (MB Docket No. 04-228). To: Office of the Secretary Attention: The Commission BROADCAST MAXIMIZATION COMMITTEE John J. Mullaney Mark Lipp Paul H. Reynolds Bert Goldman Joseph Davis, P.E. Clarence Beverage Laura Mizrahi Lee Reynolds Alex Welsh SUMMARY The Broadcast Maximization Committee (“BMC”), composed primarily of several consulting engineers and other representatives of the broadcast industry, offers a comprehensive proposal for the use of Channels 5 and 6 in response to the Commission’s solicitation of such plans. BMC proposes to (1) relocate the LPFM service to a portion of this spectrum space; (2) expand the NCE service into the adjacent portion of this band; and (3) provide for the conversion and migration of all AM stations into the remaining portion of the band over an extended period of time and with digital transmissions only. -

Ithaca's Sports Radio

THE PLAYBOOK ITHACA'S SPORTS RADIO | THE PLAYBOOK | ESPNIthaca.com 1 WE'RE ON A MISSION. We get it. Audiences want the best experience across radio, stream and app platforms. And we do just that, because we are Ithaca's Sports Radio. ESPN Ithaca features local SportsCenter updates every day of the week, locally hosted Between The Lines (BTL) from 4-5 p.m., live on-site reporting and play-by-play coverage of more than 75 high school contests annually. That means we are the dominant 24/7 local sports media outlet, because we are right there, as it happens. Heard about our robust lineup of professional and college play-by- play features? These include Yankees baseball, Cornell wrestling, Syracuse basketball and football. We also feature engaging personalities like Mike & Mike, Dan Le Batard, and "BTL" with Tim Donnelly. With such in-demand content, we're constantly evolving our social and digital platforms to provide unique and engaging opportunities. Golic & Wingo 6 A.M.—10 A.M. | THE PLAYBOOK | ESPNIthaca.com 2 NEW YORK STATE WE RULE SPORTS CONTENT. BROADCASTERS X ASSOCIATION 10 AWARD WINNERS BETWEEN THE LINES 2017 Hosted by University of Delaware Football alum Tim Donnelly. A lifelong • OUTSTANDING SPORTSCAST sports fan, Tim “brings it” to Between the Lines everyday, offering unique local sports entertainment weekdays 4-5 p.m.. Whether recounting a last- • OUTSTANDING PLAY-BY-PLAY COVERAGE second play, interviewing an outstanding local athlete or deliberating the • OUTSTANDING ON-AIR PERSONALITIES merits of a draft choice… our listeners get an earful. 2016 HIGH SCHOOL PLAY-BY-PLAY • OUTSTANDING SPORTSCAST We call nearly 100 local high school and college contests annually, led by • OUTSTANDING PLAY-BY-PLAY COVERAGE our broadcast team of Ithaca College alums Nick Karski and Will LeBlond. -

S-VE Gives Back Spencer-Van Etten Central School District 2016-2017

S-VE Gives Back 2016-2017 Calendar Spencer-Van Etten Central School District Photo captions: Kindergarten students create placemats for Meals on Wheels recipients; Middle School students volunteer for Food Bank of the Southern Tier; 8th grade students clean up Nichols Pond; Seniors celebrate graduation with younger students; Empty Bowls Project at the High School raises money for local food cupboard; Elementary students restore willow hut. Stay Informed at www.svecsd.org The Spencer-Van Etten Central School District website is continually updated with the most current information. Provided below are shortcuts to information you may be looking for. Go to www.svecsd.org and add the shortcut below (i.e. www.svescsd.org/parentinfo.cfm): Athletics athletics.cfm; Board of Education board.cfm; Cafeteria Information cafeteria.cfm; Calendar districtcal.cfm; Community Information communityinfo.cfm; Curriculum curriculum.cfm; District Information districtinfo.cfm; Elementary School ES.cfm; High School HS.cfm; Instructional Support is.cfm; Middle School MS.cfm; Music Department music.cfm; Parent Information parentinfo.cfm; Parent Portal parentportal.cfm; SafeArrival Program safearrival.cfm. Also, find us on Facebook at: Spencer-Van Etten Central School District New York State Non-Profit Organization US Postage PAID Spencer, NY 14883 Permit #22 Spencer-Van Etten Central School District 16 Dartts Crossroad Spencer NY 14883 Postal Patron Local NYS Education Department Written Complaint & Appeal Procedures Any public or non-public school parent or teacher, other interested person or agency may file a complaint concerning violations of ESEA Title I, Parts A, C, and D
II. Procedures for Filing Complaints/Appeals with the New York State Education Department The State Education Department will review complaints An extension of the 60-day complaint resolution period is permitted under CFR Part 299.11 (b), for exceptional circumstances.