New Visions for Software Design and Productivity: Research and Applications



Recommended publications

ITSC 1309 - Integrated Software Application I Syllabus

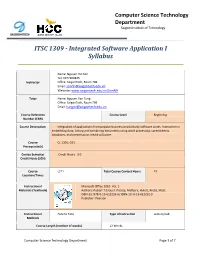

Computer Science Technology Department, Saigon Institute of Technology
ITSC 1309 - Integrated Software Application I Syllabus

Instructor: Nguyen Hai Son. Tel: 0977808425. Office: SaigonTech, Room 708. Email: [email protected] Website: www.saigontech.edu.vn/SonNH
Tutor: Nguyen Van Tung. Office: SaigonTech, Room 709. Email: [email protected]
Course Reference Number (CRN):
Course Level: Beginning
Course Description: Integration of applications from popular business productivity software suites. Instruction in embedding data, linking and combining documents using word processing, spreadsheets, databases, and presentation media software.
Course Prerequisite(s): CL 1301, GE3
Course Semester Credit Hours (SCH): 3.0 Credit Hours
Course Location/Times: L211
Total Course Contact Hours: 72
Instructional Materials (Textbook): Microsoft Office 2010. Vol. 1. Authors: Robert T. Grauer, Poatsy, Mulbery, Hulett, Krebs, Mast. ISBN-13: 978-0-13-612232-6 / ISBN-10: 0-13-612232-9. Publisher: Pearson
Type of Instruction: Face to Face
Instructional Methods: Lecture/Lab
Course Length (number of weeks): 12 Weeks

Learning Objective, Students Learning Outcome, and Program Spec

Note: This section of the syllabus provides the general course learning objectives, the expected student learning outcomes, the course scope in terms of the department program, and the instrument used to evaluate the course. If you have any questions, contact the instructor or the department.

Academic Discipline/CTE Program Student Learning Outcomes (PSLOs): 1. Install, configure, and administer Linux/UNIX and other systems. 2. Document work log, write clearly and appropriately in an Information Technology context, respect user’s data, including backup and security 3. -

Integrated Software Application I Syllabus

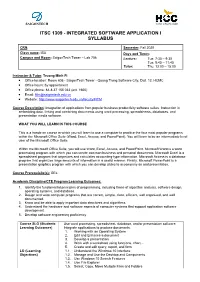

SAIGONTECH
ITSC 1309 - INTEGRATED SOFTWARE APPLICATION I SYLLABUS

CRN:
Semester: Fall 2020
Class name: ISA
Days and Times: Lecture: Tue. 7:30 – 9:30, Tue. 9:45 – 11:45. Tutor: Thu. 13:00 – 15:00
Campus and Room: SaigonTech Tower – Lab 706
Instructor & Tutor: Truong Minh Fi
Office location: Room 606 - SaigonTech Tower - Quang Trung Software City, Dist. 12, HCMC
Office hours: by appointment
Office phone: 84-8-37 155 033 (ext. 1650)
Email: [email protected]
Website: http://www.saigontech.edu.vn/faculty/FiTM

Course Description: Integration of applications from popular business productivity software suites. Instruction in embedding data, linking and combining documents using word processing, spreadsheets, databases, and presentation media software.

WHAT YOU WILL LEARN IN THIS COURSE

This is a hands-on course in which you will learn to use a computer to practice the four most popular programs within the Microsoft Office Suite (Word, Excel, Access, and PowerPoint). You will learn to be an intermediate level user of the Microsoft Office Suite. Microsoft Word is a word processing program with which you can create common business and personal documents. Microsoft Excel is a spreadsheet program that organizes and calculates accounting-type information. Microsoft Access is a database program that organizes large amounts of information in a useful manner. Finally, Microsoft PowerPoint is a presentation graphics program with which you can develop slides to accompany an oral presentation.

Course Prerequisite(s): GE3

Academic Discipline/CTE Program Learning Outcomes: 1. Identify the fundamental principles of programming, including those of algorithm analysis, software design, operating systems, and database. -

Chapter 1 Issues—The Software Crisis

Chapter 1 Issues: The Software Crisis

1. Introduction to Chapter

The term "software crisis" has been used since the late 1960s to describe those recurring system development problems in which software development problems cause the entire system to be late, over budget, not responsive to the user and/or customer requirements, and difficult to use, maintain, and enhance. The late Dr. Winston Royce, in his paper Current Problems [1], emphasized this situation when he said in 1991:

The construction of new software that is both pleasing to the user/buyer and without latent errors is an unexpectedly hard problem. It is perhaps the most difficult problem in engineering today, and has been recognized as such for more than 15 years. It is often referred to as the "software crisis".

This chapter describes some of the current issues and problems in system development that are caused by software: software that is late, is over budget, and/or does not meet the customers' requirements or needs.

Software is the set of instructions that govern the actions of a programmable machine. Software includes application programs, system software, utility software, and firmware. Software does not include data, procedures, people, and documentation. In this tutorial, "software" is synonymous with "computer programs."

Because software is invisible, it is difficult to be certain of development progress or of product completeness and quality. Software is not governed by the physical laws of nature: there is no equivalent of Ohm's Law, which governs the flow of electricity in a circuit; the laws of aerodynamics, which act to keep an -

Software Engineering and Ethics: When Code Goes Bad

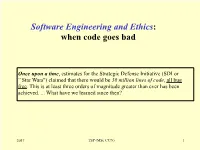

Software Engineering and Ethics: when code goes bad

Once upon a time, estimates for the Strategic Defense Initiative (SDI or "Star Wars") claimed that there would be 30 million lines of code, all bug free. This is at least three orders of magnitude greater than ever has been achieved. ... What have we learned since then?

2017 TSP (MSc CCN)

Lecture Plan

"It is a bad plan that admits of no modification" (Publilius Syrus)

• Software Crisis
• Ethics
• Software Engineering
• Software Engineering and Ethics
• The root of the problem - computer science boundaries?
• When code goes bad - education through classic examples
• Proposals for the future

Software Crisis

Do you recognise this?

Software Engineering and “Bugs”

"When everyone is wrong everyone is right" (Nivelle de La Chaussee)

QUESTION: What’s the difference between hardware and software? … buy some hardware and you get a warranty, buy some software and you get a disclaimer

The software crisis (does this really exist?):

• always late
• always over-budget
• always buggy
• always hard to maintain
• always better the next time round … but never is!

This doesn’t seem right … where are our ethics?

Is there really a crisis?

… To avoid crisis, just hire the best people … look at the advances we have made

Success in software development depends most upon the quality of the people involved. There is more software to be developed than there are capable developers to do it. Demand for engineers will continue to outstrip supply for the foreseeable future. Complacency has already set in … some firms acknowledge that many of their engineers make a negative contribution. -

arXiv:2105.00534v1 [cs.SE] 2 May 2021 Solutions

Metadata Interpretation Driven Development

Júlio G.S.F. da Costa (a,d), Reinaldo A. Petta (c,d), Samuel Xavier-de-Souza (a,b,d)

(a) Graduate Program of Electrical and Computer Engineering; (b) Departamento de Engenharia de Computação e Automação; (c) Departamento de Geologia; (d) Universidade Federal do Rio Grande do Norte, Natal, RN, Brazil, 59078-900

Abstract

Context: Despite decades of engineering and scientific research efforts, separation of concerns in software development remains not fully achieved. The ultimate goal is to truly allow code reuse without large maintenance and evolution costs. The challenge has been to avoid the crosscutting of concerns phenomenon, which has no apparent complete solution.

Objective: In this paper, we show that business-domain code inscriptions play an even larger role in this challenge than the crosscutting of concerns. We then introduce a new methodology, called Metadata Interpretation Driven Development (MIDD), that suggests a possible path to realize separation of concerns by removing functional software concerns from the coding phase.

Method: We propose a change in the perspective for building software, from being based on object representation at the coding level to being based on object interpretation, whose definitions are put into layers of representation other than coding. The metadata of the domain data is not implemented at the level of the code. Instead, it is interpreted at run time.

Results: As an important consequence, such constructs can be applied across functional requirements, across business domains, with no concerns regarding the need to rewrite or refactor code. We show how this can increase the (re)use of the constructs. -
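The idea of interpreting domain metadata at run time, rather than writing it into the code, can be illustrated with a small sketch. This is not the authors' MIDD implementation: the metadata layout, the names `CUSTOMER_METADATA`, `PRODUCT_METADATA`, `TYPE_MAP`, and `validate`, and the choice of validation as the example task are all assumptions made here for illustration. The point is only that one generic function can serve two business domains because the domain rules live in data.

```python
from typing import Any, Dict, List

# Hypothetical metadata for two unrelated business domains.
# In a metadata-driven design this would live outside the code
# (for example in a database or a configuration file).
CUSTOMER_METADATA: Dict[str, Any] = {
    "entity": "customer",
    "fields": [
        {"name": "name", "type": "str", "required": True},
        {"name": "age", "type": "int", "required": False, "min": 0},
    ],
}

PRODUCT_METADATA: Dict[str, Any] = {
    "entity": "product",
    "fields": [
        {"name": "sku", "type": "str", "required": True},
        {"name": "price", "type": "float", "required": True, "min": 0},
    ],
}

# Maps type names used in the metadata to Python types.
TYPE_MAP = {"str": str, "int": int, "float": float, "bool": bool}


def validate(record: Dict[str, Any], metadata: Dict[str, Any]) -> List[str]:
    """Check a record against metadata interpreted at run time.

    The function contains no domain-specific names: every rule it
    applies is read from the metadata argument.
    """
    errors: List[str] = []
    for field in metadata["fields"]:
        name = field["name"]
        if name not in record:
            if field.get("required", False):
                errors.append(f"{name}: missing required field")
            continue
        value = record[name]
        if not isinstance(value, TYPE_MAP[field["type"]]):
            errors.append(f"{name}: expected {field['type']}")
            continue
        if "min" in field and value < field["min"]:
            errors.append(f"{name}: below minimum {field['min']}")
    return errors


if __name__ == "__main__":
    print(validate({"name": "Ada", "age": 36}, CUSTOMER_METADATA))
    print(validate({"sku": "A1", "price": "cheap"}, PRODUCT_METADATA))
```

Adding a third domain in this sketch means adding another metadata dictionary; `validate` itself stays unchanged, which is the kind of reuse the abstract describes.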

Claris Buys out Styleware

August 1988, Vol. 4, No. 7. ISSN 0885-4017. Newstand price: $2.50. Photocopy charge per page: $0.15. Releasing the power to everyone.

Claris buys out StyleWare

Claris Corporation has purchased all the outstanding stock of Houston-based StyleWare, Inc. StyleWare currently publishes eight Apple II products, but it was clearly number nine, GSWorks (see last month's issue, page 4.46), that opened the purse at Claris.

Claris has already renamed the product AppleWorks GS. Claris said it will work with StyleWare's developers over the next few months to complete development and testing of the program. It expects to ship AppleWorks GS before the end of the year. StyleWare's operations will be moved from Houston to Claris' California headquarters following the completion of AppleWorks GS.

Miscellanea

Apple has diagnosed and fixed the Apple IIgs disappearing disk drive problem (August 1987, page 3.54; September 1987, page 3.61). Symptoms of this problem are that one or more disk drives attached to a IIgs will turn themselves on for no apparent reason and stay on. Pressing control-reset stops the drive, but, after that, attempting to access any Apple 3.5 drives on the SmartPort daisy chain will return a NO DEVICE CONNECTED message. The computer must be turned off and back on to recover access to the drive. No permanent damage occurs to the drive or the disk inside it.

The problem occurs only with Apple 3.5 drives (not UniDisk 3.5) -

Unit 6: Computer Software

Unit 6: Computer Software

Introduction

Collectively, computer programs are known as computer software. This unit, consisting of four lessons, presents different aspects of computer software. Lesson 1 introduces software and its classification. System software, which assists users in developing programs for solving user problems, is presented in Lesson 2. Many programs for widely used applications are available commercially. These programs are popularly known as application packages or package programs or simply packages. Advantages of package programs and a brief outline of popular packages for word processing, spreadsheet analysis, database management systems, desktop publishing, and graphics applications are discussed in Lesson 3. Tasks for developing computer programs and a brief introduction to some common programming languages are presented in Lesson 4.

Lesson 1: Introduction and Classification

1.1 Learning Objectives

On completion of this lesson you will be able to

• understand the concept of software
• distinguish between system software and application software
• know components of system software and types of application software.

1.2 Software

Software of a computer system is intangible rather than physical. It is the term used for any type of program. Software consists of statements, which instruct a computer to perform the required task. Without software a computer is simply a mass of electronic components. For a computer to input, store, make decisions, arithmetically manipulate and output data in the correct sequence it must have access to appropriate programs. Thus, the software includes all the activities associated with the successful development and operation of the computing system other than the hardware pieces. -

Edsger Wybe Dijkstra

In Memoriam Edsger Wybe Dijkstra (1930–2002)

Contents: 0 Early Years; 1 Mathematical Center, Amsterdam; 2 Eindhoven University of Technology; 3 Burroughs Corporation; 4 Austin and The University of Texas; 5 Awards and Honors; 6 Written Works; 7 Prophet and Sage; 8 Some Memorable Quotations

Edsger Wybe Dijkstra, Professor Emeritus in the Computer Sciences Department at the University of Texas at Austin, died of cancer on 6 August 2002 at his home in Nuenen, the Netherlands. Dijkstra was a towering figure whose scientific contributions permeate major domains of computer science. He was a thinker, scholar, and teacher in renaissance style: working alone or in a close-knit group on problems of long-term importance, writing down his thoughts with exquisite precision, educating students to appreciate the nature of scientific research, and publicly chastising powerful individuals and institutions when he felt scientific integrity was being compromised for economic or political ends.

In the words of Professor Sir Tony Hoare, FRS, delivered by him at Dijkstra’s funeral: Edsger is widely recognized as a man who has thought deeply about many deep questions; and among the deepest questions is that of traditional moral philosophy: How is it that a person should live their life? Edsger found his answer to this question early in his life: He decided he would live as an academic scientist, conducting research into a new branch of science, the science of computing. He would lay the foundations that would establish computing as a rigorous scientific discipline; and in his research and in his teaching and in his writing, he would pursue perfection to the exclusion of all other concerns. -

Design Rules, Volume 2: How Technology Shapes Organizations

Design Rules, Volume 2: How Technology Shapes Organizations. Chapter 17: The Wintel Standards-based Platform. Carliss Y. Baldwin, Harvard Business School. Working Paper 20-055.

Copyright © 2019 by Carliss Y. Baldwin. Working papers are in draft form. This working paper is distributed for purposes of comment and discussion only. It may not be reproduced without permission of the copyright holder. Copies of working papers are available from the author. Funding for this research was provided in part by Harvard Business School. Comments welcome. Please do not circulate or quote.

Note to Readers: This is a draft of Chapter 17 of Design Rules, Volume 2: How Technology Shapes Organizations. It builds on prior chapters, but I believe it is possible to read this chapter on a stand-alone basis. The chapter may be cited as: Baldwin, C. Y. (2019) “The Wintel Standards-based Platform,” HBS Working Paper (November 2019). I would be most grateful for your comments on any aspect of this chapter! Thank you in advance, Carliss.

Abstract

The purpose of this chapter is to use the theory of bottlenecks laid out in previous chapters to better understand the dynamics of an open standards-based platform. I describe how the Wintel platform evolved from 1990 through 2000 under joint sponsorship of Intel and Microsoft. I first describe a series of technical bottlenecks that arose in the early 1990s concerning the “bus architecture” of IBM-compatible PCs. -

Unit Structure: 1.1 Software Crisis 1.2 Software Evaluation 1.3 POP (Procedure Oriented Programming) 1.4 OOP (Object Oriented

Unit Structure:

1.1 Software Crisis
1.2 Software Evaluation
1.3 POP (Procedure Oriented Programming)
1.4 OOP (Object Oriented Programming)
1.5 Basic concepts of OOP: 1.5.1 Objects; 1.5.2 Classes; 1.5.3 Data Abstraction and Data Encapsulation; 1.5.4 Inheritance; 1.5.5 Polymorphism; 1.5.6 Dynamic Binding; 1.5.7 Message Passing
1.6 Benefits of OOP
1.7 Object Oriented Language
1.8 Application of OOP
1.9 Introduction of C++: 1.9.1 Application of C++
1.10 Simple C++ Program: 1.10.1 Program Features; 1.10.2 Comments; 1.10.3 Output Operators; 1.10.4 iostream File; 1.10.5 Namespace; 1.10.6 Return Type of main()
1.11 More C++ Statements: 1.11.1 Variable; 1.11.2 Input Operator; 1.11.3 Cascading I/O Operator
1.12 Example with Class
1.13 Structure of C++
1.14 Creating Source File
1.15 Compiling and Linking

1.1 Software Crisis

Developments in software technology continue to be dynamic. New tools and techniques are announced in quick succession. This has forced software engineers and the industry to continuously look for new approaches to software design and development, and these approaches are becoming more and more critical in view of the increasing complexity of software systems as well as the highly competitive nature of the industry. These rapid advances appear to have created a situation of crisis within the industry. The following issues need to be addressed to face the crisis:

• How to represent real-life entities of problems in system design?
• How to design systems with open interfaces?
• How to ensure reusability and extensibility of modules?
• How to develop modules that are tolerant of any changes in future?
• How to improve software productivity and decrease software cost?
• How to improve the quality of software?
• How to manage time schedules?

1.2 Software Evaluation

Ernest Tello, a well-known writer in the field of artificial intelligence, compared the evolution of software technology to the growth of a tree. -

Introduction to Computers Anthony W

University of Montana, ScholarWorks at University of Montana. Syllabi, Course Syllabi. Fall 9-1-2018.

CAPP 120.05: Introduction to Computers. Anthony W. Becker, University of Montana - Missoula, [email protected]

Let us know how access to this document benefits you. Follow this and additional works at: https://scholarworks.umt.edu/syllabi

Recommended Citation: Becker, Anthony W., "CAPP 120.05: Introduction to Computers" (2018). Syllabi. 8529. https://scholarworks.umt.edu/syllabi/8529

This Syllabus is brought to you for free and open access by the Course Syllabi at ScholarWorks at University of Montana. It has been accepted for inclusion in Syllabi by an authorized administrator of ScholarWorks at University of Montana. For more information, please contact [email protected].

Business Technology Department COURSE SYLLABUS CAPP120-AU18 - Becker

COURSE NUMBER AND TITLE: CAPP 120 – Introduction to Computers
SEMESTER CREDITS: 3
PREREQUISITES: None
FACULTY: Anthony Becker
E-MAIL: [email protected]
PHONE: 243-7817
OFFICE: MC 413
OFFICE HOURS: Mondays: 3:00 – 4:00pm, Tuesdays: 8:00 – 9:00am, and Thursdays: 1:00 – 2:00pm, or by appointment.

RELATIONSHIP TO PROGRAM(S): This course provides students with a comprehensive foundation for computer technology, hardware, and software through practical activities.

COURSE DESCRIPTION: Introduction to Computers offered autumn and spring. Introduction to computer terminology, hardware, and software, including wire/wireless communications and multimedia devices. Students utilize word processing, spreadsheet, database, and presentation applications to create projects common to business and industry in a networked computing environment. Internet research, e-mail usage, and keyboarding proficiency are integrated.

STUDENT PERFORMANCE OUTCOMES: Occupational Performance Objectives. Upon completion of this course, the student will be able to: 1. -

Computer Software Topic Three: Question

TOPIC THREE: Computer Software

Topic Three: Question One

(a). Define the term computer software.

Software is a collection of coded scientific instructions that are needed for a computer to work or function. Software is often called a computer program.

(b). State the difference between packaged and integrated software.

Packaged software is commercial software, which is copyrighted and designed to meet the needs of a wide variety of users, while integrated software combines application programs such as word processing, spreadsheet, and database into a single, easy-to-use package, i.e., the programs cannot be purchased individually.

(c). (i). What is a software suite?

A software suite is a collection of individual application software packages sold as a single entity.

(ii).

• Breadbox Office: DOS software, but has been successfully tested with Win3.x, Win95/98/98SE/ME, WinNT4.0, Win2000 and the 32-bit versions of WinXP, WinVista and Win7.0.
• Calligra Suite is the continuation of KOffice under a new name. It is part of the KDE Software Compilation.
• Celframe Office: supports Microsoft Office and other popular file formats, with a user interface styled on Microsoft Office 2003.
• ContactOffice: an AJAX-based online office suite. The suite includes personal and shared Calendar, Document, Messaging, Contact, Wiki,... tools. Available free and as an enterprise service.
• Corel WordPerfect for DOS: a word processor, spreadsheet, and presentation software from Corel (containing WordPerfect 6.2, Quattro Pro 5.6, Presentations 2.1, and Shell 4.0c).
• Documents To Go (Android and others)
• EasyOffice
• EIOffice (Evermore Integrated Office): a Chinese / English / Japanese / French language integrated office suite. Available for