Extreme Compression of Grayscale Images

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

WideTEK® Wide Format MFP Solutions 17

The WideTEK® 36CL-MF scanner forms the basis of all of the MF solutions and is the fastest, most productive wide format MFP solution on the market, running at 10 inches per second at 200 dpi in full color, at the lowest possible price. At the full width of 36" and 600 dpi resolution, the scanner still runs at 1.7 inches per second. The WideTEK® 36CL-MF is the best choice to build a powerful and high quality MFP with either an integrated printer series specific stand, a high stand or a standalone stand. All MFP solutions come with a built in closed loop color calibration function producing the best possible copies on your selected printer and paper combination.
Features:
• Face up scanning with on the fly rotation and modification of images without rescanning. Automated crop & deskew.
• Built in closed loop color calibration function for best possible copies
• LED lamps, no warm up, IR/UV free
• Large 21" full HD color touchscreen for simple operation in optional bundles
• Scan2USB, FTP, SMB and Network.
Scanner Optics: CIS camera, scans in color, grayscale, B&W
Resolution: 1200 x 1200 dpi scanner resolution, 9600 x 9600 dpi interpolated (optional)
Document Size: 965 mm (38 inch) width, nearly unlimited length. Scans 915 mm (36 inch) widths
Scanning Speed: 200 dpi - 15 m/min (10 inch/s) in color; 600 dpi - 8 m/min (5 inch/s) in grayscale
Color Depth: 48 bit color, 16 bit grayscale
Scan output: 24 bit color, 8 bit grayscale, bitonal, enhanced halftone
Output Formats: Multipage PDF (PDF/A) and TIFF, JPEG, JPEG 2000, PNM, PNG, BMP, TIFF (Raw, G3, G4, LZW, JPEG), AutoCAD DWF, JBIG, DjVu, DICOM, PCX, Postscript, EPS, Raw data

Optimization of Image Compression for Scanned Document's Storage

ISSN: 0976-8491 (Online) | ISSN: 2229-4333 (Print). IJCST Vol. 4, Issue 1, Jan - March 2013
Optimization of Image Compression for Scanned Document’s Storage and Transfer
Madhu Ronda S, Soft Engineer, K L University, Green fields, Vaddeswaram, Guntur, AP, India
Abstract: Today highly efficient file storage rests on quality controlled scanner image data technology. Equivalently necessary and faster file transfers depend on greater lossy but higher compression technology. A turn around in time is necessitated by reconsidering parameters of data storage and transfer regarding both quality and quantity of corresponding image data used. This situation is actuated by improvised and patented advanced image compression algorithms that have potential to regulate commercial market. At the same time free and rapid distribution of widely available image data on internet made much of operating software reach open source platform. In summary, application compression of
coding, and lossy compression for bitonal (monochrome) images. This allows for high-quality, readable images to be stored in a minimum of space, so that they can be made available on the web. DjVu pages are highly compressed bitmaps (raster images)[20]. DjVu has been promoted as an alternative to PDF, promising smaller files than PDF for most scanned documents. The DjVu developers report that color magazine pages compress to 40–70 kB, black and white technical papers compress to 15–40 kB, and ancient manuscripts compress to around 100 kB; a satisfactory JPEG image typically requires 500 kB. Like PDF, DjVu can contain an OCR text layer, making it easy to perform copy and paste and text search operations.

R-Photo User's Manual

User's Manual
© R-Tools Technology Inc 2020. All rights reserved. www.r-tt.com
No part of this User's Manual may be copied, altered, or transferred to, any other media without written, explicit consent from R-Tools Technology Inc. All brand or product names appearing herein are trademarks or registered trademarks of their respective holders. R-Tools Technology Inc. has developed this User's Manual to the best of its knowledge, but does not guarantee that the program will fulfill all the desires of the user. No warranty is made in regard to specifications or features. R-Tools Technology Inc. retains the right to make alterations to the content of this Manual without the obligation to inform third parties.
Table of Contents
I Start
II Quick Start Guide in 3 Steps
1 Step 1. Disk Selection
2 Step 2. Files Marking
3 Step 3. Recovery
III Features
1 File Sorting
2 File Search

Entropy Coding 83

X-Media Audio, Image and Video Coding
Laurent Duval (IFP Energies nouvelles), Eric Debes (Thalès), Philippe Morosini (Supélec)
Course materials (Moodle/NTNoe): http://www.laurent-duval.eu/lcd-lecture-supelec-xmedia.html
General information
Contents
• Introduction to X-Media coding: generic principles
• Audio data: recording/sampling, physiology
• Data, audio coding: LZ*, MPEG Layer 3
• Image coding: JPEG vs. JPEG 2000
• Video coding: MPEG formats, H.264
• Bonuses, exercises
Initial motivation
• Coding/compression as a DSP engineer discipline: information reduction, standards & adaption, complexity, integrity & security issues, interaction with SP pipeline
• Composite domain, yet ubiquitous: sampling (Nyquist-Shannon, filter banks), statistical SP (KLT, decorrelation), transforms (Fourier, wavelets), classification (quantization, K-means), functional spaces (basis & frames), information theory (entropy), error measurements, modelling
[Diagram: Digital Image Processing. Digital Image Characteristics (Spatial, Spectral, Gray-level Histogram, DFT, DCT); Pre-Processing (Enhancement, Restoration, Point Processing, Masking, Filtering, Degradation Models, Inverse Filtering, Wiener Filtering); Compression (Information Theory, Lossless, Lossy, LZW (gif), Transform-based (jpeg)); Segmentation (Edge Detection); Description (Shape Descriptors, Texture, Morphology)]
• Exemplar: underline importance of specific steps (related to other lectures) to make stuff work; which algorithm for which task? Process text? sound? images? video? "the toolbox quote" (Juran)
• Evolving: steady evolution of standards, tools, overview of future directions (and tools)
• Central to SP task: what is really important in my data? (stored) info. overflow + degradations (decon.)
Principles
• What is data (image) compression? Data compression is the art and science of representing information in a compact form. Data is a sequence of symbols taken from a discrete alphabet.
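The definition quoted in the excerpt above (data as a sequence of symbols from a discrete alphabet) can be illustrated with a short calculation of empirical entropy, the quantity that entropy coders aim to approach. This is a minimal sketch, not taken from the lecture: the function name `shannon_entropy` and the sample pixel values are hypothetical, and the estimate is zeroth-order (it ignores any dependence between neighbouring symbols).

```python
from collections import Counter
from math import log2


def shannon_entropy(symbols):
    """Empirical zeroth-order entropy of a symbol sequence, in bits per symbol."""
    total = len(symbols)
    if total == 0:
        return 0.0
    counts = Counter(symbols)
    # H = -sum(p * log2(p)) over the observed symbol frequencies
    return -sum((n / total) * log2(n / total) for n in counts.values())


# Hypothetical 8-pixel grayscale strip with three distinct gray levels
pixels = [0, 0, 0, 0, 255, 255, 128, 128]
print(shannon_entropy(pixels))  # 1.5 bits per pixel, versus 8 bits stored raw
```

Under this simple model, the strip needs about 1.5 bits per pixel on average, which is the kind of gap between raw and coded size that lossless entropy coding exploits.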

Document Image Segmentation and Compression

DOCUMENT IMAGE SEGMENTATION AND COMPRESSION
A Thesis Submitted to the Faculty of Purdue University by Hui Cheng In Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy, August 1999
To my beloved wife Liu, Qian. To my wonderful parents Cheng, Zuoqin and Li, Heying.
ACKNOWLEDGMENTS
I would like to extend my most sincere thanks to my advisor, Professor Charles A. Bouman for his guidance, encouragement and all the things that he had done in helping me develop my professional and personal skills. I am certain that I will benefit from his rigorous scientific approach, and the way of critical thinking throughout my future career. Most of all, my deepest thanks go to my wife Qian, my parents and my family. I can not thank them enough for their love, support, sacrifice and their belief in me.
I want to thank my advisory committee members: Professor Jan P. Allebach, Professor Edward J. Delp, and Professor Bradley J. Lucier for their constructive suggestions and comments. Also, my thanks go to Dr. Zhigang Fan, Dr. Ricardo L. de Queiroz, Dr. Chi-hsin Wu and Dr. Steve J. Harrington of Xerox Corporation for their valuable advice and suggestions. I thank Dr. Faouzi Kossentini and Mr. Dave Tompkins of Department of Electrical and Computer Engineering, University of British Columbia for providing us the JBIG2 coder. In addition, I am grateful to all my friends who gave me help, support, and encouragement. Thank you all!
I would also like to thank Xerox Corporation, Xerox Foundation, and Xerox IMPACT Imaging for their generous financial support.

IDOL Keyview Viewing SDK 12.7 Programming Guide

KeyView Software Version 12.7
Viewing SDK Programming Guide
Document Release Date: October 2020
Software Release Date: October 2020
Legal notices
Copyright notice: © Copyright 2016-2020 Micro Focus or one of its affiliates. The only warranties for products and services of Micro Focus and its affiliates and licensors (“Micro Focus”) are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. Micro Focus shall not be liable for technical or editorial errors or omissions contained herein. The information contained herein is subject to change without notice.
Documentation updates
The title page of this document contains the following identifying information:
• Software Version number, which indicates the software version.
• Document Release Date, which changes each time the document is updated.
• Software Release Date, which indicates the release date of this version of the software.
To check for updated documentation, visit https://www.microfocus.com/support-and-services/documentation/.
Support
Visit the MySupport portal to access contact information and details about the products, services, and support that Micro Focus offers. This portal also provides customer self-solve capabilities. It gives you a fast and efficient way to access interactive technical support tools needed to manage your business. As a valued support customer, you can benefit by using the MySupport portal to:
• Search for knowledge documents of interest
• Access product documentation
• View software vulnerability alerts
• Enter into discussions with other software customers
• Download software patches
• Manage software licenses, downloads, and support contracts
• Submit and track service requests
• Contact customer support
• View information about all services that Support offers
Many areas of the portal require you to sign in.

Image Access WideTEK WT12-650 Specifications

Technical Specifications WideTEK WT12-650
Optical System
Scanner resolution: 1200 x 1200 dpi
Optical resolution: 1200 x 600 dpi
Pixel dimensions: 9.3 x 9.3 µm
Sensor type: 2Tri-Color CCD, encapsulated and dust-proof
Color depth: 48 bit color, 16 bit grayscale (internal resolution)
Sensor resolution: 22,5000 active pixels
Scan modes: 24 bit color, 8 bit color indexed, 8 bit grayscale, bitonal, enhanced halftone
Scan accuracy: Better than ± 0.1% over max. scan area
File formats: Multipage PDF (PDF/A) and TIFF, JPEG, JPEG 2000, PNM, PNG, BMP, TIFF (Raw, G3, G4, LZW, JPEG), AutoCAD DWF, JBIG, DjVu, DICOM, PCX, Postscript, EPS, Raw data
Version: 1.0 Date created: July 2017 Revised:
Scanning Speed
Scan time, 24 bit color, full bed, 300 dpi: Less than 3 seconds
Scan time, 24 bit color, full bed, 600 dpi: Less than 6 seconds
Cycle time 300 dpi, all options on: Less than 6 seconds
Cycle time 600 dpi, all options on: Less than 13 seconds
Illumination System
Light source: Two lamps with white LEDs, integrated optical diffusor
Warm-up time of the lamps: None. Maximum brightness after switching on.
Temperature dependency: None
UV / IR radiation: None
Lifetime of the LEDs: 50,000 hours (typ.)
Electrical Specifications
External power supply
Input voltage: 100 – 240 VAC
Frequency: 47 – 63 Hz
Output voltage: 24 VDC
Output current: 6.25 A
ECO standard: CEC level VI
Power Consumption
Input voltage: 24 VDC
Input current (non fused): max. 5 A
Sleep: < 0.5 W
Standby: 2.5 W
Ready to scan: 28 W
Scanning: 55 W
Documents Specification
Document size: 317 x 470 mm / 12.5 x 18.5 inch
Document weight: Up to 10 kg / 22 lbs.

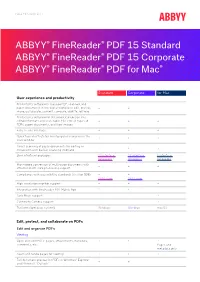

Full Feature List

FULL FEATURE LIST
ABBYY® FineReader® PDF 15 Standard, ABBYY® FineReader® PDF 15 Corporate, ABBYY® FineReader® PDF for Mac®
Columns: Standard, Corporate, for Mac (a "+" marks availability; marks are listed in the order given in the source)
User experience and productivity
• Productivity software to manage PDF, scanned, and paper documents in the digital workplace (edit, protect, share, collaborate, convert, compare, digitize, retrieve): + +
• Productivity software for document conversion into editable formats and searchable PDFs for all types of PDFs, paper documents, and their images: + + +
• Easy-to-use interface: + + +
• Quick task shortcuts for most popular scenarios in the start window: + + +
• Direct scanning of paper documents for editing or conversion with built-in scanning interface: + + +
• User interface languages: 23 interface languages¹, 23 interface languages¹, 6 interface languages¹
• High-speed conversion of multi-page documents with effective multi-core processing support: + + +
• Compliance with accessibility standards (Section 508): + + (VPAT form, VPAT form)
• High-resolution monitor support: + + +
• Integration with FineReader PDF Mobile App: + +
• Dark Mode support: +
• Continuity Camera support: +
• Platform (operating system): Windows, Windows, macOS
Edit, protect, and collaborate on PDFs
Edit and organize PDFs
Viewing
• Open and view PDFs (pages, attachments, metadata, comments, etc.): + + + (Pages and metadata only)
• Zoom and rotate pages for viewing: + + +
• Full document preview for PDFs in Windows® Explorer and Microsoft® Outlook®: + +
• Set FineReader PDF as default PDF viewer: + + +
• Various PDF viewing modes: full screen, one

JPEG 2000 - a Practical Digital Preservation Standard?

Technology Watch Report
JPEG 2000 - a Practical Digital Preservation Standard?
Robert Buckley, Ph.D., Xerox Research Center Webster, Webster, New York
DPC Technology Watch Series Report 08-01, February 2008
© Digital Preservation Coalition 2008
Executive Summary
JPEG 2000 is a wavelet-based standard for the compression of still digital images. It was developed by the ISO JPEG committee to improve on the performance of JPEG while adding significant new features and capabilities to enable new imaging applications. The JPEG 2000 compression method is part of a multi-part standard that defines a compression architecture, file format family, client-server protocol and other components for advanced applications. Instead of replacing JPEG, JPEG 2000 has created new opportunities in geospatial and medical imaging, digital cinema, image repositories and networked image access. These opportunities are enabled by the JPEG 2000 feature set:
• A single architecture for lossless and visually lossless image compression
• A single JPEG 2000 master image can supply multiple derivative images
• Progressive display, multi-resolution imaging and scalable image quality
• The ability to handle large and high-dynamic range images
• Generous metadata support
With JPEG 2000, an application can access and decode only as much of the compressed image as needed to perform the task at hand. This means a viewer, for example, can open a gigapixel image almost instantly by retrieving and decompressing a low resolution, display-sized image from the JPEG 2000 codestream. JPEG 2000 also improves a user’s ability to interact with an image. The zoom, pan, and rotate operations that users increasingly expect in networked image systems are performed dynamically by accessing and decompressing just those parts of the JPEG 2000 codestream containing the compressed image data for the region of interest.
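The multi-resolution idea described in this excerpt, where a viewer retrieves only a small, display-sized version of a large image, can be sketched with a plain resolution pyramid. The code below is only an illustration under simplifying assumptions: it averages 2x2 pixel blocks rather than applying the wavelet filters that JPEG 2000 actually specifies, it does not read or write JPEG 2000 codestreams, and the names `halve` and `pyramid` are hypothetical.

```python
def halve(image):
    """Average each 2x2 block of a grayscale image (list of rows) to halve its resolution."""
    height = len(image) // 2 * 2      # ignore a trailing odd row
    width = len(image[0]) // 2 * 2    # ignore a trailing odd column
    return [
        [
            (image[y][x] + image[y][x + 1] + image[y + 1][x] + image[y + 1][x + 1]) / 4
            for x in range(0, width, 2)
        ]
        for y in range(0, height, 2)
    ]


def pyramid(image, levels):
    """Return [full resolution, 1/2, 1/4, ...], stopping early once the image is too small."""
    out = [image]
    for _ in range(levels):
        current = out[-1]
        if len(current) < 2 or len(current[0]) < 2:
            break
        out.append(halve(current))
    return out


# Hypothetical 4x4 grayscale image
img = [
    [0, 0, 4, 4],
    [0, 0, 4, 4],
    [8, 8, 12, 12],
    [8, 8, 12, 12],
]
levels = pyramid(img, 2)
print(levels[1])  # [[0.0, 4.0], [8.0, 12.0]]
print(levels[2])  # [[6.0]]
```

A viewer working this way would fetch the coarsest level first and request finer levels only for the region being displayed.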

Supported File Types

Supported file types

| File Type | Support | Description | EXIF | IPTC | XMP | ICC¹ | Other |
|---|---|---|---|---|---|---|---|
| 3FR | R | Hasselblad RAW (TIFF-based) | R | R | R | R | - |
| 3G2, 3GP2 | R/W | 3rd Gen. Partnership Project 2 a/v (QuickTime-based) | R/W² | R/W² | R/W/C | - | R/W QuickTime³ |
| 3GP, 3GPP | R/W | 3rd Gen. Partnership Project a/v (QuickTime-based) | R/W² | R/W² | R/W/C | - | R/W QuickTime³ |
| AA | R | Audible Audiobook | - | - | - | - | R Audible |
| AAX | R/W | Audible Enhanced Audiobook (QuickTime-based) | R/W² | R/W² | R/W/C | - | R/W QuickTime³ |
| ACR | R | American College of Radiology ACR-NEMA (DICOM-like) | - | - | - | - | R DICOM |
| AFM, ACFM, AMFM | R | Adobe [Composite/Multiple Master] Font Metrics | - | - | - | - | R Font |
| AI, AIT | R/W | Adobe Illustrator [Template] (PS or PDF) | R/W/C⁴ | R/W/C⁴ | R/W/C | R/W/C⁴ | R/W/C PDF PostScript, R Photoshop |
| AIFF, AIF, AIFC | R | Audio Interchange File Format [Compressed] | - | - | - | - | R AIFF ID3 |
| APE | R | Monkey’s Audio | - | - | - | - | R APE ID3 |
| ARW | R/W | Sony Alpha RAW (TIFF-based) | R/W/C | R/W/C | R/W/C | R/W/C | R/W Sony SonyIDC |
| ASF | R | Microsoft Advanced Systems Format | - | - | R | - | R ASF |
| AVI | R/W | Audio Video Interleaved (RIFF-based) | R/W | R/W | R/W | R/W | R RIFF |
| BMP, DIB | R | Windows BitMaP / Device Independent Bitmap | - | - | - | - | R BMP |
| BTF | R | BigTIFF (64-bit Tagged Image File Format) | R | R | R | R | - |
| CHM | R | Microsoft Compiled HTML format | - | - | - | - | R EXE |
| COS | R | Capture One Settings (XML-based) | - | - | - | - | R XML |
| CR2 | R/W | Canon RAW 2 (TIFF-based) | R/W/C | R/W/C | R/W/C | R/W/C | R/W/C CanonVRD, R/W Canon |
| CRW, CIFF | R/W | Canon RAW Camera Image File Format (CRW spec.) | - | - | R/W/C | - | R/W/C CanonVRD, R/W CanonRaw |
| CS1 | R/W | Sinar CaptureShop 1-shot RAW (PSD-based) | R/W/C | R/W/C | R/W/C | R/W/C | R Photoshop |

Digital Mathematics Library Some Technical and Organisatorial Suggestions

Digital Mathematics Library: Some Technical and Organisatorial Suggestions
Ulf Rehmann
Abstract. It is suggested to take DjVu as the basic format for the DML. This format is publicly documented, controlled by software in the public domain (GPL), compatible with all known image and text formats (public domain converters to and from those other formats exist). It is a multi-layer format allowing annotation and linking of the encoded digitized objects, coding and decoding software exists for all popular computer systems. Further, for the worldwide DML, an infrastructure setup is suggested which operates in the spirit of the Open Source Movement, with cooperation among all nationalities. Software and services useful for and developed in the DML system should be offered publicly and freely, preferably under license conditions as being used for Open Source. All services are understood to be available and useful not only in the world of mathematics, but for any scientific and public purposes.
1 Format
It is suggested to take DjVu as the basic format for the DML. This is a file format together with a compression method, whose underlying algorithms are designed for efficient storage, retrieval, and display of scanned document images on the World Wide Web. DjVu provides high compression rates by handling text and images differently, each in a highly efficient way. It provides progressivity, allowing an application to first display the text, then to display the images using a progressive buildup. It is designed to be implemented using data structures appropriate for efficient navigation within an image.

Contour Encoded Compression and Transmission

Brigham Young University, BYU ScholarsArchive
Theses and Dissertations, 2006-11-29
Contour Encoded Compression and Transmission
Christopher B. Nelson, Brigham Young University - Provo
Follow this and additional works at: https://scholarsarchive.byu.edu/etd
Part of the Computer Sciences Commons
BYU ScholarsArchive Citation: Nelson, Christopher B., "Contour Encoded Compression and Transmission" (2006). Theses and Dissertations. 1096. https://scholarsarchive.byu.edu/etd/1096
This Thesis is brought to you for free and open access by BYU ScholarsArchive. It has been accepted for inclusion in Theses and Dissertations by an authorized administrator of BYU ScholarsArchive. For more information, please contact [email protected], [email protected].
CONTOUR ENCODED COMPRESSION AND TRANSMISSION by Christopher Nelson
A thesis submitted to the faculty of Brigham Young University in partial fulfillment of the requirements for the degree of Master of Science, Department of Computer Science, Brigham Young University, December 2006
Copyright © 2006 Christopher B. Nelson. All Rights Reserved
BRIGHAM YOUNG UNIVERSITY GRADUATE COMMITTEE APPROVAL of a thesis submitted by Christopher B. Nelson
This thesis has been read by each member of the following graduate committee and by majority vote has been found to be satisfactory.
Committee: William Barrett, Chair; Thomas Sederberg; Eric Mercer
BRIGHAM YOUNG UNIVERSITY
As chair of the candidate’s graduate committee, I have read the thesis of Christopher B. Nelson in its final form and have found that (1) its format, citations and bibliographical style are consistent and acceptable and fulfill university and department style requirements; (2) its illustrative materials including figures, tables and charts are in place; and (3) the final manuscript is satisfactory to the graduate committee and is ready for submission to the university library.