Yocto Project 11 October 2016 Outline


Recommended publications


Embedded Linux Systems with the Yocto Project™

OPEN SOURCE SOFTWARE DEVELOPMENT SERIES. Embedded Linux Systems with the Yocto Project™. Rudolf J. Streif. Boston • Columbus • Indianapolis • New York • San Francisco • Amsterdam • Cape Town • Dubai • London • Madrid • Milan • Munich • Paris • Montreal • Toronto • Delhi • Mexico City • São Paulo • Sidney • Hong Kong • Seoul • Singapore • Taipei • Tokyo. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and the publisher was aware of a trademark claim, the designations have been printed with initial capital letters or in all capitals. The author and publisher have taken care in the preparation of this book, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein. For information about buying this title in bulk quantities, or for special sales opportunities (which may include electronic versions; custom cover designs; and content particular to your business, training goals, marketing focus, or branding interests), please contact our corporate sales department at [email protected] or (800) 382-3419. For government sales inquiries, please contact [email protected]. For questions about sales outside the U.S., please contact [email protected]. Visit us on the Web: informit.com. Cataloging-in-Publication Data is on file with the Library of Congress.

Lmod Or How to Protect Your Sanity from Dependency Hell

Lmod or how to protect your sanity from dependency hell
Steffen Müthing, Interdisciplinary Center for Scientific Computing, Heidelberg University
Dune User Meeting, Aachen, September 26, 2013
The Issue
• DUNE has a number of non-packaged dependencies
• Some of those contain libraries that are bound to a compiler / MPI version
• You have to support multiple compilers / MPIs
• Library developer (we try to be good about this...)
• Clusters with different compiler / MPI combinations
• Easily switch between release / debug version of MPI (only with dynamic linking)
• Use GCC in general, but switch to clang during the “fix compilation errors” stage
⇒ Have to keep around multiple versions of MPI, BLAS, ParMetis, ALUGrid, UGGrid, ...
Problems
• Do I already have ALUGrid for MPICH?
• If yes, where on earth did I put it?
• Did I really build it with the correct dependencies?
• Why does my build fail? Do all the libraries in my nice --with= actually work together?
• Look around for something that’s already there
• Distribution package managers (APT, rpm, Portage, MacPorts, homebrew, ...

With Yocto/Openembedded

PORTING NEW CODE TO RISC-V WITH YOCTO/OPENEMBEDDED
Martin Maas ([email protected]), 1st RISC-V Workshop, January 15, 2015, Monterey, CA
WHY WE NEED A LINUX DISTRIBUTION
• To build an application for RISC-V, you need to:
  – Download and build the RISC-V toolchain + Linux
  – Download, patch and build application + dependencies
  – Create an image and run it in QEMU or on hardware
• Problems with this approach:
  – Error-prone: Easy to corrupt FS or get a step wrong
  – Reproducibility: Others can’t easily reuse your work
  – Rigidity: If a dependency changes, need to do it all over
• We need a Linux distribution!
  – Automatic build process with dependency tracking
  – Ability to distribute binary packages and SDKs
RISCV-POKY: A PORT OF THE YOCTO PROJECT
• We ported the Yocto Project
  – Official Linux Foundation Workgroup, supported by a large number of industry partners
  – Part I: Collection of hundreds of recipes (scripts that describe how to build packages for different platforms), shared with OpenEmbedded project
  – Part II: Bitbake, a parallel build system that takes recipes and fetches, patches, cross-compiles and produces packages (RPM/DEB), images, SDKs, etc.
• Focus on build process and customizability
GETTING STARTED WITH RISCV-POKY
• Let’s build a full Linux system including the GCC toolchain, Linux, QEMU + a large set of packages (including bash, ssh, python, perl, apt, wget, …)
• Step I: Clone riscv-poky: git clone [email protected]:ucb-bar/riscv-poky.git
• Step II: Set up the build system: source oe-init-build-env
• Step III: Build an image (may

ROOT Package Management: “Lazy Install” Approach

ROOT package management: “lazy install” approach
Oksana Shadura, ROOT Monday meeting
Outline
● How we can improve artifact management (“lazy-install”) system for ROOT
● How to organise dependency management for ROOT
● Improvements to ROOT CMake build system
● Use cases for installing artifacts in the same ROOT session
Goals
● Familiarize ROOT team with our planned work
● Explain key misunderstandings
● Give a technical overview of root-get
● Explain how root-get and cmake can work in synergy
Non Goals
● We are not planning to replace CMake
● No change to the default build system of ROOT
● No duplication of functionality
● We are planning to “fill empty holes” for CMake
General overview
Manifest - why do we need it?
● Easy to write
● Easy to parse, while CMakeLists.txt is impossible to parse
● Collect information from ROOT’s dependencies + from “builtin dependencies” + OS dependencies + external packages to be plugged in ROOT (to be resolved after using DAG)
● It can be easily exported back as a CMakeLists.txt
● It can have extra data elements [not only what is in CMakeLists.txt, but store extra info]
  ○ Dependencies description (github links, semantic versioning)
    ■ url: "ssh://[email protected]/Greeter.git",
    ■ versions: Version(1,0,0)..<Version(2,0,0)
Manifest is a “dump” of the status of the build system (BS), where root-get is just a helper for BS
Manifest - Sample
Usage scenarios and benefits of manifest files: LLVM/Clang
LLVM uses CMake and an LLVMBuild utility that organizes LLVM in a hierarchy of manifest files of components to be used by the build system; llvm-build is responsible for loading, verifying, and manipulating the project's component data.
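The half-open version range quoted in the manifest excerpt above, `Version(1,0,0)..<Version(2,0,0)`, can be illustrated with a minimal Python sketch. This is not root-get code: the tuple representation of a version and the `in_range` helper are assumptions made purely for illustration.

```python
from typing import List, Tuple

# Hypothetical representation: a semantic version as (major, minor, patch).
Version = Tuple[int, int, int]


def in_range(version: Version, lower: Version, upper: Version) -> bool:
    """Return True if lower <= version < upper (half-open, like `..<`)."""
    # Python compares tuples lexicographically, which matches the
    # major/minor/patch ordering of semantic versions.
    return lower <= version < upper


candidates: List[Version] = [(0, 9, 5), (1, 0, 0), (1, 4, 2), (2, 0, 0)]
accepted = [v for v in candidates if in_range(v, (1, 0, 0), (2, 0, 0))]
print(accepted)
```

The upper bound is excluded, so any 1.x.y release satisfies the constraint while 2.0.0 does not.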

Meeting the Yocto Project

In this chapter, we will be introduced to the Yocto Project. The main concepts of the project, which are constantly used throughout the book, are discussed here. We will discuss the Yocto Project history, OpenEmbedded, Poky, BitBake, and Metadata in brief, so fasten your seat belt and welcome aboard! What is the Yocto Project? The Yocto Project is defined as follows: "The Yocto Project provides open source, high-quality infrastructure and tools to help developers create their own custom Linux distributions for any hardware architecture, across multiple market segments. The Yocto Project is intended to provide a helpful starting point for developers." The Yocto Project is an open source collaboration project that provides templates, tools, and methods to help us create custom Linux-based systems for embedded products regardless of the hardware architecture. Managed by a Linux Foundation fellow, the project remains independent of its member organizations, which participate in various ways and provide resources to the project. It was founded in 2010 as a collaboration of many hardware manufacturers, open source operating system vendors, and electronics companies in an effort to reduce duplicated work, providing resources and information catering to both new and experienced users. Among these resources is OpenEmbedded-Core, the core system component, provided by the OpenEmbedded project. The Yocto Project is, therefore, a community open source project that aggregates several companies, communities, projects, and tools, gathering people with the same purpose: to build a Linux-based embedded product. All these components are in the same boat, driven by the community's need to work together.

Beginning System Administration Decal

Beginning System Administration DeCal, Week 6, March 03, 2009
Sections: Review, Installing Software, Debian GNU/Linux
Review: So Far... (hopefully)
• History of UNIX
• Design choices
• Terminal, shell, and interaction with UNIX
• Foundation of the Internet
• Using UNIX
• Users and Permissions
• Account management (add/del/disable users)
• File system layout
Review: So Far... (cont.)
• Software
• Downloading and extracting packages (wget, tar, gzip)
• Configuring and compiling (./configure, make, make install)
• Configuration (.conf files, /etc)
Installing Software: Considerations, Package Managers
Software: two options
1. compile from source
2. download and install from binaries
   • download binaries manually
   • use a package management system
How about both? When to choose which?
• Software release cycle
• Security and feature patches
“What’s the single biggest advancement Linux has brought to the industry? It’s an interesting question, and one that in my opinion has a very simple answer: Package management - or, more specifically, the ability to install and upgrade software over the network in a seamlessly integrated fashion - along with the distributed development model package management enabled.” Ian Murdock (founder of Debian), http://ianmurdock.com/2007/07/21/how-package-management-changed-everything/

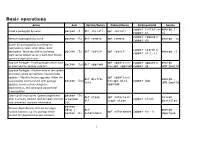

Basic Operations

Basic operations

| Action | Arch | Red Hat/Fedora | Debian/Ubuntu | SLES/openSUSE | Gentoo |
|---|---|---|---|---|---|
| Install a package(s) by name | pacman -S | dnf install | apt install | zypper install or zypper in | emerge [-a] |
| Remove a package(s) by name | pacman -Rs | dnf remove | apt remove | zypper remove or zypper rm | emerge -vc |
| Search for package(s) by searching the expression in name, description, short description. What exact fields are being searched by default varies in each tool. Mostly options bring tools on par. | pacman -Ss | dnf search | apt search | zypper search or zypper se [-s] | emerge -S |
| Upgrade Packages - Install packages which have an older version already installed | pacman -Syu | dnf upgrade | apt update and then apt upgrade | zypper update or zypper up | emerge -uDN @world |
| Upgrade Packages - Another form of the update command, which can perform more complex updates -- like distribution upgrades. When the usual update command will omit package updates, which include changes in dependencies, this command can perform those updates. | pacman -Syu | dnf distro-sync | apt update and then apt dist-upgrade | zypper dup | emerge -uDN @world |
| Clean up all local caches. Options might limit what is actually cleaned. autoclean removes only unneeded, obsolete information. | pacman -Sc or pacman -Scc | dnf clean all | apt autoclean or apt clean | zypper clean | eclean distfiles |
| Remove dependencies that are no longer needed, because e.g. the package which needed the dependencies was removed. | pacman -Qdtq \| pacman -Rs - | dnf autoremove | apt autoremove | zypper rm -u | emerge --depclean |
| Remove packages no longer included in any repositories. | pacman -Qmq \| pacman - | dnf repoquery -- | aptitude purge | | |

Spack Package Repositories

Managing HPC Software Complexity with Spack
Full-day Tutorial, 1st Workshop on NSF and DOE High Performance Computing Tools, July 10, 2019, Eugene, Oregon
The most recent version of these slides can be found at: https://spack.readthedocs.io/en/latest/tutorial.html
LLNL-PRES-806064. This work was performed under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory under contract DE-AC52-07NA27344. Lawrence Livermore National Security, LLC. github.com/spack/spack
Tutorial Materials
Download the latest version of slides and handouts at: bit.ly/spack-tutorial. Slides and hands-on scripts are in the “Tutorial” Section of the docs.
§ Spack GitHub repository: http://github.com/spack/spack
§ Spack Documentation: http://spack.readthedocs.io
Tweet at us! @spackpm
Tutorial Presenters: Todd Gamblin, Greg Becker
Software complexity in HPC is growing
[Figure: dependency graph of Nalu (Generalized Unstructured Massively Parallel Low Mach Flow); nodes: glm, suite-sparse, yaml-cpp, metis, cmake, ncurses, parmetis, pkgconf, nalu, hwloc, libxml2, xz, bzip2, openssl, boost, trilinos, superlu, openblas, netlib-scalapack, mumps, openmpi, zlib, netcdf, hdf5, matio, m4, libsigsegv, parallel-netcdf]
[Figure: a larger dependency graph, truncated in the source; nodes: adol-c, automake, autoconf, perl, glm, suite-sparse, yaml-cpp, metis, cmake, ncurses, gmp, libtool, parmetis, pkgconf, m4, libsigsegv, nalu, hwloc, libxml2, xz, bzip2, openssl, p4est, boost, trilinos, superlu, openblas, netlib-scalapack]

Spack a flexible Package Manager for HPC

Spack: A flexible package manager for HPC
Overview & Introduction to Basic Spack Concepts
Todd Gamblin, Center for Applied Scientific Computing
LLNL-PRES-806064. This work was performed under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory under contract DE-AC52-07NA27344. Lawrence Livermore National Security, LLC. github.com/LLNL/spack
Spack is a flexible package manager for HPC
§ How to install Spack:
  $ git clone https://github.com/scalability-llnl/spack.git
§ How to install a package:
  $ cd spack/bin
  $ ./spack install hdf5
§ HDF5 and its dependencies are installed within the Spack directory.
§ No additional setup required!
Get Spack! http://github.com/LLNL/spack
What is the production environment for HPC?
§ Someone’s home directory?
§ LLNL? LANL? Sandia? ANL? LBL? TACC?
  - Environments at large-scale sites are very different.
§ Which MPI implementation?
§ Which compiler?
§ Which dependencies?
§ Which versions of dependencies?
  - Many applications require specific dependency versions.
Real answer: there isn’t a single production environment or a standard way to build.
HPC software is becoming increasingly complex
§ Not much standardization in HPC
  - every machine/application has a different software stack
§ Sites share unique hardware among teams with very different requirements
  - Users want to experiment with many exotic architectures, compilers, MPI versions
  - All of this is necessary to get the best performance
§ Example environment for some LLNL codes: 48 third party packages x 3 MPI versions (mvapich, mvapich2, OpenMPI) x 3-ish platforms (Linux, BlueGene, Cray) x up to 7 compilers (Intel, GCC, XLC, Clang, PGI, Cray, Pathscale) x 2-3 versions of each package = ~7,500 combinations
We want an easy way to quickly sample the space, to build configurations on demand!
Most existing tools do not support combinatorial versioning
§ Traditional binary package managers
  - RPM, yum, APT, yast, etc.
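The "~7,500 combinations" figure in the LLNL example above can be checked with a few lines of arithmetic. The sketch below takes the slide's numbers at face value ("3-ish" platforms read as 3, "up to 7" compilers read as 7); the variable names are illustrative only.

```python
# Factors quoted in the example environment (upper bounds where the slide hedges).
packages = 48
mpi_versions = 3
platforms = 3
compilers = 7

# Combinations before accounting for multiple versions of each package.
base = packages * mpi_versions * platforms * compilers

# "2-3 versions of each package" gives a range; 2.5 is its midpoint.
low = base * 2
high = base * 3
midpoint = base * 5 // 2

print(base, low, high, midpoint)
```

With these inputs the base count is 3,024, the range is 6,048 to 9,072, and the midpoint of 7,560 is consistent with the slide's rounded estimate.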

Portage - the Current Implementation the Future Conclusion

Portage - A modern package manager
Marius Mauch <[email protected]>, FOSDEM 2005
Outline
• Package Managing in General
• Portage - The Current Implementation
  • Features
  • The Portage Tree
• The Future
  • Short Term
  • Long Term
• Conclusion
What is a Package?
• Simplest case: tarball of files
• More general:
  • Payload: actual source or binary files
  • Metadata: descriptive information (homepage, dependencies, ...)
  • Instructions: build and install scripts
• Different kinds of packages: binary vs. source (vs. installed)
• Common formats: Tarball, RPM
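The payload / metadata / instructions breakdown of a package described above can be modelled as a small data structure. The following Python sketch is not Portage code; the `Package` class, its field names, and the example values (including the example.org URL) are hypothetical and serve only to show how the "simplest case" differs from the general one.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Package:
    """Hypothetical model of a package as described in the slides."""
    payload: List[str]                                      # source or binary files
    metadata: Dict[str, str] = field(default_factory=dict)  # homepage, dependencies, ...
    instructions: List[str] = field(default_factory=list)   # build and install steps

    def is_plain_tarball(self) -> bool:
        # Simplest case: files only, no metadata and no build instructions.
        return not self.metadata and not self.instructions


tarball = Package(payload=["bin/hello", "share/doc/README"])
full = Package(
    payload=["src/hello.c"],
    metadata={"homepage": "https://example.org/hello", "dependencies": "libc"},
    instructions=["make", "make install"],
)
print(tarball.is_plain_tarball(), full.is_plain_tarball())
```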

Install Your Scientific Software Stack Easily with Spack

Install your scientific software stack easily with Spack
Les mardis du développement technologique, Florent Pruvost (SED)
Outline
1. Context
2. Features overview
3. In practice
4. Some feedback
1 Context
A scientific software stack
• modular, several languages, different build systems
[Figure: layers of the stack: Application, Linear Algebra, Optimized Kernels, Graph Processing, Runtime Systems, Paradigms, Miscellaneous]
• difficulty to be an expert in all the chain
An example: Aerosol
• a finite elements library developed by the Inria teams Cagire and Cardamom
[Figure: Aerosol dependencies: PaMPA, PaStiX, HDF5, XML2, PT-SCOTCH, StarPU, BLAS/LAPACK, MPI, CUDA]
Constraints
• R&D ⇒ develop prototypes
  - test many different builds
• computing/data intensive application ⇒ HPC environment
  - different machines, OSes, environments
  - remote connection, not administrator
• performances ⇒ highly tuned installation
  - well chosen components
  - specific build options
• reproducibility
  - control the environment
  - characterize what influences the build
Wish list
• a simple process to install a default version
• a flexible way to choose build variants
  - choose compiler, software versions
  - enable components, e.g. MPI: yes/no

Managing HPC Software Complexity with Spack

Managing HPC Software Complexity with Spack
HPC Knowledge Meeting ‘17, June 16, 2017, San Sebastián, Spain
LLNL-PRES-806064. This work was performed under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory under contract DE-AC52-07NA27344. Lawrence Livermore National Security, LLC. http://github.com/LLNL/spack
Tutorial Materials
Download the latest version of slides and handouts at: http://spack.readthedocs.io. Slides and hands-on scripts are in the “Tutorial” Section of the docs.
Other Resources:
§ Spack GitHub repository: http://github.com/LLNL/spack
§ Spack Documentation: http://spack.readthedocs.io
Who is this Tutorial for?
People who want to use or distribute software for HPC!
1. End Users of HPC Software: install and run HPC applications and tools
2. HPC Application Teams: manage third-party dependency libraries
3. Package Developers: people who want to package their own software for distribution
4. User support teams at HPC Centers: people who deploy software for users at large HPC sites
HPC Software Complexity Prevents Reuse & Reduces Productivity
§ Not much standardization in HPC: every machine/app has a different software stack
  - This is done to get the best performance
§ HPC frequently trades reuse and usability for performance
  - Reusing a piece of software frequently requires you to port it to many new platforms
§ Example environment for some LLNL codes: 48 third party packages x 3 MPI versions (mvapich, mvapich2, OpenMPI) x 3-ish platforms (Linux, BlueGene, Cray) x up to 7 compilers (Intel, GCC, XLC, Clang, PGI, Cray, Pathscale) x 2-3 versions of each package = ~7,500 combinations
Spack lowers barriers to software reuse by automating these builds!
What is the “production” environment for HPC?
§ Someone’s home directory?
§ LLNL? LANL? Sandia? ANL? LBL? TACC?
  - Environments at large-scale sites are very different.