Chapter 4 Vector Norms and Matrix Norms

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Math 4571 (Advanced Linear Algebra) Lecture #27

Math 4571 (Advanced Linear Algebra) Lecture #27. Applications of Diagonalization and the Jordan Canonical Form (Part 1): Spectral Mapping and the Cayley-Hamilton Theorem; Transition Matrices and Markov Chains; The Spectral Theorem for Hermitian Operators. This material represents §4.4.1 + §4.4.4 + §4.4.5 from the course notes.

Overview. In this lecture and the next, we discuss a variety of applications of diagonalization and the Jordan canonical form. This lecture will discuss three essentially unrelated topics: a proof of the Cayley-Hamilton theorem for general matrices; transition matrices and Markov chains, used for modeling iterated changes in systems over time; and the spectral theorem for Hermitian operators, in which we establish that Hermitian operators (i.e., operators with T* = T) are diagonalizable. In the next lecture, we will discuss another fundamental application: solving systems of linear differential equations.

Cayley-Hamilton, I. First, we establish the Cayley-Hamilton theorem for arbitrary matrices. Theorem (Cayley-Hamilton): If p(x) is the characteristic polynomial of a matrix A, then p(A) is the zero matrix 0. The same result holds for the characteristic polynomial of a linear operator T : V → V on a finite-dimensional vector space.

Cayley-Hamilton, II. Proof: Since the characteristic polynomial of a matrix does not depend on the underlying field of coefficients, we may assume that the characteristic polynomial factors completely over the field (i.e., that all of the eigenvalues of A lie in the field) by replacing the field with its algebraic closure. Then by our results, the Jordan canonical form of A exists.
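The Cayley-Hamilton statement in the excerpt above can be checked by hand in the smallest nontrivial case. The following is a minimal sketch, not taken from the lecture notes: it assumes a 2×2 matrix, for which the characteristic polynomial is p(x) = x² − tr(A)x + det(A), and the helper names `matmul` and `char_poly_at_matrix_2x2` are hypothetical.

```python
def matmul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def char_poly_at_matrix_2x2(a):
    """Evaluate p(A) = A^2 - tr(A) A + det(A) I for a 2x2 matrix A."""
    trace = a[0][0] + a[1][1]
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    a2 = matmul(a, a)
    identity = [[1, 0], [0, 1]]
    return [[a2[i][j] - trace * a[i][j] + det * identity[i][j]
             for j in range(2)] for i in range(2)]


A = [[2, 1], [-3, 5]]  # arbitrary integer example (an assumption, not from the notes)
print(char_poly_at_matrix_2x2(A))  # prints [[0, 0], [0, 0]]
```

Integer entries keep the arithmetic exact, so the zero matrix appears without any rounding tolerance. This only illustrates the theorem for one size; it is not a substitute for the Jordan-form proof the lecture outlines.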

Lecture Notes: Qubit Representations and Rotations

Phys 711 Topics in Particles & Fields | Spring 2013 | Lecture 1 | v0.3. Lecture notes: Qubit representations and rotations. Jeffrey Yepez, Department of Physics and Astronomy, University of Hawai`i at Manoa, Watanabe Hall, 2505 Correa Road, Honolulu, Hawai`i 96822. E-mail: [email protected] www.phys.hawaii.edu/~yepez (Dated: January 9, 2013)

Contents: I. What is a qubit? II. Time-dependent qubits states. III. Qubit representations (A. Hilbert space representation; B. SU(2) and O(3) representations). IV. Rotation by similarity transformation. V. Rotation transformation in exponential form. VI. Composition of qubit rotations (A. Special case of equal angles). VII. Example composite rotation. References.

I. WHAT IS A QUBIT?

Let us begin by introducing some notation. The 1 state (called "minus" on the Bloch sphere) is $|1\rangle = \begin{pmatrix} 0 \\ 1 \end{pmatrix}$; the alternate symbol is $|-\rangle$. The 0 state (called "plus" on the Bloch sphere) is $|0\rangle = \begin{pmatrix} 1 \\ 0 \end{pmatrix}$; the alternate symbol is $|+\rangle$.

... mathematical object (an abstraction of a two-state quantum object) with a "one" state and a "zero" state:

$$|q\rangle = \alpha|0\rangle + \beta|1\rangle = \alpha \begin{pmatrix} 1 \\ 0 \end{pmatrix} + \beta \begin{pmatrix} 0 \\ 1 \end{pmatrix}, \tag{1}$$

where $\alpha$ and $\beta$ are complex numbers. These complex numbers are called amplitudes. The basis states are orthonormal:

$$\langle 0|0\rangle = \langle 1|1\rangle = 1, \tag{2a}$$
$$\langle 0|1\rangle = \langle 1|0\rangle = 0. \tag{2b}$$

In general, the qubit $|q\rangle$ in (1) is said to be in a superposition state of the two logical basis states $|0\rangle$ and $|1\rangle$. If $\alpha$ and $\beta$ are complex, it would seem that a qubit should have four free real-valued parameters (two magnitudes and two phases):

$$|q\rangle = \begin{pmatrix} \alpha \\ \beta \end{pmatrix} = \begin{pmatrix} \phi_0 e^{i\theta_0} \\ \phi_1 e^{i\theta_1} \end{pmatrix}. \tag{3}$$

Yet, for a qubit to contain only one classical bit of information, the qubit need only be unimodular (normalized to unity):

$$\alpha^*\alpha + \beta^*\beta = 1. \tag{4}$$

Hence it lives on the complex unit circle, depicted on the top of Figure 1.

Chapter 8: Methods Related to the Normal Equations

There are a number of techniques for converting a non-symmetric linear system into a symmetric one. One such technique solves the equivalent linear system $A^T A x = A^T b$, called the normal equations. Often, this approach is avoided in practice because the coefficient matrix $A^T A$ is much worse conditioned than $A$. However, the normal equations approach may be adequate in some situations. Indeed, there are even applications in which it is preferred to the usual Krylov subspace techniques. This chapter covers iterative methods which are either directly or implicitly related to the normal equations.

In order to solve the linear system $Ax = b$ when $A$ is nonsymmetric, we can solve the equivalent system

$$A^T A x = A^T b \tag{8.1}$$

which is Symmetric Positive Definite. This system is known as the system of the normal equations associated with the least-squares problem,

$$\text{minimize } \|b - Ax\|_2. \tag{8.2}$$

Note that (8.1) is typically used to solve the least-squares problem (8.2) for over-determined systems, i.e., when $A$ is a rectangular matrix of size $n \times m$, $m < n$.
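To make the link between the normal equations and the least-squares problem concrete, here is a minimal sketch for the smallest over-determined case, an n × 2 matrix. It is an illustration under stated assumptions rather than a method from the excerpt: the function name `normal_equations_2col` and the sample data are hypothetical, and the 2 × 2 system is solved directly by Cramer's rule instead of by an iterative method.

```python
from fractions import Fraction


def normal_equations_2col(a, b):
    """Solve min ||b - A x||_2 for an n x 2 integer matrix A via A^T A x = A^T b."""
    # Form the 2 x 2 Gram matrix A^T A and the right-hand side A^T b.
    g00 = sum(r[0] * r[0] for r in a)
    g01 = sum(r[0] * r[1] for r in a)
    g11 = sum(r[1] * r[1] for r in a)
    r0 = sum(r[0] * bi for r, bi in zip(a, b))
    r1 = sum(r[1] * bi for r, bi in zip(a, b))
    det = g00 * g11 - g01 * g01  # zero exactly when the columns of A are dependent
    if det == 0:
        raise ValueError("A^T A is singular: columns of A are linearly dependent")
    # Cramer's rule on the symmetric 2 x 2 system, kept exact with Fraction.
    x0 = Fraction(r0 * g11 - g01 * r1, det)
    x1 = Fraction(g00 * r1 - g01 * r0, det)
    return x0, x1


# Hypothetical data: fit y = c0 + c1 * t to the points (0, 1), (1, 2), (2, 4).
A = [[1, 0], [1, 1], [1, 2]]
b = [1, 2, 4]
c0, c1 = normal_equations_2col(A, b)
print(c0, c1)  # prints 5/6 3/2
```

The residual b − Ax of the returned solution is orthogonal to both columns of A, which is exactly what (8.1) expresses. For large or ill-conditioned problems, forming A^T A explicitly is the step the excerpt warns about, since it worsens the conditioning.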

Surjective Isometries on Grassmann Spaces

SURJECTIVE ISOMETRIES ON GRASSMANN SPACES. FERNANDA BOTELHO, JAMES JAMISON, AND LAJOS MOLNÁR

Abstract. Let H be a complex Hilbert space, n a given positive integer and let P_n(H) be the set of all projections on H with rank n. Under the condition dim H ≥ 4n, we describe the surjective isometries of P_n(H) with respect to the gap metric (the metric induced by the operator norm).

1. Introduction. The study of isometries of function spaces or operator algebras is an important research area in functional analysis with history dating back to the 1930's. The most classical results in the area are the Banach-Stone theorem describing the surjective linear isometries between Banach spaces of complex-valued continuous functions on compact Hausdorff spaces and its noncommutative extension for general C*-algebras due to Kadison. This has the surprising and remarkable consequence that the surjective linear isometries between C*-algebras are closely related to algebra isomorphisms. More precisely, every such surjective linear isometry is a Jordan *-isomorphism followed by multiplication by a fixed unitary element. We point out that in the aforementioned fundamental results the underlying structures are algebras and the isometries are assumed to be linear. However, descriptions of isometries of non-linear structures also play important roles in several areas. We only mention one example which is closely related with the subject matter of this paper. This is the famous Wigner's theorem that describes the structure of quantum mechanical symmetry transformations and plays a fundamental role in the probabilistic aspects of quantum theory.

MATH 2370, Practice Problems

MATH 2370, Practice Problems. Kiumars Kaveh

Problem: Prove that an $n \times n$ complex matrix $A$ is diagonalizable if and only if there is a basis consisting of eigenvectors of $A$.

Problem: Let $A : V \to W$ be a one-to-one linear map between two finite dimensional vector spaces $V$ and $W$. Show that the dual map $A' : W' \to V'$ is surjective.

Problem: Determine if the curve $\{(x, y) \in \mathbb{R}^2 \mid x^2 + y^2 + xy = 10\}$ is an ellipse or hyperbola or union of two lines.

Problem: Show that if a nilpotent matrix is diagonalizable then it is the zero matrix.

Problem: Let $P$ be a permutation matrix. Show that $P$ is diagonalizable. Show that if $\lambda$ is an eigenvalue of $P$ then for some integer $m > 0$ we have $\lambda^m = 1$ (i.e. $\lambda$ is an $m$-th root of unity). Hint: Note that $P^m = I$ for some integer $m > 0$.

Problem: Show that if $\lambda$ is an eigenvalue of an orthogonal matrix $A$ then $|\lambda| = 1$.

Problem: Take a vector $v \in \mathbb{R}^n$ and let $H$ be the hyperplane orthogonal to $v$. Let $R : \mathbb{R}^n \to \mathbb{R}^n$ be the reflection with respect to the hyperplane $H$. Prove that $R$ is a diagonalizable linear map.

Problem: Prove that if $\lambda_1, \lambda_2$ are distinct eigenvalues of a complex matrix $A$ then the intersection of the generalized eigenspaces $E_{\lambda_1}$ and $E_{\lambda_2}$ is zero (this is part of the Spectral Theorem).

Problem: Let $H = (h_{ij})$ be a $2 \times 2$ Hermitian matrix. Use the Minimax Principle to show that if $\lambda_1 \le \lambda_2$ are the eigenvalues of $H$ then $\lambda_1 \le h_{11} \le \lambda_2$.

Parametrization of 3×3 Unitary Matrices Based on Polarization

Parametrization of 3×3 unitary matrices based on polarization algebra (May, 2018). José J. Gil, Universidad de Zaragoza, Pedro Cerbuna 12, 50009 Zaragoza, Spain. [email protected]

Abstract. A parametrization of 3×3 unitary matrices is presented. This mathematical approach is inspired by polarization algebra and is formulated through the identification of a set of three orthonormal three-dimensional Jones vectors representing respective pure polarization states. This approach leads to the representation of a 3×3 unitary matrix as an orthogonal similarity transformation of a particular type of unitary matrix that depends on six independent parameters, while the remaining three parameters correspond to the orthogonal matrix of the said transformation. The results obtained are applied to determine the structure of the second component of the characteristic decomposition of a 3×3 positive semidefinite Hermitian matrix.

1 Introduction. In many branches of Mathematics, Physics and Engineering, 3×3 unitary matrices appear as key elements for solving a great variety of problems, and therefore, appropriate parameterizations in terms of minimum sets of nine independent parameters are required for the corresponding mathematical treatment. In this way, some interesting parametrizations have been obtained [1-8]. In particular, the Cabibbo-Kobayashi-Maskawa matrix (CKM matrix) [6,7], which represents information on the strength of flavour-changing weak decays and depends on four parameters, constitutes the core of a family of parametrizations of a 3×3 unitary matrix [8]. In this paper, a new general parametrization is presented, which is inspired by polarization algebra [9] through the structure of orthonormal sets of three-dimensional Jones vectors [10].

Functional Analysis 1 Winter Semester 2013-14

Functional analysis 1, Winter semester 2013-14

1. Topological vector spaces. Basic notions.

Notation. (a) The symbol $\mathbb{F}$ stands for the set of all reals or for the set of all complex numbers. (b) Let $(X, \tau)$ be a topological space and $x \in X$. An open set $G$ containing $x$ is called a neighborhood of $x$. We denote $\tau(x) = \{G \in \tau;\ x \in G\}$.

Definition. Suppose that $\tau$ is a topology on a vector space $X$ over $\mathbb{F}$ such that
• $(X, \tau)$ is $T_1$, i.e., $\{x\}$ is a closed set for every $x \in X$, and
• the vector space operations are continuous with respect to $\tau$, i.e., $+ : X \times X \to X$ and $\cdot : \mathbb{F} \times X \to X$ are continuous.
Under these conditions, $\tau$ is said to be a vector topology on $X$ and $(X, +, \cdot, \tau)$ is a topological vector space (TVS).

Remark. Let $X$ be a TVS. (a) For every $a \in X$ the mapping $x \mapsto x + a$ is a homeomorphism of $X$ onto $X$. (b) For every $\lambda \in \mathbb{F} \setminus \{0\}$ the mapping $x \mapsto \lambda x$ is a homeomorphism of $X$ onto $X$.

Definition. Let $X$ be a vector space over $\mathbb{F}$. We say that $A \subset X$ is
• balanced if for every $\alpha \in \mathbb{F}$, $|\alpha| \le 1$, we have $\alpha A \subset A$,
• absorbing if for every $x \in X$ there exists $t \in \mathbb{R}$, $t > 0$, such that $x \in tA$,
• symmetric if $A = -A$.

Definition. Let $X$ be a TVS and $A \subset X$. We say that $A$ is bounded if for every $V \in \tau(0)$ there exists $s > 0$ such that for every $t > s$ we have $A \subset tV$.

Hypercyclic Subspaces in Fréchet Spaces

PROCEEDINGS OF THE AMERICAN MATHEMATICAL SOCIETY, Volume 134, Number 7, Pages 1955–1961, S 0002-9939(05)08242-0. Article electronically published on December 16, 2005. HYPERCYCLIC SUBSPACES IN FRÉCHET SPACES. L. BERNAL-GONZÁLEZ (Communicated by N. Tomczak-Jaegermann). Dedicated to the memory of Professor Miguel de Guzmán, who died in April 2004.

Abstract. In this note, we show that every infinite-dimensional separable Fréchet space admitting a continuous norm supports an operator for which there is an infinite-dimensional closed subspace consisting, except for zero, of hypercyclic vectors. The family of such operators is even dense in the space of bounded operators when endowed with the strong operator topology. This completes the earlier work of several authors.

1. Introduction and notation. Throughout this paper, the following standard notation will be used: N is the set of positive integers, R is the real line, and C is the complex plane. The symbols (m_k), (n_k) will stand for strictly increasing sequences in N. If X, Y are (Hausdorff) topological vector spaces (TVSs) over the same field K = R or C, then L(X, Y) will denote the space of continuous linear mappings from X into Y, while L(X) is the class of operators on X, that is, L(X) = L(X, X). The strong operator topology (SOT) in L(X) is the one where the convergence is defined as pointwise convergence at every x ∈ X. A sequence (T_n) ⊂ L(X, Y) is said to be universal or hypercyclic provided there exists some vector x_0 ∈ X, called hypercyclic for the sequence (T_n), such that its orbit {T_n x_0 : n ∈ N} under (T_n) is dense in Y.

The Column and Row Hilbert Operator Spaces

The Column and Row Hilbert Operator Spaces. Roy M. Araiza, Department of Mathematics, Purdue University

Abstract. Given a Hilbert space $H$ we present the construction and some properties of the column and row Hilbert operator spaces $H_c$ and $H_r$, respectively. The main proof of the survey is showing that $CB(H_c, K_c)$ is completely isometric to $B(H, K)$; in other words, the space of completely bounded maps between the corresponding column Hilbert operator spaces is completely isometric to the space of bounded operators between the Hilbert spaces. A similar theorem for the row Hilbert operator spaces follows via duality of the operator spaces. In particular there exists a natural duality between the column and row Hilbert operator spaces which we also show. The presentation and proofs are due to Effros and Ruan [1].

1 The Column Hilbert Operator Space. Given a Hilbert space $H$, we define the column isometry $C : H \to B(\mathbb{C}, H)$ given by $\xi \mapsto C(\xi)$, where $C(\xi)\alpha := \alpha\xi$ for $\alpha \in \mathbb{C}$. Then given any $n \in \mathbb{N}$, we define the $n$th matrix norm on $M_n(H)$ by

$$\|\xi\|_{c,n} = \left\|C^{(n)}(\xi)\right\|, \qquad \xi \in M_n(H).$$

These are indeed operator space matrix norms by Ruan's Representation theorem and thus we define the column Hilbert operator space as

$$H_c = \left(H, \{\|\cdot\|_{c,n}\}_{n \in \mathbb{N}}\right).$$

It follows that given $\xi \in M_n(H)$ we have that the adjoint operator of $C(\xi)$ is given by

$$C(\xi)^* : H \to \mathbb{C}, \qquad \zeta \mapsto (\zeta \mid \xi).$$

Suppose $C(\xi)^*(\zeta) = d$ and $c \in \mathbb{C}$. Then we see that

$$c\,\overline{d} = (c \mid C(\xi)^*(\zeta)) = (c\xi \mid \zeta) = c\,\overline{(\zeta \mid \xi)} = (c \mid (\zeta \mid \xi)).$$

We are also able to compute the matrix norms on rectangular matrices.

Lecture 2: Spectral Theorems

Lecture 2: Spectral Theorems. This lecture introduces normal matrices. The spectral theorem will inform us that normal matrices are exactly the unitarily diagonalizable matrices. As a consequence, we will deduce the classical spectral theorem for Hermitian matrices. The case of commuting families of matrices will also be studied. All of this corresponds to section 2.5 of the textbook.

1 Normal matrices

Definition 1. A matrix $A \in M_n$ is called a normal matrix if $AA^* = A^*A$.

Observation: The set of normal matrices includes all the Hermitian matrices ($A^* = A$), the skew-Hermitian matrices ($A^* = -A$), and the unitary matrices ($AA^* = A^*A = I$). It also contains other matrices, e.g. $\begin{bmatrix} 1 & -1 \\ 1 & 1 \end{bmatrix}$, but not all matrices, e.g. $\begin{bmatrix} 1 & 1 \\ 0 & 1 \end{bmatrix}$.

Here is an alternate characterization of normal matrices.

Theorem 2. A matrix $A \in M_n$ is normal iff $\|Ax\|_2 = \|A^*x\|_2$ for all $x \in \mathbb{C}^n$.

Proof. If $A$ is normal, then for any $x \in \mathbb{C}^n$,

$$\|Ax\|_2^2 = \langle Ax, Ax\rangle = \langle x, A^*Ax\rangle = \langle x, AA^*x\rangle = \langle A^*x, A^*x\rangle = \|A^*x\|_2^2.$$

Conversely, suppose that $\|Ax\|_2 = \|A^*x\|_2$ for all $x \in \mathbb{C}^n$. For any $x, y \in \mathbb{C}^n$ and for $\lambda \in \mathbb{C}$ with $|\lambda| = 1$ chosen so that $\Re(\lambda\langle x, (A^*A - AA^*)y\rangle) = |\langle x, (A^*A - AA^*)y\rangle|$, we expand both sides of $\|A(\lambda x + y)\|_2^2 = \|A^*(\lambda x + y)\|_2^2$ to obtain

$$\|Ax\|_2^2 + \|Ay\|_2^2 + 2\Re(\lambda\langle Ax, Ay\rangle) = \|A^*x\|_2^2 + \|A^*y\|_2^2 + 2\Re(\lambda\langle A^*x, A^*y\rangle).$$

Using the facts that $\|Ax\|_2^2 = \|A^*x\|_2^2$ and $\|Ay\|_2^2 = \|A^*y\|_2^2$, we derive

$$0 = \Re(\lambda\langle Ax, Ay\rangle - \lambda\langle A^*x, A^*y\rangle) = \Re(\lambda\langle x, A^*Ay\rangle - \lambda\langle x, AA^*y\rangle) = \Re(\lambda\langle x, (A^*A - AA^*)y\rangle) = |\langle x, (A^*A - AA^*)y\rangle|.$$

Since this is true for any $x \in \mathbb{C}^n$, we deduce $(A^*A - AA^*)y = 0$, which holds for any $y \in \mathbb{C}^n$, meaning that $A^*A - AA^* = 0$, as desired.
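The two example matrices in the observation above can be checked directly against Definition 1. This is a small sketch, not part of the lecture: the helper names `conj_transpose`, `matmul` and `is_normal` are hypothetical, and the exact equality test is only appropriate because the entries are small integers (a tolerance would be needed for general floating-point data).

```python
def conj_transpose(a):
    """Return the conjugate transpose A* of a square matrix given as rows."""
    n = len(a)
    return [[complex(a[j][i]).conjugate() for j in range(n)] for i in range(n)]


def matmul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def is_normal(a):
    """Check A A* == A* A exactly (safe for small integer entries only)."""
    a_star = conj_transpose(a)
    return matmul(a, a_star) == matmul(a_star, a)


print(is_normal([[1, -1], [1, 1]]))  # prints True
print(is_normal([[1, 1], [0, 1]]))   # prints False
```

For the first matrix both products equal 2I, while for the second A A* = [[2, 1], [1, 1]] and A* A = [[1, 1], [1, 2]], which differ.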

1 Lecture 09

Notes for Functional Analysis. Wang Zuoqin (typed by Xiyu Zhai), September 29, 2015

1 Lecture 09

1.1 Equicontinuity. First let's recall the conception of "equicontinuity" for family of functions that we learned in classical analysis: A family of continuous functions defined on a region $\Omega$, $\Lambda = \{f_\alpha\} \subset C(\Omega)$, is called an equicontinuous family if $\forall \varepsilon > 0$, $\exists \delta > 0$ such that for any $x_1, x_2 \in \Omega$ with $|x_1 - x_2| < \delta$, and for any $f_\alpha \in \Lambda$, we have $|f_\alpha(x_1) - f_\alpha(x_2)| < \varepsilon$. This conception of equicontinuity can be easily generalized to maps between topological vector spaces. For simplicity we only consider linear maps between topological vector spaces, in which case the continuity (and in fact the uniform continuity) at an arbitrary point is reduced to the continuity at 0.

Definition 1.1. Let $X, Y$ be topological vector spaces, and $\Lambda$ be a family of continuous linear operators. We say $\Lambda$ is an equicontinuous family if for any neighborhood $V$ of 0 in $Y$, there is a neighborhood $U$ of 0 in $X$, such that $L(U) \subset V$ for all $L \in \Lambda$.

Remark 1.2. Equivalently, this means if $x_1, x_2 \in X$ and $x_1 - x_2 \in U$, then $L(x_1) - L(x_2) \in V$ for all $L \in \Lambda$.

Remark 1.3. If $\Lambda = \{L\}$ contains only one continuous linear operator, then it is always equicontinuous.

If $\Lambda$ is an equicontinuous family, then each $L \in \Lambda$ is continuous and thus bounded. In fact, this boundedness is uniform:

Proposition 1.4. Let $X, Y$ be topological vector spaces, and $\Lambda$ be an equicontinuous family of linear operators from $X$ to $Y$.

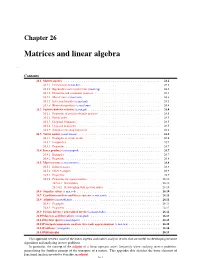

Matrices and Linear Algebra

Chapter 26: Matrices and linear algebra

Contents
26.1 Matrix algebra
  26.1.1 Determinant
  26.1.2 Eigenvalues and eigenvectors
  26.1.3 Hermitian and symmetric matrices
  26.1.4 Matrix trace
  26.1.5 Inversion formulas
  26.1.6 Kronecker products
26.2 Positive-definite matrices
  26.2.1 Properties of positive-definite matrices
  26.2.2 Partial order
  26.2.3 Diagonal dominance
  26.2.4 Diagonal majorizers
  26.2.5 Simultaneous diagonalization
26.3 Vector norms
  26.3.1 Examples of vector norms
  26.3.2 Inequalities
  26.3.3 Properties
26.4 Inner products
  26.4.1 Examples
  26.4.2 Properties
26.5 Matrix norms
  26.5.1