Photography: AccessScience from McGraw-Hill Education

Recommended publications

Quack Summer 2016

Summer 2016 - Vol. 15, Issue 3. All contents © 2016 E.J. Peiker.
Welcome to the quarterly newsletter from E.J. Peiker, Nature Photographer and www.EJPhoto.com. In this quarterly publication, I share with fellow photographers my photographic experiences, photo equipment reviews, photo and processing tips, and industry news. I also inform subscribers about upcoming workshops and products that I offer. Please feel free to forward this to other photographers and interested parties, but please do so only by forwarding this newsletter in its entirety. All content is copyrighted by E.J. Peiker and may not be reproduced. If you would like to be added to the mailing list, unsubscribe, or access back issues, please visit: www.ejphoto.com/newsletter.htm
Playa de Gueirua - Asturias, Spain (Sony a7R II, 35mm)
Three Kits for Three Types of Photography
One of the most common questions that people ask me is what gear I shoot with, or what gear I recommend taking on different photographic expeditions. While the answer to this is very individual and the right setup varies from person to person, I can tell you what I have chosen for the time being.
Wildlife and Birds: I continue to use the Nikon crop sensor DX bodies for this type of photography, coupled with either the Sigma 150-600mm Sport lens, the Nikon 500mm f/4 VR lens or the Nikon 80-400 lens. The 500mm lens is often coupled with the latest Nikon 1.4x teleconverter to achieve a focal length of 700mm. When combining this with the 1.5x crop of the D7200 or the new D500 camera, the effective reach is sufficient for almost any subject.
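The reach arithmetic in the excerpt above (500mm lens, 1.4x teleconverter, 1.5x crop) can be sketched in a few lines. This is only an illustration: the function name and the idea of reporting a "full-frame equivalent" figure are assumptions added here, not something the newsletter spells out.

```python
def effective_focal_length_mm(lens_mm, teleconverter=1.0, crop_factor=1.0):
    """Return (actual focal length, full-frame-equivalent focal length) in mm.

    Hypothetical helper: the teleconverter multiplies the real focal length,
    while the crop factor only narrows the field of view.
    """
    actual = lens_mm * teleconverter
    equivalent = actual * crop_factor
    return actual, equivalent


# Figures quoted in the excerpt: 500mm lens, 1.4x teleconverter, 1.5x DX crop.
actual, equivalent = effective_focal_length_mm(500, teleconverter=1.4, crop_factor=1.5)
print(f"{actual:.0f} mm actual, {equivalent:.0f} mm full-frame equivalent")
```

With those inputs the actual focal length comes out at the 700mm the author mentions, and the crop factor brings the equivalent field of view to roughly that of a 1050mm lens on a full-frame body.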

Whitepprlow.Qxd (Page 1)

Print Permanence: An Epson White Paper
Overview
How long will a photographic print last? There is no simple answer. But it is important for anyone who cares about their photos to have a base understanding of the factors that affect the longevity of prints to make informed decisions and insure those photographic prints will last an expected time.
This white paper deals with the complex subject of print permanence and how knowledge of industry-accepted comparative print permanence testing can lead to the best decisions about buying or specifying imaging products. Every bit as important, this document will help the reader detect potentially misleading marketing claims about photographic image quality, print permanence and the limitations of universal compatibility.
We at Epson want to help consumers and professionals learn more about how prints are made and how different inks and ...
It is also clear that most photographic prints will have greater longevity than a computer hard drive or magnetic media. Not only can digital storage devices be damaged by magnetic fields, viruses and equipment malfunction, but rapid changes in technology tend to make the devices and their file formats obsolete. In addition, many back-up digital storage systems such as CDs and DVDs incorporate materials that may fade or change in ways that could make their contents unreadable in the future.
From the beginning of the 20th century until the 1950s most photographic prints were in black and white. If properly processed, these fiber based black and white prints had and still have great resistance to fading from light or gas and to water damage. Because of the high inherent stability of fiber based black and white prints, many of these early photographs remain in excellent condition and even now reside in family collections, commercial collections and museums.

JB Reade's Letters to Jabez Hogg

J. B. Reade’s letters to Jabez Hogg – Reade’s last days
R. DEREK WOOD [submitted to Quekett Journal of Microscopy in Feb 2011 but NOT published]
Introducing the Rev. Joseph Bancroft Reade, FRS (1801-1870)
As a vicar of the Church of England, J. B. Reade served his communities at the churches of Stone, Buckinghamshire, from 1839 until 1859, then for four years at Ellesborough, also in Bucks, before becoming rector of Bishopsbourne, near Canterbury, Kent, from 1862 until his death in December 1870. In 1864 he signed ‘The Declaration of Students of the Natural and Physical Sciences’ that “deplore that Natural Science should be looked upon with suspicion ... merely on account of the unadvised manner in which some are placing it in opposition to Holy Writ”. Of course where religion is concerned that Declaration could be interpreted in contradictory ways, but there can be little doubt that J. ...
... Xanthidia. Quite obviously Reade was technically adept with microscope optics and specimen manipulation, using such methods as micro-incineration. In the mid-1830s (when he ran a small school in Peckham, south east of London) he used a method of microscopic illumination, that he characterised as “oblique refracted light” in being set up to stop direct transmitted light entering the objective lens, provided a black background to the specimen, i.e. darkground-illumination. His paper on the technique entitled ‘A New method of Illuminating Microscopic Objects’ was published in 1837 as an appendix in the influential book Micrographia by Goring and Pritchard, and noticed in all books by various authors on the Microscope in the following decades, including Quekett’s Practical Treatise on the Microscope (2nd edition of ...

Photo History Newsletters • Vol

THE AMALGAMATED PHOTO HISTORY NEWSLETTERS • VOL. 2-2 2021
We hope that the Covid pandemic soon passes away so we can get back to normal with regular meetings and events. In the interim here are additional newsletters to keep you reading. Please enjoy. Ken Metcalf of the Graflex Journal has another interesting issue which should entertain you well. Another fine newsletter comes from The Western Canada Photographic Historical Association in British Columbia with some fine reading content.
Permissions granted: Graflex Journal – Ken Metcalf; The Western Canada Photographic Historical Association – Tom Parkinsion
SHARING INFORMATION ABOUT GRAFLEX AND THEIR CAMERAS, ISSUE 3 2020
FEATURES
National Graflex Gets a New Coat by Paul S. Lewis
Camera Group - Roger Beck
Viewing Wild Animals at Night by William V. Ward
Hold It! Part 1 by Ken Metcalf
Graflex Patents by Joel Havens
... some leather that was a good match. Thickness was right, color was good, and the pebble grain was close enough. So, I had them send me a large sheet; 12x17. Having a good supply would allow for some mistakes and assure me that there would be enough length and width to cover the missing panels with one complete piece. The source I used was Cameraleather. I did just check with them to be sure similar material is available. The report is that although the material is available, supply is limited. So, with material and camera in hand, the next step was to get the new cover panels cut out and attached.
Ed: Mr. Lewis is a Graflex Journal subscriber and author ...

Infrared-Photography-Part-1-SM.Pdf

Infrared Photography
John Caplis & Joyce Harman, Harmany in Nature
www.harmanyinnature.com www.savingdarkskies.com
Why do infrared photography?
Infrared photography offers many unique creative choices you can explore in image making:
• Excellent monochrome images
• Sunlit foliage turns white
• Blue skies appear very dark
• False color can be applied for dream like scenes
• Skin tones are ghostly white
• Can be shot in the harsh light in the middle of the day
A human eye can see light from 400nm to 700nm on the electromagnetic spectrum. This range is called ‘visible light’. IR photography uses “near infrared light”, which is in the range of 700nm-1400nm. These wavelengths are longer than visible light and can’t be seen by humans.
How IR filters work
There are two types of infrared filters: ones that block IR light while passing visible light, and ones that block visible light while passing infrared light. The IR blocking filters are used in stock digital cameras to prevent unwanted IR light from reaching the sensor, which is sensitive to near infrared. In infrared photography we want the opposite: to block most or all visible light and only pass infrared light.
Two ways to do IR photography – Stock vs IR-Converted Cameras
Stock camera with screw-on external IR filter:
• Dim or no preview image
• No autofocus
• Exposure metering can be difficult
• High ISOs & long exposures
• Requires tripod
Converted camera with internal IR filter:
• No screw-on filter required
• Normally bright preview image
• Autofocus using live view
• Exposure metering can be difficult
• Low ISOs
• Tripod not always required
Camera conversions
• Life Pixel https://www.lifepixel.com
• Kolari Vision https://kolarivision.com
Using Stock Cameras for IR Photography
Stock digital cameras have a limited range of sensitivity to infrared light.

Inkjet Photo Prints: Here to Stay

Inkjet Photo Prints: Here to Stay
Dr. Nils Miller, Hewlett-Packard Company, June 2004
Executive Summary
A recent report from the Photo Marketing Association states, “2003 was a pivotal year for the industry, with digital cameras outselling traditional cameras for the first time ever… This gap will widen in 2004”… As more customers move to digital cameras, more are also choosing inkjet photo printers thanks to significant advances in image quality and convenience. The increasing numbers of customers who are using digital photography would indicate that they are very satisfied with digital cameras and inkjet photo printing and have indeed answered yes to the question: “Do inkjet photo prints have image quality equal to (or better than) traditional silver-halide photo prints?” One question remains, however, for customers who currently use inkjet or are considering inkjet in the future to print their valuable photos: “Will inkjet photo prints last as long as traditional silver-halide photo prints?”
Estimates of the life of photo prints are based on whether the prints will be displayed or stored. In all likelihood, only a small portion of photo prints are actually displayed and subjected to regular illumination. Displayed prints are among the most treasured, and customers need to feel confident that they can enjoy these images for a lifetime. This paper will address the display permanence of inkjet photo prints including relevant results of HP internal tests and HP’s current line of photo print media and inks.
Inherited Silver-Halide Permanence Standards
The science of predicting the display permanence of silver-halide photo prints is quite mature.

Digital Infrared Photography by Vega Buchbinder

Digital Infrared Photography
By Vega Buchbinder
The advent of digital cameras has made infrared photography relatively easily accessible. No longer does one need to handle and process infrared film in the dark under stringent conditions. In the days of film photography, Kodak’s HIE High Speed Infrared Film was the première infrared film, noted for its extensive range. Kodak stopped making this film in 2007. Today, with a digital camera, an infrared filter, and a tripod, anyone can explore the near infrared spectrum photographically with ease and repeatable results. Using digital infrared (IR) photography one can produce fascinating images that can look surreal, or of an alternate reality. In addition one can use many variations in image rendering, ranging from black and white to false colors, in order to create artistic images.
What is infrared photography?
What makes digital infrared photography possible is that the silicon sensors used in digital cameras can “see” into the infrared range of the electromagnetic spectrum. From short wavelengths to longer wavelengths, the electromagnetic spectrum is made of gamma rays, X-rays, ultraviolet rays, visible light, infrared rays, radar, FM radio, TV, and AM radio. The human eye is sensitive to wavelengths from approximately 400 to 700 nanometers (nm), which is the wavelength range for visible light. The CCD and CMOS sensors in digital cameras can easily “see” further into the infrared region to about 1050 nm, which makes IR photography with a digital camera possible today. Note that this part of the IR range is called the near infrared. Thermal infrared rays are much longer, and are not detectable by the CCD or CMOS sensors in our digital cameras.
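The wavelength bands described in the excerpt above can be turned into a small lookup. This is a rough sketch only: the cut-offs are the approximate figures quoted in the text (400 nm, 700 nm, about 1050 nm), and the function name and band labels are hypothetical.

```python
# Band edges in nanometres, taken from the approximate figures in the excerpt.
VISIBLE_MIN_NM = 400
VISIBLE_MAX_NM = 700
SENSOR_MAX_NM = 1050  # "about 1050 nm" for CCD/CMOS sensors


def classify_wavelength(nm: float) -> str:
    """Label a wavelength using the excerpt's approximate band edges."""
    if nm < VISIBLE_MIN_NM:
        return "shorter than visible light"
    if nm <= VISIBLE_MAX_NM:
        return "visible light"
    if nm <= SENSOR_MAX_NM:
        return "near infrared, within sensor range"
    return "beyond typical sensor range"


for nm in (350, 550, 720, 850, 1200):
    print(f"{nm} nm: {classify_wavelength(nm)}")
```

Real sensors and filters do not have sharp cut-offs, so the hard thresholds here are a simplification for illustration.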

I. Basic Operation (Preparation)

Icon indicators used in this manual: Operation direction, Attention, Lamp blinking
I. BASIC OPERATION (PREPARATION)
Attaching the Camera Strap
1. Use a coin or similar object to slide the clasp in the direction of the arrow.
2. Put the clasp onto the strap lug of the camera with the arrow indication facing out, and slide the lock plate back to the original position.
3. To remove the strap, repeat step 1.
• Adjust the length of the strap with the buckle.
• After fitting the strap and adjusting the length, pull the strap hard to confirm that the strap has securely attached to the camera.
• There is a pocket on the strap so you can store a small accessory.
Loading the Batteries
1. To remove the battery holder, lift the battery holder release knob and turn it in the direction of the arrow.
2. Load six 1.5V AA-size batteries in the battery holder in accordance with the diagram located in the battery holder.
3. To secure the battery holder, turn the battery holder release knob in the direction of the arrow.
• This camera requires battery power for operation. Always use six 1.5V AA-size batteries.
• The ISO film speed and number of exposed frames are unchanged if the batteries are replaced.
• Keep spare batteries on hand when shooting outdoors or while traveling.
• Use of the optional Remote Battery Pack 645 is recommended when the camera is used in extremely low temperatures.

9780240809984 Cs.Pdf

HISTORY AND EVOLUTION OF PHOTOGRAPHY
MARK OSTERMAN, George Eastman House International Museum of Photography and Film
GRANT B. ROMER, George Eastman House International Museum of Photography and Film
Contemporary Thoughts on the History of Photography
GRANT B. ROMER, George Eastman House and International Museum of Photography and Film
All photographers work today with historical perspective. They know that the technology they use has an origin in the distant past. They know photography has progressed and transformed over time, and they believe the current system of photography must be superior to that of the past. They are sure they will witness further progress in photography. These are the lessons of history understood by all, and none need inquire any further in order to photograph. Yet photography has a very rich and complex history, which has hidden within it the answers to the fundamentally difficult questions: “What is photography?” and “What is a photograph?” All true photographers should be able to answer these questions for themselves and for others. To do so, they must make deep inquiry into the history of photography.
Recognition of the importance of history to the understanding of photography is evidenced in the title and content of the very first manual of photography published

Perfection V500 Photo

Epson Perfection V500 Photo
Scanner Parts
[Parts diagram: scanner cover; Scan to PDF, Scan to E-mail, Copy and Start buttons; power switch; document mat; transparency unit; document table; transportation locks; OPTION port; USB interface; DC inlet connector; cover cable (transportation unit)]
Optional Automatic Document Feeder
The Automatic Document Feeder (B12B813391) allows you to automatically load multiple-page documents into your scanner. See the on-screen User’s Guide for instructions on installing and using the optional Automatic Document Feeder (ADF).
• Paper support: Holds up the paper that is loaded in the ADF.
• Spare paper path guide: The paper path guide directs documents smoothly onto the scanner's document table. A spare paper path guide is included. If the surface of the paper path guide gets dirty, you can clean it or replace it with the spare guide.
• ADF Document Mat: Place this mat over a document if you need to scan it from the document table when the ADF is installed.
Scanner Specifications
General
• Scanner type: Flatbed color
• Photoelectric device: Color CCD line sensor
• Effective pixels: 54,400 × 74,880 pixels at 6400 dpi* (Scanning area may be restricted if operating resolution setting is large.)
Electrical
Note: Check the label on the AC adapter or on the back of the scanner for voltage information.
Scanner
• Rated voltage: DC 24 V
• Rated current: 1.3 A
• Power consumption: 16.0 W (17.5 W with ADF) operating; 7.5 (6.5 W with ADF) ready
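Two of the specification figures above can be cross-checked with simple arithmetic: the effective pixel count divided by the resolution gives the scan area, and rated voltage times rated current gives the maximum rated input power. The sketch below is illustrative only; the function names are hypothetical, and it assumes the pixel counts and dpi figure apply along both axes as quoted.

```python
def scan_area_inches(width_px, height_px, dpi):
    """Convert a pixel grid at a given resolution into inches."""
    return width_px / dpi, height_px / dpi


def rated_input_power_watts(volts, amps):
    """Upper bound on DC input power implied by the rated voltage and current."""
    return volts * amps


# Figures from the excerpt: 54,400 x 74,880 pixels at 6400 dpi; DC 24 V, 1.3 A.
width_in, height_in = scan_area_inches(54_400, 74_880, 6400)
print(f"Scan area: {width_in:.1f} x {height_in:.1f} inches")
print(f"Rated input power: {rated_input_power_watts(24, 1.3):.1f} W")
```

The resulting area of about 8.5 × 11.7 inches is close to a letter or A4 page, and the rated input power of roughly 31 W sits above the 16.0 W to 17.5 W operating consumption quoted in the specifications.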

DIMA Glossary.Pdf

A
Accelerated Graphics Port (AGP): An interface specification from Intel designed to facilitate 3-D graphics by allowing the graphics card to access the computer’s RAM to refresh the monitor’s display.
Access: To store or retrieve information with a software application from a computer component such as a disk drive so the user can work with it.
Access Time: The length of time that is required for a computer system to process a data request and then retrieve the data from memory or a storage device.
Achromatic Color: A neutral white, gray, or black color that does not have a hue.
ActiveX: An implementation of OLE (object linking and embedding) developed by Microsoft that allows the user to see desktop applications in a web browser.
Active-Matrix Display: ...
Additive Color: Refers to the colors that result from mixing the primary colors of light (Red, Green and Blue – RGB) to produce the visual spectrum of colors. When the primary colors are mixed at 100 percent intensity, white light is produced.
Address: The unique location of data in memory, e-mail, Internet, or media access control address on a network.
Addressable Resolution: The maximum resolution of any device. The finite number of pixels that any imaging device is capable of creating, manipulating, or imaging.
Adobe Acrobat: Adobe’s software application for creation and viewing of Portable Document Format (PDF) files that can display a document as it was originally designed without having the particular software or fonts used to create the file.
Adobe Type Manager: Software that produces Postscript outline fonts for display or output.
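The glossary's Additive Color entry (red, green and blue light mixed at 100 percent intensity produce white) can be illustrated with a short sketch. The percentage-based tuples, the clipping at 100, and the function name are assumptions chosen for illustration, not part of the glossary.

```python
def mix_additive(*lights):
    """Add RGB light intensities channel by channel, clipping at 100 percent."""
    return tuple(min(100, sum(channel)) for channel in zip(*lights))


# Primary colors of light as (red, green, blue) percentages.
RED = (100, 0, 0)
GREEN = (0, 100, 0)
BLUE = (0, 0, 100)

print(mix_additive(RED, GREEN))        # red + green light
print(mix_additive(RED, GREEN, BLUE))  # all three primaries at full intensity
```

Mixing red and green gives (100, 100, 0), which is yellow, and mixing all three primaries at full intensity gives (100, 100, 100), which is white.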

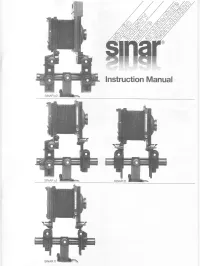

Manual Sinar P2 / C2 / F2 / F1-EN (PDF)

Instruction Manual
The cameras
Operating controls of the SINAR p2 and c2
1 Coarse-focus clamping lever
2 Fine focusing drive with depth of field scale
3 Micrometer drive for vertical (rise and fall) shift
4 Micrometer drive for lateral (cross) shift
5 Micrometer drive for horizontal-axis tilts
6 Micrometer drive for vertical-axis swings
7 Image plane mark
8 Coarse-tilt (horizontal axis) clamping lever; movement used for vertical alignment of standards with camera inclined up or down, also for coarse tilting to reserve full micrometer tilt (5) range for sharpness distribution control.
Contents
The cameras
- Controls
- Zero settings
- Mounting the camera
- The spirit levels
- The base rail
- Changing components
- The bellows
- Camera backs
- Switching formats on the SINAR p2 and c2
- Switching formats on the SINAR f2 and f1
- The convertible camera
- The bellows hood
- Viewing aids
- Transport
The view camera movements
The plane of sharpness and depth of field
Further accessories
- SINAR COLOR CONTROL filters
- Exposure meters
- Automatic SINAR film holder and shutter coupling
- Film holders
Final points
- Maintenance
- Cleaning
- Adjusting the drives
- Cleaning lenses, filters and mirrors
- Warranty
- Further instruction manuals
Remark: The camera c2 is no longer part of the SINAR sales programme, but can still be combined by the individual SINAR components.
Operating controls of the SINAR f2 and f1
1 Coarse-focus clamping knob
2 Fine focussing drive with depth of field scale
3 Clamping wheel for vertical shift
4 Clamping lever for lateral shift
5 Clamping lever for swing (vertical axis)
6 Clamping lever for tilt (horizontal axis)
7 Angle-metering scale for tilt and swing angles
8 Image plane mark
Zero setting points of the cameras
[Table: zero setting points by camera model; columns: Camera models, Rear (image) standard, Front (lens) standard, Notes]