Some Results on Tsallis Entropies in Classical System*

Recommended publications

On Escort Distributions, Q-Gaussians and Fisher Information Jean-François Bercher

Jean-François Bercher. On escort distributions, q-gaussians and Fisher information. 30th International Workshop on Bayesian Inference and Maximum Entropy Methods in Science and Engineering, Jul 2010, Chamonix, France. pp. 208-215, doi:10.1063/1.3573618. HAL Id: hal-00766571, https://hal-upec-upem.archives-ouvertes.fr/hal-00766571, submitted on 18 Dec 2012. J.-F. Bercher, Laboratoire d’Informatique Gaspard Monge, Université Paris-Est, ESIEE, 5 bd Descartes, 77454 Marne-la-Vallée Cedex 2, France. Abstract. Escort distributions are a simple one-parameter deformation of an original distribution p. In Tsallis extended thermostatistics, escort averages, defined with respect to an escort distribution, have proved useful for obtaining analytical results and variational equations, in particular the equilibrium distributions obtained as maxima of Rényi-Tsallis entropy subject to constraints in the form of a q-average. A central example is the q-gaussian, which is a generalization of the standard gaussian distribution.
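As a rough illustration of the escort construction mentioned in this abstract, the sketch below uses the standard discrete definition P_i = p_i^q / Σ_j p_j^q, which is an assumption here because the excerpt does not spell it out. The function names `escort` and `q_average` are hypothetical, and q > 0 is assumed.

```python
def escort(p, q):
    """Escort distribution P_i = p_i**q / sum_j p_j**q of a discrete distribution p."""
    weights = [pi ** q for pi in p]
    total = sum(weights)
    return [w / total for w in weights]


def q_average(p, values, q):
    """Average of `values` taken with respect to the escort distribution of p."""
    return sum(Pi * x for Pi, x in zip(escort(p, q), values))


p = [0.5, 0.3, 0.2]
values = [1.0, 2.0, 3.0]

print(escort(p, 1.0))             # q = 1 leaves p unchanged
print(escort(p, 2.0))             # q > 1 shifts weight toward the likeliest outcome
print(q_average(p, values, 2.0))  # escort average (q-average) of the values
```

The single parameter q is the only deformation knob: values above 1 emphasize high-probability outcomes, values below 1 emphasize rare ones.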

Quantum Entanglement Inferred by the Principle of Maximum Tsallis Entropy

Quantum entanglement inferred by the principle of maximum Tsallis entropy. Sumiyoshi Abe¹ and A. K. Rajagopal². ¹College of Science and Technology, Nihon University, Funabashi, Chiba 274-8501, Japan. ²Naval Research Laboratory, Washington D.C., 20375-5320, U.S.A. The problem of quantum state inference and the concept of quantum entanglement are studied using a non-additive measure in the form of Tsallis’ entropy indexed by the positive parameter q. The maximum entropy principle associated with this entropy along with its thermodynamic interpretation are discussed in detail for the Einstein-Podolsky-Rosen pair of two spin-1/2 particles. Given the data on the Bell-Clauser-Horne-Shimony-Holt observable, the analytic expression is given for the inferred quantum entangled state. It is shown that for q greater than unity, indicating the sub-additive feature of the Tsallis entropy, the entangled region is small and enlarges as one goes into the super-additive regime where q is less than unity. It is also shown that quantum entanglement can be quantified by the generalized Kullback-Leibler entropy. arXiv: quant-ph/9904088, 26 Apr 1999. PACS numbers: 03.67.-a, 03.65.Bz. I. INTRODUCTION. Entanglement is a fundamental concept highlighting non-locality of quantum mechanics. It is well known [1] that the Bell inequalities, which any local hidden variable theories should satisfy, can be violated by entangled states of a composite system described by quantum mechanics. Experimental results [2] suggest that naive local realism à la Einstein, Podolsky, and Rosen [3] may not actually hold. Related issues arising out of the concept of quantum entanglement are quantum cryptography, quantum teleportation, and quantum computation.
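The sub-additive (q > 1) and super-additive (q < 1) regimes named in this abstract can be checked numerically in the classical setting. The sketch below is only a classical analogue, not the quantum calculation of the paper: it assumes the usual discrete Tsallis entropy with k = 1 and two independent subsystems, and the helper names are hypothetical.

```python
def tsallis_entropy(p, q):
    """Tsallis entropy (k = 1) of a discrete distribution p, for q != 1."""
    return (1.0 - sum(pi ** q for pi in p)) / (q - 1.0)


def joint(pa, pb):
    """Joint distribution of two independent subsystems A and B."""
    return [a * b for a in pa for b in pb]


pa = [0.7, 0.3]
pb = [0.6, 0.4]

for q in (0.5, 2.0):
    sa = tsallis_entropy(pa, q)
    sb = tsallis_entropy(pb, q)
    sab = tsallis_entropy(joint(pa, pb), q)
    # Pseudo-additivity: S_q(A,B) = S_q(A) + S_q(B) + (1 - q) * S_q(A) * S_q(B)
    assert abs(sab - (sa + sb + (1.0 - q) * sa * sb)) < 1e-12
    print(q, "sub-additive" if sab < sa + sb else "super-additive")
```

Because both subsystem entropies are positive, the sign of the cross term (1 − q) S_q(A) S_q(B) alone decides which regime applies.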

Tsallis Statistics of the Magnetic Field in the Heliosheath

The Astrophysical Journal, 644:L83–L86, 2006 June 10. © 2006. The American Astronomical Society. All rights reserved. Printed in U.S.A. TSALLIS STATISTICS OF THE MAGNETIC FIELD IN THE HELIOSHEATH. L. F. Burlaga,¹ A. F. Viñas,¹ N. F. Ness,² and M. H. Acuña³. Received 2006 March 27; accepted 2006 May 3; published 2006 May 25. ABSTRACT. The spacecraft Voyager 1 crossed the termination shock on 2004 December 16 at a distance of 94 AU from the Sun, and it has been moving through the heliosheath toward the interstellar medium since then. The distributions of magnetic field strength B observed in the heliosheath are Gaussian over a wide range of scales, yet the measured profile appears to be filamentary with occasional large jumps in the magnetic field strength B(t). All of the probability distributions of changes in B, $dB_n \equiv B(t + \tau_n) - B(t)$ on scales $\tau_n$ from 1 to 128 days, can be fit with the symmetric Tsallis distribution of nonextensive statistical mechanics. At scales ≥32 days, the distributions are Gaussian, but on scales from 1 through 16 days the probability distribution functions have non-Gaussian tails, suggesting that the inner heliosheath is not in statistical equilibrium on scales from 1 to 16 days. Subject headings: magnetic fields; solar wind; turbulence; waves. 1. INTRODUCTION. The solar wind moves supersonically beyond a few solar [...] is expressed by the form of u in the distribution function. In Tsallis statistical mechanics, the probability that a system is in a state with energy u is proportional to exp(− u).
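To make the contrast between Gaussian and non-Gaussian tails concrete, here is a small sketch of a symmetric Tsallis (q-Gaussian) shape. It assumes the standard q-exponential form $[1 + (q-1)\beta x^2]^{-1/(q-1)}$; the excerpt does not give the paper's exact parameterization, and the names `exp_q`, `tsallis_shape`, and the value of `beta` are hypothetical.

```python
import math


def exp_q(x, q):
    """q-exponential [1 + (1 - q) x]^(1/(1 - q)); the ordinary exponential at q = 1."""
    if abs(q - 1.0) < 1e-12:
        return math.exp(x)
    base = 1.0 + (1.0 - q) * x
    if base <= 0.0:
        if q < 1.0:
            return 0.0  # cut-off region for q < 1
        raise ValueError("q-exponential diverges for this argument when q > 1")
    return base ** (1.0 / (1.0 - q))


def tsallis_shape(x, q, beta=0.5):
    """Unnormalized symmetric Tsallis shape exp_q(-beta * x**2)."""
    return exp_q(-beta * x * x, q)


for x in (0.0, 1.0, 2.0, 4.0):
    # q = 1 is the Gaussian; q = 1.5 decays as a power law in the tails
    print(x, tsallis_shape(x, 1.0), tsallis_shape(x, 1.5))
```

Near the center the two shapes are similar, while far from the center the q > 1 curve stays orders of magnitude above the Gaussian, which is the kind of tail behavior the abstract describes at short time scales.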

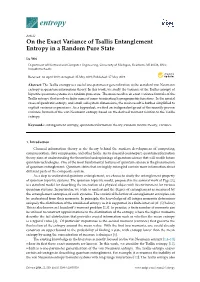

On the Exact Variance of Tsallis Entanglement Entropy in a Random Pure State

Entropy. Article. On the Exact Variance of Tsallis Entanglement Entropy in a Random Pure State. Lu Wei, Department of Electrical and Computer Engineering, University of Michigan, Dearborn, MI 48128, USA. Received: 26 April 2019; Accepted: 25 May 2019; Published: 27 May 2019. Abstract: The Tsallis entropy is a useful one-parameter generalization of the standard von Neumann entropy in quantum information theory. In this work, we study the variance of the Tsallis entropy of bipartite quantum systems in a random pure state. The main result is an exact variance formula of the Tsallis entropy that involves finite sums of some terminating hypergeometric functions. In the special cases of quadratic entropy and small subsystem dimensions, the main result is further simplified to explicit variance expressions. As a byproduct, we find an independent proof of the recently proven variance formula of the von Neumann entropy based on the derived moment relation to the Tsallis entropy. Keywords: entanglement entropy; quantum information theory; random matrix theory; variance. 1. Introduction. Classical information theory is the theory behind the modern development of computing, communication, data compression, and other fields. Like its classical counterpart, quantum information theory aims at understanding the theoretical underpinnings of quantum science that will enable future quantum technologies. One of the most fundamental features of quantum science is the phenomenon of quantum entanglement. Quantum states that are highly entangled contain more information about different parts of the composite system. As a step to understand quantum entanglement, we choose to study the entanglement property of quantum bipartite systems. The quantum bipartite model, proposed in the seminal work of Page [1], is a standard model for describing the interaction of a physical object with its environment for various quantum systems.
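The quadratic-entropy special case (q = 2) mentioned in this abstract is easy to explore numerically, since $S_2 = 1 - \mathrm{Tr}\,\rho_A^2$ needs no eigenvalue decomposition. The following is a Monte Carlo sketch only, not the paper's exact formula: it assumes a random pure state is sampled as a normalized vector of complex Gaussian amplitudes, and the function names, dimensions, and sample count are hypothetical choices.

```python
import random


def random_pure_state(m, n, rng):
    """m x n matrix of complex Gaussian amplitudes, normalized to unit norm."""
    psi = [[complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n)]
           for _ in range(m)]
    norm = sum(abs(c) ** 2 for row in psi for c in row) ** 0.5
    return [[c / norm for c in row] for row in psi]


def quadratic_entropy(psi):
    """S_2 = 1 - Tr(rho_A^2), where rho_A = psi psi^dagger is the reduced state of A."""
    m = len(psi)
    rho = [[sum(a * b.conjugate() for a, b in zip(psi[i], psi[j]))
            for j in range(m)] for i in range(m)]
    # rho is Hermitian, so Tr(rho^2) is the sum of |rho_ij|^2
    purity = sum(abs(rho[i][j]) ** 2 for i in range(m) for j in range(m))
    return 1.0 - purity


rng = random.Random(0)
m, n = 2, 3  # subsystem dimensions (illustrative)
samples = [quadratic_entropy(random_pure_state(m, n, rng)) for _ in range(2000)]
mean = sum(samples) / len(samples)
variance = sum((s - mean) ** 2 for s in samples) / (len(samples) - 1)

# Sanity check on the mean: the known average purity is (m + n) / (m * n + 1)
print(mean, 1.0 - (m + n) / (m * n + 1))
print(variance)
```

The sample variance printed at the end is the empirical counterpart of the quantity the paper computes exactly; the sketch makes no claim about its closed form.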

Generalized Ensemble Theory with Non-extensive Statistics (arXiv:1707.03526v1 [cond-mat.stat-mech], 12 Jul 2017)

Generalized Ensemble Theory with Non-extensive Statistics. Ke-Ming Shen,∗ Ben-Wei Zhang,† and En-Ke Wang. Key Laboratory of Quark & Lepton Physics (MOE) and Institute of Particle Physics, Central China Normal University, Wuhan 430079, China. (Dated: October 17, 2018) The non-extensive canonical ensemble theory is reconsidered with the method of Lagrange multipliers by maximizing Tsallis entropy, with the constraint that the normalized term of Tsallis' q-average of physical quantities, the sum $\sum_j p_j^q$, is independent of the probability $p_i$ for Tsallis parameter q. The self-referential problem in the deduced probability and thermal quantities in non-extensive statistics is thus avoided, and thermodynamical relationships are obtained in a consistent and natural way. We also extend the study to the non-extensive grand canonical ensemble theory and obtain the q-deformed Bose-Einstein distribution as well as the q-deformed Fermi-Dirac distribution. The theory is further applied to the generalized Planck law to demonstrate the distinct behaviors of the various generalized q-distribution functions discussed in the literature. I. INTRODUCTION. In the last thirty years, non-extensive statistical mechanics, based on Tsallis entropy [1,2] and the corresponding deformed exponential function, has been developed and has attracted a lot of attention, with a large number of applications in rather diversified fields [3]. Tsallis non-extensive statistical mechanics is a generalization of the common Boltzmann-Gibbs (BG) statistical mechanics, obtained by postulating a generalization of the classical entropy $S = -k \sum_{i=1}^{W} p_i \ln p_i$: $S_q = -k \sum_{i=1}^{W} p_i^q \ln_q p_i$, (1) where k is a positive constant and denotes the Boltzmann constant in BG statistical mechanics.
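Equation (1) can be evaluated directly once the q-logarithm is fixed. The sketch below assumes the standard definition $\ln_q x = (x^{1-q} - 1)/(1 - q)$, which the excerpt does not state, and uses hypothetical function names; it checks that the formula reduces to the BG entropy at q = 1 and to one minus the sum of squared probabilities at q = 2.

```python
import math


def ln_q(x, q):
    """q-logarithm (x**(1 - q) - 1) / (1 - q); the natural logarithm at q = 1."""
    if abs(q - 1.0) < 1e-12:
        return math.log(x)
    return (x ** (1.0 - q) - 1.0) / (1.0 - q)


def tsallis_entropy(p, q, k=1.0):
    """S_q = -k * sum_i p_i**q * ln_q(p_i), as in Eq. (1); zero-probability states are skipped."""
    return -k * sum(pi ** q * ln_q(pi, q) for pi in p if pi > 0)


p = [0.5, 0.25, 0.25]
bg_entropy = -sum(pi * math.log(pi) for pi in p)

print(tsallis_entropy(p, 1.0), bg_entropy)                      # BG limit
print(tsallis_entropy(p, 2.0), 1.0 - sum(pi ** 2 for pi in p))  # q = 2 case
```

Expanding the q-logarithm shows that Eq. (1) equals $k\,(1 - \sum_i p_i^q)/(q - 1)$, the form in which the Tsallis entropy is often quoted.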

Grand Canonical Ensemble of the Extended Two-Site Hubbard Model Via a Nonextensive Distribution

Grand canonical ensemble of the extended two-site Hubbard model via a nonextensive distribution. Felipe Américo Reyes Navarro¹,²,∗, Eusebio Castor Torres-Tapia², Pedro Pacheco Peña³. ¹Facultad de Ciencias Naturales y Matemática, Universidad Nacional del Callao (UNAC), Av. Juan Pablo II 306, Bellavista, Callao, Peru. ²Facultad de Ciencias Físicas, Universidad Nacional Mayor de San Marcos (UNMSM), Av. Venezuela s/n Cdra. 34, Apartado Postal 14-0149, Lima 14, Peru. ³Universidad Nacional Tecnológica del Cono Sur (UNTECS), Av. Revolución s/n, Sector 3, Grupo 10, Mz. M Lt. 17, Villa El Salvador, Lima, Peru. ∗Corresponding author. Facultad de Ciencias Físicas, Universidad Nacional Mayor de San Marcos (UNMSM), Av. Venezuela s/n Cdra. 34, Apartado Postal 14-0149, Lima 14, Peru. Abstract. We present a study of a grand canonical ensemble for the extended two-site Hubbard model; that is, we consider the intersite interaction term in addition to those of the simple Hubbard model. To calculate the thermodynamical parameters, we use nonextensive statistical mechanics; specifically, we perform simulations of the magnetic internal energy, specific heat, susceptibility, and thermal mean value of the particle number operator. We find that adding the intersite interaction term shifts all the simulated curves. Furthermore, for some values of the on-site Coulombian potential, we find that, near absolute zero, allowing the chemical potential to vary with temperature yields a nonzero entropy. Keywords: Extended Hubbard model, Quantum statistical mechanics, Thermal properties of small particles. PACS: 75.10.Jm, 05.30.-d, 65.80.+n. Introduction. Currently, several studies exist in the literature on the application of a generalized statistics to magnetic systems [1-3].

Generalized Molecular Chaos Hypothesis and the H-Theorem: Problem of Constraints and Amendment of Nonextensive Statistical Mechanics

Generalized molecular chaos hypothesis and the H-theorem: Problem of constraints and amendment of nonextensive statistical mechanics. Sumiyoshi Abe¹,²,³. ¹Department of Physical Engineering, Mie University, Mie 514-8507, Japan*). ²Institut Supérieur des Matériaux et Mécaniques Avancés, 44 F. A. Bartholdi, 72000 Le Mans, France. ³Inspire Institute Inc., McLean, Virginia 22101, USA. *) Permanent address. Abstract. Quite unexpectedly, kinetic theory is found to specify the correct definition of average value to be employed in nonextensive statistical mechanics. It is shown that the normal average is consistent with the generalized Stosszahlansatz (i.e., molecular chaos hypothesis) and the associated H-theorem, whereas the q-average widely used in the relevant literature is not. In the course of the analysis, the distributions with finite cut-off factors are rigorously treated. Accordingly, the formulation of nonextensive statistical mechanics is amended based on the normal average. In addition, the Shore-Johnson theorem, which supports the use of the q-average, is carefully reexamined, and it is found that one of the axioms may not be appropriate for systems to be treated within the framework of nonextensive statistical mechanics. PACS number(s): 05.20.Dd, 05.20.Gg, 05.90.+m. I. INTRODUCTION. There exist a number of physical systems that possess exotic properties in view of traditional Boltzmann-Gibbs statistical mechanics. They are said to be statistical-mechanically anomalous, since they often exhibit and realize broken ergodicity, strong correlation between elements, (multi)fractality of phase-space/configuration-space portraits, and long-range interactions, for example. In the past decade, nonextensive statistical mechanics [1,2], which is a generalization of the Boltzmann-Gibbs theory, has been drawing continuous attention as a possible theoretical framework for describing these systems.

Beyond Behavioural Economics

Beyond Behavioural Economics: A Process Realist Perspective. Mathematics of Behavioural Economics and Finance. James Juniper, November 2015. Ultimate scientific explanation is attractive and important, but financial engineering cannot wait for full explanation. That is, it is legitimate to strive towards a second best: a “descriptive phenomenology” that is organized tightly enough to bring a degree of order and understanding. Benoit B. Mandelbrot (1997). Overview • Keynes on DM under uncertainty & role of conventions • Ontological considerations • Objective not just subjective • Applications: • Bounded sub-additivity (Tversky & Wakker) • Equivalent approach: fuzzy measure theory (approximation algebras) • Concept lattices and “framing” • Tsallis arithmetic: q-generalized binomial approximation. “[…] civilization is a thin and precarious crust erected by the personality and will of a very few and only maintained by rules and conventions skillfully put across and guilefully preserved” (Keynes, X: 447). The “love of money as a possession” is a “somewhat disgusting morbidity, one of those semi-criminal, semi-pathological propensities which one hands over with a shudder to the specialists in mental disease” (IX: 329). For we shall enquire more curiously than is safe today into the true character of this ‘purposiveness’ with which in varying degrees Nature has endowed almost all of us. For purposiveness means that we are more concerned with the remote future results of our actions than with their own quality or their immediate effects on the environment. The ‘purposive’ man is always trying to secure a spurious and delusive immortality for his acts by pushing his interest in them forward into time. He does not love his cat, but his cat’s kittens; nor, in truth, the kittens, but only the kittens’ kittens, and so on forward to the end of catdom.

Fractal Structure and Non-Extensive Statistics

Entropy. Article. Fractal Structure and Non-Extensive Statistics. Airton Deppman ¹,*, Tobias Frederico ², Eugenio Megías ³,⁴ and Debora P. Menezes ⁵. ¹ Instituto de Física, Universidade de São Paulo, Rua do Matão Travessa R Nr.187, Cidade Universitária, CEP 05508-090 São Paulo, Brazil. ² Instituto Tecnológico da Aeronáutica, 12228-900 São José dos Campos, Brazil. ³ Departamento de Física Teórica, Universidad del País Vasco UPV/EHU, Apartado 644, 48080 Bilbao, Spain. ⁴ Departamento de Física Atómica, Molecular y Nuclear and Instituto Carlos I de Física Teórica y Computacional, Universidad de Granada, Avenida de Fuente Nueva s/n, 18071 Granada, Spain. ⁵ Departamento de Física, CFM, Universidade Federal de Santa Catarina, CP 476, CEP 88040-900 Florianópolis, Brazil. * Corresponding author. Received: 27 June 2018; Accepted: 19 August 2018; Published: 24 August 2018. Abstract: The role played by non-extensive thermodynamics in physical systems has been under intense debate for the last decades. With many applications in several areas, Tsallis statistics has been discussed in detail in many works and has triggered an interesting discussion on the deepest meaning of entropy and its role in complex systems. Some possible mechanisms that could give rise to non-extensive statistics have been formulated over the last several years; in particular, a fractal structure in thermodynamic functions was recently proposed as a possible origin for non-extensive statistics in physical systems. In the present work, we investigate the properties of such a fractal thermodynamical system and propose a diagrammatic method for calculations of relevant quantities related to such a system.

0802.3424 Property of Tsallis Entropy and Principle of Entropy

arXiv: 0802.3424. Property of Tsallis entropy and principle of entropy increase. Du Jiulin, Department of Physics, School of Science, Tianjin University, Tianjin 300072, China. Abstract. The property of Tsallis entropy is examined by considering two systems with different temperatures that are brought into contact with each other and reach thermal equilibrium. It is verified that the total Tsallis entropy of the two systems cannot decrease after the contact of the systems. We derive an inequality for the change of Tsallis entropy in such an example, which leads to a generalization of the principle of entropy increase in the framework of nonextensive statistical mechanics. Key Words: Nonextensive system; Principle of entropy increase. PACS number: 05.20.-y; 05.20.Dd. 1. Introduction. In recent years, a generalization of Boltzmann-Gibbs (B-G) statistical mechanics initiated by Tsallis has attracted a great deal of attention. It results from the assumption of nonadditive statistical entropies and the maximum statistical entropy principle, and has become known as “Tsallis statistics” or nonextensive statistical mechanics (NSM) (Abe and Okamoto, 2001). This generalization of B-G statistics was first proposed by introducing the mathematical expression of Tsallis entropy (Tsallis, 1988) as follows, $S_q = \frac{k}{1-q}\left(\int \rho^q \, d\Omega - 1\right)$ (1) where k is the Boltzmann constant. For a classical Hamiltonian system, ρ is the phase space probability distribution of the system under consideration, which satisfies the normalization $\int \rho \, d\Omega = 1$, and dΩ stands for the phase space volume element. The entropy index q is a positive parameter whose deviation from unity is thought to describe the degree of nonextensivity.

Thermodynamics from First Principles: Correlations and Nonextensivity

Thermodynamics from first principles: correlations and nonextensivity. S. N. Saadatmand,¹,∗ Tim Gould,² E. G. Cavalcanti,³ and J. A. Vaccaro¹. ¹Centre for Quantum Dynamics, Griffith University, Nathan, QLD 4111, Australia. ²Qld Micro- and Nanotechnology Centre, Griffith University, Nathan, QLD 4111, Australia. ³Centre for Quantum Dynamics, Griffith University, Gold Coast, QLD 4222, Australia. (Dated: March 17, 2020) The standard formulation of thermostatistics, being based on the Boltzmann-Gibbs distribution and logarithmic Shannon entropy, describes idealized uncorrelated systems with extensive energies and short-range interactions. In this letter, we use the fundamental principles of ergodicity (via Liouville's theorem), the self-similarity of correlations, and the existence of the thermodynamic limit to derive generalized forms of the equilibrium distribution for long-range-interacting systems. Significantly, our formalism provides a justification for the well-studied nonextensive thermostatistics characterized by the Tsallis distribution, which it includes as a special case. We also give the complementary maximum entropy derivation of the same distributions by constrained maximization of the Boltzmann-Gibbs-Shannon entropy. The consistency between the ergodic and maximum entropy approaches clarifies the use of the latter in the study of correlations and nonextensive thermodynamics. Introduction. The ability to describe the statistical state of a macroscopic system is central to many areas of physics [1–4]. In thermostatistics, the statistical state of a system of N particles in equilibrium is de[...] [...]tion [30] or the structure of the microstates [27]. Another widely-used approach is to generalize the MaxEnt principle to apply to a different entropy functional in place of $S^{\mathrm{BGS}}(\{w_z\})$.

Generalized Simulated Annealing Algorithms Using Tsallis Statistics: Application to Conformational Optimization of a Tetrapeptide

PHYSICAL REVIEW E, VOLUME 53, NUMBER 4, APRIL 1996. Generalized simulated annealing algorithms using Tsallis statistics: Application to conformational optimization of a tetrapeptide. Ioan Andricioaei and John E. Straub, Department of Chemistry, Boston University, Boston, Massachusetts 02215. (Received 18 December 1995) A Monte Carlo simulated annealing algorithm based on the generalized entropy of Tsallis is presented. The algorithm obeys detailed balance and reduces to a steepest descent algorithm at low temperatures. Application to the conformational optimization of a tetrapeptide demonstrates that the algorithm is more effective in locating low energy minima than standard simulated annealing based on molecular dynamics or Monte Carlo methods. PACS number(s): 02.70.-c, 02.60.Pn, 02.50.Ey. Finding the ground state conformation of biologically important molecules has an obvious importance, both from the academic and pragmatic points of view [1]. The problem is hard for biomolecules, such as proteins, because of the ruggedness of the energy landscape, which is characterized by an immense number of local minima separated by a broad distribution of barrier heights [2,3]. Algorithms to find the global minimum of an empirical potential energy function for molecules have been devised, among which a central role is played by the simulated annealing methods [4]. Once a cooling schedule is chosen, representative configurations of the allowed microstates are generated by methods either of the molecular dynamics (MD) or Monte Carlo (MC) types. [...] $S = -k \sum_i p_i \ln p_i$ (3) when $q \to 1$. Maximizing the Tsallis entropy with the constraints $\sum_i p_i = 1$ and $\sum_i p_i^q e_i = \mathrm{const}$, (4) where $e_i$ is the energy spectrum, the generalized probability distribution is found to be
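The idea of a Monte Carlo move rule that obeys detailed balance with respect to a Tsallis-type weight can be sketched as follows. This is an illustration under stated assumptions, not the authors' algorithm: the excerpt breaks off before giving the distribution, so the weight $[1 - (1-q)\beta E]^{1/(1-q)}$ used here is the commonly quoted Tsallis form, the acceptance rule is a plain Metropolis ratio of those weights, energies are assumed shifted so that E ≥ 0, and all names are hypothetical.

```python
import math


def tsallis_weight(energy, beta, q):
    """Unnormalized weight [1 - (1 - q) * beta * E]^(1/(1 - q)); Boltzmann factor at q = 1."""
    if abs(q - 1.0) < 1e-12:
        return math.exp(-beta * energy)
    base = 1.0 - (1.0 - q) * beta * energy
    # Assumes E >= 0: for q > 1 the base stays >= 1, for q < 1 a non-positive base is cut off
    return base ** (1.0 / (1.0 - q)) if base > 0.0 else 0.0


def acceptance(e_old, e_new, beta, q):
    """Metropolis-style acceptance probability built from the ratio of Tsallis weights."""
    return min(1.0, tsallis_weight(e_new, beta, q) / tsallis_weight(e_old, beta, q))


beta, q = 1.0, 1.5
e1, e2 = 1.0, 3.0

# Detailed balance: w(E1) * A(E1 -> E2) equals w(E2) * A(E2 -> E1)
forward = tsallis_weight(e1, beta, q) * acceptance(e1, e2, beta, q)
backward = tsallis_weight(e2, beta, q) * acceptance(e2, e1, beta, q)
print(forward, backward)

# Uphill moves are accepted more readily for q > 1 than with the Boltzmann rule
print(acceptance(e1, e2, beta, q), acceptance(e1, e2, beta, 1.0))
```

The heavier tail of the q > 1 weight makes barrier crossings more likely at a given temperature, which is one plausible reading of why such a scheme could locate low-energy minima more effectively; the paper itself should be consulted for the actual acceptance rule and cooling schedule.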