A Study on Resource Pooling, Allocation and Virtualization Tools Used for Cloud Computing

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

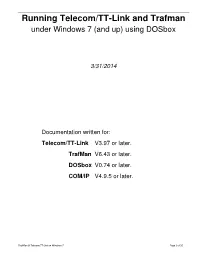

Running Telecom/TT-Link and Trafman Under Windows 7 (And Up) Using Dosbox

3/31/2014. Documentation written for: Telecom/TT-Link V3.97 or later, TrafMan V6.43 or later, DOSbox V0.74 or later, COM/IP V4.9.5 or later. I. Introduction and Initial Emulator Setup. Microsoft discontinued support for MS-DOS level programs starting with Windows 7. This has made running Telecom/TT-Link and TrafMan on Windows 7 or later operating systems problematic, to say the least. However, it is possible to achieve functionality (with some limits) even on a Windows 7 computer by using what is known as a “DOS emulator”. Emulators simulate the conditions of an earlier operating system on a later one. For example, one of the most common emulators is called DOSbox (http://sourceforge.net/projects/dosbox/), and it is available as a free download for both PC and Mac computers. DOSbox creates an artificial MS-DOS level environment on a Windows 7 computer. Inside this environment you can run most DOS programs, including TrafMan and Telecom/TT-Link. This document describes how to use the DOSbox emulator to run Telecom/TT-Link and TrafMan. Other emulators may also work, and this document can serve as a guide for setting up and using similar DOS emulators as they become available. Initial DOSbox Emulator Setup: 1) Download the latest release of DOSbox (0.74 or later) to your PC and install it.

Institutionalizing FreeBSD Isolated and Virtualized Hosts Using bsdinstall(8), zfs(8) and nfsd(8)

@MichaelDexter, BSDCan 2018. Jails and bhyve: FreeBSD has had isolation since 2000 and virtualization since 2014. Why are they still strangers? Integrating them as first-class features. This is a FreeBSD example, but the approach is not FreeBSD-exclusive. jail(8) and bhyve(8) “guests”: Application Binary Interface vs. Instruction Set Architecture. bsdinstall(8): the FreeBSD installer. zfs(8): the best file system/volume manager available. nfsd(8): the Network File System. Broad motivations: Virtualization! Containers! Docker! Zones! Droplets! More more more! My motivations: 2003: jails to mitigate “RPM Hell”; 2011: “bhyve sounds interesting...”; 2017: mitigating Regression Hell; 2018: OpenZFS EVERYWHERE. A Tale of Two Regressions. Listen up. Regression One: FreeBSD commit r324161, “MFV r323796: fix memory leak in [ZFS] g_bio zone introduced in r320452”. Bug: r320452, June 28th, 2017. Fix: r324162, October 1st, 2017. 3,710 commits and 3 months later (June 28th through October 1st). BUT: July 27th, FreeNAS MFC; slips into FreeNAS 11.1, released December 13th; fixed in FreeNAS January 18th. 3 months in FreeBSD HEAD, 36 days

Virtualization Technologies Overview Course: CS 490 by Mendel

Virtualization technologies overview Course: CS 490 by Mendel Rosenblum Name Can boot USB GUI Live 3D Snaps Live an OS on mem acceleration hot of migration another ory runnin disk alloc g partition ation system as guest Bochs partially partially Yes No Container s Cooperati Yes[1] Yes No No ve Linux (supporte d through X11 over networkin g) Denali DOSBox Partial (the Yes No No host OS can provide DOSBox services with USB devices) DOSEMU No No No FreeVPS GXemul No No Hercules Hyper-V iCore Yes Yes No Yes No Virtual Accounts Imperas Yes Yes Yes Yes OVP (Eclipse) Tools Integrity Yes No Yes Yes No Yes (HP-UX Virtual (Integrity guests only, Machines Virtual Linux and Machine Windows 2K3 Manager in near future) (add-on) Jail No Yes partially Yes No No No KVM Yes [3] Yes Yes [4] Yes Supported Yes [5] with VMGL [6] Linux- VServer LynxSec ure Mac-on- Yes Yes No No Linux Mac-on- No No Mac OpenVZ Yes Yes Yes Yes No Yes (using Xvnc and/or XDMCP) Oracle Yes Yes Yes Yes Yes VM (manage d by Oracle VM Manager) OVPsim Yes Yes Yes Yes (Eclipse) Padded Yes Yes Yes Cell for x86 (Green Hills Software) Padded Yes Yes Yes No Cell for PowerPC (Green Hills Software) Parallels Yes, if Boot Yes Yes Yes DirectX 9 Desktop Camp is and for Mac installed OpenGL 2.0 Parallels No Yes Yes No partially Workstati on PearPC POWER Yes Yes No Yes No Yes (on Hypervis POWER 6- or (PHYP) based systems, requires PowerVM Enterprise Licensing) QEMU Yes Yes Yes [4] Some code Yes done [7]; Also supported with VMGL [6] QEMU w/ Yes Yes Yes Some code Yes kqemu done [7]; Also module supported -

Thread Scheduling in Multi-Core Operating Systems Redha Gouicem

To cite this version: Redha Gouicem. Thread Scheduling in Multi-core Operating Systems. Computer Science [cs]. Sorbonne Université, 2020. English. tel-02977242. HAL Id: tel-02977242, https://hal.archives-ouvertes.fr/tel-02977242. Submitted on 24 Oct 2020. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Ph.D. thesis in Computer Science: Thread Scheduling in Multi-core Operating Systems: How to Understand, Improve and Fix your Scheduler. Redha Gouicem, Sorbonne Université, Laboratoire d’Informatique de Paris 6, Inria Whisper Team. Ph.D. defense: 23 October 2020, Paris, France. Jury members: Mr. Pascal Felber, Full Professor, Université de Neuchâtel (Reviewer); Mr. Vivien Quéma, Full Professor, Grenoble INP (ENSIMAG) (Reviewer); Mr. Rachid Guerraoui, Full Professor, École Polytechnique Fédérale de Lausanne (Examiner); Ms. Karine Heydemann, Associate Professor, Sorbonne Université (Examiner); Mr. Etienne Rivière, Full Professor, University of Louvain (Examiner); Mr. Gilles Muller, Senior Research Scientist, Inria (Advisor); Mr. Julien Sopena, Associate Professor, Sorbonne Université (Advisor). Abstract: In this thesis, we address the problem of schedulers for multi-core architectures from several perspectives: design (simplicity and correctness), performance improvement and the development of application-specific schedulers.

International Journal for Scientific Research & Development

IJSRD - International Journal for Scientific Research & Development | Vol. 2, Issue 02, 2014 | ISSN (online): 2321-0613. Virtualization: A Novice Approach. Amithchand Sheety, Mahesh Poola, Pradeep Bhat, Dhiraj Mishra. Padmabhushan Vasantdada Patil Pratishthan’s College of Engineering, Eastern Express Highway, Near Everard Nagar, Sion-Chunabhatti, Mumbai-400 022, India. Abstract: Virtualization provides many benefits: greater efficiency in CPU utilization, greener IT with less power consumption, better management through central environment control, more availability, reduced project timelines by eliminating hardware procurement, improved disaster recovery capability, more central control of the desktop, and improved outsourcing services. With these benefits, it is no wonder that virtualization has had a meteoric rise to the 2008 Top 10 IT Projects! This white paper presents a brief look at virtualization, its benefits and weaknesses, and today’s “best practices” regarding virtualization. I. INTRODUCTION. Virtualization, in computing, is a term that refers to the

as CPU. Although hardware is consolidated, typically the operating systems are not. Instead, each OS running on a physical server is converted to a distinct OS running inside a virtual machine. The large server can "host" many such "guest" virtual machines. This is known as Physical-to-Virtual (P2V) transformation. 2) Consolidating servers can also have the added benefit of reducing energy consumption. A typical server runs at 425W [4] and VMware estimates an average server consolidation ratio of 10:1. 3) A virtual machine can be more easily controlled and inspected from outside than a physical one, and its configuration is more flexible. This is very useful in kernel development and for teaching operating system courses.

Large Scale Distributed Computing - Summary

Author: @triggetry, @lanoxx. Source available on github.com/triggetry/lsdc-summary. December 10, 2013. Contents: 1 Preamble. 2 Chapter 1: 2.1 Most common forms of Large Scale Distributed Computing; 2.2 High Performance Computing; 2.2.1 Parallel Programming; 2.3 Grid Computing; 2.3.1 Definitions; 2.3.2 Virtual Organizations; 2.4 Cloud Computing; 2.4.1 Definitions; 2.4.2 5 Cloud Characteristics; 2.4.3 Delivery Models; 2.4.4 Cloud Deployment Types; 2.4.5 Cloud Technologies; 2.4.5.1 Virtualization; 2.4.5.2 The history of Virtualization; 2.5 Web Application Frameworks; 2.6 Web Services; 2.7 Multi-tenancy; 2.8 Big Data. 3 Chapter 2: 3.1 OS / Virtualization; 3.1.1 Batchsystems; 3.1.1.1 Common Batch processing usage; 3.1.1.2 Portable Batch System (PBS); 3.1.2 VGE - Vienna Grid Environment; 3.2 VMs, VMMs; 3.2.1 Why Virtualization?; 3.2.2 Types of virtualization; 3.2.3 Hypervisor vs. hosted Virtualization; 3.2.3.1 Type 1 and Type 2 Virtualization; 3.2.4 Basic Virtualization Techniques; 3.3 Xen; 3.3.1 Architecture; 3.3.2 Dynamic Memory Control (DMC); 3.3.3 Balloon Drivers; 3.3.4 Paravirtualization; 3.3.5 Domains in Xen; 3.3.6 Hypercalls in Xen

CNA Laboratory Enhancement by Virtualisation Centria

Akintomide Akinsola. CNA LABORATORY ENHANCEMENT BY VIRTUALISATION. Bachelor’s thesis, CENTRIA UNIVERSITY OF APPLIED SCIENCES, Degree Programme in Information Technology, June 2015. ABSTRACT. Unit: Kokkola-Pietarsaari. Date: June 2015. Author: Akintomide Akinsola. Degree programme: Information Technology. Name of thesis: CNA LABORATORY ENHANCEMENT BY VIRTUALISATION. Pages: 28 + 3. The Cisco Networking Academy (CNA) of Centria University of Applied Sciences plays an essential role in the Media and Communication Technology specialisation. An improvement in the infrastructure of the CNA laboratory leads directly to an improvement in the quality of education received in the laboratory. This thesis describes the creation of an alternative arrangement that uses Ubuntu Linux as a supplementary option for studying networking subjects in the university's CNA laboratory. Ubuntu Linux is free software available to download, and with some adjustments and modifications it can be made to function as well as a Windows operating system for learning networking. The thesis discusses the process of creating a customised Ubuntu image and deploying it via a virtual machine. Linux is a UNIX-like operating system, and knowledge of it is valuable to students as they study to become professionals. The thesis also introduces several applications, such as Minicom, Wireshark, and Nmap. A simple laboratory experiment was designed to test the performance and functioning of the newly created system. Expertise in more than one operating system expands the horizons of future students of the UAS, an opportunity older students did not have, and enables them to become proficient engineers.

Virtual Machines

Virtual Machines. Today: VM over time; implementation methods; hardware features supporting VM. Next time: Midterm? *Partially based on notes from C. Waldspurger, VMware, 2010.

Too many computers! An organization can have a bit too many machines. Why many? Running different services (email, web) to ensure that each has enough resources, fails independently, and survives if another one gets attacked; services may also require different OSes. Why too many? Hard and expensive to maintain! Virtualization as an alternative: a VMM (or hypervisor) creates the illusion of multiple (virtual) machines.

Virtualization applications. Server consolidation: convert underutilized servers to VMs, saving cost; increasingly used for virtual desktops. Simplified management: datacenter provisioning and monitoring; dynamic load balancing. Improved availability: automatic restart; fault tolerance; disaster recovery. Test and development. Cloud support: isolation for clients.

Types of virtualization. Process virtualization: language-level (Java, .NET, Smalltalk); OS-level (processes, Solaris Zones, BSD Jails); cross-ISA emulation (Apple 68K-PPC-x86). Device virtualization: logical vs. physical (VLAN, VPN, LUN, RAID). System virtualization: Xen, VMware Fusion, KVM, Palacios …

System virtualization starting point. Physical hardware: processors, memory, chipset, I/O devices, etc.; resources often grossly underutilized. Software: tightly coupled to physical hardware; single active OS instance; OS controls hardware. [Diagram: applications on a single OS running directly on hardware (CPU, MEM, NIC).]

Adding a virtualization layer. Software abstraction: behaves like hardware; encapsulates all OS and application state. Virtualization layer: extra level of indirection; decouples hardware and OS; enforces isolation; multiplexes physical hardware across VMs. [Diagram: VMs, each with its own applications and OS, on a virtualization layer above the hardware (CPU, MEM, NIC).]

Virtual Machine Monitor. Classic definition*: … an efficient, isolated duplicate of the real machine.

MX-19.2 Users Manual

v. 20200801. manual AT mxlinux DOT org. Ctrl-F = Search this Manual; Ctrl+Home = Return to top. Table of Contents: 1 Introduction; 1.1 About MX Linux; 1.2 About this Manual; 1.3 System requirements; 1.4 Support and EOL; 1.5 Bugs, issues and requests; 1.6 Migration; 1.7 Our positions; 1.8 Notes for Translators; 2 Installation; 2.1 Introduction

Building a Virtualisation Appliance with FreeBSD/bhyve/OpenZFS Jason Tubnor ICT Senior Security Lead Introduction

Jason Tubnor, ICT Senior Security Lead. Introduction: building a virtualisation appliance for use within an NGO/NFP in the Australian health sector. Outline: About Me; Latrobe Community Health Service (LCHS); Background; Problem; Concept; Production; Reiteration. About Me: 26 years of IT experience. Introduced to open source in the mid 90s. Discovered OpenBSD in 2000. A user and advocate of OpenBSD and FreeBSD. Life outside of computers: ultra endurance gravel cycling. Latrobe Community Health Service (LCHS): originally a Gippsland-based NFP/NGO health service. ICT manages 900+ users, servicing 51 sites across Victoria, Australia, covering ~230,000 km², roughly the size of Laos in Asia or Minnesota in the USA. “Better health, Better lifestyles, Stronger communities”. Background: in the first half of 2016, awarded a contract to provide NDIS services. Mid 2016: deployment of initial infrastructure (MPLS connection, L3 switch gear, ESXi host running Windows Server 2016 for printing services). Background (cont.): staff numbers grew. We hit capacity constraints on the managed MPLS network. An offloading guest was added to the ESXi host so that VPN traffic could be offloaded from the main network using a cheaply available ISP internet connection. Problem: taking stock of the lessons learned in the first phase, we needed to come up with a reproducible device. The device was required to be durable in harsh conditions. Budget constraints/cost savings. Licensing model. Phase 2 was already being negotiated, so a solution was required quickly. Concept: bhyve [FreeBSD] was working extremely well in testing. Excellent hardware support. Liberally licensed. OpenZFS. Simplistic. Small footprint for a type 2 hypervisor. Hardware discovery phase: FreeBSD; required virtualisation components in CPU. Concept (cont.)

bhyve - Improvements to Virtual Machine State Save and Restore

Darius Mihai and Mihai Carabas, University POLITEHNICA of Bucharest, Splaiul Independenței 313, Bucharest, Romania, 060042. Abstract: As more complex tasks are delegated to distributed servers, virtual machine hypervisors need to adapt and provide features that allow redundancy and load balancing. One such mechanism is the virtual machine save and restore through system snapshots. A snapshot should allow the complete restoration of the state that the virtual machine was in when the snapshot was created. Since the snapshot should encapsulate the entire state of the virtualized system, the guest system should not be able to differentiate between the moment a snapshot was created and the moment when the system was restored, regardless of how much real time has passed between the two events. This paper will present how the time management and block devices are saved and restored for bhyve, FreeBSD’s virtual machine hypervisor.

Regardless of their exact function, a periodic task is a routine that will have to be called at (or as close as possible to) a set interval. For example, the basic Unix command sleep can be used to perform an operation every N seconds if sleep $N is called in a script loop. Since it is safe to assume that all modern processors have hardware timekeeping components implemented, sleep will request from the operating system that a software timer (i.e., one that is implemented by the operating system as an abstraction [1]) be set for N seconds in the future and will yield the processor, without

In PDF Format

Arranging Your Virtual Network on FreeBSD. Michael Gmelin. January 2020. 2020-01-08 (final). CC BY 4.0. Contents: Introduction (Document Conventions; License). Plain Jails (Plain Jails Using Inherited IP Configuration; Plain Jails Using a Dedicated IP Address; Plain Jails Using a VLAN IP Address; Plain Jails Using a Loopback IP Address; Adding Outbound NAT for Public Traffic; Running a Service and Redirecting Traffic to It). VNET Jails and bhyve VMs (VNET Jails Using sysutils/pot; VNET Jails Using sysutils/iocage; Managing Bridges; Adding bhyve VMs and DHCP to the Mix; Preventing Traffic Between VNET Jails/VMs; Firewalling Inside VNET Jails/VMs). VXLAN (VXLAN Example Overview; Gateway Configuration; Jailhost-a: Network Configuration, VM Configuration, Jail Configuration; Jailhost-b: Network Configuration, Plain Jail Configuration, Network Switch Setup, VNET Jail Configuration, VM Configuration; VXLAN Multicast Troubleshooting). Conclusion and Further Reading. Introduction: Modern FreeBSD offers a range of virtualization options, from the traditional jail environment sharing the network stack with the host operating system, through VNET jails, which allow each jail to have its own network stack, to bhyve virtual machines running their own kernels/operating systems. Depending on individual requirements, there are different ways to configure the virtual network. Jail and VM management tools can ease the process by abstracting away (at least some of) the underlying complexities.