Information and Collaboration in the Storage Stack

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Twenty Years of Berkeley Unix : from AT&T-Owned to Freely

Twenty Years of Berkeley Unix: From AT&T-Owned to Freely Redistributable. Marshall Kirk McKusick. Early History: Ken Thompson and Dennis Ritchie presented the first Unix paper at the Symposium on Operating Systems Principles at Purdue University in November 1973. Professor Bob Fabry, of the University of California at Berkeley, was in attendance and immediately became interested in obtaining a copy of the system to experiment with at Berkeley. At the time, Berkeley had only large mainframe computer systems doing batch processing, so the first order of business was to get a PDP-11/45 suitable for running with the then-current Version 4 of Unix. The Computer Science Department at Berkeley, together with the Mathematics Department and the Statistics Department, were able to jointly purchase a PDP-11/45. In January 1974, a Version 4 tape was delivered and Unix was installed by graduate student Keith Standiford. Although Ken Thompson at Purdue was not involved in the installation at Berkeley as he had been for most systems up to that time, his expertise was soon needed to determine the cause of several strange system crashes. Because Berkeley had only a 300-baud acoustic-coupled modem without auto answer capability, Thompson would call Standiford in the machine room and have him insert the phone into the modem; in this way Thompson was able to remotely debug crash dumps from New Jersey. Many of the crashes were caused by the disk controller's inability to reliably do overlapped seeks, contrary to the documentation. Berkeley's 11/45 was among the first systems that Thompson had encountered that had two disks on the same controller! Thompson's remote debugging was the first example of the cooperation that sprang up between Berkeley and Bell Labs.

The Release Engineering of FreeBSD 4.4

The Release Engineering of FreeBSD 4.4. Murray Stokely [email protected] Wind River Systems.

Abstract: This paper describes the approach used by the FreeBSD release engineering team to make production-quality releases of the FreeBSD operating system. It details the methodology used for the release of FreeBSD 4.4 and describes the tools available for those interested in producing customized FreeBSD releases for corporate rollouts or commercial productization.

1 Introduction: The development of FreeBSD is a very open process. FreeBSD is comprised of contributions from thousands of people around the world. The FreeBSD Project provides anonymous CVS[1] access to the general public so that others can have access to log messages, diffs between development branches, and other productivity enhancements that formal source code management provides.

[...] different pace, and with the general assumption that they have first gone into FreeBSD-CURRENT and have been thoroughly tested by our user community. In the interim period between releases, nightly snapshots are built automatically by the FreeBSD Project build machines and made available for download from ftp://stable.FreeBSD.org. The widespread availability of binary release snapshots, and the tendency of our user community to keep up with -STABLE development with CVSup and “make world”[8] helps to keep FreeBSD-STABLE in a very reliable condition even before the quality assurance activities ramp up pending a major release. Bug reports and feature requests are continuously submitted by users throughout the release cycle. Problem reports are entered into our GNATS[9] database through email, the send-pr(1) application, or via a web-based form. In addition to the multitude of different technical mailing lists about FreeBSD, the FreeBSD quality-assurance mail-

DVCS or a New Way to Use Version Control Systems for FreeBSD

Brief history of VCS, FreeBSD context & figures, Is Arch/baz suited for FreeBSD?, Mercurial to the rescue, New processes & policies needed, Conclusions. DVCS or a new way to use Version Control Systems for FreeBSD. Ollivier ROBERT <[email protected]> BSDCan 2006, Ottawa, Canada, May, 12-13th, 2006.

Agenda: 1 Brief history of VCS; 2 FreeBSD context & figures; 3 Is Arch/baz suited for FreeBSD?; 4 Mercurial to the rescue; 5 New processes & policies needed; 6 Conclusions.

The ancestors: SCCS, RCS. File-oriented. Use a subdirectory to store deltas and metadata. Use lock-based architecture. Support shared developments through NFS (fragile). SCCS is proprietary (System V), RCS is Open Source; a SCCS clone exists: CSSC. You can have a central repository with symlinks (RCS).

CVS, the de facto VCS for the free world. Initially written as shell wrappers over RCS, then rewritten in C. Centralised server. Easy UI. Use sandboxes to avoid locking. Simple 3-way merges. Can be replicated through CVSup or even rsync. Extensive documentation (papers, websites, books). Free software and used everywhere (SourceForge for example).

CVS annoyances and flaws. BUT..

Everything You Need to Know About OpenJDK's Move to Git and GitHub

Everything you need to know about OpenJDK’s move to Git and GitHub. The move from Mercurial to Git provided an opportunity to consolidate the source code repositories. By Ian Darwin, May 14, 2021. Have you ever built your own Java Development Kit from source? Most end users of the JDK will not need to build their own JDK from the Oracle source code. I’ve needed to do that only a few times when I was running on the OpenBSD UNIX-like system, which is not one of the three supported platforms. Sure, you might want to build your own JDK to try out a new feature that you think should be added to Java. You might choose to build from source to be sure you are running a more trustworthy binary. Having the complete source code readily available, and now in a more commonly used download format, means it is easier than ever to build your own JDK. Yes, it’s a better-documented, easily configured process than in the past. But it’s still a bit confusing. The source code for the OpenJDK recently moved from the Mercurial version control system (VCS) to the Git VCS and the GitHub repository system, and that’s probably a good thing.

Berkeley DB from Wikipedia, the Free Encyclopedia

Berkeley DB. From Wikipedia, the free encyclopedia. Original author(s): Margo Seltzer and Keith Bostic of Sleepycat Software; Developer(s): Sleepycat Software, later Oracle Corporation; Initial release: 1994; Stable release: 6.1 / July 10, 2014; Development status: production; Written in: C; Operating system: Unix, Linux, Windows, AIX, Sun Solaris, SCO Unix, Mac OS; Size: ~1244 kB compiled on Windows x86; Type: Embedded database; License: AGPLv3; Website: http://www.oracle.com/us/products/database/berkeley-db/index.html

Berkeley DB (BDB) is a software library that provides a high-performance embedded database for key/value data. Berkeley DB is written in C with API bindings for C++, C#, PHP, Java, Perl, Python, Ruby, Tcl, Smalltalk, and many other programming languages. BDB stores arbitrary key/data pairs as byte arrays, and supports multiple data items for a single key. Berkeley DB is not a relational database.[1] BDB can support thousands of simultaneous threads of control or concurrent processes manipulating databases as large as 256 terabytes,[2] on a wide variety of operating systems including most Unix-like and Windows systems, and real-time operating systems. "Berkeley DB" is also used as the common brand name for three distinct products: Oracle Berkeley DB, Berkeley DB Java Edition, and Berkeley DB XML. These three products all share a common ancestry and are currently under active development at Oracle Corporation.

Contents: 1 Origin; 2 Architecture; 3 Editions; 4 Programs that use Berkeley DB; 5 Licensing; 5.1 Sleepycat License; 6 References; 7 External links.

Origin: Berkeley DB originated at the University of California, Berkeley as part of BSD, Berkeley's version of the Unix operating system.

Version Control

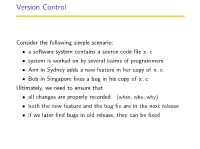

Version Control. Consider the following simple scenario: • a software system contains a source code file x.c • the system is worked on by several teams of programmers • Ann in Sydney adds a new feature in her copy of x.c • Bob in Singapore fixes a bug in his copy of x.c. Ultimately, we need to ensure that • all changes are properly recorded (when, who, why) • both the new feature and the bug fix are in the next release • if we later find bugs in an old release, they can be fixed. Version Control Systems: A version control system allows software developers to: • work simultaneously and cooperatively on a system • document when, who, and why changes were made • discuss and approve changes • recreate old versions of a system when needed • distribute, test, and merge multiple versions of a system. This allows change to be managed/controlled in a systematic way. VCSs also try to minimise resource use in maintaining multiple versions. Unix VCS - Generation 1 (Unix): 1970’s ... SCCS (source code control system) • first version control system • centralized VCS - single central repository • introduced idea of multiple versions via deltas • single user model: lock - modify - unlock • only one user working on a file at a time. 1980’s ... RCS (revision control system) • similar functionality to SCCS (essentially a clean open-source re-write of SCCS) • centralized VCS - single central repository • single user model: lock - modify - unlock • only one user working on a file at a time • still available and in use. Unix VCS - Generation 2 (Unix): 1990 ... CVS (concurrent version system) • centralized VCS - single central repository • locked check-out replaced by copy-modify-merge model • users can work simultaneously and later merge changes • allows remote development essential for open source projects • web-accessible interface promoted wide-dist projects • poor handling of file metadata, renames, links. Early 2000’s ..
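The two editing models named in the excerpt above, lock-modify-unlock and copy-modify-merge, can be contrasted in a short sketch using the Ann and Bob scenario. This is illustrative only: the names `LockingRepo`, `MergingRepo`, and `merge3` are hypothetical and do not correspond to SCCS, RCS, or CVS commands, and the merge is a deliberately naive line-by-line three-way merge that assumes every version has the same number of lines.

```python
class LockingRepo:
    """Lock-modify-unlock: only one user may edit the file at a time."""

    def __init__(self, lines):
        self.lines = list(lines)
        self.locked_by = None

    def checkout(self, user):
        # A second checkout fails until the first user checks in.
        if self.locked_by is not None:
            raise RuntimeError(f"file is locked by {self.locked_by}")
        self.locked_by = user
        return list(self.lines)

    def checkin(self, user, new_lines):
        if self.locked_by != user:
            raise RuntimeError(f"{user} does not hold the lock")
        self.lines = list(new_lines)
        self.locked_by = None


def merge3(base, ours, theirs):
    """Naive three-way merge; handles in-place line edits only."""
    if not (len(base) == len(ours) == len(theirs)):
        raise ValueError("this sketch only handles versions of equal length")
    merged = []
    for b, o, t in zip(base, ours, theirs):
        if o == t or t == b:
            merged.append(o)  # both sides agree, or only our side changed
        elif o == b:
            merged.append(t)  # only the other side changed this line
        else:
            raise ValueError(f"conflict: {o!r} vs {t!r}")
    return merged


class MergingRepo:
    """Copy-modify-merge: users edit private copies, commits are merged."""

    def __init__(self, lines):
        self.lines = list(lines)

    def checkout(self):
        return list(self.lines)

    def commit(self, base, new_lines):
        # Merge the committer's edits with whatever was committed meanwhile.
        self.lines = merge3(base, new_lines, self.lines)


if __name__ == "__main__":
    repo = MergingRepo(["int x;", "int y;", "return 0;"])
    base = repo.checkout()
    ann, bob = list(base), list(base)
    ann[2] = "return feature();"  # Ann adds a feature
    bob[0] = "long x;"            # Bob fixes a bug
    repo.commit(base, ann)
    repo.commit(base, bob)
    print(repo.lines)
```

With `LockingRepo`, Bob's `checkout` would raise until Ann checks in, which is the serialization the excerpt describes for SCCS and RCS; with `MergingRepo`, both edits land in the final file without either user waiting.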

Copyright © 1992, by the Author(s). All Rights Reserved

Copyright © 1992, by the author(s). All rights reserved. Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission.

AN IMPLEMENTATION OF A LOG-STRUCTURED FILE SYSTEM FOR UNIX by Margo Seltzer, Keith Bostic, Marshall Kirk McKusick, and Carl Staelin. Memorandum No. UCB/ERL M92/134, 3 December 1992. ELECTRONICS RESEARCH LABORATORY, College of Engineering, University of California, Berkeley 94720.

An Implementation of a Log-Structured File System for UNIX. Margo Seltzer, University of California, Berkeley; Keith Bostic, University of California, Berkeley; Marshall Kirk McKusick, University of California, Berkeley; Carl Staelin, Hewlett-Packard Laboratories.

ABSTRACT: Research results [ROSE91] demonstrate that a log-structured file system (LFS) offers the potential for dramatically improved write performance, faster recovery time, and faster file creation and deletion than traditional UNIX file systems. This paper presents a redesign and implementation of the Sprite [ROSE91] log-structured file system that is more robust and integrated into the vnode interface [KLEI86].

Chapter 1 - Describing Open Source & Free Software Communities

PROTECTING THE VIRTUAL COMMONS. Self-organizing open source and free software communities and innovative intellectual property regimes. Ruben van Wendel de Joode, Hans de Bruijn, Michel van Eeten. Delft, September 2002. Draft version (official version to be published by Asser Press in 2003). For questions, suggestions or comments please contact: Ruben van Wendel de Joode at [email protected] © 2002 Ruben van Wendel de Joode, Hans de Bruijn and Michel van Eeten.

Table of contents: ACKNOWLEDGEMENTS; INTRODUCTION; Questions guiding the research; Structure of the report; CHAPTER 1 - DESCRIBING OPEN SOURCE & FREE SOFTWARE COMMUNITIES; 1.1 - INTRODUCTION; 1.2 - POPULARITY OF OPEN SOURCE AND FREE SOFTWARE; 1.3 - HISTORICAL DEVELOPMENT OF OPENNESS & FREEDOM; The origin of the Internet; Richard

Contributors to the FreeBSD Project, Version: 43184 2013-11-13 by hrs

Contributors to the FreeBSD Project. Version: 43184 2013-11-13 by hrs. Abstract: This article lists the organizations and people who have contributed to FreeBSD. Table of contents: 1. Donors gallery; 2. The core team; 3. The FreeBSD developers; 4. The FreeBSD documentation project; 5. Who is responsible for what; 6. List of "Core Team" alumni; 7. List of former developers; 8. List of contributed software; 9. Additional FreeBSD contributors; 10. 386BSD patch kit contributors; Index

Downloaded for Free From

The Design of the NetBSD I/O Subsystems. SungWon Chung, Pusan National University. This book is dedicated to the open-source code developers in the NetBSD community. The original copy of this publication is available in an electronic form and it can be downloaded for free from http://arXiv.org. Copyright (c) 2002 by SungWon Chung. For non-commercial and personal use, this publication may be reproduced, stored in a retrieval system, or transmitted in any form by any means, electronic, mechanical, photocopying, recording or otherwise. For commercial use, no part of this publication can be reproduced by any means without the prior written permission of the author. NetBSD is the registered trademark of The NetBSD Foundation, Inc.

Contents: Preface; I Basics to Learn Filesystem; 1 Welcome to the World of Kernel!; 1.1 How Does a System Call Dive into Kernel from User Program?; 1.1.1 Example: write system call; 1.1.2 Ultra SPARC 0x7c CPU Trap; 1.1.3 Jump to the File Descriptor Layer; 1.1.4 Arriving at Virtual Filesystem Operations; 1.2 General Data Structures in Kernel such as List, Hash, Queue, ...; 1.2.1 Linked-Lists; 1.2.2 Tail Queues; 1.2.3 Hash; 1.3 Waiting and Sleeping in Kernel; 1.4 Kernel Lock Manager; 1.4.1 simplelock and lock; 1.4.2 Simplelock Interfaces; 1.4.3 Lock Interfaces; 1.5 Kernel Memory Allocation; 1.6 Resource Pool Manager

Designing a Fast Index Format for Git

Free University of Bolzano - Bozen. Bachelor Thesis: Designing a Fast Index Format for Git. Author: Thomas Gummerer. Supervisor: Dr. Johann Gamper. A thesis submitted in fulfillment of the requirements for the degree of Bachelor in Computer Science in July 2013.

Contents: List of Figures; Abstract; 1 Introduction; 1.1 Motivation; 1.2 Problem Description and Objectives; 1.3 Organization of the Thesis; 2 Related Work; 2.1 Version Control Systems; 2.2 Types of Version Control Systems; 2.3 Git; 3 A New Index for Git; 3.1 The Git Index File; 3.2 Index v2; 3.3 The New Index File Format v5; 4 Implementation; 4.1 Convert Index; 4.2 Read Index; 4.3 Internal Implementation; 4.4 New In-memory Format; 4.5 Index Reading API; 4.6 Writing the New Index Format; 5 Discussion; 5.1 Alternative Solutions; 5.1.1 B-tree Format; 5.1.2 Append-only Data Structure; 5.1.3 Database Format; 5.1.4 Padded Structure; 5.2 Discussion; 6

SCO OpenServer Handbook Changing the Default Editor

SCO OpenServer Handbook: How to configure and start using an SCO OpenServer system. 1983-2003 Caldera International, Inc. All rights reserved. This publication is protected under copyright laws and international treaties. 1976-2001 The Santa Cruz Operation, Inc.; 1989-1994 Acer Incorporated; 1989-1994 Acer America Corporation; 1990-1994 Adaptec, Inc.; 1993 Advanced Micro Devices, Inc.; 1990 Altos Computer Systems; 1992-1994 American Power Conversion, Inc.; 1988 Archive Corporation; 1990 ATI Technologies, Inc.; 1976-1992 AT&T; 1992-1994 AT&T Global Information Solutions Company; 1993 Berkeley Network Software Consortium; 1985-1986 Bigelow & Holmes; 1988-1991 Carnegie Mellon University; 1989-1990 Cipher Data Products, Inc.; 1985-1992 Compaq Computer Corporation; 1987-1994 Computer Associates, Inc.; 1986-1987 Convergent Technologies, Inc.; 1990-1993 Cornell University; 1985-1994 Corollary, Inc.; 1990-1994 Distributed Processing Technology; 1991 D.L.S. Associates; 1990 Free Software Foundation, Inc.; 1989-1991 Future Domain Corporation; 1994 Isogon Corporation; 1991 Hewlett-Packard Company; 1994 IBM Corporation; 1990-1993 Intel Corporation; 1989 Irwin Magnetic Systems, Inc.; 1988-1991 JSB Computer Systems Ltd.; 1989-1994 Dirk Koeppen EDV-Beratungs-GmbH; 1989-1991 Massachusetts Institute of Technology; 1985-1992 Metagraphics Software Corporation; 1980-1994 Microsoft Corporation; 1984-1989 Mouse Systems Corporation; 1989 Multi-Tech Systems, Inc.; 1991 National Semiconductor Corporation; 1990 NEC Technologies,