Mobile Malware Detection Based on Energy Fingerprints — a Dead End?

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Detecting Display Energy Hotspots in Android Apps

Mian Wan, Yuchen Jin, Ding Li and William G. J. Halfond. University of Southern California, Los Angeles, California, USA. Email: fmianwan, yuchenji, dingli, [email protected]

Abstract: Energy consumption of mobile apps has become an important consideration as the underlying devices are constrained by battery capacity. Display represents a significant portion of an app’s energy consumption. However, developers lack techniques to identify the user interfaces in their apps for which energy needs to be improved. In this paper, we present a technique for detecting display energy hotspots – user interfaces of a mobile app whose energy consumption is greater than optimal. Our technique leverages display power modeling and automated display transformation techniques to detect these hotspots and prioritize them for developers. In an evaluation on a set of popular Android apps, our technique was very accurate in both predicting energy consumption and ranking the display energy hotspots.

[...] is based on the display’s brightness. This brightness, in turn, is controlled by either the end user or by the OS performing opportunistic dimming of the display. However, most modern smartphones, such as the Samsung Galaxy SII, are powered by a new generation of screen technology, the OLED. For this type of screen, brightness is still important [10]; however, the colors that are displayed also become important. Due to the underlying technology, this type of screen consumes less energy when displaying darker colors (e.g., black) than lighter ones (e.g., white). The use of these screens means there are enormous energy savings to be realized at the software level [...]

Sprint Complete

Sprint Complete Equipment Replacement Insurance Program (ERP) Equipment Service and Repair Service Contract Program (ESRP)

Effective July 2021

This device schedule is updated regularly to include new models. Check this document any time your equipment changes and before visiting an authorized repair center for service. If you are not certain of the model of your phone, refer to your original receipt; the model may also be printed on the white label located under the battery of your device. Repair eligibility is subject to change.

Models Eligible for $29 Cracked Screen Repair*

• Apple: iPhone 5, iPhone 5C, iPhone 5S, iPhone 6, iPhone 6 Plus, iPhone 6S, iPhone 6S Plus, iPhone SE, iPhone SE2, iPhone 7, iPhone 7 Plus, iPhone 8, iPhone 8 Plus, iPhone X, iPhone XS, iPhone XS Max, iPhone XR, iPhone 11, iPhone 11 Pro, iPhone 11 Pro Max, iPhone 12, iPhone 12 Pro, iPhone 12 Pro Max, iPhone 12 Mini
• Samsung: GS5, GS6, GS6 Edge, GS6 Edge+, GS7, GS7 Edge, GS8, GS8+, GS9, GS9+, A50, A51, Note 4, Note 5, Note 8, Note 9, Note 20 5G, Note 20 Ultra 5G, GS10, GS10e, GS10+, GS10 5G, Note 10, Note 10+, GS20 5G, GS20+ 5G, GS20 Ultra 5G, Galaxy S20 FE 5G, GS21 5G, GS21+ 5G, GS21 Ultra 5G
• HTC: One M8, One E8, One M9, One M10, Bolt, HTC U11
• LG: G Flex, G Flex II, G Stylo, Stylo 2, Stylo 3, Stylo 6, G7 ThinQ, G8 ThinQ, G8X ThinQ, V60 ThinQ 5G Dual Screen, Velvet 5G, G3 Vigor, G4, G5, G6, V20, X power, V40 ThinQ, V50 ThinQ, V60 ThinQ 5G

Monthly Charge, Deductible/Service Fee, and Repair Schedule

Device Support for Beacon Transmission with Android 5+

The list below identifies the Android device builds that are able to transmit as beacons. The ability to transmit as a beacon requires Bluetooth LE advertisement capability, which may or may not be supported by a device’s firmware.

Acer T01 LMY47V 5.1.1 yes Amazon KFFOWI LVY48F 5.1.1 yes archos Archos 80d Xenon LMY47I 5.1 yes asus ASUS_T00N MMB29P 6.0.1 yes asus ASUS_X008D MRA58K 6.0 yes asus ASUS_Z008D LRX21V 5.0 yes asus ASUS_Z00AD LRX21V 5.0 yes asus ASUS_Z00AD MMB29P 6.0.1 yes asus ASUS_Z00ED LRX22G 5.0.2 yes asus ASUS_Z00ED MMB29P 6.0.1 yes asus ASUS_Z00LD LRX22G 5.0.2 yes asus ASUS_Z00LD MMB29P 6.0.1 yes asus ASUS_Z00UD MMB29P 6.0.1 yes asus ASUS_Z00VD LMY47I 5.1 yes asus ASUS_Z010D MMB29P 6.0.1 yes asus ASUS_Z011D LRX22G 5.0.2 yes asus ASUS_Z016D MXB48T 6.0.1 yes asus ASUS_Z017DA MMB29P 6.0.1 yes asus ASUS_Z017DA NRD90M 7.0 yes asus ASUS_Z017DB MMB29P 6.0.1 yes asus ASUS_Z017D MMB29P 6.0.1 yes asus P008 MMB29M 6.0.1 yes asus P024 LRX22G 5.0.2 yes blackberry STV100-3 MMB29M 6.0.1 yes BLU BLU STUDIO ONE LMY47D 5.1 yes BLUBOO XFire LMY47D 5.1 yes BLUBOO Xtouch LMY47D 5.1 yes bq Aquaris E5 HD LRX21M 5.0 yes ZBXCNCU5801712 Coolpad C106-7 291S 6.0.1 yes Coolpad Coolpad 3320A LMY47V 5.1.1 yes Coolpad Coolpad 3622A LMY47V 5.1.1 yes CQ CQ-BOX 2.1.0-d158f31 5.1.1 yes CQ CQ-BOX 2.1.0-f9c6a47 5.1.1 yes DANY TECHNOLOGIES HK LTD Genius Talk T460 LMY47I 5.1 yes DOOGEE F5 LMY47D 5.1 yes DOOGEE X5 LMY47I 5.1 yes DOOGEE X5max MRA58K 6.0 yes elephone Elephone P7000 LRX21M 5.0 yes Elephone P8000

Terraillon Wellness Coach Supported Devices

Warning

This document lists the smartphones compatible with the download of the Wellness Coach app from the App Store (Apple) and Play Store (Android). Some models have been tested by Terraillon to check the compatibility and smooth operation of the Wellness Coach app. However, many models have not been tested. Therefore, Terraillon cannot guarantee the proper functioning of the Wellness Coach application on these models. If your smartphone model does not appear in the list, please send an email to [email protected] giving us the model of your smartphone so that we can activate it if the application store allows it.

| BRAND | MODEL NAME | MANUFACTURER MODEL NAME | OS REQUIRED |
|---|---|---|---|
| ACER | Liquid Z530 | acer_T02 | Android 4.3+ |
| ACER | Liquid Jade S | acer_S56 | Android 4.3+ |
| ACER | Liquid E700 | acer_e39 | Android 4.3+ |
| ACER | Liquid Z630 | acer_t03 | Android 4.3+ |
| ACER | Liquid Z320 | T012 | Android 4.3+ |
| ARCHOS | 45 Helium 4G | a45he | Android 4.3+ |
| ARCHOS | 50 Helium 4G | a50he | Android 4.3+ |
| ARCHOS | Archos 45b Helium | ac45bhe | Android 4.3+ |
| ARCHOS | ARCHOS 50c Helium | ac50che | Android 4.3+ |
| APPLE | iPhone 4S | | iOS8+ |
| APPLE | iPhone 5 | | iOS8+ |
| APPLE | iPhone 5C | | iOS8+ |
| APPLE | iPhone 5S | | iOS8+ |
| APPLE | iPhone 6 | | iOS8+ |
| APPLE | iPhone 6 Plus | | iOS8+ |
| APPLE | iPhone 6S | | iOS8+ |
| APPLE | iPhone 6S Plus | | iOS8+ |
| APPLE | iPad Mini 1 | | iOS8+ |
| APPLE | iPad Mini 2 | | iOS8+ |
| APPLE | iPad Mini 3 | | iOS8+ |
| APPLE | iPad Mini 4 | | iOS8+ |
| APPLE | iPad 3 | | iOS8+ |
| APPLE | iPad 4 | | iOS8+ |
| APPLE | iPad Air | | iOS8+ |

uDynamo Compatibility List

Reader Manuf. Device Name Alt. Model Info Model Info OS OS Version Carrier Date Added Date Tested Type

iDynamo 5 Apple iPad Air 2 Lightning N/A iOS N/A N/A Tablet iDynamo 5 Apple iPad Air* Lightning N/A iOS N/A N/A Tablet iDynamo 5 Apple iPad with Retina Display* Lightning N/A iOS N/A N/A Tablet iDynamo 5 Apple iPad mini 3 Lightning N/A iOS N/A N/A Tablet iDynamo 5 Apple iPad mini 2 Lightning N/A iOS N/A N/A Tablet iDynamo 5 Apple iPad mini* Lightning N/A iOS N/A N/A Tablet iDynamo 5 Apple iPhone 5c* Lightning N/A iOS N/A N/A Phone iDynamo 5 Apple iPhone 5s* Lightning N/A iOS N/A N/A Phone iDynamo 5 Apple iPhone 5* Lightning N/A iOS N/A N/A Phone iDynamo 5 Apple iPod touch (5th* generation) Lightning N/A iOS N/A N/A iPod iDynamo 5 Apple iPhone 6* Lightning N/A iOS N/A N/A Phone iDynamo 5 Apple iPhone 6 Plus* Lightning N/A iOS N/A N/A Phone iDynamo Apple iPad (3rd generation) 30 PIN N/A iOS N/A N/A Tablet iDynamo Apple iPad 2 30 PIN N/A iOS N/A N/A Tablet iDynamo Apple iPad 30 PIN N/A iOS N/A N/A Tablet iDynamo Apple iPhone 4s 30 PIN N/A iOS N/A N/A Phone iDynamo Apple iPhone 4 30 PIN N/A iOS N/A N/A Phone iDynamo Apple iPhone 3GS 30 PIN N/A iOS N/A N/A Phone iDynamo Apple iPod touch (3rd and 4th generation) 30 PIN N/A iOS N/A N/A iPod uDynamo Acer liquid MT liquid MT Android 2.3.6 101.18 1/24/14 1/24/14 uDynamo Alcatel Alcatel OneTouch Fierce 7024W Android 4.2.2 101.18 3/6/14 3/6/14 uDynamo ALCATEL Megane ALCATEL ONE TOUCH 5020T Android 4.1.2 101.18 8/10/15 8/10/15 uDynamo ALCATEL ALCATEL ONE TOUCH IDOL X ALCATEL

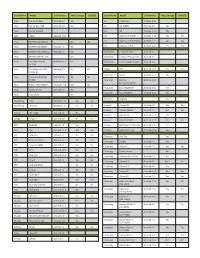

Phone Compatibility

Last Updated: February 14, 2017

• Compatible with iPhone models 4S and above using iOS versions 7 or higher.
• Compatible with phone models using Android versions 4.1 (Jelly Bean) or higher, and that have the following four sensors: Accelerometer, Gyroscope, Magnetometer, GPS/Location Services.
• Phone compatibility information is provided by phone manufacturers and third-party sources. While every attempt is made to ensure the accuracy of this information, this list should only be used as a guide. As phones are consistently introduced to market, this list may not be all inclusive and will be updated as new information is received. Please check your phone for the required sensors and operating system.

Brand Phone Compatible Non-Compatible Acer Acer Iconia Talk S • Acer Acer Jade Primo • Acer Acer Liquid E3 • Acer Acer Liquid E600 • Acer Acer Liquid E700 • Acer Acer Liquid Jade • Acer Acer Liquid Jade 2 • Acer Acer Liquid Jade Primo • Acer Acer Liquid Jade S • Acer Acer Liquid Jade Z • Acer Acer Liquid M220 • Acer Acer Liquid S1 • Acer Acer Liquid S2 • Acer Acer Liquid X1 • Acer Acer Liquid X2 • Acer Acer Liquid Z200 • Acer Acer Liquid Z220 • Acer Acer Liquid Z3 • Acer Acer Liquid Z4 • Acer Acer Liquid Z410 • Acer Acer Liquid Z5 • Acer Acer Liquid Z500 • Acer Acer Liquid Z520 • Acer Acer Liquid Z6 • Acer Acer Liquid Z6 Plus • Acer Acer Liquid Zest • Acer Acer Liquid Zest Plus • Acer Acer Predator 8 • Alcatel Alcatel Fierce • Alcatel Alcatel Fierce 4 • Alcatel Alcatel Flash Plus 2 • Alcatel Alcatel Go Play • Alcatel Alcatel Idol 4 • Alcatel Alcatel Idol 4s • Alcatel Alcatel One Touch Fire C • Alcatel Alcatel One Touch Fire E • Alcatel Alcatel One Touch Fire S •

Sprint Complete Equipment Replacement Insurance Program (ERP) Equipment Service and Repair Service Contract Program (ESRP)

This device schedule is updated regularly to include new models. Check this document any time your equipment changes and before visiting an authorized repair center for service. If you are not certain of the model of your phone, refer to your original receipt; the model may also be printed on the white label located under the battery of your device. Repair eligibility is subject to change.

Models Eligible for $29 Cracked Screen Repair

• Apple: iPhone 5, iPhone 5C, iPhone 5S, iPhone 6, iPhone 6 Plus, iPhone 6S, iPhone 6S Plus, iPhone SE, iPhone 7, iPhone 7 Plus, iPhone 8, iPhone 8 Plus, iPhone X, iPhone XS, iPhone XS Max, iPhone XR
• Samsung: Grand Prime, GS4, GS5, GS6, GS6 Edge, GS6 Edge+, GS7, GS7 Edge, GS8, GS8 Plus, GS9, GS9 Plus, Note 3, Note 4, Note 5, Note 8, Note 9, GS10, GS10e, GS10+
• HTC: One M8, One E8, One M9, One M10, Bolt, HTC U11
• LG: G Flex, G Flex II, G Stylo, Stylo 2, Stylo 3, G7 ThinQ, G8 ThinQ, G3 Vigor, G4, G5, G6, V20, X power, V40 ThinQ

Deductible/Service Fee and Repair Schedule

Monthly Charge Tier 1 Tier 2 Tier 3 Tier 4 Tier 5 Sprint Complete $9 $9 - - - - Sprint Complete (Includes AppleCare® Services for eligible devices) - $15 $15 $15 $19 Equipment Service and Repair Program (ESRP) $8.25 $8.25 $8.25 $8.25 $11 Phone: Insurance Deductibles (ERP) and Service Contract ADH Service Fees during Asurion Administration (ESRP) ADH Cracked Screen Repair (ESRP) $25 $29 $29 $29 $29

ESRP standalone coverage does not include $29 cracked screens.

Mobile POS Self-Certified Solution Providers

THE MASTERCARD MOBILE POS PROGRAM

As the number of Mobile POS solutions increases, it becomes difficult to distinguish between the solutions that are ready to be deployed following MTIP by the acquirer and those still in the development phase. The MasterCard Mobile POS Program allows MasterCard to check if the solution has the relevant industry certifications in place, including but not limited to EMV and PCI certifications. It also allows solution providers to compare their Mobile POS solution(s) against MasterCard Best Practices. The solutions that meet these criteria are registered in our program.

This document lists the detailed information on the registered Mobile POS solutions alphabetically by solution provider. However, for ease of searching, solutions have also been categorized in the first few pages according to their business models, and also to their features and capabilities.

This document lists products (the “Products”) that have been self-certified for compliance with the Mobile POS Best Practices by the named solution providers. Before listing the Products, MasterCard has taken reasonable steps to check whether the Products are appropriately certified by EMVCo LLC and the PCI Security Standards Council. MasterCard has not conducted any additional tests or certification of the products. MasterCard makes no representations or warranties of any kind, express or implied, with respect to the Products or the listing. To the extent possible in applicable law, MasterCard (and its group companies) specifically disclaims all representations and warranties with respect to the Products including but not limited to any and all implied warranties of title, non-infringement, or suitability for any purpose (whether or not MasterCard has been advised, has reason to know, or is otherwise in fact aware of any information) or achievement of any particular result.

Samsung and Google Unveil Galaxy Nexus Smartphone 19 October 2011

Samsung Electronics unveiled its newest Galaxy Nexus smartphone Wednesday, the first to use the latest version of Google's Android operating system. The new phone is seen as the Samsung-Google partnership's answer to Apple's iPhone 4S, which in less than a week on the market has already sold more than 4 million units.

[Photo caption: Google's top mobile executive Andy Rubin, left and J.K. Shin, president and head of mobile communications business from Samsung, hold the new Galaxy Nexus smartphone during the news conference in Hong Kong Wednesday, Oct. 19, 2011. South Korea's Samsung Electronics Co. Ltd on Wednesday unveiled its Galaxy Nexus smartphone, the first to use the latest version of Google's Android operating system. (AP Photo/Kin Cheung)]

[...] smartphones by holding them together, while Face Unlock uses facial recognition technology to activate smartphones, rather than conventional passwords. However, during a demonstration at the Hong Kong unveiling, the Face Unlock feature failed to activate the Galaxy Nexus.

Executives of South Korea's Samsung said the Galaxy Nexus will go on sale in November in the U.S., Europe and Asia, including China and Japan. They did not reveal the new smartphone's price or its sales volume targets.

Samsung and Taiwan's HTC Corp. are the biggest users of the Android platform, which is engaged in a furious competition with Apple's own operating system for market share in the rapidly expanding smartphone sector.

The U.S. International Trade Commission issued an initial ruling Tuesday that Apple's iPhone does not violate four patents owned by HTC, a blow to the Taiwanese company.

Sprint Complete Equipment Replacement Insurance Program (ERP) Equipment Service and Repair Service Contract Program (ESRP)

This device schedule is updated regularly to include new models. Check this document any time your equipment changes and before visiting an authorized repair center for service. If you are not certain of the model of your phone, refer to your original receipt; the model may also be printed on the white label located under the battery of your device. Repair eligibility is subject to change.

Models Eligible for $29 Cracked Screen Repair

• Apple: iPhone 5, iPhone 5C, iPhone 5S, iPhone 6, iPhone 6 Plus, iPhone 6S, iPhone 6S Plus, iPhone SE, iPhone 7, iPhone 7 Plus, iPhone 8, iPhone 8 Plus, iPhone X, iPhone XS, iPhone XS Max, iPhone XR
• Samsung: Grand Prime, GS4, GS5, GS6, GS6 Edge, GS6 Edge+, GS7, GS7 Edge, GS8, GS8 Plus, GS9, GS9 Plus, Note 3, Note 4, Note 5, Note 8, Note 9, GS10, GS10e, GS10+, Galaxy S10 5G
• HTC: One M8, One E8, One M9, One M10, Bolt, HTC U11
• LG: G Flex, G Flex II, G Stylo, Stylo 2, Stylo 3, G7 ThinQ, G8 ThinQ, G3 Vigor, G4, G5, G6, V20, X power, V40 ThinQ, V50 ThinQ

Deductible/Service Fee and Repair Schedule

Monthly Charge Tier 1 Tier 2 Tier 3 Tier 4 Tier 5 Sprint Complete $9 $9 - - - - Sprint Complete (Includes AppleCare® Services for eligible devices) - $15 $15 $15 $19 Equipment Service and Repair Program (ESRP) $8.25 $8.25 $8.25 $8.25 $11 Phone: Insurance Deductibles (ERP) and Service Contract ADH Service Fees during Asurion Administration (ESRP) ADH Cracked Screen Repair (ESRP) $25 $29 $29 $29 $29

ESRP standalone coverage does not include $29 cracked screens.

Selectively Taming Background Android Applications

Selectively Taming Background Android Apps to Improve Battery Lifetime

Marcelo Martins (Brown University), Justin Cappos (New York University), Rodrigo Fonseca (Brown University)

Your Battery and You: Active device, high battery drainage. Matches our battery life expectation.

Your Battery and You: Idle device, expect low battery drain (?). Background activities break low-power harmony. Energy bugs keep the device awake.

Hidden Energy Drain: Pure AOSP + Google Mobile Services

[Figure: battery level (%) versus time (hours) for Pure AOSP and Pure AOSP w/ Google M. Services; panels: Samsung Galaxy Nexus and ASUS MeMO Pad 7; annotations: ~1.8 days, ~8.3 days]

[Figure: Hidden Energy Drain. Power (W) versus timeline (s), 0 to 350 s. Panel "Pure AOSP", legend: AlarmManager. Panel "+ Google Mobile Services", legend: AlarmManager, NetworkLocationService, GCoreFlp, GeofencerStateMachine, NlpCollectorWakeLock, NlpWakeLock, UlrDispatchingService, PendingIntentCallbackService, NlpLocationReceiverService]

What Can We Do Today? Reconfigure apps. Uninstall/Switch apps. Kill apps. Change system settings.

TAMER: Runtime mechanism to rate-limit background events

GOFR OTG Compatibility.Pdf

Brand Name Model O/S Version Mass Storage USB OTG Brand Name Model O/S Version Mass Storage USB OTG Acer Iconia A1-810 Android 4.2 Yes LG LS995 flex Android 4.4.2 Acer Iconia Tab A700 Android 4.0 Yes LG G2 (D802) Android 4.2 Yes Acer Iconia W3-810 LG G3 Android 4.4.2 Yes Acer A510 Android 4.0.4 LG Optimus G (E975) Android 4.1.2 No No Asus MeMO Pad ME301T Yes No LG Optimus G Pro (E986) Android 4.1.2 Yes Yes Asus MeMO Pad ME302 Android 4.2 Yes LG Optimus G Pro 2 Android 4.4.2 Yes Yes Asus MeMO Pad 10 ME102 Android 4.2 Yes Micromax Canvas HD A116 Android 4.1 Asus MeMO Pad ME172V Yes Micromax Canvas 2 Plus A110Q Android 4.2 Asus Transformer Pad Windows 8.1 Yes Micromax Canvas Magnus A117 Android 4.2 TF-100 Asus Transformer Pad TF- Android 4.4 Yes Yes Mogu M5 Android 4.2.1 Yes Yes 103CX-A1 Motorola Xoom Android 3.1+ No Asus Transformer Prime Android 3.0 No No TF101 Motorola Moto G Android 4.4.4 (2nd Generation) Asus Nexus 7 Tab (2013)* Android 4.4.2 Yes2 Yes Motorola Droid RAZR HD Android 4.1.2 Yes Asus Padfone mini Android 4.3 Yes Motorola Droid RAZR M Android 4.1.2 Yes Asus VivoTab RT Yes Pantech Vega R3 Yes BlackBerry Z30 BlackBerry 10 Yes Yes BlackBerry Passport BlackBerry 11 Yes Yes Huawei Ascend D1 Android 4.0 Yes Yes Huawei Ascend Mate2 Android 4.3 Yes1 Yes Fujitsu LTE F-01D Android 3.2 Yes Huawei Ascend P6 Android 4.2.2 Yes Google Nexus 7 Android 4.1+ No Yes Huawei iOcean X7 Elite Android 4.2.1 Yes Yes HTC Butterfly Android 4.2 Yes Huawei MediaPad S7-301u(P) Android 4.0 Yes HTC M8 Android 4.4.2 Yes Yes Huawei MediaPad 7 Lite Android