Tools That Ease Data Collection from the Web: Custom Web Scrapers Are Driving Research — and Collaborations

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

An Overview of the 50 Most Common Web Scraping Tools

Web scraping is the process of using bots to extract content and data from a website. Unlike screen scraping, which only copies pixels displayed on screen, web scraping extracts the underlying code (and with it, stored data) and outputs that information into a designated file format. While legitimate use cases exist for data harvesting, illegal purposes exist as well, including undercutting prices and theft of copyrighted content. Understanding web scraping bots starts with understanding the diverse array of web scraping tools and existing platforms. Following is a high-level overview of the 50 most common web scraping tools and platforms currently available.

50 of the Most Common Web Scraping Tools (name and description)
1. Apache Nutch: Apache Nutch is an extensible and scalable open-source web crawler software project.
2. A-Parser: A-Parser is a multithreaded parser of search engines, site assessment services, keywords and content.
3. Apify: Apify is a Node.js library similar to Scrapy and can be used for scraping libraries in JavaScript.
4. Artoo.js: Artoo.js provides a script that can be run from your browser’s bookmark bar to scrape a website and return the data in JSON format.
5. Blockspring: Blockspring lets users build visualizations from the most innovative blocks developed by engineers within your organization.
6. BotScraper: BotScraper is a tool for advanced web scraping and data extraction services that helps organizations from small and medium-sized businesses.
7. Cheerio: Cheerio is a library that parses HTML and XML documents and allows use of jQuery syntax while working with the downloaded data.

Data and Computer Communications (Eighth Edition)

Eighth Edition
William Stallings
Upper Saddle River, New Jersey 07458
Library of Congress Cataloging-in-Publication Data on File

Vice President and Editorial Director, ECS: Marcia J. Horton
Executive Editor: Tracy Dunkelberger
Assistant Editor: Carole Snyder
Editorial Assistant: Christianna Lee
Executive Managing Editor: Vince O’Brien
Managing Editor: Camille Trentacoste
Production Editor: Rose Kernan
Director of Creative Services: Paul Belfanti
Creative Director: Juan Lopez
Cover Designer: Bruce Kenselaar
Managing Editor, AV Management and Production: Patricia Burns
Art Editor: Gregory Dulles
Director, Image Resource Center: Melinda Reo
Manager, Rights and Permissions: Zina Arabia
Manager, Visual Research: Beth Brenzel
Manager, Cover Visual Research and Permissions: Karen Sanatar
Manufacturing Manager, ESM: Alexis Heydt-Long
Manufacturing Buyer: Lisa McDowell
Executive Marketing Manager: Robin O’Brien
Marketing Assistant: Mack Patterson

©2007 Pearson Education, Inc.
Pearson Prentice Hall
Pearson Education, Inc.
Upper Saddle River, NJ 07458

All rights reserved. No part of this book may be reproduced in any form or by any means, without permission in writing from the publisher. Pearson Prentice Hall™ is a trademark of Pearson Education, Inc. All other trademarks or product names are the property of their respective owners.

The author and publisher of this book have used their best efforts in preparing this book. These efforts include the development, research, and testing of the theories and programs to determine their effectiveness. The author and publisher make no warranty of any kind, expressed or implied, with regard to these programs or the documentation contained in this book. The author and publisher shall not be liable in any event for incidental or consequential damages in connection with, or arising out of, the furnishing, performance, or use of these programs.

Research Data Management Best Practices

Introduction
Planning & Data Management Plans
Naming and Organizing Your Files
Choosing File Formats
Working with Tabular Data
Describing Your Data: Data Dictionaries
Describing Your Project: Citation Metadata
Preparing for Storage and Preservation
Choosing a Repository

Data Management, Analysis Tools, and Analysis Mechanics

Chapter 2: Data Management, Analysis Tools, and Analysis Mechanics

This chapter explores different tools and techniques for handling data for research purposes. This chapter assumes that a research problem statement has been formulated, research hypotheses have been stated, data collection planning has been conducted, and data have been collected from various sources (see Volume I for information and details on these phases of research). This chapter discusses how to combine and manage data streams, and how to use data management tools to produce analytical results that are error free and reproducible, once useful data have been obtained to accomplish the overall research goals and objectives.

Purpose of Data Management

Proper data handling and management is crucial to the success and reproducibility of a statistical analysis. Selection of the appropriate tools and efficient use of these tools can save the researcher numerous hours, and allow other researchers to leverage the products of their work. In addition, as the size of databases in transportation continues to grow, it is becoming increasingly important to invest resources into the management of these data. There are a number of ancillary steps that need to be performed both before and after statistical analysis of data. For example, a database composed of different data streams needs to be matched and integrated into a single database for analysis. In addition, in some cases data must be transformed into the preferred electronic format for a variety of statistical packages. Sometimes, data obtained from “the field” must be cleaned and debugged for input and measurement errors, and reformatted. The following sections discuss considerations for developing an overall data collection, handling, and management plan, and tools necessary for successful implementation of that plan.

Computer Files & Data Storage

STORAGE & FILE CONCEPTS, UTILITIES (Pages 6, 150-158 - Discovering Computers & Microsoft Office 2010)
FALL 2012

I. Computer files – data, information or instructions residing on secondary storage are stored in the form of a file.
   A. Software files are also called program files. Program files (instructions) are created by a computer programmer and generally cannot be modified by a user. It’s important that we not move or delete program files because your computer requires them to perform operations. Program files are also referred to as “executables”.
      1. You can identify a program file by its extension: “.EXE”, “.COM”, “.BAT”, “.DLL”, “.SYS”, or “.INI” (there are others) or a distinct program icon.
   B. Data files - when you select a “save” option while using an application program, you are in essence creating a data file. Users create data files.
      1. File naming conventions refer to the guidelines followed while assigning file names and will vary with the operating system and application in use (see figure 4-1). File names in Windows 7 may be up to 255 characters; you're not allowed to use reserved characters or certain reserved words. File extensions are used to identify the application that was used to create the file and format data in a manner recognized by the source application used to create it.
II. Selecting secondary storage media
   A. There are three types of technologies for storage devices: magnetic, optical, & solid state; there are advantages & disadvantages to each. When selecting a secondary storage device, certain factors should be considered:
      1. Capacity - the capacity of computer storage is expressed in bytes.

File Format Guidelines for Management and Long-Term Retention of Electronic Records

9/10/2012, State Archives of North Carolina

Table of Contents
1. GUIDELINES AND RECOMMENDATIONS
2. DESCRIPTION OF FORMATS RECOMMENDED FOR LONG-TERM RETENTION
2.1 Word Processing Documents
2.1.1 PDF/A-1a (.pdf) (ISO 19005-1 compliant PDF/A)
2.1.2 OpenDocument Text (.odt)
2.1.3 Special Note on Google Docs™
2.2 Plain Text Documents
2.2.1 Plain Text (.txt) US-ASCII or UTF-8 encoding
2.2.2 Comma-separated file (.csv) US-ASCII or UTF-8 encoding
2.2.3 Tab-delimited file (.txt) US-ASCII or UTF-8 encoding
2.3

Overview of Data Format, Data Model and Procedural Metadata to Support IoT

Nakyoung Kim

Scattered IoT ecosystem
• Service and application–dedicated data formats & models
   • Data formats and models have been developed to suit the specific requirements of each industry, service, and application.
   • The current scattered-data ecosystem has been established, and it requires high costs to process and manage data for service and application convergence.
• Need for data integration and aggregation
   • The boundaries between industries are gradually crumbling due to domain convergence.
   • For the future convergence markets of IoT and smart city, data integration and aggregation are important.
Application-dedicated data management

Interoperability through the Web
• Bridging the data
   • Data formats and models have been settled in the current forms over a period to resolve issues at each moment and fit case-by-case requirements.
   • Therefore, it is hardly possible to reformulate or replace the existing data formats and models.
   • Bridging the data formats and models is feasible.
• Connecting via Web
   • A semantic information space for heterogeneous data, platforms, and application domains, Web can provide technologies that support the interoperability of IoT.
   • Enhanced interoperability in IoT ecosystems can be fulfilled with the concepts of Web of Things.
Web Data-driven data management

Annotation and Microdata format
• Structured data for annotation
   • To exploit what the Web offers, IoT data first needs to be structured and annotated just like how the other data is handled on the Web.
• Microdata format
   • Microdata formats refer to structured data markups describing and embedding the meanings of resources on the Web along with their properties and relationships.

Modular Data Storage with Anvil

Mike Mammarella (UCLA), Shant Hovsepian (UCLA), Eddie Kohler (UCLA/Meraki)
[email protected] [email protected] [email protected]
http://www.read.cs.ucla.edu/anvil/

ABSTRACT

Databases have achieved orders-of-magnitude performance improvements by changing the layout of stored data – for instance, by arranging data in columns or compressing it before storage. These improvements have been implemented in monolithic new engines, however, making it difficult to experiment with feature combinations or extensions. We present Anvil, a modular and extensible toolkit for building database back ends. Anvil’s storage modules, called dTables, have much finer granularity than prior work. For example, some dTables specialize in writing data, while others provide optimized read-only formats. This specialization makes both kinds of dTable simple to write and understand. Unifying dTables implement more comprehensive functionality by layering over other dTables – for instance, building a read/write store from read-only tables and a writable journal,

...age strategies and behaviors. We intend Anvil configurations to serve as single-machine back-end storage layers for databases and other structured data management systems. The basic Anvil abstraction is the dTable, an abstract key-value store. Some dTables communicate directly with stable storage, while others layer above storage dTables, transforming their contents. dTables can represent row stores and column stores, but their fine-grained modularity offers database designers more possibilities. For example, a typical Anvil configuration splits a single “table” into several distinct dTables, including a log to absorb writes and read-optimized structures to satisfy uncached queries. This split introduces opportunities for clean extensibility – for example, we present a Bloom filter dTable that can slot above read-optimized stores and improve the performance of nonexistent key lookup.

Mapping Approaches to Data and Data Flows

Report for the G20 Digital Economy Task Force, Saudi Arabia, 2020

www.oecd.org/innovation, www.oecd.org/trade, @OECDinnovation, @OECDtrade, [email protected], [email protected]

This document was prepared by the Organisation for Economic Co-operation and Development (OECD) Directorate for Science, Technology and Innovation (STI) and Trade and Agriculture Directorate (TAD), as an input for the discussions in the G20 Digital Economy Task Force in 2020, under the auspices of the G20 Saudi Arabia Presidency in 2020. The opinions expressed and arguments employed herein do not necessarily represent the official views of the member countries of the OECD or the G20. This document and any map included herein are without prejudice to the status of or sovereignty over any territory, to the delimitation of international frontiers and boundaries and to the name of any territory, city or area. Cover image: Jason Leung on Unsplash. © OECD 2020. The use of this work, whether digital or print, is governed by the Terms and Conditions to be found at http://www.oecd.org/termsandconditions.

Contents
Executive Summary
1. Motivation and aim of the work
2. Context and scene setting
2.1. Data is an increasingly critical resource, generating benefits across society
2.2. The effective use of data boosts productivity and enables economic activity across all sectors
2.3. The data ecosystem value chain is global
2.4. Data sharing enables increasing returns to scale and scope
2.5. Measures that affect data sharing and cross-border data flows can restrict the functioning of markets
2.6.

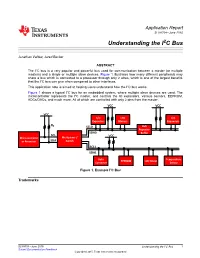

Understanding the I2C Bus

Application Report SLVA704–June 2015
Jonathan Valdez, Jared Becker
Copyright © 2015, Texas Instruments Incorporated

ABSTRACT

The I2C bus is a very popular and powerful bus used for communication between a master (or multiple masters) and a single or multiple slave devices. Figure 1 illustrates how many different peripherals may share a bus which is connected to a processor through only 2 wires, which is one of the largest benefits that the I2C bus can give when compared to other interfaces. This application note is aimed at helping users understand how the I2C bus works. Figure 1 shows a typical I2C bus for an embedded system, where multiple slave devices are used. The microcontroller represents the I2C master, and controls the IO expanders, various sensors, EEPROM, ADCs/DACs, and much more, all of which are controlled with only 2 pins from the master.

Figure 1. Example I2C Bus

1 Electrical Characteristics

I2C uses an open-drain/open-collector with an input buffer on the same line, which allows a single data line to be used for bidirectional data flow.

1.1 Open-Drain for Bidirectional Communication

Open-drain refers to a type of output which can either pull the bus down to a voltage (ground, in most cases), or "release" the bus and let it be pulled up by a pull-up resistor.

Data Protection Lifecycle

JHSPH is committed to protecting all data that has been created by its faculty, staff, students, and collaborators, and all data that has been entrusted to us. Data owners are accountable for the protection of data. Responsibility can be delegated to custodians, but accountability remains with data owners. Protection strategies should address the Integrity, Availability, and Confidentiality of data. Data protection must extend to every stage of the data lifecycle. Risks associated with data cannot be eliminated. The goal of data protection is to mitigate risk using appropriate controls until the risk has been reduced to a level acceptable to the data owner.

While developing a data security plan, please reference the “Data LifeCycle Key Considerations” to ensure that all aspects of data protection have been addressed. Several of the Data Lifecycle Key Considerations reference a common set of reasonable controls. To avoid duplication, these recommended minimum controls are listed here…

Reasonable Controls
• Data is encrypted in transit (Here is a great explanation of how encryption works… https://www.youtube.com/watch?v=ZghMPWGXexs)
• Data is encrypted while “at rest” (on a storage device)
• Security patches and updates are routinely applied to computing and storage devices.
• Devices have access controls so that…
   o Each person accessing the device is uniquely identified (username)
   o Passwords are sufficiently strong to prevent compromise
   o All access is logged and recorded
   o Unauthorized access is prevented
   o Approved access list is reviewed periodically for correctness

Data Lifecycle Key Considerations

Collection
• Ensure that there are reasonable controls on the device(s) that are being used for collection or data origination.

Data Mining for the Masses

Dr. Matthew North
A Global Text Project Book
This book is available on Amazon.com.
© 2012 Dr. Matthew A. North. This book is licensed under a Creative Commons Attribution 3.0 License. All rights reserved.
ISBN: 0615684378
ISBN-13: 978-0615684376

DEDICATION
This book is gratefully dedicated to Dr. Charles Hannon, who gave me the chance to become a college professor and then challenged me to learn how to teach data mining to the masses.

Table of Contents
Dedication
Table of Contents
Acknowledgements
SECTION ONE: Data Mining Basics
Chapter One: Introduction to Data Mining and CRISP-DM
Introduction
A Note About Tools