Using Python for Improved Productivity in HPC and Data Science Applications: the Time Is Now

Recommended publications

Alternatives to Python: Julia

Crossing Language Barriers with Julia, SciPy, and IPython. Steven G. Johnson, MIT Applied Mathematics. Where I’m coming from… [ google “Steven Johnson MIT” ] Computational software you may know… … mainly C/C++ libraries & software … Nanophotonics … often with Python interfaces … (& Matlab & Scheme & …) jdj.mit.edu/nlopt www.fftw.org jdj.mit.edu/meep erf(z) (and erfc, erfi, …) in SciPy 0.12+ & other EM simulators… jdj.mit.edu/book Confession: I’ve used Python’s internal C API more than I’ve coded in Python… A new programming language? Viral Shah, Jeff Bezanson, Alan Edelman, Stefan Karpinski: julialang.org [begun 2009, “0.1” in 2013, ~20k commits] [ 17+ developers with 100+ commits ] First reaction: You’re doomed. [ usual fate of all new languages ] … subsequently: … probably doomed … still might be doomed but, in the meantime, I’m having fun with it… … and it solves a real problem with technical computing in high-level languages. The “Two-Language” Problem: Want a high-level language that you can work with interactively = easy development, prototyping, exploration ⇒ dynamically typed language. Plenty to choose from: Python, Matlab / Octave, R, Scilab, … (& some of us even like Scheme / Guile). Historically, can’t write performance-critical code (“inner loops”) in these languages… have to switch to C/Fortran/… (static). [ e.g. SciPy git master is ~70% C/C++/Fortran ] Workable, but Python → Python+C = a huge jump in complexity. Just vectorize your code? = rely on mature external libraries, operating on large blocks of data, for performance-critical code. Good advice! But… • Someone has to write those libraries. • Eventually that person may be you.
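The "just vectorize your code" advice in the excerpt above can be sketched with the standard library alone. The example below is only an illustration, not code from the talk: the function names `dot_loop` and `dot_builtin` are hypothetical, and `math.fsum` with `map` stands in for the kind of block-at-a-time library call (for instance a NumPy routine) that the slides have in mind.

```python
import math
import operator


def dot_loop(xs, ys):
    """Dot product with an explicit Python-level inner loop."""
    total = 0.0
    for x, y in zip(xs, ys):
        total += x * y
    return total


def dot_builtin(xs, ys):
    """Same dot product, with the iteration driven by C-implemented built-ins."""
    # map() and math.fsum() run their loops inside the interpreter's C code,
    # so no Python bytecode executes per element (assumption: CPython).
    return math.fsum(map(operator.mul, xs, ys))


# Hypothetical sample data: the products are 0, 1, 2, ..., 999.
xs = [0.5 * i for i in range(1000)]
ys = [2.0 for _ in range(1000)]

loop_result = dot_loop(xs, ys)
builtin_result = dot_builtin(xs, ys)
print(loop_result, builtin_result)
```

Both functions compute the same value; the second simply hands the inner loop to library code, which is the trade the excerpt describes. The catch the slides point out still applies: someone has to write that library code.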

Data Visualization in Python

Data visualization in Python, Day 2
A variety of packages and philosophies
• (today) matplotlib: http://matplotlib.org/
  – Gallery: http://matplotlib.org/gallery.html
  – Frequently used commands: http://matplotlib.org/api/pyplot_summary.html
• Seaborn: http://stanford.edu/~mwaskom/software/seaborn/
• ggplot:
  – R version: http://docs.ggplot2.org/current/
  – Python port: http://ggplot.yhathq.com/
• Bokeh (live plots in your browser)
  – http://bokeh.pydata.org/en/latest/
Biocomputing Bootcamp 2017
Matplotlib
• Gallery: http://matplotlib.org/gallery.html
• Top commands: http://matplotlib.org/api/pyplot_summary.html
• Provides the "pylab" API, which mimics MATLAB
• Many different graph types and options, some obscure
• Resulting plots are represented by Python objects, from the entire figure down to individual points/lines
• Large API allows any aspect to be tweaked
• Lengthy coding is sometimes required to make a plot "just so"
Seaborn
• https://stanford.edu/~mwaskom/software/seaborn/
• Implements more complex plot types
  – Joint points, clustergrams, fitted linear models
• Uses matplotlib "under the hood"
Others
• ggplot:
  – (Original) R version: http://docs.ggplot2.org/current/
  – A recent Python port: http://ggplot.yhathq.com/
  – Elegant syntax for compactly specifying plots
  – but they can be hard to tweak
  – We'll discuss this on the R side tomorrow; the basics of both work similarly.
• Bokeh
  – Live, clickable plots in your browser!
  – http://bokeh.pydata.org/en/latest/

A Politico-Social History of Algol (With a Chronology in the Form of a Log Book)

A Politico-Social History of Algol (With a Chronology in the Form of a Log Book) R. W. BEMER Introduction This is an admittedly fragmentary chronicle of events in the development of the algorithmic language ALGOL. Nevertheless, it seems pertinent, while we await the advent of a technical and conceptual history, to outline the matrix of forces which shaped that history in a political and social sense. Perhaps the author's role is only that of recorder of visible events, rather than the complex interplay of ideas which have made ALGOL the force it is in the computational world. It is true, as Professor Ershov stated in his review of a draft of the present work, that "the reading of this history, rich in curious details, nevertheless does not enable the beginner to understand why ALGOL, with a history that would seem more disappointing than triumphant, changed the face of current programming". I can only state that the time scale and my own lesser competence do not allow the tracing of conceptual development in requisite detail. Books are sure to follow in this area, particularly one by Knuth. A further defect in the present work is the relatively lesser availability of European input to the log, although I could claim better access than many in the U.S.A. This is regrettable in view of the relatively stronger support given to ALGOL in Europe. Perhaps this calmer acceptance had the effect of reducing the number of significant entries for a log such as this. Following a brief view of the pattern of events come the entries of the chronology, or log, numbered for reference in the text.

IPython: A System for Interactive Scientific Computing

PYTHON: BATTERIES INCLUDED. IPython: A System for Interactive Scientific Computing. Python offers basic facilities for interactive work and a comprehensive library on top of which more sophisticated systems can be built. The IPython project provides an enhanced interactive environment that includes, among other features, support for data visualization and facilities for distributed and parallel computation.

The backbone of scientific computing is mostly a collection of high-performance code written in Fortran, C, and C++ that typically runs in batch mode on large systems, clusters, and supercomputers. However, over the past decade, high-level environments that integrate easy-to-use interpreted languages, comprehensive numerical libraries, and visualization facilities have become extremely popular in this field. As hardware becomes faster, the critical bottleneck in scientific computing isn’t always the computer’s processing time; the scientist’s time is also a consideration. For this reason, systems that allow rapid algorithmic exploration, data analysis, and visualization have become a staple of daily scientific work.

All these systems offer an interactive command line in which code can be run immediately, without having to go through the traditional edit/compile/execute cycle. This flexible style matches well the spirit of computing in a scientific context, in which determining what computations must be performed next often requires significant work. An interactive environment lets scientists look at data, test new ideas, combine algorithmic approaches, and evaluate their outcome directly. This process might lead to a final result, or it might clarify how they need to build a more static, large-scale production code.

As this article shows, Python (www.python.org) is an excellent tool for such a workflow. The …

Writing Mathematical Expressions with LaTeX

APPENDIX A: Writing Mathematical Expressions with LaTeX (© Fabio Nelli 2018, F. Nelli, Python Data Analytics, https://doi.org/10.1007/978-1-4842-3913-1)

LaTeX is extensively used in Python. In this appendix there are many examples that can be useful to represent LaTeX expressions inside Python implementations. This same information can be found at the link http://matplotlib.org/users/mathtext.html.

With matplotlib
You can enter the LaTeX expression directly as an argument of various functions that can accept it. For example, the title() function that draws a chart title.
import matplotlib.pyplot as plt
%matplotlib inline
plt.title(r'$\alpha > \beta$')

With IPython Notebook in a Markdown Cell
You can enter the LaTeX expression between two '$$'.
$$c = \sqrt{a^2 + b^2}$$

With IPython Notebook in a Python 2 Cell
You can enter the LaTeX expression within the Math() function.
from IPython.display import display, Math, Latex
display(Math(r'F(k) = \int_{-\infty}^{\infty} f(x) e^{2\pi i k} dx'))

Subscripts and Superscripts
To make subscripts and superscripts, use the ‘_’ and ‘^’ symbols:
r'$\alpha_i > \beta_i$'
This could be very useful when you have to write summations:
r'$\sum_{i=0}^\infty x_i$'

Fractions, Binomials, and Stacked Numbers
Fractions, binomials, and stacked numbers can be created with the \frac{}{}, \binom{}{}, and \stackrel{}{} commands, respectively:
r'$\frac{3}{4} \binom{3}{4} \stackrel{3}{4}$'
Fractions can be arbitrarily nested:
r'$\frac{5 - \frac{1}{x}}{4}$'
Note that special care needs to be taken to place parentheses and brackets around fractions.

IPython Documentation Release 0.10.2

IPython Documentation, Release 0.10.2. The IPython Development Team, April 09, 2011.

CONTENTS
1 Introduction
  1.1 Overview
  1.2 Enhanced interactive Python shell
  1.3 Interactive parallel computing
2 Installation
  2.1 Overview
  2.2 Quickstart
  2.3 Installing IPython itself
  2.4 Basic optional dependencies
  2.5 Dependencies for IPython.kernel (parallel computing)
  2.6 Dependencies for IPython.frontend (the IPython GUI)
3 Using IPython for interactive work
  3.1 Quick IPython tutorial
  3.2 IPython reference
  3.3 IPython as a system shell
  3.4 IPython extension API
4 Using IPython for parallel computing
  4.1 Overview and getting started
  4.2 Starting the IPython controller and engines
  4.3 IPython’s multiengine interface
  4.4 The IPython task interface
  4.5 Using MPI with IPython
  4.6 Security details of IPython
  4.7 IPython/Vision Beam Pattern Demo

EasyBuild Documentation Release 20210907.0

EasyBuild Documentation, Release 20210907.0. Ghent University, Tue, 07 Sep 2021 08:55:41.

Contents
1 What is EasyBuild?
2 Concepts and terminology
  2.1 EasyBuild framework
  2.2 Easyblocks
  2.3 Toolchains
    2.3.1 system toolchain
    2.3.2 dummy toolchain (DEPRECATED)
    2.3.3 Common toolchains
  2.4 Easyconfig files
  2.5 Extensions
3 Typical workflow example: building and installing WRF
  3.1 Searching for available easyconfigs files
  3.2 Getting an overview of planned installations
  3.3 Installing a software stack
4 Getting started
  4.1 Installing EasyBuild
    4.1.1 Requirements
    4.1.2 Using pip to Install EasyBuild
    4.1.3 Installing EasyBuild with EasyBuild
    4.1.4 Dependencies
    4.1.5 Sources
    4.1.6 In case of installation issues
  4.2 Configuring EasyBuild
    4.2.1 Supported configuration

Intro to Jupyter Notebook

Evan Williamson, University of Idaho Library, 20160302
Introducing Jupyter Notebook for Python and R
Three questions: http://goo.gl/forms/uYRvebcJkD
Try Jupyter: https://try.jupyter.org/

Install Jupyter
● Get Python (suggested: Anaconda, Py3, 64bit, https://www.continuum.io/downloads)
● Manually install (if necessary), http://jupyter.readthedocs.org/en/latest/install.html
  pip3 install jupyter

Install R for Jupyter
● Get R, https://cran.cnr.berkeley.edu/ (suggested: RStudio, https://www.rstudio.com/products/RStudio/#Desktop)
● Open R console and follow: http://irkernel.github.io/installation/

Start a Notebook
● Open terminal/command prompt
  jupyter notebook
● Notebook will open at http://127.0.0.1:8888
● Exit by closing the browser, then typing Ctrl+C in the terminal window

Create Slides
● Open terminal/command prompt
  jupyter nbconvert slideshow.ipynb --to slides --post serve
● Note: “--post serve” locally serves the file so you can give a presentation in your browser. If you only want to convert, leave this option off. The resulting HTML file must be served to render correctly. Slides use Reveal.js, http://lab.hakim.se/revealjs/

Reference
● Jupyter docs, http://jupyter.readthedocs.org/en/latest/index.html
● IPython docs, http://ipython.readthedocs.org/en/stable/index.html
● List of kernels, https://github.com/ipython/ipython/wiki/IPythonkernelsforotherlanguages
● A gallery of interesting IPython Notebooks, https://github.com/ipython/ipython/wiki/AgalleryofinterestingIPythonNotebooks
● Markdown basics,

Numpy for MATLAB Users – Mathesaurus

NumPy for MATLAB users

Help

| MATLAB/Octave | Python | Description |
| --- | --- | --- |
| doc; help -i % browse with Info | help() | Browse help interactively |
| help help or doc doc | help | Help on using help |
| help plot | help(plot) or ?plot | Help for a function |
| help splines or doc splines | help(pylab) | Help for a toolbox/library package |
| demo | | Demonstration examples |

Searching available documentation

| MATLAB/Octave | Python | Description |
| --- | --- | --- |
| lookfor plot | | Search help files |
| help | help(); modules [Numeric] | List available packages |
| which plot | help(plot) | Locate functions |

Using interactively

| MATLAB/Octave | Python | Description |
| --- | --- | --- |
| octave -q | ipython -pylab | Start session |
| TAB or M-? | TAB | Auto completion |
| foo(.m) | execfile('foo.py') or run foo.py | Run code from file |
| history | hist -n | Command history |
| diary on [..] diary off | | Save command history |
| exit or quit | CTRL-D; CTRL-Z # windows; sys.exit() | End session |

Operators

| MATLAB/Octave | Python | Description |
| --- | --- | --- |
| help - | | Help on operator syntax |

Arithmetic operators

| MATLAB/Octave | Python | Description |
| --- | --- | --- |
| a=1; b=2; | a=1; b=1 | Assignment; defining a number |
| a + b | a + b or add(a,b) | Addition |
| a - b | a - b or subtract(a,b) | Subtraction |
| a * b | a * b or multiply(a,b) | Multiplication |
| a / b | a / b or divide(a,b) | Division |
| a .^ b | a ** b; power(a,b); pow(a,b) | Power, $a^b$ |
| rem(a,b) | a % b; remainder(a,b); fmod(a,b) | Remainder |
| a+=1 | a+=b or add(a,b,a) | In place operation to save array creation overhead |
| factorial(a) | | Factorial, $n!$ |

Relational operators

| MATLAB/Octave | Python | Description |
| --- | --- | --- |
| a == b | a == b or equal(a,b) | Equal |
| a < b | a < b or less(a,b) | Less than |
| a > b | a > b or greater(a,b) | Greater than |
| a <= b | a <= b or less_equal(a,b) | |
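The arithmetic entries in the excerpt above can be tried on plain Python scalars. The sketch below is an illustration under stated assumptions rather than part of the Mathesaurus page: it uses only the standard library (`operator`, `math`) in place of the NumPy functions the excerpt lists, and the sample values `a = -7`, `b = 3` are arbitrary. It also shows a detail worth checking when porting remainder code: Python's `%` follows the sign of the divisor, while `math.fmod` follows the sign of the dividend.

```python
import math
import operator

# Arbitrary sample operands; a negative value makes the remainder rules visible.
a, b = -7, 3

# Function forms of the infix operators (stdlib analogues of add, subtract, ...).
arithmetic = {
    "addition": operator.add(a, b),        # a + b
    "subtraction": operator.sub(a, b),     # a - b
    "multiplication": operator.mul(a, b),  # a * b
    "division": operator.truediv(a, b),    # a / b (true division in Python 3)
    "power": pow(a, b),                    # a ** b
}

# Two different remainder conventions.
python_mod = a % b             # sign follows the divisor
c_style_rem = math.fmod(a, b)  # sign follows the dividend

# Factorial, n!
fact = math.factorial(5)

print(arithmetic, python_mod, c_style_rem, fact)
```

With these operands `a % b` is 2 while `math.fmod(a, b)` is -1.0, so the two are not interchangeable once negative numbers are involved.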

The Legacy of Victor R. Basili

University of Tennessee, Knoxville. TRACE: Tennessee Research and Creative Exchange. About Harlan D. Mills, Science Alliance, 2005. Foundations of Empirical Software Engineering: The Legacy of Victor R. Basili. Barry Boehm, Hans Dieter Rombach, Marvin V. Zelkowitz. Part of the Physical Sciences and Mathematics Commons. Recommended Citation: Boehm, Barry; Rombach, Hans Dieter; and Zelkowitz, Marvin V., "Foundations of Empirical Software Engineering: The Legacy of Victor R. Basili" (2005). About Harlan D. Mills. https://trace.tennessee.edu/utk_harlanabout/3 Also listed in The Harlan D. Mills Collection (1-1-2005): http://trace.tennessee.edu/utk_harlan/36

The State of Digital Computer Technology in Europe, 1961

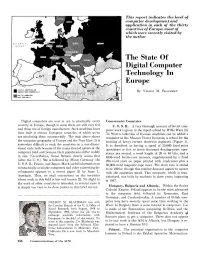

This report indicates the level of computer development and application in each of the thirty countries of Europe, most of which were recently visited by the author.

The State Of Digital Computer Technology In Europe. By NELSON M. BLACHMAN

Digital computers are now in use in practically every country in Europe, though in some there are still very few and these are of foreign manufacture. Such machines have been built in sixteen European countries, of which seven are producing them commercially. The map above shows the computer geography of Europe and the Near East. It is somewhat difficult to rank the countries on a one-dimensional scale both because of the many-faceted nature of the computer field and because their populations differ widely in size. Nevertheless, Great Britain clearly comes first (after the U.S.). She is followed by (West) Germany, the U.S.S.R., France, and Japan. Much useful information on …

Communist Countries

U.S.S.R. A very thorough account of Soviet computer work is given in the report edited by Willis Ware [3]. To Ware's collection of Russian machines can be added a computer at the Moscow Power Institute, a school for the training of heavy-current electrical engineers (Figure 1). It is described as having a speed of 25,000 fixed-point operations or five to seven thousand floating-point operations per second, a word length of 20 or 40 bits, and a 4096-word ferrite-core memory, supplemented by a fixed 256-word store on paper printed with condensers plus a 50,000-word magnetic-tape store.

Sage Tutorial (Pdf)

Sage Tutorial, Release 9.4. The Sage Development Team, Aug 24, 2021.

CONTENTS
1 Introduction
  1.1 Installation
  1.2 Ways to Use Sage
  1.3 Longterm Goals for Sage
2 A Guided Tour
  2.1 Assignment, Equality, and Arithmetic
  2.2 Getting Help
  2.3 Functions, Indentation, and Counting
  2.4 Basic Algebra and Calculus
  2.5 Plotting
  2.6 Some Common Issues with Functions
  2.7 Basic Rings
  2.8 Linear Algebra
  2.9 Polynomials
  2.10 Parents, Conversion and Coercion
  2.11 Finite Groups, Abelian Groups
  2.12 Number Theory
  2.13 Some More Advanced Mathematics
3 The Interactive Shell
  3.1 Your Sage Session
  3.2 Logging Input and Output
  3.3 Paste Ignores Prompts
  3.4 Timing Commands
  3.5 Other IPython