Download a File from a Server with Wget Using a Username and Password: How Do I Get Wget Username and Password? Let's Start with Something Simple

Total pages: 16; file type: PDF; size: 1020 Kb


Recommended publications


GnuPG Masterclass (Ben Everard): You Wouldn't Want Other People Opening Your Letters, and Your Data Is No Different

GnuPG Masterclass (Ben Everard): You wouldn't want other people opening your letters, and your data is no different. Encrypt it today!

Secure email with GnuPG and Enigmail (John Lane): Send encrypted emails from your favourite email client.

Your typical email is about as secure as a postcard, which is good news if you're a government agency. But you wouldn't use a postcard for most things sent in the post; you'd use a sealed envelope. Email is no different; you just need an envelope – and it's called “Encryption”. Since the early 1990s, the main way to encrypt email has been PGP, which stands for “Pretty Good Privacy”. It's a protocol for the secure encryption of email that has since evolved into an open standard called OpenPGP.

My lovely horse

The GNU Privacy Guard (GnuPG) is a free, GPL-licensed implementation of the OpenPGP standard (there are other implementations, both free and commercial – the PGP name now refers to a commercial product

The first thing that you need to do is create a key to represent your identity in the OpenPGP world. You'd typically create one key per identity that you have. Most people would have one identity, being themselves as a person. However, some may find having separate personal and professional identities useful. It's a personal choice, but starting with a single key will help while you're learning. Launch Seahorse and click on the large plus-sign icon that's just below the menu. Select ‘PGP Key’ and work your way through the screens that follow to supply your name and email address and then generate the key. You can, optionally, use the Advanced Key Options to add a comment that can help others identify your key and to select the cipher, its strength and set when the key should expire.
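Seahorse is a graphical front end to GnuPG, and the same choices it asks for (name, email address, optional comment, cipher, key strength, expiry) can also be written down for GnuPG's unattended key generation. The sketch below is a parameter file with a hypothetical identity; it would be passed as `gpg --batch --gen-key keyparams`. With no `Passphrase:` line, GnuPG 2.1 and later should ask for a passphrase through pinentry. Supported parameters differ between GnuPG versions, so treat this as an assumption to check against the "Unattended key generation" section of your GnuPG manual.

```
# keyparams: sketch of a GnuPG unattended key-generation file.
# Name-Real, Name-Comment and Name-Email are hypothetical placeholders.
# Cipher and strength (Seahorse's "Advanced Key Options"):
Key-Type: RSA
Key-Length: 3072
Subkey-Type: RSA
Subkey-Length: 3072
# Identity, including the optional comment that helps others identify the key:
Name-Real: Alice Example
Name-Comment: personal
Name-Email: alice@example.org
# When the key should expire (here: two years):
Expire-Date: 2y
%commit
```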

Fakultät für Informatik: Cryogenic: Enabling Power-Aware Applications on Linux

Fakultät für Informatik der Technischen Universität München
Master's Thesis in Informatics

Cryogenic: Enabling Power-Aware Applications on Linux
Cryogenic: A Linux Kernel Module for Cooperative Energy Saving

Author: Alejandra Morales Ruiz
Supervisor: Dr. Christian Grothoff
Date: February 17, 2014

I confirm that I wrote this master's thesis independently and used only the sources and resources indicated.

Munich, February 17, 2014
Alejandra Morales Ruiz

Acknowledgments

I want to thank Christian Grothoff for giving me the opportunity to write this thesis as well as for the support and advice given throughout its completion. I also thank the people at the Chair for Robotics and Embedded Systems, especially Reinhard Lafrenz and Steffen Wittmeier, who allowed me to access their laboratory and provided me with the necessary equipment to perform the energy measurements. Thanks to Danny Hughes and Wilfried Daniels, from the Katholieke Universiteit Leuven, for their advice and contributions to the experimentation and the subsequent results of this work. I would also like to express my gratitude to the whole community of Linux developers for sharing their knowledge and experience on the Internet, which has helped me not only during this thesis, but during all my studies. Finally, I would like to thank my parents and brothers, who always supported and encouraged me to finish my studies abroad, and my partner, Ángel, because this thesis would have never been possible without him.

Installing FreePBX 13 on CentOS 7

READ FIRST
Manual installation of FreePBX is considered an EXPERTS ONLY exercise. This method of installation is enough to get the CORE functionality of FreePBX. Non-commercial modules may not function as expected or as detailed in the wiki. Certain modules and features may require additional software to be installed and configured on the server.
**** COMMERCIAL MODULES CANNOT BE INSTALLED ON THIS OS ****

Contents: Install CentOS 7. Initial System Setup: Disable selinux; Update Your System; Install Additional Required Dependencies; Install Legacy Pear requirements; Firewalld Basic Configuration; Enable and Start MariaDB; Enable and Start Apache; Install Dependencies for Google Voice (if required); Install iksemel; Add the Asterisk User. Install and Configure Asterisk: Download Asterisk source files; Compile and install DAHDI; Compile and install pjproject; Compile and install jansson; Compile and install Asterisk; Install Asterisk Soundfiles; Set Asterisk ownership permissions. Install and Configure FreePBX: A few small modifications to Apache; Download and install FreePBX; That's it! Automatic Startup.

Initial System Setup
You MUST run all of these commands as the root user!
You MUST disable selinux; selinux can cause strange behavior during the install.

Disable selinux
Set SELINUX=disabled in /etc/sysconfig/selinux and /etc/selinux/config, then reboot:
sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/sysconfig/selinux
sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
reboot
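Before touching /etc, the SELinux substitution can be tried on a scratch file. This is a minimal sketch assuming GNU sed (as shipped with CentOS 7); `disable_selinux_in` is a hypothetical helper name, not part of the FreePBX instructions. The `^SELINUX=` anchor matters: it rewrites the mode line while leaving the neighbouring `SELINUXTYPE=` line untouched.

```shell
#!/usr/bin/env bash
# Sketch: the "disable selinux" edit, tried on a throwaway copy.
# Assumes GNU sed (sed -i with no backup suffix).
set -euo pipefail

disable_selinux_in() {
  # $1: an SELinux config file such as /etc/selinux/config.
  # Rewrites the "SELINUX=<mode>" line to "SELINUX=disabled";
  # the ^ anchor plus the "=" leave SELINUXTYPE= lines alone.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

demo=$(mktemp)
printf '%s\n' '# sample config' 'SELINUX=enforcing' 'SELINUXTYPE=targeted' > "$demo"
disable_selinux_in "$demo"
cat "$demo"      # the SELINUX=enforcing line now reads SELINUX=disabled
rm -f "$demo"
```

On CentOS 7, /etc/sysconfig/selinux is normally a symlink to /etc/selinux/config, and GNU `sed -i` replaces a symlink with a regular file unless `--follow-symlinks` is given, so /etc/selinux/config is the more predictable file to edit.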

Emacspeak User's Guide

Jennifer Jobst

Revision History
Revision 1.3, July 24, 2002, revised by SDS: Updated the maintainer of this document to Sharon Snider, corrected links, and converted to HTML.
Revision 1.2, December 3, 2001, revised by JEJ: Changed license to GFDL.
Revision 1.1, November 12, 2001, revised by JEJ.
Revision 1.0 DRAFT, October 19, 2001, revised by JEJ.

This document helps Emacspeak users become familiar with Emacs as an audio desktop and provides tutorials on many common tasks and the Emacs applications available to perform those tasks.

Table of Contents
1. Legal Notice
2. Introduction
2.1. What is Emacspeak?
2.2. About this tutorial
3. Before you begin
3.1. Getting started with Emacs and Emacspeak
3.2. Emacs Command Conventions

GNU Wget 1.10: The Non-Interactive Download Utility (Updated for Wget 1.10, Apr 2005)

By Hrvoje Nikšić and the developers
Copyright © 1996–2005, Free Software Foundation, Inc.
Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or any later version published by the Free Software Foundation; with the Invariant Sections being “GNU General Public License” and “GNU Free Documentation License”, with no Front-Cover Texts, and with no Back-Cover Texts. A copy of the license is included in the section entitled “GNU Free Documentation License”.

1 Overview
GNU Wget is a free utility for non-interactive download of files from the Web. It supports HTTP, HTTPS, and FTP protocols, as well as retrieval through HTTP proxies. This chapter is a partial overview of Wget's features.
• Wget is non-interactive, meaning that it can work in the background while the user is not logged on. This allows you to start a retrieval and disconnect from the system, letting Wget finish the work. By contrast, most Web browsers require the user's constant presence, which can be a great hindrance when transferring a lot of data.
• Wget can follow links in HTML and XHTML pages and create local versions of remote web sites, fully recreating the directory structure of the original site. This is sometimes referred to as “recursive downloading.” While doing that, Wget respects the Robot Exclusion Standard (‘/robots.txt’). Wget can be instructed to convert the links in downloaded HTML files to the local files for offline viewing.
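The recursive-download and link-conversion features can be made defaults in a wgetrc startup file rather than repeated on every command line. The fragment below is a sketch of a separate file (the name mirror.wgetrc is hypothetical) that would be loaded with `WGETRC=~/mirror.wgetrc wget URL`, so ordinary wget runs are not made recursive. It assumes these commands behave like their command-line counterparts (`-r`, `-k`, `-np`, `-t`); check the startup-file chapter of your Wget version's manual, since the available commands vary between releases. Comments sit on their own lines because wgetrc treats only lines beginning with `#` as comments. For a one-off background run, `wget -b` detaches and writes its progress to wget-log unless `-o` names another log file.

```
# mirror.wgetrc (sketch): defaults for copying a site for offline viewing.
# Follow links recursively (same as -r).
recursive = on
# Rewrite links in downloaded HTML to point at the local copies (same as -k).
convert_links = on
# Never ascend above the starting directory (same as -np).
no_parent = on
# Respect /robots.txt (already the default; listed for clarity).
robots = on
# Retry each file up to 5 times (same as -t 5).
tries = 5
```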

1. What Is Gimp? 2. Default Short Cuts and Dynamic Keybinding

GUM: The Gimp User Manual, version 1.0.0
Karin Kylander & Olof S Kylander

Legalities
The Gimp user manual may be reproduced and distributed, subject to the following conditions:
Copyright © 1997, 1998 by Karin Kylander
Copyright © 1998 by Olof S Kylander
E-mail: [email protected] (summer 98 [email protected])
The Gimp User Manual is an open document; you may reproduce it under the terms of the Graphic Documentation Project Copying Licence (aka GDPL) as published by Frozenriver. This document is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the Graphic Documentation Project Copying License for more details.

GRAPHIC DOCUMENTATION PROJECT COPYING LICENSE
The following copyright license applies to all works by the Graphic Documentation Project. Please read the license carefully; it is similar to the GNU General Public License, but there are several conditions in it that differ from what you may be used to.
The Graphic Documentation Project manuals may be reproduced and distributed in whole, subject to the following conditions:
All Graphic Documentation Project manuals are copyrighted by their respective authors. THEY ARE NOT IN THE PUBLIC DOMAIN.
• The copyright notice above and this permission notice must be preserved complete.
• All work done under the Graphic Documentation Project Copying License must be available in source code for anyone who wants to obtain it. The source code for a work means the preferred form of the work for making modifications to it.

Bash Guide for Beginners

Machtelt Garrels
Garrels BVBA <tille wants no spam _at_ garrels dot be>
Version 1.11, last updated 2008-12-27

Table of Contents
Introduction
1. Why this guide?
2. Who should read this book?
3. New versions, translations and availability
4. Revision History
5. Contributions
6. Feedback
7. Copyright information
8. What do you need?
9. Conventions used in this

Download Instructions: Portal

Download instructions
These instructions are recommended for downloading big files.
How to download and verify files from downloads.gvsig.org
• How to download files
• GNU/Linux Systems
• MacOS X Systems
• Windows Systems
• How to validate the downloaded files

How to download files
The files distributed on this site can be downloaded using different access protocols; the ones currently available are FTP, HTTP and RSYNC. The base URL of the site for each protocol is:
• ftp://gvsig.org/
• http://downloads.gvsig.org/
• rsync://gvsig.org/downloads/
When downloading with the first two protocols, it is recommended to use a client program that can resume partial downloads, because transfers are often interrupted when downloading big files such as DVD images. There are multiple free (and multi-platform) programs for downloading files over different protocols (here we are interested in FTP and HTTP). Among them we highlight the command-line tools curl (http://curl.haxx.se/) and wget (http://www.gnu.org/software/wget/), and the GUI program Free Download Manager (available only for Windows systems).
The curl program is included in MacOS X and is available for almost all GNU/Linux distributions. It can be downloaded as source code or in binary form for different operating systems from the project web site. The wget program is also included in almost all GNU/Linux distributions, and its source code or binaries for different systems can be downloaded from this page.
Next we explain how to download files on the most common operating systems using the programs mentioned above:
• GNU/Linux Systems
• MacOS X Systems
• Windows Systems
The use of rsync (available from http://samba.org/rsync/) is left as an exercise for the reader; we will only say that it is advisable to use the --partial option to avoid problems when transfers are interrupted.
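Resuming is handled by the download tools themselves: `wget -c URL` continues a partially downloaded file, `curl -C - -O URL` does the same with curl, and rsync's `--partial` keeps partially transferred files for the next attempt. Once a big file is complete, the "How to validate the downloaded files" step can be sketched as below. Whether downloads.gvsig.org publishes SHA-256 or MD5 lists is an assumption here; if only MD5 sums are available, `md5sum -c` works the same way. `verify_download` and the file names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: check downloaded files against a published checksum list.
# Assumption: the list uses the "<hash>  <file name>" format written by
# sha256sum and sits in the same directory as the downloaded files.
set -euo pipefail

verify_download() {
  # $1: path to the checksum list.
  # Runs sha256sum -c from the list's own directory so relative names
  # resolve; prints "<file>: OK" or "<file>: FAILED" for each entry and
  # returns non-zero if any entry fails.
  (cd "$(dirname "$1")" && sha256sum -c "$(basename "$1")")
}

# Demo with a throwaway file standing in for a DVD image.
work=$(mktemp -d)
printf 'pretend DVD image\n' > "$work/gvsig-demo.iso"
(cd "$work" && sha256sum gvsig-demo.iso > SHA256SUMS)  # stand-in for the published list

verify_download "$work/SHA256SUMS"      # prints: gvsig-demo.iso: OK

printf 'x' >> "$work/gvsig-demo.iso"    # simulate a corrupted copy
if ! verify_download "$work/SHA256SUMS"; then
  echo "checksum mismatch: download this file again" >&2
fi
rm -rf "$work"
```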

Using Wget to Download Multiple Files and Directories from ECCO Drive

Using wget to download multiple files and directories from ECCO Drive: https://ecco.jpl.nasa.gov/drive/files
Ian Fenty. Original 2020-04-09, updated 2020-04-29.
Adapted from the PO.DAAC discussion forum topic “Download Multiple Data Files from PODAAC Drive Using wget” https://go.nasa.gov/2XmGu6b

“wget is a free utility for non-interactive download of files from the Web. It supports http, https, and ftp protocols, as well as retrieval through http proxies. It is a Unix-based command-line tool, but is also available for other operating systems, such as Windows, Mac OS X, etc.”

Three illustrative examples using wget
Here the username and password are the WebDAV/Programmatic API credentials (login/password). Other wget flags are described later.

Example 1: Download all of the lat-lon ETAN files from ECCOv4 Release 4
>wget --user=ifenty --password=ABCD -r -nc -np -nH --cut-dirs=2 https://ecco.jpl.nasa.gov/drive/files/Version4/Release4/interp_monthly/ETAN/
After downloading you will have a set of directories and files like this:
/Version4/Release4/interp_monthly/ETAN/1992/ETAN_1992_01.nc
/Version4/Release4/interp_monthly/ETAN/1992/ETAN_1992_02.nc
[...]
/Version4/Release4/interp_monthly/ETAN/2017/ETAN_2017_12.nc

Example 2: Download the entire ECCOv4 Release 4 solution
>wget --user=ifenty --password=ABCD -r -nc -np -nH --cut-dirs=2 https://ecco.jpl.nasa.gov/drive/files/Version4/Release4/
After downloading you will have a set of directories and files like this:
/Version4/Release4/input_init/
/Version4/Release4/interp_monthly
[...]

Example 3: Download
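In these examples, `-r` recurses into subdirectories, `-np` keeps wget from climbing above the starting directory, `-nc` skips files that already exist locally, and `-nH` together with `--cut-dirs=2` strip the host name and the leading `drive/files/` components from the saved paths. The bash sketch below models only that last path rewriting, as a simplification that ignores query strings and URL-encoded characters; `local_path_for` is a hypothetical helper, not part of wget. The result is relative to the directory where wget was started.

```shell
#!/usr/bin/env bash
# Sketch: predict where "wget -r -nH --cut-dirs=N" saves a given file.
set -euo pipefail

local_path_for() {
  # $1: file URL, $2: number of leading directories to cut (--cut-dirs).
  local url=$1 cut=$2
  local path=${url#*://}     # strip the scheme: host/dir/.../file
  path=${path#*/}            # -nH: drop the host-name directory
  local i
  for ((i = 0; i < cut; i++)); do
    path=${path#*/}          # --cut-dirs: drop one more leading directory
  done
  printf '%s\n' "$path"
}

local_path_for https://ecco.jpl.nasa.gov/drive/files/Version4/Release4/interp_monthly/ETAN/1992/ETAN_1992_01.nc 2
# prints Version4/Release4/interp_monthly/ETAN/1992/ETAN_1992_01.nc
```

Putting the password on the command line leaves it in shell history and process listings; recent wget versions also accept `--ask-password`, which prompts for it instead of `--password=`.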

Installation of SALMON

SALMON TUTORIAL, TSUKUBA, 2017
Shunsuke Yamada, Center for Computational Sciences, University of Tsukuba
Scalable Ab-initio Light-Matter simulator for Optics and Nanoscience, http://salmon-tddft.jp/

Prerequisites
• Fortran90/C compiler with MPI
  • GCC (GNU Compiler Collection)
  • Intel Fortran/C Compiler
  • Fujitsu Compiler (at FX100 / K-Computer)
• Library packages for linear algebra
  • BLAS/LAPACK
  • Intel Math Kernel Library (MKL)
  • Fujitsu Scientific Subroutine Library 2 (SSL-II)
• Build tool
  • CMake
  • GNU Make

Path setting
Modify ~/.bash_profile. (In this example, all programs will be installed in the ~/work directory.)
Add $PATH settings as follows:
# User specific environment and startup programs
PATH=$HOME/work/cmake/bin:$PATH
PATH=$HOME/work/SALMON/bin:$PATH
export PATH
Set the compiling environment (if needed):
module load intel intelmpi mkl
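The PATH lines in the slides prepend their directories every time ~/.bash_profile is sourced, so re-running `source ~/.bash_profile` in the same shell adds duplicate entries. A minimal sketch of an idempotent variant, assuming the same ~/work layout; `path_prepend` is a hypothetical helper name, not something SALMON provides, and the `module load` line would stay as it is.

```shell
# Sketch for ~/.bash_profile: same PATH entries as the SALMON slides,
# but safe to source more than once.

path_prepend() {
  # Put directory $1 at the front of PATH unless PATH already lists it.
  case ":$PATH:" in
    *":$1:"*) ;;              # already present: leave PATH unchanged
    *) PATH="$1:$PATH" ;;
  esac
}

path_prepend "$HOME/work/cmake/bin"
path_prepend "$HOME/work/SALMON/bin"   # ends up first, as in the slides
export PATH
```

The order matches the original: the SALMON directory is searched before the CMake one, and both come before the system directories.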

Debian Packaging Tutorial

Lucas Nussbaum, [email protected]
version 0.27 – 2021-01-08

About this tutorial
• Goal: tell you what you really need to know about Debian packaging
  • Modify existing packages
  • Create your own packages
  • Interact with the Debian community
  • Become a Debian power-user
• Covers the most important points, but is not complete
  • You will need to read more documentation
• Most of the content also applies to Debian derivative distributions
  • That includes Ubuntu

Outline
1 Introduction
2 Creating source packages
3 Building and testing packages
4 Practical session 1: modifying the grep package
5 Advanced packaging topics
6 Maintaining packages in Debian
7 Conclusions
8 Additional practical sessions
9 Answers to practical sessions

Debian
• GNU/Linux distribution
• 1st major distro developed “openly in the spirit of GNU”
• Non-commercial, built collaboratively by over 1,000 volunteers
• 3 main features:
  • Quality – culture of technical excellence. We release when it's ready
  • Freedom – devs and users bound by the Social Contract. Promoting the culture of Free Software since 1993
  • Independence – no (single)

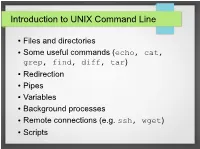

Introduction to UNIX Command Line

● Files and directories
● Some useful commands (echo, cat, grep, find, diff, tar)
● Redirection
● Pipes
● Variables
● Background processes
● Remote connections (e.g. ssh, wget)
● Scripts

The Command Line
● What is it?
  ● An interface to UNIX
  ● You type commands, things happen
  ● Also referred to as a “shell”
● We'll use the bash shell – check you're using it by typing (you'll see what this means later):
  ● echo $SHELL
  ● If it doesn't say “bash”, then type bash to get into the bash shell

Files and Directories
[Figure: example directory tree under /, with nodes home, var, usr, mcuser, abenson, drmentor, science, catvideos, stuff, data, code, report, and files M51.fits, simulate.c, analyze.py, report.tex]

Files and Directories
● Get a pre-made set of directories and files to work with
● We'll talk about what these commands do later
● The “$” is the command prompt (yours might differ). Type what's listed after it, then press Enter.
$ wget http://bit.ly/1TXIZSJ -O playground.tar
$ tar xvf playground.tar

Files and directories
$ pwd
/home/abenson
$ cd playground
$ pwd
/home/abenson/playground
$ ls
animals documents science
$ mkdir mystuff
$ ls
animals documents mystuff science
$ cd animals/mammals
$ ls
badger.txt porcupine.txt
$ ls -l
total 8
-rw-r--r--. 1 abenson abenson 1944 May 31 18:03 badger.txt
-rw-r--r--. 1 abenson abenson 1347 May 31 18:05 porcupine.txt

Files and directories
“Present Working Directory”
$ pwd
/home/abenson
Shows the full path of your current location in the filesystem.
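To try the same steps without network access, the sketch below builds a small stand-in for playground.tar locally and then runs the tar, pwd, cd, mkdir and ls commands from the slides. The directory and file names come from the slides; the file contents are hypothetical. In `tar xvf`, x extracts, v lists each file as it is unpacked, and f names the archive; in the original `wget ... -O playground.tar`, `-O` saves the download under that name instead of the last part of the URL.

```shell
#!/usr/bin/env bash
# Offline sketch of the playground setup: build a stand-in archive
# instead of downloading it, then explore it as in the slides.
set -euo pipefail

cd "$(mktemp -d)"          # work in a scratch directory

# Stand-in archive (the real one comes from the bit.ly URL).
mkdir -p src/playground/animals/mammals src/playground/documents src/playground/science
echo "Badgers dig setts." > src/playground/animals/mammals/badger.txt
echo "Porcupines have quills." > src/playground/animals/mammals/porcupine.txt
tar cf playground.tar -C src playground   # c = create, f = archive file name

tar xvf playground.tar     # x = extract, v = list files, f = archive file
cd playground
pwd                        # full path of the current directory
mkdir mystuff
ls                         # lists animals, documents, mystuff, science
cd animals/mammals
ls -l                      # long listing: permissions, owner, size, date
```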