Evaluating Binary N-Gram Analysis for Authorship Attribution

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Evaluation of Stop Word Lists in Chinese Language

Evaluation of Stop Word Lists in Chinese Language. Feng Zou, Fu Lee Wang, Xiaotie Deng, Song Han. Department of Computer Science, City University of Hong Kong, Kowloon Tong, Hong Kong. [email protected] {flwang, csdeng, shan00}@cityu.edu.hk. Abstract: In modern information retrieval systems, effective indexing can be achieved by removal of stop words. Till now many stop word lists have been developed for English language. However, no standard stop word list has been constructed for Chinese language yet. With the fast development of information retrieval in Chinese language, exploring the evaluation of Chinese stop word lists becomes critical. In this paper, to save the time and release the burden of manual comparison, we propose a novel stop word list evaluation method with a mutual information-based Chinese segmentation methodology. Experiments have been conducted on training texts taken from a recent international Chinese segmentation competition. Results show that effective stop word lists can improve the accuracy of Chinese segmentation significantly. 1. Introduction: In information retrieval, a document is traditionally indexed by frequency of words in the documents (Ricardo & Berthier, 1999; Rijsbergen, 1975; Salton & Buckley, 1988). Statistical analysis through documents showed that some words have quite low frequency, while some others act just the opposite (Zipf, 1932). In order to produce a stop word list which is widely accepted as a standard, it is extremely important to compare the performance of different stop word lists. On the other hand, it is also essential to investigate how a stop word list can affect the completion of related tasks in language processing. With the fast growth of online Chinese documents, the evaluation of these stop word lists

PSWG: an Automatic Stop-Word List Generator for Persian Information

Vol. 2(5), Apr. 2016, pp. 326-334. PSWG: An Automatic Stop-word List Generator for Persian Information Retrieval Systems Based on Similarity Function & POS Information. Mohammad-Ali Yaghoub-Zadeh-Fard (1), Behrouz Minaei-Bidgoli (1), Saeed Rahmani and Saeed Shahrivari. (1) Iran University of Science and Technology, Tehran, Iran; (2) Freelancer, Tehran, Iran. *Corresponding Author's E-mail: [email protected]. Abstract: By the advent of new information resources, search engines have encountered a new challenge since they have been obliged to store a large amount of text materials. This is even more drastic for small-sized companies which are suffering from a lack of hardware resources and limited budgets. In such a circumstance, reducing index size is of paramount importance as it is to maintain the accuracy of retrieval. One of the primary ways to reduce the index size in text processing systems is to remove stop-words, frequently occurring terms which do not contribute to the information content of documents. Even though there are manually built stop-word lists almost for all languages in the world, stop-word lists are domain-specific; in other words, a term which is a stop-word in a specific domain may play an indispensable role in another one. This paper proposes an aggregated method for automatically building stop-word lists for Persian information retrieval systems. Using part of speech tagging and analyzing statistical features of terms, the proposed method tries to enhance the accuracy of retrieval and minimize potential side effects of removing informative terms. The experiment results show that the proposed approach enhances the average precision, decreases the index storage size, and improves the overall response time.
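The idea of deriving a stop-word list from term statistics can be illustrated with a minimal sketch. This is not the PSWG method (which the abstract says also uses part-of-speech tagging and a similarity function); it swaps in a plain document-frequency threshold, and the function names and the `min_doc_ratio` parameter are hypothetical.

```python
from collections import Counter

def stopword_candidates(docs, min_doc_ratio=0.8):
    """Return terms that occur in at least min_doc_ratio of the tokenized documents."""
    # Document frequency: count each term once per document it appears in.
    df = Counter(term for doc in docs for term in set(doc))
    threshold = min_doc_ratio * len(docs)
    return sorted(term for term, n in df.items() if n >= threshold)

def remove_stopwords(doc, stopwords):
    """Drop stop-words from one tokenized document before indexing."""
    stop = set(stopwords)
    return [term for term in doc if term not in stop]

docs = [["the", "cat", "sat"], ["the", "dog", "ran"], ["the", "cat", "ran"]]
candidates = stopword_candidates(docs)
filtered = remove_stopwords(docs[0], candidates)
```

Because the threshold is computed per collection, the same term can be a stop-word in one domain and an informative term in another, which is the domain-specificity the abstract points out.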

Read Book > Tom Clancy Locked on and Threat Vector

Tom Clancy Locked on and Threat Vector (2-In-1 Collection). Filesize: 3.31 MB. Reviews: Comprehensive guideline! It's such a good read through. It is actually written in basic words and not confusing. I could easily get enjoyment from reading a composed book. (Lonzo Wilderman) To save the Tom Clancy Locked on and Threat Vector (2-In-1 Collection) PDF, click the web link beneath and download the file, or access other information related to the book. BRILLIANCE AUDIO, United States, 2015. CD-Audio. Book Condition: New. Unabridged. 170 x 135 mm. Language: English. Brand New. Two Tom Clancy novels in one collection. Locked On: Although his father had been reluctant to become a field operative, Jack Ryan Jr. wants nothing more. Privately training with a seasoned Special Forces drill instructor, he's honing his skills to transition his work within The Campus from intelligence analysis to hunting down and eliminating terrorists wherever he can, even as Jack Ryan Sr. campaigns for re-election as President of the United States. But what neither father nor son knows is that the political and the personal have just become equally dangerous. A devout enemy of Jack Sr. launches a privately funded vendetta to discredit him by connecting the presidential candidate to a mysterious killing in the past by John Clark, his longtime ally.

Cuckoo's Calling Discussion Questions Characters

Cuckoo’s Calling Discussion Questions by Robert Galbraith. Author Bio (from Fantastic Fiction & Galbraith’s website): Robert Galbraith is a pseudonym for J.K. Rowling, bestselling author of the Harry Potter series and The Casual Vacancy. The first Robert Galbraith novel, The Cuckoo’s Calling, was published in 2013 to critical acclaim. The books have been adapted as a major new television series for BBC One, produced by Brontë Film and Television. Why a pseudonym? J.K. Rowling wanted to begin a new writing career in a new genre and to release her crime novels to a neutral audience, free of expectation or hype. Although the author’s true identity was unexpectedly revealed, J.K. Rowling continues to write the Cormoran Strike series under the name of Robert Galbraith to maintain the distinction from her other writing. (Per Galbraith’s Website) Characters: Robin Ellacott – 25 years old. Secretarial temp working for private investigator CB Strike. New to London. Fiancé is Matthew, an accountant. Cormoran (CB) Strike – 35 years old. Private investigator. Former military investigator. Has a prosthetic leg. Just broke up with his girlfriend, Charlotte. Has a sister, Lucy. Lula Landry – Famous model. Adopted at 4 years of age. Has a brother, John Bristow. Died of an apparent suicide. Has bipolar disorder. Boyfriend is Evan Duffield. John Bristow – Lawyer. Hires Strike to look into the death of his sister, Lula Landry. His deceased little brother, Charlie, knew Strike as a child. His girlfriend & secretary is Alison. Lieutenant Jonah Agyeman – Sapper in the British army. Currently serving in Afghanistan. Richard Anstis – Former Army buddy of Strike’s.

Department of English and American Studies English

Masaryk University, Faculty of Arts, Department of English and American Studies. English Language and Literature. Simona Hájková: Representing Women in Robert Galbraith's Strike Series. Bachelor's Diploma Thesis. Supervisor: Mgr. Filip Krajník, Ph. D. 2019. I declare that I have worked on this thesis independently, using only the primary and secondary sources listed in the bibliography. Table of Contents: Introduction; 1. Gender Representation in Crime Fiction and Its Development; 2. Female Investigators; 2.1. Robin Ellacott from the Strike Series; 2.2. The Cordelia Gray Mystery Novels; 2.3. The Hard-boiled V. I. Warshawski; 2.4. Lisbeth Salander from the Millennium Trilogy; 3. The Female Criminal; 3.1. The Ruthless Murderess; 4. The Female Victim; 4.1. Lula Landry; 4.2. Female Victims as Sexual Objects; Conclusion; Bibliography; Summary; Resume. Introduction: Our private eye Cormoran Strike is back. He's a tough guy, burly ex-soldier with half a leg missing, but he also went to Oxford, so he can translate a Latin phrase before he dislocates your jaw with his fist (...) There are so many problems with The Silkworm, least of all the complete lack of pace or intrigue (...) if Rowling was actually a man called Robert Galbraith, she probably wouldn't get away with describing so many women as "curvaceous" and adorning them with "clinging" dresses. (Hamilton 46) The quotation comes from a rather ironic review of the second instalment of Robert Galbraith's detective series, The Silkworm, by Ben Hamilton, published in The Spectator.

Natural Language Processing Security- and Defense-Related Lessons Learned

July 2021. Perspective: Expert Insights on a Timely Policy Issue. RAND Corporation. Peter Schirmer, Amber Jaycocks, Sean Mann, William Marcellino, Luke J. Matthews, John David Parsons, David Schulker. Natural Language Processing: Security- and Defense-Related Lessons Learned. This Perspective offers a collection of lessons learned from RAND Corporation projects that employed natural language processing (NLP) tools and methods. It is written as a reference document for the practitioner and is not intended to be a primer on concepts, algorithms, or applications, nor does it purport to be a systematic inventory of all lessons relevant to NLP or data analytics. It is based on a convenience sample of NLP practitioners who spend or spent a majority of their time at RAND working on projects related to national defense, national intelligence, international security, or homeland security; thus, the lessons learned are drawn largely from projects in these areas. Although few of the lessons are applicable exclusively to the U.S. Department of Defense (DoD) and its NLP tasks, many may prove particularly salient for DoD, because its terminology is very domain-specific and full of jargon, much of its data are classified or sensitive, its computing environment is more restricted, and its information systems are generally not designed to support large-scale analysis. This Perspective addresses each of these issues and many more. The presentation prioritizes readability over literary grace. We use NLP as an umbrella term for the range of tools • identifying studies conducting health service research and primary care research that were supported by federal agencies.

Ontology Based Natural Language Interface for Relational Databases

Available online at www.sciencedirect.com. ScienceDirect. Procedia Computer Science 92 (2016) 487–492. 2nd International Conference on Intelligent Computing, Communication & Convergence (ICCC-2016). Srikanta Patnaik, Editor in Chief. Conference organized by Interscience Institute of Management and Technology, Bhubaneswar, Odisha, India. Ontology Based Natural Language Interface for Relational Databases. B. Sujatha (a, *), Dr. S. Viswanadha Raju (b). (a) Assistant Professor, Department of CSE, Osmania University, Hyderabad, India; (b) Professor, Dept. of CSE, JNTU College of Engineering, Jagityal, Karimnagar, India. Abstract: Developing a Natural Language Query Interface to Relational Databases has attracted much interest in the research community for forty years. This can be termed a structured free query interface, as it allows users to retrieve data from the database without knowing the underlying schema. A structured free query interface should address two major problems. Querying the system with Natural Language Interfaces (NLIs) is comfortable for naive users, but it is difficult for the machine to understand. The other problem is that users can query the system with different expressions to retrieve the same information. Different words used in the query can have the same meaning, and the same word can have multiple meanings. Hence it is the responsibility of the NLI to understand the exact meaning of the word in the particular context. In this paper, a generic NLI Database system is proposed which contains various phases. The exact meaning of the word used in the query in a particular context is obtained using an ontology constructed for a customer database. The proposed system is evaluated using the customer database with precision, recall and f1-measure.

INFORMATION RETRIEVAL SYSTEM for SILT'e LANGUAGE Using

INFORMATION RETRIEVAL SYSTEM FOR SILT’E LANGUAGE Using BM25 weighting. Abdulmalik Johar (1). (1) Lecturer, Department of information system, computing and informatics college, Wolkite University, Ethiopia. Abstract - The main aim of an information retrieval system is to extract appropriate information from an enormous collection of data based on user’s need. The basic concept of the information retrieval system is that when a user sends out a query, the system would try to generate a list of related documents ranked in order, according to their degree of relevance. Digital unstructured Silt’e text documents increase from time to time. The growth of digital text information makes the utilization and access of the right information difficult. Thus, developing an information retrieval system for Silt’e language allows searching and retrieving relevant documents that satisfy information need of users. In this research, we design probabilistic information retrieval system for Silt’e language. The system has both to time in the Silt’e zone. The growth of digital text information makes the utilization and access of the right information difficult. Therefore, an information retrieval system needed to store, organize and helps to access Silt’e digital text information 1.2. Literature Review: The Writing System of Silt’e Language. The accurate period that the Ge'ez character set was used for Silt'e writing system is not known. However, since the 1980s, Silt'e has been written in the Ge'ez alphabet, or Ethiopic script, writing system, originally developed for the now extinct Ge'ez language and most known today in its use for Amharic and Tigrigna [9].
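As a rough illustration of the ranked retrieval the abstract describes, here is a minimal sketch of Okapi BM25 scoring. It is not the paper's implementation: the parameter values `k1=1.5` and `b=0.75` are common defaults assumed here, the IDF uses a smoothed non-negative variant, documents are assumed to be pre-tokenized and the collection non-empty, and the function names are hypothetical.

```python
import math
from collections import Counter

def bm25_scores(query_terms, docs, k1=1.5, b=0.75):
    """Score each tokenized document against the query with Okapi BM25."""
    n_docs = len(docs)
    avg_len = sum(len(d) for d in docs) / n_docs
    # Document frequency: number of documents containing each term.
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in query_terms:
            if term not in tf:
                continue
            # Smoothed IDF that never goes negative for very common terms.
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Term-frequency saturation with document-length normalization.
            norm = tf[term] + k1 * (1 - b + b * len(d) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores

def rank(query_terms, docs):
    """Return document indices ordered from most to least relevant."""
    scores = bm25_scores(query_terms, docs)
    return sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)

docs = [
    ["silte", "language", "text"],
    ["weather", "report"],
    ["silte", "text", "text", "retrieval"],
]
ranking = rank(["silte", "retrieval"], docs)
```

The `b` parameter controls how strongly long documents are penalized, and `k1` controls how quickly repeated occurrences of a term stop adding to the score.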

The Applicability of Natural Language Processing (NLP) To

THE AMERICAN ARCHIVIST. The Applicability of Natural Language Processing (NLP) to Archival Properties and Objectives. Jane Greenberg. Abstract: Natural language processing (NLP) is an extremely powerful operation, one that takes advantage of electronic text and the computer's computational capabilities, which surpass human speed and consistency. How does NLP affect archival operations in the electronic environment? This article introduces archivists to NLP with a presentation of the NLP Continuum and a description of the Archives Axiom, which is supported by an analysis of archival properties and objectives. An overview of the basic information retrieval (IR) framework is provided and NLP's application to the electronic archival environment is discussed. The analysis concludes that while NLP offers advantages for indexing and accessing electronic archives, its incapacity to understand records and recordkeeping systems results in serious limitations for archival operations. Introduction: Since the advent of computers, society has been progressing (at first cautiously, but now exponentially) from a dependency on printed text, to functioning almost exclusively with electronic text and other electronic media in an array of environments. [1] Underlying this transition is the growing accumulation of massive electronic archives, which are prohibitively expensive to index by traditional manual procedures. Natural language processing (NLP) offers an option for indexing and accessing these large quan- Footnote 1: The electronic society's evolution includes optical character recognition (OCR) and other processes for transforming printed text to electronic form. Also included are processes, such as imaging and sound recording, for transforming and recording nonprint electronic manifestations, but this article focuses on electronic text.

FTS in Database

Full-Text Search in PostgreSQL. Oleg Bartunov, Moscow University, PostgreSQL Global Development Group. PGCon 2007, Ottawa, May 2007.

FTS in Database
• Full-text search
– Find documents which satisfy query
– Return results in some order (opt.)
• Requirements to FTS
– Full integration with PostgreSQL: transaction support, concurrency and recovery, online index
– Linguistic support
– Flexibility
– Scalability

What is a Document?
• Arbitrary textual attribute
• Combination of textual attributes
• Should have unique id
[Diagram: a document assembled from table columns as Title || Abstract || Keywords || Body || Author]

Text Search Operators
• Traditional FTS operators for textual attributes: ~, ~*, LIKE, ILIKE
• Problems
– No linguistic support, no stop-words
– No ranking
– Slow, no index support. Documents should be scanned every time.

FTS in PostgreSQL
=# select 'a fat cat sat on a mat and ate a fat rat'::tsvector @@ 'cat & rat'::tsquery;
– tsvector: storage for document, optimized for search (sorted array of lexemes, positional information, weights information)
– tsquery: textual data type for query (Boolean operators: & | ! ())
– FTS operator: tsvector @@ tsquery
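The tsvector/tsquery idea from the last slide can be mimicked in a few lines of Python. This is only a conceptual sketch, not PostgreSQL's implementation: it assumes whitespace tokenization, applies no stemming or stop-word removal, ignores weights, and supports only the `&` operator; the function names `to_vector` and `matches_all` are hypothetical.

```python
def to_vector(text):
    """Map each whitespace-separated lexeme to its 1-based positions, sorted by lexeme."""
    positions = {}
    for pos, lexeme in enumerate(text.lower().split(), start=1):
        positions.setdefault(lexeme, []).append(pos)
    # Keep the lexemes in sorted order, loosely like a sorted array of lexemes.
    return dict(sorted(positions.items()))

def matches_all(vector, query):
    """Evaluate an AND-only query such as 'cat & rat' against the vector."""
    terms = [t.strip().lower() for t in query.split("&")]
    return all(t in vector for t in terms if t)

vector = to_vector("a fat cat sat on a mat and ate a fat rat")
found = matches_all(vector, "cat & rat")
missing = matches_all(vector, "cat & dog")
```

Storing the document once as a lexeme-to-positions map is what lets a query be answered by lookups instead of rescanning the text, which is the problem the slides raise with LIKE-style operators.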

The Man the Americans Had Dubbed Target One Sat at His Regular Bistro

TWO. The man the Americans had dubbed Target One sat at his regular bistro table at the sidewalk café in front of the May Hotel on Mimar Hayrettin. Most nights, when the weather was nice, he stopped here for a shot or two of raki in chilled sparkling water. The weather this evening was awful, but the long canopy hung over the sidewalk tables by the staff of the May kept him dry. There were just a few other patrons seated under the canopy, couples smoking and drinking together before either heading back up to their rooms in the hotel or out to other Old Town nightlife destinations. Target One had grown to live for his evening glass of raki. The anise-flavored milky white drink made from grape pomace was alcoholic, and forbidden in his home country of Libya and other nations where the more liberal Hanafi school of Islam is not de rigueur, but the ex–JSO spy had been forced into the occasional use of alcohol for tradecraft purposes during his service abroad. Now that he had become a wanted man, he’d grown to rely on the slight buzz from the liquor to help relax him and aid in his sleep, though even the liberal Hanafi school does not permit intoxication. There were just a few vehicles rolling by on the cobblestone street ten feet from his table. This road was hardly a busy thoroughfare, even on weekend nights with clear skies.

Tom Clancy Book List

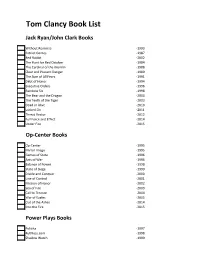

Tom Clancy Book List
Jack Ryan/John Clark Books: Without Remorse (1993); Patriot Games (1987); Red Rabbit (2002); The Hunt for Red October (1984); The Cardinal of the Kremlin (1988); Clear and Present Danger (1989); The Sum of All Fears (1991); Debt of Honor (1994); Executive Orders (1996); Rainbow Six (1998); The Bear and the Dragon (2000); The Teeth of the Tiger (2003); Dead or Alive (2010); Locked On (2011); Threat Vector (2012); Full Force and Effect (2014); Under Fire (2015)
Op-Center Books: Op-Center (1995); Mirror Image (1995); Games of State (1996); Acts of War (1996); Balance of Power (1998); State of Siege (1999); Divide and Conquer (2000); Line of Control (2001); Mission of Honor (2002); Sea of Fire (2003); Call to Treason (2004); War of Eagles (2005); Out of the Ashes (2014); Into the Fire (2015)
Power Plays Books: Politika (1997); Ruthless.com (1998); Shadow Watch (1999); Bio-Strike (2000); Cold War (2001); Cutting Edge (2002); Zero Hour (2003); Wild Card (2004)
Net Force Books: Net Force (1999); Hidden Agendas (1999); Night Moves (1999); Breaking Point (2000); Point of Impact (2001); CyberNation (2001); State of War (2003); Changing of the Guard (2003); Springboard (2005); The Archimedes Effect (2006)
Net Force Explorers Books: Virtual Vandals (1998); The Deadliest Game (1998); One is the Loneliest Number (1999); The Ultimate Escape (1999); The Great Race (1999); End Game (1998); Cyberspy (1999); Shadow of Honor (2000); Private Lives (2000); Safe House (2000); Gameprey (2000); Duel Identity (2000); Deathworld (2000); High Wire (2001); Cold Case (2001); Runaways (2001)
Splinter Cell Books: Splinter Cell (2004); Operation Barracuda (2005); Checkmate (2006); Fallout (2007); Conviction (2009); Endgame (2009); Blacklist Aftermath (2013)
Ghost Recon Books: Ghost Recon (2008); Combat Ops (2011)
EndWar Books: EndWar (2008); The Hunted (2011)
H.A.W.X.