2014 Program Meeting Schedule

Recommended publications

Saloni Krishnan Curriculum Vitae

SALONI KRISHNAN CURRICULUM VITAE WEB: @salonikrishnan, github.com/salonikrishnan, osf.io/y4238 CONTACT: Dept. of Experimental Psychology, University of Oxford, 15 Parks Road, Oxford OX1 3UD; Corpus Christi College, University of Oxford, Merton Street, Oxford OX1 4JF; t: 07837935161 | e: [email protected] | w: www.salonikrishnan.com RESEARCH INTERESTS My research aims to identify brain changes that underlie childhood speech and language disorders such as developmental language disorder or stuttering, and use this knowledge to improve existing clinical tools. I am also interested in research questions that relate to this goal, such as how brain circuits for speech/language change over childhood, what factors explain individual differences in speech/language performance, how speech/language learning can be improved, and what neural changes occur as a function of learning and training in the auditory-motor domain. To address my research questions, I use classical behavioural paradigms, as well as structural and functional magnetic resonance imaging. EXPERIENCE Royal Holloway, University of London: Lecturer, April 2019 - present. University of Oxford: Postdoctoral Research Associate, Dept. of Experimental Psychology, 2015 - 2019. Neural basis of developmental disorders (stuttering, developmental language disorder). Funding: Wellcome Trust Principal Research Fellowship to Professor Dorothy Bishop, then Medical Research Council research grant to Professor Kate Watkins. Corpus Christi College: Junior Research Fellow, 2017 - 2020. Non-stipendiary appointment -

Functions of Memory Across Saccadic Eye Movements

Functions of Memory Across Saccadic Eye Movements David Aagten-Murphy and Paul M. Bays, University of Cambridge, Cambridge, UK, e-mail: [email protected] © Springer Nature Switzerland AG 2018, Curr Topics Behav Neurosci, DOI 10.1007/7854_2018_66 Contents 1 Introduction 2 Transsaccadic Memory 2.1 Other Memory Representations 2.2 Role of VWM Across Eye Movements 3 Identifying Changes in the Environment 3.1 Detection of Displacements 3.2 Object Continuity 3.3 Visual Landmarks 3.4 Conclusion 4 Integrating Information Across Saccades 4.1 Transsaccadic Fusion 4.2 Transsaccadic Comparison and Preview Effects 4.3 Transsaccadic Integration 4.4 Conclusion 5 Correcting Eye Movement Errors 5.1 Corrective Saccades 5.2 Saccadic Adaptation 5.3 Conclusion 6 Discussion 6.1 Optimality 6.2 Object Correspondence 6.3 Memory Limitations References Abstract Several times per second, humans make rapid eye movements called saccades which redirect their gaze to sample new regions of external space. Saccades present unique challenges to both perceptual and motor systems. During the movement, the visual input is smeared across the retina and severely degraded. Once completed, the projection of the world onto the retina has undergone a large-scale spatial transformation. The vector of this transformation, and the new orientation of the eye in the external world, is uncertain. Memory for the pre-saccadic visual input is thought to play a central role in compensating for the disruption caused by saccades. Here, we review evidence that memory contributes to (1) detecting and identifying changes in the world that occur during a saccade, (2) bridging the gap in input so that visual processing does not have to start anew, and (3) correcting saccade errors and recalibrating the oculomotor system to ensure accuracy of future saccades. -

Binding of Visual Features in Human Perception and Memory

BINDING OF VISUAL FEATURES IN HUMAN PERCEPTION AND MEMORY SNEHLATA JASWAL Thesis presented for the degree of Doctor of Philosophy The University of Edinburgh 2009 Dedicated to my parents PhD – The University of Edinburgh – 2009 DECLARATION I declare that this thesis is my own composition, and that the material contained in it describes my own work. It has not been submitted for any other degree or professional qualification. All quotations have been distinguished by quotation marks and the sources of information acknowledged. Snehlata Jaswal 31 August 2009 ACKNOWLEDGEMENTS Adamant queries are a hazy recollection / Remnants are clarity, insight, appreciation / A deep admiration, love, infinite gratitude / For revered Bob, John, and Jim / One a cherished guide, ideal teacher / The other a personal idol, surrogate mother / Both inspiring, lovingly instilled certitude / Jagat Sir and Ritu Ma’am / A sister sought new worlds to conquer / A daughter left, enticed by enchanter / Yet showered me with blessings multitude / My family, Mum and Dad / So many more, whispers the breeze / Without whom, this would not be / Mere mention is an inane platitude / For treasured friends forever CONTENTS ABSTRACT; LIST OF FIGURES; LIST OF ABBREVIATIONS -

Visual Scene Perception

Visual Scene Perception Helene Intraub, University of Delaware, Newark, Delaware, USA CONTENTS Introduction; Transsaccadic memory; Scene comprehension and its influence on object detection; Perception of `gist' versus details; Boundary extension. When studying a scene, viewers can make as many as three or four eye fixations per second. A single fixation is typically enough to allow comprehension of a scene. What is remembered, however, is not a photographic replica, but a more abstract mental representation that captures the `gist' and general layout of the scene along with a limited amount of detail. INTRODUCTION The visual world exists all around us, but physiological constraints prevent us from seeing it all at once. Eye movements (called saccades) shift the position of the eye as quickly as three or four times per second. Vision is suppressed during ... a single glimpse without any bias from prior context. Immediately after viewing a rapid sequence like this, viewers participate in a recognition test. The pictures they just viewed are mixed with new pictures and are slowly presented one at a time. The viewers' task is to indicate which pictures they remember seeing before. Following rapid presentation, memory for the previously seen pictures is rather poor. For example, when 16 pictures were presented in this way, moments later, viewers were only able to recognize about 40 to 50 per cent in the memory test. One explanation is that they were only able to perceive about 40 to 50 per cent of the scenes that had flashed by and those were the scenes they remembered. -

Planum Temporale Asymmetry in People Who Stutter

Journal of Fluency Disorders xxx (xxxx) xxx–xxx, journal homepage: www.elsevier.com/locate/jfludis Planum temporale asymmetry in people who stutter Patricia M. Gough (a), Emily L. Connally (b), Peter Howell (c), David Ward (d), Jennifer Chesters (b), Kate E. Watkins (b, *) (a) Department of Psychology, Maynooth University, Maynooth, Co. Kildare, Ireland (b) Department of Experimental Psychology, University of Oxford, South Parks Road, Oxford, OX1 3UD, UK (c) Department of Psychology, University College London, Bedford Way, London, WC1E 6BT, UK (d) School of Psychology and Clinical Language Sciences, University of Reading, Harry Pitt Building, Earley Gate, Reading, RG6 7BE, UK ABSTRACT Purpose: Previous studies have reported that the planum temporale – a language-related structure that normally shows a leftward asymmetry – had reduced asymmetry in people who stutter (PWS) and reversed asymmetry in those with severe stuttering. These findings are consistent with the theory that altered language lateralization may be a cause or consequence of stuttering. Here, we re-examined these findings in a larger sample of PWS. Methods: We evaluated planum temporale asymmetry in structural MRI scans obtained from 67 PWS and 63 age-matched controls using: 1) manual measurements of the surface area; 2) voxel-based morphometry to automatically calculate grey matter density. We examined the influences of gender, age, and stuttering severity on planum temporale asymmetry. Results: The size of the planum temporale and its asymmetry were not different in PWS compared with Controls using either the manual or the automated method. Both groups showed a significant leftwards asymmetry on average (about one-third of PWS and Controls showed rightward asymmetry). -

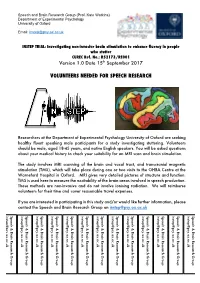

Volunteers Needed for Speech Research

Speech and Brain Research Group (Prof. Kate Watkins) Department of Experimental Psychology University of Oxford Email: [email protected] INSTEP TRIAL: Investigating non-invasive brain stimulation to enhance fluency in people who stutter CUREC Ref. No.: R52173/RE001 Version 1.0 Date 15th September 2017 VOLUNTEERS NEEDED FOR SPEECH RESEARCH Researchers at the Department of Experimental Psychology University of Oxford are seeking healthy fluent speaking male participants for a study investigating stuttering. Volunteers should be male, aged 18-45 years, and native English speakers. You will be asked questions about your medical history to check your suitability for an MRI scan and brain stimulation. The study involves MRI scanning of the brain and vocal tract, and transcranial magnetic stimulation (TMS), which will take place during one or two visits to the OHBA Centre at the Warneford Hospital in Oxford. MRI gives very detailed pictures of structure and function. TMS is used here to measure the excitability of the brain areas involved in speech production. These methods are non-invasive and do not involve ionising radiation. We will reimburse volunteers for their time and cover reasonable travel expenses. 
If you are interested in participating in this study and/or would like further information, please contact the Speech and Brain Research Group on [email protected] -

Retinotopic Memory Is More Precise Than Spatiotopic Memory

Retinotopic memory is more precise than spatiotopic memory Julie D. Golomb and Nancy Kanwisher, McGovern Institute for Brain Research, Massachusetts Institute of Technology, Cambridge, MA 02139. Edited by Tony Movshon, New York University, New York, NY, and approved December 14, 2011 (received for review August 10, 2011). Successful visually guided behavior requires information about spatiotopic (i.e., world-centered) locations, but how accurately is this information actually derived from initial retinotopic (i.e., eye-centered) visual input? We conducted a spatial working memory task in which subjects remembered a cued location in spatiotopic or retinotopic coordinates while making guided eye movements during the memory delay. Surprisingly, after a saccade, subjects were significantly more accurate and precise at reporting retinotopic locations than spatiotopic locations. This difference grew with each eye movement, such that spatiotopic memory continued to deteriorate, whereas retinotopic memory did not accumulate error. The loss in spatiotopic fidelity is therefore not a generic consequence of eye movements, but a direct result of converting visual information from native retinotopic ... possible that stability could be achieved on the basis of retinotopic-plus-updating alone. Most current theories of visual stability favor some form of retinotopic-plus-updating, but they vary widely in how quickly, automatically, or successfully this updating might occur. For example, several groups have demonstrated that locations can be rapidly “remapped,” sometimes even in anticipation of an eye movement (20–22), but a recent set of studies has argued that updating requires not only remapping to the new location, but also extinguishing the representation at the previous location, and this latter process may occur on a slower time scale (23, 24). Moreover, it is an open question just how accurate we are at spatiotopic perception. -

Copyright 2013 Jeewon Ahn

Copyright 2013 JeeWon Ahn THE CONTRIBUTION OF VISUAL WORKING MEMORY TO PRIMING OF POP-OUT BY JEEWON AHN DISSERTATION Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Psychology in the Graduate College of the University of Illinois at Urbana-Champaign, 2013 Urbana, Illinois Doctoral Committee: Associate Professor Alejandro Lleras, Chair; Professor John E. Hummel; Professor Arthur F. Kramer; Professor Daniel J. Simons; Associate Professor Diane M. Beck ABSTRACT Priming of pop-out (PoP) refers to the facilitation in performance that occurs when a target-defining feature is repeated across consecutive trials in a pop-out singleton search task. While the underlying mechanism of PoP has been at the center of debate, a recent finding (Lee, Mozer, & Vecera, 2009) has suggested that PoP relies on the change of feature gain modulation, essentially eliminating the role of memory representation as an explanation for the underlying mechanism of PoP. The current study aimed to test this proposition to determine whether PoP is truly independent of guidance based on visual working memory (VWM) by adopting a dual-task paradigm composed of a variety of both pop-out search and VWM tasks. First, Experiment 1 tested whether the type of information represented in VWM mattered in the interaction between PoP and the VWM task. Experiment 1A aimed to replicate the previous finding, adopting a design almost identical to that of Lee et al., including a VWM task to memorize non-spatial features. Experiment 1B tested a different type of VWM task involving remembering spatial locations instead of non-spatial colors. -

NEUROPSYCHOLOGIA 83 Volume 83 , March 2016

NEUROPSYCHOLOGIA, Volume 83, March 2016. An international journal in behavioural and cognitive neuroscience. Vol. 83 (2016) 1–282. Special Issue: Functional Selectivity in Perceptual and Cognitive Systems - A Tribute to Shlomo Bentin (1946-2012). Guest Editors: Kalanit Grill-Spector, Leon Deouell, Rafael Malach, Bruno Rossion and Micah Murray.
Contents
1 L. Deouell, K. Grill-Spector, R. Malach, M.M. Murray and B. Rossion: Introduction to the special issue on functional selectivity in perceptual and cognitive systems - a tribute to Shlomo Bentin (1946-2012)
5 G. Yovel: Neural and cognitive face-selective markers: An integrative review
14 C. Jacques, N. Witthoft, K.S. Weiner, B.L. Foster, V. Rangarajan, D. Hermes, K.J. Miller, J. Parvizi and K. Grill-Spector: Corresponding ECoG and fMRI category-selective signals in human ventral temporal cortex
29 V. Rangarajan and J. Parvizi: Functional asymmetry between the left and right human fusiform gyrus explored through electrical brain stimulation
37 N. Freund, C.E. Valencia-Alfonso, J. Kirsch, K. Brodmann, M. Manns and O. Güntürkün: Asymmetric top-down modulation of ascending visual pathways in pigeons
48 K.S. Weiner and K. Zilles: The anatomical and functional specialization of the fusiform gyrus
63 N. Weiss, E. Mardo and G. Avidan: Visual expertise for horses in a case of congenital prosopagnosia
76 J.J.S. Barton and S.L. Corrow: Selectivity in acquired prosopagnosia: The segregation of divergent and convergent operations
A. -

Scene and Position Specificity in Visual Memory for Objects

Journal of Experimental Psychology: Learning, Memory, and Cognition, 2006, Vol. 32, No. 1, 58–69. Copyright 2006 by the American Psychological Association. 0278-7393/06/$12.00 DOI: 10.1037/0278-7393.32.1.58 Scene and Position Specificity in Visual Memory for Objects Andrew Hollingworth, University of Iowa. This study investigated whether and how visual representations of individual objects are bound in memory to scene context. Participants viewed a series of naturalistic scenes, and memory for the visual form of a target object in each scene was examined in a 2-alternative forced-choice test, with the distractor object either a different object token or the target object rotated in depth. In Experiments 1 and 2, object memory performance was more accurate when the test object alternatives were displayed within the original scene than when they were displayed in isolation, demonstrating object-to-scene binding. Experiment 3 tested the hypothesis that episodic scene representations are formed through the binding of object representations to scene locations. Consistent with this hypothesis, memory performance was more accurate when the test alternatives were displayed within the scene at the same position originally occupied by the target than when they were displayed at a different position. Keywords: visual memory, scene perception, context effects, object recognition. Humans spend most of their lives within complex visual environments, yet relatively little is known about how natural scenes are visually represented in the brain. One of the central issues in ... that work on scene perception and memory often assumes the existence of scene-level representations (e.g., Hollingworth & Henderson, 2002). -

Full Programme – 4-6 July 2017

HEA Annual Conference 2017 #HEAconf17 Generation TEF: Teaching in the spotlight Full programme – 4-6 July 2017
Day 1, 4 July 2017: Arts and Humanities strand
Day 1, 4 July 2017: Health and Social Care strand
Day 2, 5 July 2017: Strategy and Sector Priorities strand
Day 3, 6 July 2017: Social Sciences strand
Day 3, 6 July 2017: STEM strand
Day 1: 4 July 2017 – Arts and Humanities strand programme
Session | Type | Details | Room
9.00-9.45 | Registration | Refreshments available |
9.45-10.00 | Welcome | | C9
10.00-11.00 | Keynote | Dr Alison James, Head of Learning and Teaching and Acting Director of Academic Quality/Development (University of Winchester): Finding magic despite the metrics | C9
11.00-11.30 | Refreshments | |
11.30-12.00 | AH1.3 Oral presentation | ‘The Kingdom of Yes’: Students involvement in 1st Year curriculum design, Lisa Gaughan (University of Lincoln) | D5
11.30-12.00 | AH1.4 Oral presentation | Out of study experiences: The power of different learning environments to inspire student engagement, Polly Palmer (University of Hertfordshire) | D6
11.30-12.00 | AH1.5 Oral presentation | Teaching excellence: Conceptions and practice in the Humanities and Social Sciences, John Sanders (Open University) & Anna Mountford-Zimdars (King's College London) | D7
11.30-12.00 | AH1.8 Oral presentation | Digital solutions to student feedback in the Performing Arts, Robert Dean (University of Lincoln) | E5
11.30-12.00 | AH1.9 Oral presentation | Bristol Parkhive: An interdisciplinary approach to employability for Arts, Humanities and Education students in a supercomplex - | E6

Annual Report

Annual Report 2002/2003 The academic year 2002/2003 was marked by continued excellence in research, teaching and outreach, in service of humanity’s intellectual, social and technological needs.
Provost & President’s Statement: UCL is committed to using its excellence in research and teaching to enrich society’s intellectual, cultural, scientific, economic, environmental and medical spheres.
Research & Teaching: UCL continued to challenge the boundaries of knowledge through its programmes of research, while ensuring that the most promising students could benefit from its intense research-led teaching environment.
Outreach: In accordance with its founding principles, UCL continued to share the highest quality research and teaching with those who could most benefit from it, regardless of their background or circumstances.
Achievements: UCL’s academics conducted pioneering work at the forefront of their disciplines during this year.
The UCL Community: UCL’s staff, students, alumni and members of Council form a community which works closely together to achieve the university’s goals.
Supporting UCL: UCL pays tribute to those individuals and organisations who have made substantial financial contributions in support of its research and teaching.
Financial Information: UCL’s annual income has grown by almost 30% in the last five years. The largest component of this income remains research grants and contracts.
Contacting UCL: Join the many current and former students and staff, friends, businesses, funding councils and agencies, governments, foundations, trusts and charities that are involved with UCL.
Developing UCL: With the help of its supporters, UCL is investing in facilities fit for the finest research and teaching in decades to come.