GxP Systems on AWS

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Chapter 11. Fire Alarms and Democratic Accountability Charles M. Cameron and Sanford C. Gordon

Chapter 11. Fire Alarms and Democratic Accountability Charles M. Cameron and Sanford C. Gordon 1 Introduction Accountability is a situation that prevails in any relationship between a principal and agent in which the latter takes some action for which she may be “held to account”, that is, made to answer for those actions under a system of rewards and sanctions administered by the former. In a democratic setting, the electorate are the principals and public officials the agents; rewards and sanctions the loss or retention of the benefits, privileges, and powers of office-holding. Absent electoral accountability, citizens must fall back on the benevolence of their rulers or just plain luck. History shows these are weak reeds indeed. Thus, the accountability (or unaccountability) of elected officials to voters is a key component of any comprehensive theory of democratic governance. Electoral accountability faces huge obstacles in practice. One of the most pernicious is the problem of asymmetric information: while knowing what politicians are up to is surely a critical ingredient of holding incumbent officeholders to account, for most voters, becoming and remaining informed about whether elected officials are actually meeting their obligations can be tedious, time-consuming and difficult. How many citizens have the leisure to research the voting record of their member of Congress, for instance? And even then, outside of a few specific areas, how many have the knowledge to assess whether a given legislative enactment actually improved their welfare, all things considered? A lack of transparency about actions and the obscurity between means and ends can render nominal accountability completely moot in practice. In the face of prohibitive information costs, a simple intuition is the following: any mechanism that lowers informational costs for voters ought to enhance electoral accountability. -

The GxP Dictionary 1st Edition

Testo Expert Knowledge The GxP Dictionary 1st edition Definitions relating to GxP and Quality Assurance Note: Some of the information contained in this GxP Dictionary does not apply equally to all countries. Depending on the respective local legislation, other definitions may apply to certain terms and topics. The sections affected are not separately identified. Foreword Efficacy, identity and purity are quality attributes, which are required of products from the GMP-regulated environment. The term “Good Manufacturing Practice” sums up the quality assurance requirements from national and international regulations and laws. Other GxP forms have now been developed, whose scope also expands to adjacent sectors such as medical devices and life sciences. The complex requirements of the “GMP compliance” generate a variety of specific concepts and abbreviations. This GxP Dictionary explains the majority of terms relating to GxP, qualification, validation and quality assurance. It is intended as a compact reference guide and aid for all those involved in GMP, and does not purport to be complete. Testo SE & Co. KGaA GxP Dictionary Contents Terms and Definitions. 0-9: 21 CFR 210/211; 483. A: Action Limits; Active Pharmaceutical Ingredient (API); ADI (Acceptable Daily Intake); Airlock Concept; Annex. C: Calibration; CAPA; Capacity Test; CEP (Certificate of Suitability of Monographs of the European Pharmacopoeia); CFR; CFU (Colony-Forming Unit); cGMP; Challenge Test; Change Control; Clean Corridor -

Introduction to Medical Device Law and Regulation March 2-4, 2021 | Live Virtual Event Speaker Biographies

Introduction to Medical Device Law and Regulation March 2-4, 2021 | Live Virtual Event Speaker Biographies DEBORAH BAKER-JANIS joined NSF International in 2013 after working in the medical device industry for over 10 years, including in both regulatory affairs and product development. Her experience includes the development of pre‐clinical testing protocols, risk documentation, quality system and regulatory affairs standard operating procedures, sales training materials, safety reports and domestic and international regulatory strategies and submissions. Ms. Baker‐Janis has supported the development and commercialization of a wide range of products including cardiovascular devices, general and plastic surgery devices, gastroenterology devices and general hospital devices. Her educational background is in biomedical engineering. MAHNU DAVAR is a partner in the Washington, DC office of Arnold & Porter. His practice focuses on assisting FDA-regulated entities with complex regulatory and compliance matters. He has represented early stage medical technology companies, clinical labs, major academic research institutions, and some of the largest multinational drug and device companies in the oncology, ophthalmology, pain, and diabetes care spaces. Mr. Davar routinely counsels clients on the regulatory and compliance aspects of promotional launch campaigns, clinical research, educational grants and charitable giving, manufacturing and supply chain, deal diligence, and other mission-critical activities. He has conducted significant compliance investigations and audits for business operations in the US, Europe, and Asia, and has extensive experience defending companies in criminal and civil healthcare fraud investigations. He has also assisted clients to prepare for and navigate state and federal regulatory inspections. Mr. Davar is a lecturer at the University of Pennsylvania Law School, a Fellow of the Salzburg Global Seminar, and a former Fulbright Scholar to India. -

Intertek Pharmaceutical Services

PHARMACEUTICAL SERVICES Laboratory & Assurance Solutions INTERTEK PHARMACEUTICAL SERVICES Total Quality Assurance for Pharmaceutical Development and Manufacturing Across your product lifecycle, our expertise brings you the insight you need to accelerate pharmaceutical, biopharmaceutical or medical device product development. Our assurance solutions allow you to identify and mitigate risks associated with products, processes, operational and quality management systems, assets and supply chains. Our specialists bring many years of experience across a variety of product areas including: • Innovative and Generic Pharmaceuticals • Peptides, Proteins • Biosimilars • Monoclonal Antibodies • Antibody-drug Conjugates • Oligonucleotide Therapeutics • Vaccines • Orally Inhaled and Nasal Drug Products • Nutritional Products, Dietary Supplements • Consumer Healthcare and Cosmetics • Medical Devices • Veterinary Medicines • Over-the-Counter (OTC) Drugs Achieving Total Quality Assurance Our scientists, regulatory experts and auditors work with you at every stage of development and manufacturing, providing responsive, compliant solutions. You can rely on our global network of experts, laboratories and specialists to deliver support including analysis, bioanalysis, formulation development, biologics characterization, specialist inhalation development expertise, regulatory consultancy, risk assessment, quality auditing and supply chain management. We have delivered flexible contract services to the global pharmaceutical industry for over 25 years. At Intertek, -

Why AWS for GxP Regulated Workloads? An Executive Summary

Why AWS for GxP regulated workloads? An executive summary Move, or build, GxP workloads in the cloud with help from the AWS Life Sciences Practice The AWS life sciences practice and dedicated GxP experts help biopharma and medical device companies build new, or move, regulated workloads to the cloud. Organizations can achieve higher productivity, increased operational resilience, greater agility, improved transparency and traceability, and a lower TCO and carbon footprint on AWS compared to on-premises systems. AWS offers a secure cloud platform for automating compliance processes and providing enhanced management related to running your GxP workloads. Some of the biggest enterprises and innovative startups in life sciences have moved, or built new GxP systems on the AWS Cloud. Decades of expertise in the life sciences space enable us to help you move away from on-premises regulatory paradigms as your trusted advisor to becoming regulated cloud native. Evidence of success: AWS customers Moderna power their R&D and manufacturing environments on AWS to accelerate the development of mRNA therapeutics and vaccines. Idorsia house 90% of IT operations on AWS, including GxP compliant virtual data centers, giving them 75% cost savings compared to physical data centers. Bristol Myers Squibb use AWS to create consistent, scalable, and repeatable process to streamline Evidence of success: AWS partners Aizon use AWS to enable real-time visibility and predictive insights that address GxP compliance. Core Informatics developed a platform on AWS that enables GxP-validated applications in the cloud for its customers. Tracelink choose AWS to support its life science cloud solution to meet customer compliance needs. -

Supply Chain Operations Audit

Building 243 University Campus Cranfield Bedford MK43 0AL Tel: +44 (0)1234 750323 Fax: +44 (0)1234 752040 www.scp-uk.co.uk Supply Chain Operations Audit by Dr. David Lascelles, Supply Chain Planning UK Limited High Impact Supply Chains Supply chain excellence has a real impact on business strategy. Supply chain management is a high impact mission that goes to the roots of a company’s very competitiveness. High-impact supply chains win market share and customer loyalty, create shareholder value, extend the strategic capability and reach of the business. Independent research shows that excellent supply chain management can yield: · 25-50% reduction in total supply chain costs · 25-60% reduction in inventory holding · 25-80% increase in forecast accuracy · 30-50% improvement in order-fulfilment cycle time · 20% increase in after-tax free cash flows That’s what we mean by high-impact. Does a high-impact supply chain leverage your business enterprise? Five Critical Success Factors Building a high-impact supply chain represents one of the most exciting opportunities to create value – and one of the most challenging. The key to success lies in knowing which levers to pull. Our research, together with practical experience, reveals that high-impact supply chains are, essentially, a function of five critical success factors: 1. A clear strategy: for the entire supply chain, tuned to market opportunities and focussed on customer service needs. 2. An integrated organisation structure: enabling the supply chain to operate as a single synchronised entity. 3. Excellent processes: for implementing the strategy, embracing all plan-source-make-deliver operations. -

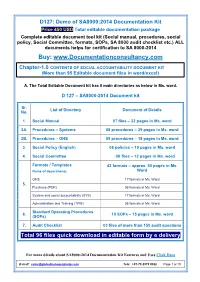

SA 8000:2014 Documents with Manual, Procedures, Audit Checklist

D127: Demo of SA8000:2014 Documentation Kit. Price 450 USD. Total editable documentation package: a complete editable document tool kit (social manual, procedures, social policy, Social Committee, formats, SOPs, SA 8000 audit checklist, etc.). All documents help with certification to SA 8000-2014. Buy: www.Documentationconsultancy.com Chapter-1.0 CONTENTS OF SOCIAL ACCOUNTABILITY DOCUMENT KIT (more than 95 editable document files in Word/Excel) A. The total editable document kit has 8 main directories, as below, in MS Word. D127 – SA8000-2014 Document kit, listed by directory number, directory and document details: 1. Social Manual: 07 files – 22 pages in MS Word. 2A. Procedures – Systems: 08 procedures – 29 pages in MS Word. 2B. Procedures – OHS: 09 procedures – 18 pages in MS Word. 3. Social Policy (English): 08 policies – 10 pages in MS Word. 4. Social Committee: 08 files – 12 pages in MS Word. 5. Formats / Templates: 43 formats – approx. 50 pages in MS Word, by department: OHS, 17 formats; Purchase (PUR), 05 formats; System and social accountability (SYS), 17 formats; Administration and Training (TRG), 05 formats. 6. Standard Operating Procedures (SOPs): 10 SOPs – 15 pages in MS Word. 7. Audit Checklist: 03 files of more than 150 audit questions. Total 96 files, quick download in editable form by e-delivery. For more details about SA8000:2014 Documentation Kit features and uses, click here. E-mail: [email protected] Tele: +91-79-2979 5322 Part: B. -

Guide to Inspections of Quality Systems

FOOD AND DRUG ADMINISTRATION GUIDE TO INSPECTIONS OF QUALITY SYSTEMS August 1999 Guide to Inspections of Quality Systems This document was developed by the Quality System Inspections Reengineering Team. Members: Office of Regulatory Affairs: Rob Ruff, Georgia Layloff, Denise Dion, Norm Wong. Center for Devices and Radiological Health: Tim Wells (Team Leader), Chris Nelson, Cory Tylka. Advisors: Chet Reynolds, Kim Trautman, Allen Wynn. Designed and produced by Malaka C. Desroches. Foreword This document provides guidance to the FDA field staff on a new inspectional process that may be used to assess a medical device manufacturer’s compliance with the Quality System Regulation and related regulations. The new inspectional process is known as the “Quality System Inspection Technique” or “QSIT”. Field investigators may conduct an efficient and effective comprehensive inspection using this guidance material which will help them focus on key elements of a firm’s quality system. Note: This manual is reference material for investigators and other FDA personnel. The document does not bind FDA and does not confer any rights, privileges, benefits or immunities for or on any person(s). Table of Contents: Performing Subsystem Inspections; Pre-announced Inspections; Getting Started; Management Controls (Inspectional Objectives, Decision Flow Chart, Narrative); Design Controls (Inspectional Objectives, Decision Flow Chart, Narrative); Corrective and Preventive Actions (CAPA) (Inspectional Objectives, Decision Flow Chart, Narrative); Medical Device Reporting (Inspectional Objectives, Decision Flow Chart, Narrative); Corrections & Removals (Inspectional Objectives, Decision Flow Chart, Narrative); Medical Device Tracking (Inspectional Objectives, Decision Flow Chart, Narrative); Production and Process Controls (P&PC). -

Social Accounting: a Practical Guide for Small-Scale Community Organisations

Social Accounting: A Practical Guide for Small Community Organisations and Enterprises Jenny Cameron, Carly Gardner, Jessica Veenhuyzen. Centre for Urban and Regional Studies, The University of Newcastle, Australia. Version 2, July 2010 Table of Contents: Introduction (How this Guide Came About); What is Social Accounting? (Benefits; Challenges); The Steps (Step 1: Scoping; Step 2: Accounting; Step 3: Reporting and Responding); Application I: The Beanstalk Organic Food; Application II: Fig Tree Community Garden; References and Resources. Acknowledgements We would like to thank The Beanstalk Organic Food and Fig Tree Community Garden for being such willing participants in this project. We particularly acknowledge the invaluable contribution of Rhyall Gordon and Katrina Hartwig from Beanstalk, and Craig Manhood and Bill Roberston from Fig Tree. Thanks also to Jo Barraket from The Australian Centre of Philanthropy and Nonprofit Studies, Queensland University of Technology, and Gianni Zappalà and Lisa Waldron from the Westpac Foundation for their encouragement and for providing an opportunity for Jenny and Rhyall to present a draft version at the joint QUT and Westpac Foundation Social Evaluation Workshop in June 2010. Thanks to the participants for their feedback. Finally, thanks to John Pearce for introducing us to Social Accounting and Audit, and for providing encouragement and feedback on a draft version. While we have adopted the framework and approach to suit the context of small and primarily volunteer-based community organisations and enterprises, we hope that we have remained true to the intentions of Social Accounting and Audit. -

Suggestions from Social Accountability International

Suggestions from Social Accountability International (SAI) on the work agenda of the UN Working Group on Human Rights and Transnational Corporations and Other Business Enterprises Social Accountability International (SAI) is a non-governmental, international, multi-stakeholder organization dedicated to improving workplaces and communities by developing and implementing socially responsible standards. SAI recognizes the value of the UN Working Group’s mandate to promote respect for human rights by business of all sizes, as the implementation of core labour standards in company supply chains is central to SAI’s own work. SAI notes that the UN Working Group emphasizes, in its invitation for proposals from relevant actors and stakeholders, the importance it places not only on promoting the Guiding Principles but also and especially on their effective implementation … resulting in improved outcomes. SAI would support this as key and, therefore, has teamed up with the Netherlands-based ICCO (Interchurch Organization for Development) to produce a Handbook on How To Respect Human Rights in the International Supply Chain to assist leading companies in the practical implementation of the Ruggie recommendations. SAI will also be offering classes and training to support use of the Handbook. SAI will target the Handbook not just at companies based in Western economies but also at those in emerging countries such as Brazil and India that have a growing influence on the world’s economy and whose burgeoning base of small and medium companies is moving forward in readiness, capacity and interest in managing its impact on human rights. SAI has long-term relationships working with businesses in India and Brazil, where national companies have earned SA8000 certification and where training has been provided on implementing human rights at work to numerous businesses of all sizes. -

Chemical Management Audit Certification Supplier Management Verification Solutions

CHEMICAL MANAGEMENT AUDIT CERTIFICATION SUPPLIER MANAGEMENT VERIFICATION SOLUTIONS Intertek’s Chemical Management Audit solution is designed to help retailers and brands verify the safe handling and proper disposal of chemicals, in order to promote chemical management best practices throughout the global supply chain network. Your challenges, our solutions Intertek’s Chemical Management Audit solution can help ensure effective chemical management across your supply chain. The process starts with an application of chemical expertise to provide a facility baseline risk indicator, from which auditing of your facility can be efficiently planned. Intertek’s web-based platform and software helps ensure our Chemical Management Audit approach is the ideal tool for evaluating, tracking and monitoring chemical management performance in factories, ultimately assuring overall performance improvements. Intertek’s Chemical Management Audit solution includes modular guidance for: • Chemical Supply & Policy • Management Practices • Improved chemical management system and practice • Benchmarked results with global visibility • Management transparency to enable targeted efforts • Identification of process efficiency and cost savings • Assistance with green product design and certification • Protect reputation risk, people and environment Upon satisfactory completion of the chemical management assessment and performance criteria, the manufacturer will receive a report which details performance as well as benchmarked comparisons with peers. The report showcases commitments of moving towards zero discharge of hazardous chemicals. unique techniques to best drive improvement through reduced environmental footprint, increased efficiency and cost savings. This Chemical Management Audit is offered as part of Intertek’s Supply Chain Solutions which help organizations all over the world manage compliance, risk, sustainability, and quality in the supply chain. FOR MORE INFORMATION UK +44 116 296 1620 AMER +1 800 810 1195 -

From Corporate Responsibility to Corporate Accountability

Hastings Business Law Journal Volume 16 Number 1 Winter 2020 Article 3 Winter 2020 From Corporate Responsibility to Corporate Accountability Min Yan Daoning Zhang Follow this and additional works at: https://repository.uchastings.edu/hastings_business_law_journal Part of the Business Organizations Law Commons Recommended Citation Min Yan and Daoning Zhang, From Corporate Responsibility to Corporate Accountability, 16 Hastings Bus. L.J. 43 (2020). Available at: https://repository.uchastings.edu/hastings_business_law_journal/vol16/iss1/3 This Article is brought to you for free and open access by the Law Journals at UC Hastings Scholarship Repository. It has been accepted for inclusion in Hastings Business Law Journal by an authorized editor of UC Hastings Scholarship Repository. For more information, please contact [email protected]. From Corporate Responsibility to Corporate Accountability Min Yan and Daoning Zhang I. INTRODUCTION The concept of corporate responsibility, or corporate social responsibility (“CSR”), has kept evolving since it first appeared. The emphasis was first placed on business people’s social conscience rather than on the company itself, which was well reflected by Howard Bowen’s landmark book, Social Responsibilities of the Businessman. Then CSR was defined as corporations’ responsibilities to society that extend beyond their economic and legal obligations. Since then, corporate responsibility has been thought to begin where the law ends. In other words, the concept of social responsibility largely excludes legal obedience. An analysis of 37 of the most used definitions of CSR also shows “voluntary” as one of the most common dimensions. Put differently, corporate responsibility reflects the belief that corporations have duties beyond generating profits for their shareholders.