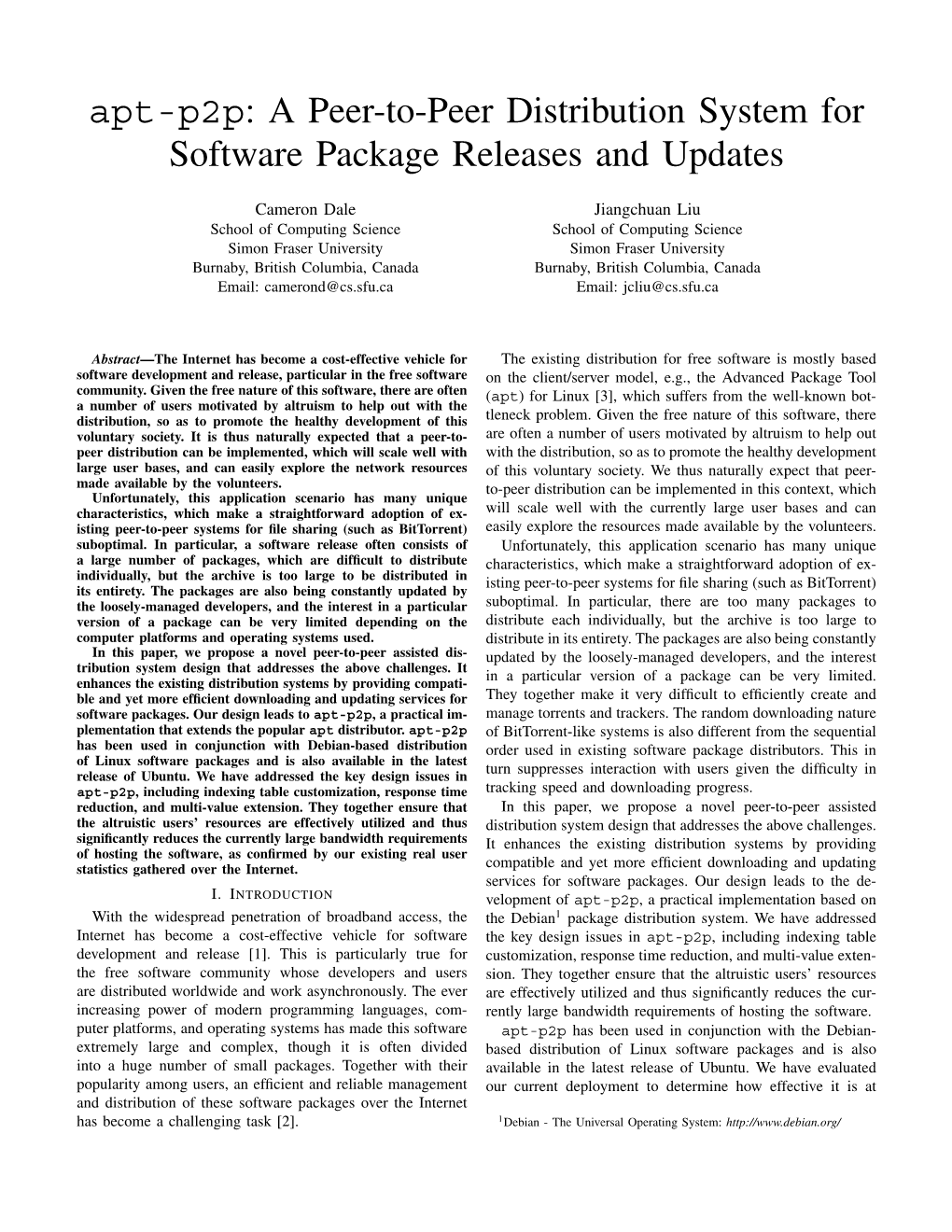

Apt-P2p: a Peer-To-Peer Distribution System for Software Package Releases and Updates

Total pages: 16


