Browser Fingerprinting: Exploring Device Diversity to Augment Authentication and Build Client-Side Countermeasures

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Differential Fuzzing the Webassembly

Master’s Programme in Security and Cloud Computing Differential Fuzzing the WebAssembly Master’s Thesis Gilang Mentari Hamidy MASTER’S THESIS Aalto University - EURECOM MASTER’STHESIS 2020 Differential Fuzzing the WebAssembly Fuzzing Différentiel le WebAssembly Gilang Mentari Hamidy This thesis is a public document and does not contain any confidential information. Cette thèse est un document public et ne contient aucun information confidentielle. Thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Technology. Antibes, 27 July 2020 Supervisor: Prof. Davide Balzarotti, EURECOM Co-Supervisor: Prof. Jan-Erik Ekberg, Aalto University Copyright © 2020 Gilang Mentari Hamidy Aalto University - School of Science EURECOM Master’s Programme in Security and Cloud Computing Abstract Author Gilang Mentari Hamidy Title Differential Fuzzing the WebAssembly School School of Science Degree programme Master of Science Major Security and Cloud Computing (SECCLO) Code SCI3084 Supervisor Prof. Davide Balzarotti, EURECOM Prof. Jan-Erik Ekberg, Aalto University Level Master’s thesis Date 27 July 2020 Pages 133 Language English Abstract WebAssembly, colloquially known as Wasm, is a specification for an intermediate representation that is suitable for the web environment, particularly in the client-side. It provides a machine abstraction and hardware-agnostic instruction sets, where a high-level programming language can target the compilation to the Wasm instead of specific hardware architecture. The JavaScript engine implements the Wasm specification and recompiles the Wasm instruction to the target machine instruction where the program is executed. Technically, Wasm is similar to a popular virtual machine bytecode, such as Java Virtual Machine (JVM) or Microsoft Intermediate Language (MSIL). -

Using a Mobile Device Or Tablet to Take Online Training Courses

Using a Mobile Device or Tablet to Take Online Training Courses ClickSafety online training courses run using Adobe Flash and need a web browser that supports Adobe Flash Player. Customers have the best experience when using the Mobile/Tablet Web Browser PUFFIN. Puffin Browser is available FREE for both Apple iPad/iPhone and Android mobile and tablet devices. Puffin Mobile App Installation Instructions APPLE iOS (iPad) Puffin Web Browser Free is the fastest free mobile browser. Download now and you can enjoy free Flash support 24/7 (this app is supported by Ads*). TO INSTALL, CLICK APP STORE ICON BELOW to download the app from the Apple App Store: ONCE INSTALLED on your iPad: • Go to your iPad home screen and find the Puffin App Icon • Open the Puffin App by clicking on the Icon • Locate the Website URL Bar at Top of App • Enter: www.iftilms.org • Enter USERNAME (Member ID) and PASSWORD CLICK LOG IN • Locate the OSHA 30 course and CLICK the GO button to start the course. ANDROID (Mobile Devices & Tablets) Puffin Web Browser Free is the fastest free mobile browser. Download now and you can enjoy free Flash support 24/7 (this app is supported by Ads*). TO INSTALL, CLICK APP STORE ICON BELOW to download the app from the Apple App Store: ONCE INSTALLED on your Android Mobile Device or Tablet: • Go to your Android device home screen and find the Puffin App Icon • Open the Puffin App by clicking on the Icon • Locate the Website URL Bar at Top of App • Enter: www.iftilms.org • Enter USERNAME (Member ID) and PASSWORD CLICK LOG IN • Locate the OSHA 30 course and CLICK the GO button to start the course. -

Rich Media Web Application Development I Week 1 Developing Rich Media Apps Today’S Topics

IGME-330 Rich Media Web Application Development I Week 1 Developing Rich Media Apps Today’s topics • Tools we’ll use – what’s the IDE we’ll be using? (hint: none) • This class is about “Rich Media” – we’ll need a “Rich client” – what’s that? • Rich media Plug-ins v. Native browser support for rich media • Who’s in charge of the HTML5 browser API? (hint: no one!) • Where did HTML5 come from? • What are the capabilities of an HTML5 browser? • Browser layout engines • JavaScript Engines Tools we’ll use • Browsers: • Google Chrome - assignments will be graded on Chrome • Safari • Firefox • Text Editor of your choice – no IDE necessary • Adobe Brackets (available in the labs) • Notepad++ on Windows (available in the labs) • BBEdit or TextWrangler on Mac • Others: Atom, Sublime • Documentation: • https://developer.mozilla.org/en-US/docs/Web/API/Document_Object_Model • https://developer.mozilla.org/en-US/docs/Web/API/Canvas_API/Tutorial • https://developer.mozilla.org/en-US/docs/Web/JavaScript/Guide What is a “Rich Client” • A traditional “web 1.0” application needs to refresh the entire page if there is even the smallest change to it. • A “rich client” application can update just part of the page without having to reload the entire page. This makes it act like a desktop application - see Gmail, Flickr, Facebook, ... Rich Client programming in a web browser • Two choices: • Use a plug-in like Flash, Silverlight, Java, or ActiveX • Use the built-in JavaScript functionality of modern web browsers to access the native DOM (Document Object Model) of HTML5 compliant web browsers. -

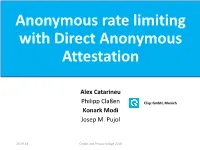

Anonymous Rate Limiting with Direct Anonymous Attestation

Anonymous rate limiting with Direct Anonymous Attestation Alex Catarineu Philipp Claßen Cliqz GmbH, Munich Konark Modi Josep M. Pujol 25.09.18 Crypto and Privacy Village 2018 Data is essential to build services 25.09.18 Crypto and Privacy Village 2018 Problems with Data Collection IP UA Timestamp Message Payload Cookie Type 195.202.XX.XX FF.. 2018-07-09 QueryLog [face, facebook.com] Cookie=966347bfd 14:01 1e550 195.202.XX.XX Chrome.. 2018-07-09 Page https://analytics.twitter.com/user/konark Cookie=966347bfd 14:06 modi 1e55040434abe… 195.202.XX.XX Chrome.. 2018-07-09 QueryLog [face, facebook.com] Cookie=966347bfd 14:10 1e55040434abe… 195.202.XX.XX Chrome.. 2018-07-09 Page https://booking.com/hotels/barcelona Cookie=966347bfd 16:15 1e55040434abe… 195.202.XX.XX Chrome.. 2018-07-09 QueryLog [face, facebook.com] Cookie=966347bfd 14:10 1e55040434abe… 195.202.XX.XX FF.. 2018-07-09 Page https://shop.flixbus.de/user/resetting/res Cookie=966347bfd 18:40 et/hi7KTb1Pxa4lXqKMcwLXC0XzN- 1e55040434abe… 47Tt0Q 25.09.18 Crypto and Privacy Village 2018 Anonymous data collection Timestamp Message Type Payload 2018-07-09 14 Querylog [face, facebook.com] 2018-07-09 14 Querylog [boo, booking.com] 2018-07-09 14 Page https://booking.com/hotels/barcelona 2018-07-09 14 Telemetry [‘engagement’: 0 page loads last week, 5023 page loads last month] More details: https://gist.github.com/solso/423a1104a9e3c1e3b8d7c9ca14e885e5 http://josepmpujol.net/public/papers/big_green_tracker.pdf 25.09.18 Crypto and Privacy Village 2018 Motivation: Preventing attacks on anonymous data collection Timestamp Message Type Payload 2018-07-09 14 querylog [book, booking.com] 2018-07-09 14 querylog [fac, facebook.com] … …. -

Demystifying Content-Blockers: a Large-Scale Study of Actual Performance Gains

Demystifying Content-blockers: A Large-scale Study of Actual Performance Gains Ismael Castell-Uroz Josep Sole-Pareta´ Pere Barlet-Ros Universitat Politecnica` de Catalunya Universitat Politecnica` de Catalunya Universitat Politecnica` de Catalunya Barcelona, Spain Barcelona, Spain Barcelona, Spain [email protected] [email protected] [email protected] Abstract—With the evolution of the online advertisement and highly parallel network measurement system [10] that loads tracking ecosystem, content-filtering has become the reference every website using one of the most relevant content-blockers tool for improving the security, privacy and browsing experience of each category and compares their performance. when surfing the Internet. It is also commonly believed that using content-blockers to stop unsolicited content decreases the time We found that, although we can observe some improvements needed for loading websites. In this work, we perform a large- in terms of effective page size, the results do not directly scale study with the 100K most popular websites on the actual translate to gains in loading time. In some cases, there could performance improvements of using content-blockers. We focus even be an overhead to be paid. This is the case for two of the our study on two relevant metrics for measuring the browsing studied plugins, especially in small and fast loading websites. performance; page size and loading time. Our results show that using such tools results in small improvements in terms of page The measurement system and methodology proposed in this size but, contrary to popular belief, it has a negligible impact in paper can also be useful for network and service administrators terms of loading time. -

Garder Le Contrôle Sur Sa Navigation Web

Garder le contrôle sur sa navigation web Christophe Villeneuve @hellosct1 @[email protected] 21 Septembre 2018 Qui ??? Christophe Villeneuve .21 Septembre 2018 La navigation… libre .21 Septembre 2018 Depuis l'origine... Question : Que vous faut-il pour aller sur internet ? Réponse : Un navigateur Mosaic Netscape Internet explorer ... .21 Septembre 2018 Aujourd'hui : ● Navigations : desktop VS mobile ● Pistage ● Cloisonnement .21 Septembre 2018 Ordinateur de bureau .21 Septembre 2018 Les (principaux) navigateurs de bureau .21 Septembre 2018 La famille… des plus connus .21 Septembre 2018 GAFAM ? ● Acronyme des géants du Web G → Google A → Apple F → Facebook A → Amazon M → Microsoft ● Développement par des sociétés .21 Septembre 2018 Exemple (R)Tristan Nitot .21 Septembre 2018 Firefox : ● Navigateur moderne ● Logiciel libre, gratuit et populaire ● Développement par la Mozilla Fondation ● Disponible pour tous les OS ● Respecte les standards W3C ● Des milliers d'extensions ● Accès au code source ● Forte communauté de développeurs / contributeur(s) .21 Septembre 2018 Caractéristiques Mozilla fondation ● Prise de décisions stratégiques pour leur navigateur Mozilla ● Mozilla Fondation n'a pas d'actionnaires ● Pas d'intérêts non Web (en-tête) ● Manifesto ● Etc. 2004 2005 2009 2013 2017 .21 Septembre 2018 Manifeste Mozilla (1/) ● Internet fait partie intégrante de la vie moderne → Composant clé dans l’enseignement, la communication, la collaboration,les affaires, le divertissement et la société en général. ● Internet est une ressource publique mondiale → Doit demeurer ouverte et accessible. ● Internet doit enrichir la vie de tout le monde ● La vie privée et la sécurité des personnes sur Internet → Fondamentales et ne doivent pas être facultatives https://www.mozilla.org/fr/about/manifesto/ .21 Septembre 2018 Manifeste Mozilla (2/) ● Chacun doit pouvoir modeler Internet et l’usage qu’il en fait. -

The Web. Webgl, Webvr and Gltf GDC, February 2017

Reaching the Largest Gaming Platform of All: The Web. WebGL, WebVR and glTF GDC, February 2017 Neil Trevett Vice President Developer Ecosystem, NVIDIA | President, Khronos | glTF Chair [email protected] | @neilt3d © Copyright Khronos Group 2017 - Page 1 Khronos News Here at GDC 2017 • WebGL™ 2.0 Specification Finalized and Shipping - https://www.khronos.org/blog/webgl-2.0-arrives • Developer preview on glTF™ 2.0 - https://www.khronos.org/blog/call-for-feedback-on-gltf-2.0 • Announcing OpenXR™ for Portable Virtual Reality - https://www.khronos.org/blog/the-openxr-working-group-is-here • Call for Participation in the 3D Portability Exploratory Group - A native API for rendering code that can run efficiently over Vulkan, DX12 and Metal khronos.org/3dportability • Adoption Grows for Vulkan®; New Features Released - Details here © Copyright Khronos Group 2017 - Page 2 OpenXR – Portable Virtual Reality © Copyright Khronos Group 2017 - Page 3 OpenXR Details OpenXR Working Group Members Design work has started in December Estimate 12-18 months to release WebVR would use WebGL and OpenXR © Copyright Khronos Group 2017 - Page 4 3D Portability API – Call For Participation ‘WebGL Next’ - Lifts ‘Portability API’ to A Portability Solution JavaScript and WebAssembly needs to address APIs and - Provides foundational graphics shading languages and GPU compute for the Web ‘Portability Solution’ Portability API Spec + Shading Language open source tools API MIR MSL Overlap ‘Portability API’ DX IL Specification Analysis HLSL GLSL Open source compilers/translators for shading and intermediate languages © Copyright Khronos Group 2017 - Page 5 Speakers and Topics for This Session Google WebGL 2.0 Zhenyao Mo, Kai Ninomiya, Ken Russell Three.js Three.js Ricardo Cabello (Mr.doob) Google WebVR Brandon Jones NVIDIA - Neil Trevett glTF Microsoft - Saurabh Bhatia All Q&A © Copyright Khronos Group 2017 - Page 6 WebGL 2.0 Zhenyao Mo, Kai Ninomiya, Ken Russell Google, Inc. -

SEED™ Assessment Guide for Family Planning Programming

SEED™ Assessment Guide for Family Planning Programming SEED™ Assessment Guide for Family Planning Programming © 2011 EngenderHealth EngenderHealth 440 Ninth Avenue New York, NY 10001 U.S.A. Telephone: 212-561-8000 Fax: 212-561-8067 e-mail: [email protected] www.engenderhealth.org This publication was made possible through support provided by the F.M. Kirby Foundation. The opinions expressed herein are those of the publisher and do not necessarily reflect the views of the foundation. Cover design, graphic design, and typesetting: Weronika Murray and Tor de Vries Printing: Automated Graphic Systems ISBN 978-1-885063-97-7 Printed in the United States of America. Printed on recycled paper. Suggested citation: EngenderHealth. 2011. The SEED assessment guide for family planning programming. New York. Photo credits: M. Tuschman/EngenderHealth, A. Fiorente/EngenderHealth, C. Svingen/EngenderHealth. ii ContEntS Acknowledgments .................................................................................................................................. iv Acronyms and Abbreviations ................................................................................................................... v Introduction ................................................................................................................................. 2 EngenderHealth’s SEED Programming Model .......................................................................................... 3 How to Use This Assessment Guide........................................................................................................ -

Whotracks. Me: Shedding Light on the Opaque World of Online Tracking

WhoTracks.Me: Shedding light on the opaque world of online tracking Arjaldo Karaj Sam Macbeth Rémi Berson [email protected] [email protected] [email protected] Josep M. Pujol [email protected] Cliqz GmbH Arabellastraße 23 Munich, Germany ABSTRACT print users and their devices [25], and the extent to Online tracking has become of increasing concern in recent which these methods are being used across the web [5], years, however our understanding of its extent to date has and quantifying the value exchanges taking place in on- been limited to snapshots from web crawls. Previous at- line advertising [7, 27]. There is a lack of transparency tempts to measure the tracking ecosystem, have been done around which third-party services are present on pages, using instrumented measurement platforms, which are not and what happens to the data they collect is a common able to accurately capture how people interact with the web. concern. By monitoring this ecosystem we can drive In this work we present a method for the measurement of awareness of the practices of these services, helping to tracking in the web through a browser extension, as well as inform users whether they are being tracked, and for a method for the aggregation and collection of this informa- what purpose. More transparency and consumer aware- tion which protects the privacy of participants. We deployed ness of these practices can help drive both consumer this extension to more than 5 million users, enabling mea- and regulatory pressure to change, and help researchers surement across multiple countries, ISPs and browser con- to better quantify the privacy and security implications figurations, to give an accurate picture of real-world track- caused by these services. -

Vmlogin Virtual Multi-Login Browser Instruction Ver

VMLogin virtual multi-login browser Instruction Ver 1.0 Skype: [email protected] Telegram1: Vmlogin Telegram2: vmlogin_us System Requirements Hardware requirements RAM: 4GB recommended; 1GB available disk space Effective GPU Supported operating systems Although VMLogin supports x86 (32-bit) systems, we recommend using VMLogin on x64 (64-bit) systems. OS supported by VMLogin Windows 10 Windows Server 2016 Windows 7 Windows 8 Software install Users can download the latest software installation package from the official website: vmlogin.us. The version used in this manual is 1.0.3.8. We can start the software installation package program, and the interface for selecting the installation language will be displayed: User can select the software language he likes, and can also change the language in the settings after installation. Currently supports Simplified Chinese and English. After the user select the language, enter the interface for selecting the installation directory: Here is generally installed by default, press the "Next" button, if the user customizes the installation directory, please pay attention to the directory permissions, the current user can edit. Generally, you need to create a desktop shortcut for convenient, we click the "Next" button to continue by default here. At this point we can click the "Install" button to install. We need to wait for the installation progress to complete. At this step, the software installation is complete. Software function 1. Start Software When we start the software, we will see the login interface. New users need to create a new account to log in. 2. Create Account To register as a new user, you need to use email as your account, set a password, and register. -

Seed Modules Reference Manual

Seed Modules Reference Manual May 20, 2009 Seed Modules Reference Manual ii Contents 1 readline module. 1 1.1 API Reference . .1 1.2 Examples . .1 2 SQLite module. 3 2.1 API Reference . .3 2.2 Examples . .3 3 GtkBuilder module. 5 3.1 API Reference . .5 3.2 Examples . .5 4 Sandbox module. 7 4.1 API Reference . .7 4.2 Examples . .7 iii Chapter 1 readline module. Robert Carr 1.1 API Reference The readline module allows for basic usage of the GNU readline library, in Seed. More advanced fea- tures may be added a a later time. In order to use the readline module it must be first imported. readline= imports.readline; readline.readline (prompt) Prompts for one line of input on standard input using prompt as the prompt. prompt A string to use as the readline prompt Returns A string entered on standard input. readline.bind (key, function) Binds key to function causing the function to be invokved when- ever key is pressed key A string specifying the key to bind function The function to invoke when key is pressed readline.done () Indicates that readline should finish the current line, and return from readline- .readline. Can be used in callbacks to implement features like multiline editing readline.buffer() Retrieve the current readline buffer Returns The current readline buffer readline.insert (string) Inserts string in to the current readline buffer string The string to insert 1.2 Examples Below are several examples of using the Seed readline module. For additional resources, consult the examples/ folder of the Seed source 1 CHAPTER 1. -

Tracking Users Across the Web Via TLS Session Resumption

Tracking Users across the Web via TLS Session Resumption Erik Sy Christian Burkert University of Hamburg University of Hamburg Hannes Federrath Mathias Fischer University of Hamburg University of Hamburg ABSTRACT modes, and browser extensions to restrict tracking practices such as User tracking on the Internet can come in various forms, e.g., via HTTP cookies. Browser fingerprinting got more difficult, as trackers cookies or by fingerprinting web browsers. A technique that got can hardly distinguish the fingerprints of mobile browsers. They are less attention so far is user tracking based on TLS and specifically often not as unique as their counterparts on desktop systems [4, 12]. based on the TLS session resumption mechanism. To the best of Tracking based on IP addresses is restricted because of NAT that our knowledge, we are the first that investigate the applicability of causes users to share public IP addresses and it cannot track devices TLS session resumption for user tracking. For that, we evaluated across different networks. As a result, trackers have an increased the configuration of 48 popular browsers and one million of the interest in additional methods for regaining the visibility on the most popular websites. Moreover, we present a so-called prolon- browsing habits of users. The result is a race of arms between gation attack, which allows extending the tracking period beyond trackers as well as privacy-aware users and browser vendors. the lifetime of the session resumption mechanism. To show that One novel tracking technique could be based on TLS session re- under the observed browser configurations tracking via TLS session sumption, which allows abbreviating TLS handshakes by leveraging resumptions is feasible, we also looked into DNS data to understand key material exchanged in an earlier TLS session.