Augmenting Bug Localization with Part-Of-Speech and Invocation

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Smart Execution of Distributed Application by Balancing Resources in Mobile Devices

ALMA MATER STUDIORUM - UNIVERSITÀ DI BOLOGNA, School of Engineering and Architecture, DISI, Computer Engineering. Degree thesis in Reti di Calcolatori M (Computer Networks M): Smart execution of distributed application by balancing resources in mobile devices and cloud-based avatars. Candidate: Giacomo Gezzi. Supervisor: Prof. Ing. Antonio Corradi. Co-supervisor: Prof. Cristian Borcea. Academic Year 2014/2015, Session III. Abstract: The goal of this thesis project is to build a middleware-level service for mobile devices that supports offloading code to a cloud infrastructure. In particular, the project focuses on migrating code to virtual machines dedicated to a single user. The VMs run the same operating system as the mobile device. Like previous work on computation offloading, the thesis project must guarantee better performance in terms of execution time and device battery usage. The broader goal is to adapt the principle of computation offloading to the context of mobile distributed systems, improving not only the performance of the individual device but also the execution of the distributed application itself. This is done through dynamic management of offloading decisions based not only on the state of the device but also on the wishes and/or state of the other users belonging to the same group. For example, a first user could influence the decisions of the other group members by specifying a particular requirement, such as high-quality information, a fast response, or a requirement based on other high-level information.

Design Pattern Implementation in Java and AspectJ

Design Pattern Implementation in Java and AspectJ. Jan Hannemann, University of British Columbia, 201-2366 Main Mall, Vancouver B.C. V6T 1Z4, jan [at] cs.ubc.ca. Gregor Kiczales, University of British Columbia, 201-2366 Main Mall, Vancouver B.C. V6T 1Z4, gregor [at] cs.ubc.ca.

ABSTRACT: AspectJ implementations of the GoF design patterns show modularity improvements in 17 of 23 cases. These improvements are manifested in terms of better code locality, reusability, composability, and (un)pluggability. The degree of improvement in implementation modularity varies, with the greatest improvement coming when the pattern solution structure involves crosscutting of some form, including one object playing multiple roles, many objects playing one role, or an object playing roles in multiple pattern instances.

Categories and Subject Descriptors: D.2.11 [Software Engineering]: Software Architectures – patterns, information hiding, and languages; D.3.3 [Programming Languages]: Language Constructs and Features –

successor in the chain. The event handling mechanism crosscuts the Handlers. When the GoF patterns were first identified, the sample implementations were geared to the current state of the art in object-oriented languages. Other work [19, 22] has shown that implementation language affects pattern implementation, so it seems natural to explore the effect of aspect-oriented programming techniques [11] on the implementation of the GoF patterns. As an initial experiment we chose to develop and compare Java [27] and AspectJ [25] implementations of the 23 GoF patterns. AspectJ is a seamless aspect-oriented extension to Java, which means that programming in AspectJ is effectively programming in Java plus aspects. By focusing on the GoF patterns, we are keeping the purpose, intent, and applicability of 23 well-known patterns, and only allowing the solution structure and solution implementation to change.

Weaving Eclipse Applications

Weaving Eclipse Applications. Fabio Calefato, Filippo Lanubile, Mario Scalas, Dipartimento di Informatica, Università di Bari, Italy, {calefato, lanubile, scalas}@uniba.it. Abstract. The Eclipse platform fully supports the ideas behind software components; in addition, it adds dynamic behavior, allowing components to be added, replaced or removed at runtime without shutting the application down. While layered software architectures may be implemented by assembling components, the way these components are wired together differs. In this paper we present our solution for Dependency Injection, which allows building highly decoupled Eclipse applications in order to implement real separation of concerns by systematically applying Aspect Oriented Programming and the Model-View-Presenter pattern, a variant of the classic Model-View-Controller. Keywords: Eclipse, Aspect Oriented Programming, Dependency Injection. 1 Introduction. The Dependency Inversion Principle [19] (DIP) states that (both high and low level) software parts should not depend on each other’s concrete implementation but, instead, be based on a common set of shared abstractions: one application of the DIP is Dependency Injection (DI), also called Inversion of Control (IoC) [11]. From an architectural perspective, DI makes the dependencies between software components explicit and provides a way to break the normal coupling between a system under test and its dependencies during automated testing [25]. This is possible because the software is composed by aggregating simpler, loosely coupled objects that are more easily unit-testable [32]. Additionally, by separating the clients from their dependencies, we also make their code simpler because there is no need for them to search for their collaborators.
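The dependency injection idea described in this excerpt can be illustrated with a minimal sketch. It is written in Python for brevity rather than in the Eclipse/Java setting the paper targets, and it does not reproduce the authors' solution. All names here (`Mailer`, `RecordingMailer`, `SignupService`) are hypothetical and chosen only for illustration.

```python
from typing import Protocol


class Mailer(Protocol):
    """Shared abstraction (hypothetical) that the client depends on."""

    def send(self, to: str, body: str) -> None: ...


class RecordingMailer:
    """Test double: records messages instead of sending them."""

    def __init__(self) -> None:
        self.sent = []

    def send(self, to: str, body: str) -> None:
        self.sent.append((to, body))


class SignupService:
    """Client that is handed its collaborator instead of searching for it."""

    def __init__(self, mailer: Mailer) -> None:
        # The dependency is injected through the constructor.
        self._mailer = mailer

    def register(self, email: str) -> None:
        self._mailer.send(email, "Welcome!")


# In a unit test, the real mailer is swapped for the recording double.
mailer = RecordingMailer()
SignupService(mailer).register("user@example.org")
print(mailer.sent)
```

Because `SignupService` only knows the `Mailer` abstraction, the coupling to a concrete mail implementation is broken during testing, which is the benefit the excerpt attributes to DI.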

Static Versus Dynamic Polymorphism When Implementing Variability in C++

Static Versus Dynamic Polymorphism When Implementing Variability in C++ by Samer AL Masri. A thesis submitted in partial fulfillment of the requirements for the degree of Master of Science, Department of Computing Science, University of Alberta. © Samer AL Masri, 2018. Abstract: Software Product Line Engineering (SPLE) creates configurable platforms that can be used to efficiently produce similar, and yet different, product variants. To implement SPLs, multiple variability implementation mechanisms have been suggested, including polymorphism. In this thesis, we talk about the trade-offs of using static versus dynamic polymorphism through a case study of IBM’s open-source Eclipse OMR project. Eclipse OMR is an open-source C++ framework for building robust language runtimes. To support the diverse languages and architectures targeted by the framework, OMR’s variability implementation uses a combination of build-system variability and static polymorphism. OMR developers now realize that their current static polymorphism implementation has its drawbacks and are considering using dynamic polymorphism instead. In order to study the trade-offs of using different kinds of polymorphism in OMR, it is crucial to collect function information and overload/override statistics about the current code base. Hence, we create OMRStatistics, a static analysis tool that collects such information about OMR’s source code. Using the information provided by OMRStatistics, OMR developers can make better design decisions on which variability extension points should be switched from static polymorphism to dynamic polymorphism. In addition, we report on our first-hand experience of changing the polymorphism used in OMR’s variability implementation mechanism from static to dynamic, the challenges we faced in the process, and how we overcame them.

Oracle FLEXCUBE Private Banking Release Notes

Product Release Notes. Oracle FLEXCUBE Private Banking, Release 12.1.0.0.0, [April] [2016]. Table of Contents: 1. Introduction (1.1 Purpose; 1.2 Background/Environment; 1.3 Third Party Software Details; 1.4 Release Contents; 1.5 Product Documentation). 2. Components of the Release (2.1 Documents Accompanying the Software; 2.2 Software Components). 1. Introduction. 1.1 Purpose. Purpose of this Release Note is to highlight the

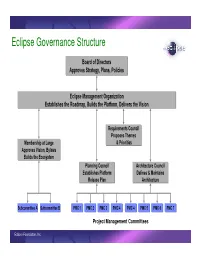

Eclipse Governance Structure

Eclipse Governance Structure. Board of Directors: Approves Strategy, Plans, Policies. Eclipse Management Organization: Establishes the Roadmap, Builds the Platform, Delivers the Vision. Requirements Council: Proposes Themes & Priorities. Membership at Large: Approves Vision, Bylaws; Builds the Ecosystem. Planning Council: Establishes Platform Release Plan. Architecture Council: Defines & Maintains Architecture. Subcommittee A, Subcommittee B. Project Management Committees: PMC 1, PMC 2, PMC 3, PMC 4, PMC 4, PMC 5, PMC 6, PMC 7. Eclipse Foundation, Inc.

Eclipse Development Roadmap (http://www.eclipse.org/org/councils/roadmap.html): Communicate the direction and timetable of the Eclipse projects. Solicit input from key stakeholders. Create an open, predictable environment to enable planning for commercial adoption: a predictable schedule of new releases and an understanding of technology direction. The Eclipse Roadmap consists of Themes and Priorities, Schedules, and an Architecture Plan. It will be updated every 6 months; the first iteration was done in March 2005.

Eclipse Roadmap: Development Councils (diagram labels: Strategic Members, PMC T&P’s, Add-in Providers, Market research, Requirements Council, Themes & Priorities, Planning Council, Platform Release Plan, Architecture Council, Architecture Plan, PMC Plans, PMC Arch).

Creating a Modular AspectJ Foundation for Simple and Rapid Extension Implementation

Creating a Modular AspectJ Foundation for Simple and Rapid Extension Implementation by Hristofor Mirchev. EWI FMT EXAMINATION COMMITTEE: dr. C.M. Bockisch, prof.dr.ir. M. Aksit. )*&*+&,*-. Abstract: The current state of aspect-oriented programming (AOP) has raised concerns regarding various limitations that AOP languages have. The issue is that AOP languages are not robust enough when the basis program is changed. There are many new proposals for AOP languages with new features that attempt to restrict or give more expressiveness to the programmer in order to force a new context where the problems can be mitigated. Some of those languages are designed as extensions of AspectJ. Existing open AspectJ compilers can be used for implementing such an extension, but this can quickly become a complicated task of extending the complex processes of lexing, parsing and weaving, of which the compilers offer low-level abstractions. Thus, there is a need for an easily extensible AspectJ foundation for a simpler and faster development of language extensions. We have developed such a foundation and in this thesis we describe the design of the implementation. We provide an overview of a testing process to determine its validity. Finally, we implement one proposal for an AspectJ extension and evaluate the extensibility and ease of use of our foundation in comparison to other existing AspectJ compilers. 1. Introduction. Motivation. An easy approach to implement an aspect-oriented language would be to extend an existing AOP compiler. This is not always the best approach,

Developing Java™ Web Applications

ECLIPSE WEB TOOLS PLATFORM. The Eclipse Series. Series Editors: Erich Gamma, Lee Nackman, John Wiegand. Eclipse is a universal tool platform, an open extensible integrated development environment (IDE) for anything and nothing in particular. Eclipse represents one of the most exciting initiatives hatched from the world of application development in a long time, and it has the considerable support of the leading companies and organizations in the technology sector. Eclipse is gaining widespread acceptance in both the commercial and academic arenas. The Eclipse Series from Addison-Wesley is the definitive series of books dedicated to the Eclipse platform. Books in the series promise to bring you the key technical information you need to analyze Eclipse, high-quality insight into this powerful technology, and the practical advice you need to build tools to support this evolutionary Open Source platform. Leading experts Erich Gamma, Lee Nackman, and John Wiegand are the series editors. Titles in the Eclipse Series: John Arthorne and Chris Laffra, Official Eclipse 3.0 FAQs, 0-321-26838-5; Frank Budinsky, David Steinberg, Ed Merks, Ray Ellersick, and Timothy J. Grose, Eclipse Modeling Framework, 0-131-42542-0; David Carlson, Eclipse Distilled, 0-321-28815-7; Eric Clayberg and Dan Rubel, Eclipse: Building Commercial-Quality Plug-Ins, Second Edition, 0-321-42672-X; Adrian Colyer, Andy Clement, George Harley, and Matthew Webster, Eclipse AspectJ: Aspect-Oriented Programming with AspectJ and the Eclipse AspectJ Development Tools, 0-321-24587-3; Erich Gamma and

Flexibility at the Roots of Eclipse

Vol.6 January 2007. Table of Contents. FEATURES: Dynamic Wizard Modeling with GMF: Using GMF to Build a Dynamic Wizard Framework and a Graphical Editor. Introduction to the Generic Eclipse Modeling System: Developing a Graphical Modeling Tool for Eclipse. Deploying the BIRT Viewer to JBoss: Disseminate Report Content to an Application Server. Subversive: The Eclipse Plug-In for Subversion. Enabling Integration and Interoperability for Eclipse based Development: An Introduction to the Corona Project. Flexibility at the Roots of Eclipse: Solving the GUI Dilemma: SWTSwing and Eclipse on Swing. DEPARTMENT: News & Trends.

29 Flexibility at the Roots of Eclipse. Solving the GUI Dilemma: SWTSwing and Eclipse on Swing. No trench in the world of Java is deeper than that between SWT and Swing or Eclipse and Sun. Unity is only found in the knowledge that everybody suffers from this argument. But how to end this almost religious battle over the righteous GUI-toolkit? How to bang their heads together if they only know one point of view, for them or against them! The sister projects SWTSwing and Eclipse on Swing (EOS) achieve this trick.

Understanding and Characterizing Changes in Bugs Priority: the Practitioners’ Perceptive

Understanding and Characterizing Changes in Bugs Priority: The Practitioners’ Perceptive. Rafi Almhana (Dept. of Computer Science, University of Michigan), Thiago Ferreira (Dept. of Computer Science, University of Michigan), Marouane Kessentini (Dept. Computer Science, University of Michigan), Tushar Sharma (Research Scientist, Siemens Corporate Technology).

Abstract: Assigning appropriate priority to bugs is critical for timely addressing important software maintenance issues. An underlying aspect is the effectiveness of assigning priorities: if the priorities of a fair number of bugs are changed, it indicates delays in fixing critical bugs. There has been little prior work on understanding the dynamics of changing bug priorities. In this paper, we performed an empirical study to observe and understand the changes in bugs’ priority to build a 3-W model on Why and When bug priorities change, and Who performs the change. We conducted interviews and a survey with practitioners as well as performed a quantitative analysis containing 225,000 bug reports, developers’ comments, and source code changes from 24 open-source systems.

for automated validation of bug priority change requests, and better documentation of these changes. The goal of this paper is to characterize the overall change process of bugs’ priority. We advocate that a critical and fundamental step in providing efficient support for managers and developers to enable them to validate bugs priority change is to understand the bugs priority dynamics; it involves discover and characterize Why and When bug priorities change, and Who performs the change. Thus, the primary goal of this paper is to observe and understand the changes in bugs priority to build a 3-

The Spring IDE Plug-In for Eclipse

Appendix: The Spring IDE Plug-in for Eclipse. The Spring IDE plug-in for Eclipse enhances the Eclipse environment by providing a variety of features that make it easier to work with Spring projects. The Spring IDE provides tools for creating, validating, viewing, and editing your Spring configuration files. A particularly attractive feature is the provision of autocompletion when editing bean definitions. Although the Spring IDE is a separate project from Spring itself, there are developers who participate in both projects, so there is excellent support for the latest Spring features. In this appendix, I discuss the installation of the Spring IDE and then briefly present the features related to editing and viewing bean and Spring Web Flow definitions. It is not possible to discuss all of the Spring IDE features in depth in this appendix. I would recommend installing and working with the plug-in initially as an aid to the creation of bean definition files. The autocompletion feature works in a manner nearly identical to the Java source autocompletion features. After you are familiar with these aspects of the plug-in, you should visit the Spring IDE website (http://springide.org/) to learn more about the tool. Installing the Plug-in. Although it is possible to download the Spring IDE components and install them manually, by far the easiest way to install the Spring IDE into Eclipse is to use the built-in Software Updates feature of the Eclipse environment. Version 2 of the Spring IDE supports versions of Spring up to and including Spring 2.1 and up to version 1 of Spring Web Flow.

A Domain Specific Aspect Language for IDE Events Johan Fabry, Romain Robbes, Marcus Denker

DIE: A Domain Specific Aspect Language for IDE Events. Johan Fabry, Romain Robbes, Marcus Denker. To cite this version: Johan Fabry, Romain Robbes, Marcus Denker. DIE: A Domain Specific Aspect Language for IDE Events. Journal of Universal Computer Science, Graz University of Technology, Institut für Informationssysteme und Computer Medien, 2014, 20 (2), pp.135-168. hal-00936376. HAL Id: hal-00936376, https://hal.inria.fr/hal-00936376, submitted on 25 Jan 2014. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Johan Fabry, Romain Robbes (PLEIAD Lab, Computer Science Department (DCC), University of Chile, Santiago, Chile, {jfabry / rrobbes}@dcc.uchile.cl); Marcus Denker (RMoD, INRIA Lille Nord Europe, Lille, France). Abstract: Integrated development environments (IDEs) have become the primary way to develop software. Besides just using the built-in features, it becomes more and more important to be able to extend the IDE with new features and extensions. Plugin architectures exist, but they show weaknesses related to unanticipated extensions and event handling. In this paper, we argue that a more general solution for extending IDEs is needed.