Learning Structured Representation for Text Classification Via

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Deep Learning for Natural Language Processing Creating Neural Networks with Python

Deep Learning for Natural Language Processing: Creating Neural Networks with Python. Palash Goyal, Sumit Pandey, Karan Jain (Bangalore, Karnataka, India). ISBN-13 (pbk): 978-1-4842-3684-0. ISBN-13 (electronic): 978-1-4842-3685-7. https://doi.org/10.1007/978-1-4842-3685-7. Library of Congress Control Number: 2018947502. Copyright © 2018 by Palash Goyal, Sumit Pandey, Karan Jain. Any source code or other supplementary material referenced by the author in this book is available to readers on GitHub via the book's product page, located at www.apress.com/978-1-4842-3684-0. For more detailed information, please visit www.apress.com/source-code.

Contents: Introduction; Chapter 1: Introduction to Natural Language Processing and Deep Learning; Python Packages; NumPy; Pandas; SciPy; Introduction to Natural -

Text Feature Mining Using Pre-Trained Word Embeddings

Degree project in mathematics, second cycle, 30 credits. Stockholm, Sweden, 2018. Text Feature Mining Using Pre-trained Word Embeddings. Henrik Sjökvist. KTH Royal Institute of Technology, School of Engineering Sciences. Degree Projects in Financial Mathematics (30 ECTS credits), Degree Programme in Industrial Engineering and Management, KTH Royal Institute of Technology, year 2018. Supervisor at Handelsbanken: Richard Henricsson. Supervisor at KTH: Henrik Hult. Examiner at KTH: Henrik Hult. TRITA-SCI-GRU 2018:167, MAT-E 2018:28. Royal Institute of Technology, School of Engineering Sciences, KTH SCI, SE-100 44 Stockholm, Sweden. URL: www.kth.se/sci

Abstract: This thesis explores a machine learning task where the data contains not only numerical features but also free-text features. In order to employ a supervised classifier and make predictions, the free-text features must be converted into numerical features. In this thesis, an algorithm is developed to perform that conversion. The algorithm uses a pre-trained word embedding model which maps each word to a vector. The vectors for multiple word embeddings belonging to the same sentence are then combined to form a single sentence embedding. The sentence embeddings for the whole dataset are clustered to identify distinct groups of free-text strings. The cluster labels are output as the numerical features. The algorithm is applied to a specific case concerning operational risk control in banking. The data consists of modifications made to trades in financial instruments. Each such modification comes with a short text string which documents the modification, a trader comment. -
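The pipeline described in this abstract (word vectors, then one vector per sentence, then clustering, then cluster labels as features) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the thesis's algorithm: the toy `EMBEDDINGS` table, the use of mean pooling to combine word vectors, the plain k-means routine, and the example trader comments are all hypothetical.

```python
import math

# Toy stand-in for a pre-trained embedding model (hypothetical values).
EMBEDDINGS = {
    "price": [1.0, 0.0],
    "amended": [0.9, 0.1],
    "date": [0.0, 1.0],
    "changed": [0.1, 0.9],
}

def sentence_embedding(text, embeddings=EMBEDDINGS):
    """Combine word vectors into one sentence vector (assumption: mean pooling)."""
    vectors = [embeddings[w] for w in text.lower().split() if w in embeddings]
    if not vectors:
        raise ValueError("no known words in: %r" % text)
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]

def kmeans(points, k, iterations=10):
    """Plain Lloyd's k-means, seeded with the first k points so it is deterministic."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: nearest centroid by Euclidean distance.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                  for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(x) / len(members) for x in zip(*members)]
    return labels

comments = ["price amended", "date changed", "amended price", "changed date"]
features = kmeans([sentence_embedding(c) for c in comments], k=2)
print(features)  # cluster label per comment, usable as a categorical feature
```

Comments with the same meaning land in the same cluster regardless of word order, which is what lets the cluster label stand in for the free text in a downstream classifier.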

A Deep Reinforcement Learning Based Multi-Step Coarse to Fine Question Answering (MSCQA) System

The Thirty-Third AAAI Conference on Artificial Intelligence (AAAI-19). A Deep Reinforcement Learning Based Multi-Step Coarse to Fine Question Answering (MSCQA) System. Yu Wang, Hongxia Jin. Samsung Research America.

Abstract: In this paper, we present a multi-step coarse to fine question answering (MSCQA) system which can efficiently process documents of different lengths by choosing appropriate actions. The system is designed using an actor-critic based deep reinforcement learning model to achieve multi-step question answering. Compared to previous QA models targeting datasets that mainly contain either short or long documents, our multi-step coarse to fine model takes the merits from multiple system modules, which can handle both short and long documents. The system hence obtains much better accuracy and faster training speed compared to the current state-of-the-art models. We test our model on four QA datasets, WIKIREADING, WIKIREADING LONG, CNN and SQuAD, and demonstrate 1.3%-1.7% accuracy improvements with 1.5x-3.4x training speed-ups in comparison to the [...]

[...] sentences, which helps reduce the computational workload. The fast model can be implemented using bag-of-words (BoW) or a convolutional neural network, and the slow model uses an RNN based model. The model gives decent performance on long documents in the WIKIREADING dataset, and shows significant speed-up compared to earlier models. This coarse to fine model, however, still has several disadvantages: 1. The model doesn't perform as well as baseline models on the full WIKIREADING dataset including both short and long documents (Hewlett et al. 2016). One reason is that the conventional RNN based models perform better on generating answers from the first few sentences of a document, and hence can obtain the correct results on short documents more accurately than a coarse-to-fine model. -

Deep Reinforcement Learning for Chatbots Using Clustered Actions and Human-Likeness Rewards

Deep Reinforcement Learning for Chatbots Using Clustered Actions and Human-Likeness Rewards. Heriberto Cuayáhuitl (School of Computer Science, University of Lincoln, Lincoln, United Kingdom); Donghyeon Lee, Seonghan Ryu, Sungja Choi, Inchul Hwang, Jihie Kim (Artificial Intelligence Research Group, Samsung Electronics, Seoul, South Korea).

Abstract: Training chatbots using the reinforcement learning paradigm is challenging due to high-dimensional states, infinite action spaces and the difficulty in specifying the reward function. We address such problems using clustered actions instead of infinite actions, and a simple but promising reward function based on human-likeness scores derived from human-human dialogue data. We train Deep Reinforcement Learning (DRL) agents using chitchat data in raw text, without any manual annotations. Experimental results using different splits of training data report the following. First, that our agents learn reasonable policies in the environments they get familiarised with, but their performance drops substantially when they are exposed to a test set of unseen dialogues. Second, that the choice of sentence embedding size between 100 and 300 dimensions is not significantly different on test data. Third, that our proposed human-likeness rewards are reasonable for training chatbots as long as they use lengthy dialogue histories of ≥10 sentences. -

Abusive and Hate Speech Tweets Detection with Text Generation

Abusive and Hate Speech Tweets Detection with Text Generation. A thesis submitted in partial fulfillment of the requirements for the degree of Master of Science by Abhishek Nalamothu, B.E., Saveetha School of Engineering, Saveetha University, India, 2016. 2019, Wright State University.

Wright State University Graduate School, June 25, 2019. I hereby recommend that the thesis prepared under my supervision by Abhishek Nalamothu entitled Abusive and Hate Speech Tweets Detection with Text Generation be accepted in partial fulfillment of the requirements for the degree of Master of Science. Amit Sheth, Ph.D., Thesis Director. Mateen M. Rizki, Ph.D., Chair, Department of Computer Science and Engineering. Committee on Final Examination: Amit Sheth, Ph.D.; Keke Chen, Ph.D.; Valerie L. Shalin, Ph.D. Barry Milligan, Ph.D., Interim Dean of the Graduate School.

Abstract: Nalamothu, Abhishek. M.S., Department of Computer Science and Engineering, Wright State University, 2019. Abusive and Hate Speech Tweets Detection with Text Generation. According to a Pew Research study, 41% of Americans have personally experienced online harassment and two-thirds of Americans have witnessed harassment in 2017. Hence, online harassment detection is vital for securing and sustaining the popularity and viability of online social networks. Machine learning techniques play a crucial role in automatic harassment detection. One of the challenges of using supervised approaches is training data imbalance. Existing text generation techniques can help augment the training data, but they are still inadequate and ineffective. This research explores the role of domain-specific knowledge to complement the limited training data available for training a text generator. -

Learning Sentence Embeddings Using Recursive Networks

Learning sentence embeddings using Recursive Networks. Anson Bastos, Independent scholar, Mumbai, India.

Abstract: Learning sentence vectors that generalise well is a challenging task. In this paper we compare three methods of learning phrase embeddings: 1) using LSTMs, 2) using recursive nets, 3) a variant of method 2 using the POS information of the phrase. We train our models on dictionary definitions of words to obtain a reverse dictionary application similar to Felix et al. [1]. To see if our embeddings can be transferred to a new task we also train and test on the Rotten Tomatoes dataset [2]. We train keeping the sentence embeddings fixed as well as with fine tuning.

I. INTRODUCTION. Many methods to obtain sentence embeddings have been researched in the past. Some of these include Hamid et al. [3], who have used LSTMs, and Ozan et al. [4], who have made use of recursive networks. Another approach, used by Mikolov et al. [4], is to include the paragraph in the context of the target word and then use gradient descent at inference time to find a new paragraph's embedding.

II. SYSTEM ARCHITECTURE. We have made use of three architectures as described below.

A. LSTM. We make use of the basic LSTM architecture as proposed by Zaremba et al. [9]. The equations describing the network are as follows:

$$i = \sigma(W_{i1} * h_t^{l-1} + W_{i2} * h_{t-1}^{l} + B_i) \tag{1}$$

$$f = \sigma(W_{f1} * h_t^{l-1} + W_{f2} * h_{t-1}^{l} + B_f) \tag{2}$$

$$o = \sigma(W_{o1} * h_t^{l-1} + W_{o2} * h_{t-1}^{l} + B_o) \tag{3}$$

-
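The gate equations (1) to (3) in this excerpt share one shape: a sigmoid applied to a weighted sum of the layer-below state at time t, the same-layer state at time t-1, and a bias. The sketch below shows that shared computation only. It assumes the `∗` in the equations denotes a matrix-vector product, and the function names and toy weights are hypothetical; the cell-state and output updates, which the excerpt cuts off, are not covered.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(W, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def gate(W1, W2, b, h_below, h_prev):
    """sigma(W1 * h_t^{l-1} + W2 * h_{t-1}^{l} + b), applied element-wise."""
    from_below = matvec(W1, h_below)   # input from layer l-1 at time t
    from_past = matvec(W2, h_prev)     # recurrent input from layer l at time t-1
    return [sigmoid(x + y + z) for x, y, z in zip(from_below, from_past, b)]

# Toy 2-unit example: with all-zero weights and biases every gate sits at 0.5.
zeros = [[0.0, 0.0], [0.0, 0.0]]
h_below, h_prev = [1.0, -1.0], [0.5, 0.5]
input_gate = gate(zeros, zeros, [0.0, 0.0], h_below, h_prev)
print(input_gate)  # [0.5, 0.5]
```

The input, forget and output gates differ only in which weight matrices and bias are passed in, so one helper covers all three.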

Learning Representations of Social Media Users

Learning Representations of Social Media Users, by Adrian Benton. A dissertation submitted to The Johns Hopkins University in conformity with the requirements for the degree of Doctor of Philosophy. Baltimore, Maryland, October, 2018. arXiv:1812.00436v1 [cs.LG] 2 Dec 2018. © 2018 by Adrian Benton. All rights reserved.

Abstract: Social media users routinely interact by posting text updates, sharing images and videos, and establishing connections with other users through friending. User representations are routinely used in recommendation systems by platform developers, targeted advertisements by marketers, and by public policy researchers to gauge public opinion across demographic groups. Computer scientists consider the problem of inferring user representations more abstractly; how does one extract a stable user representation, effective for many downstream tasks, from a medium as noisy and complicated as social media?

The quality of a user representation is ultimately task-dependent (e.g. does it improve classifier performance, make more accurate recommendations in a recommendation system) but there are also proxies that are less sensitive to the specific task. Is the representation predictive of latent properties such as a person's demographic features, socio-economic class, or mental health state? Is it predictive of the user's future behavior? In this thesis, we begin by showing how user representations can be learned from multiple types of user behavior on social media. We apply several extensions of generalized canonical correlation analysis to learn these representations and evaluate them on three tasks: predicting future hashtag mentions, friending behavior, and demographic features. We then show how user features can be employed as distant supervision to improve topic model fit. -

Learning Bilingual Sentence Embeddings Via Autoencoding and Computing Similarities with a Multilayer Perceptron

Learning Bilingual Sentence Embeddings via Autoencoding and Computing Similarities with a Multilayer Perceptron. Yunsu Kim (2), Hendrik Rosendahl (1, 2), Nick Rossenbach (2), Jan Rosendahl (2), Shahram Khadivi (1), Hermann Ney (2). (1) eBay, Inc., Aachen, Germany. (2) RWTH Aachen University, Aachen, Germany.

Abstract: We propose a novel model architecture and training algorithm to learn bilingual sentence embeddings from a combination of parallel and monolingual data. Our method connects autoencoding and neural machine translation to force the source and target sentence embeddings to share the same space without the help of a pivot language or an additional transformation. We train a multilayer perceptron on top of the sentence embeddings to extract good bilingual sentence pairs from nonparallel or noisy parallel data. Our approach shows promising performance on sentence alignment recovery and the WMT 2018 parallel corpus filtering tasks with only a single model.

[...] 2011; Xu and Koehn, 2017). Most of the participants in the WMT 2018 parallel corpus filtering task use large-scale neural MT models and language models as the features (Koehn et al., 2018). Bilingual sentence embeddings can be an elegant and unified solution for parallel corpus mining and filtering. They compress the information of each sentence into a single vector, which lies in a shared space between source and target languages. Scoring a source-target sentence pair is done by computing similarity between the source embedding vector and the target embedding vector. It is much more efficient than scoring by decoding, e.g. with a translation model. -
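Scoring a sentence pair by comparing two vectors in a shared space can be illustrated with cosine similarity. Note the substitution: the abstract says the authors train a multilayer perceptron to compute similarities, whereas this sketch uses plain cosine similarity as a simpler stand-in. The vectors, the `filter_pairs` helper and the 0.8 threshold are hypothetical.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)

def filter_pairs(pairs, threshold=0.8):
    """Keep (source, target) pairs whose embeddings are similar enough."""
    return [(src, tgt) for src, tgt, src_vec, tgt_vec in pairs
            if cosine_similarity(src_vec, tgt_vec) >= threshold]

# Hypothetical embeddings in a shared 3-dimensional space.
candidates = [
    ("guten Morgen", "good morning", [0.9, 0.1, 0.0], [0.8, 0.2, 0.1]),
    ("guten Morgen", "stock prices fell", [0.9, 0.1, 0.0], [0.0, 0.1, 0.9]),
]
kept = filter_pairs(candidates)
print(kept)
```

Each pair costs only one dot product and two norms, which is why embedding-based scoring is so much cheaper than decoding with a translation model.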

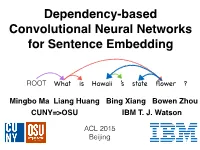

Dependency-Based Convolutional Neural Networks for Sentence Embedding

Dependency-based Convolutional Neural Networks for Sentence Embedding. Mingbo Ma, Liang Huang, Bing Xiang, Bowen Zhou. CUNY=>OSU, IBM T. J. Watson. ACL 2015, Beijing. [Slide figure: dependency tree rooted at ROOT over the sentence "What is Hawaii 's state flower ?"]

Convolutional Neural Network for Vision ("deep learning"): 16,000 CPU cores (on 1,000 machines) => 12 GPUs (on 3 machines); Google/Stanford (2012), Stanford/NVIDIA (2013).

Convolutional Neural Network for Go ("deep learning"): Google (Silver et al., Nature 2016) and Facebook (Tian and Zhu, ICLR 2016); 48 CPUs and 8 GPUs, 16 CPUs and 4 GPUs.

Convolutional Neural Network for Language: Kalchbrenner et al. (2014) and Kim (2014) apply CNNs to sentence modeling. It alleviates data sparsity by word embedding, and uses sequential order (sentence) instead of spatial order (image). Should use more linguistic and structural information! (Implementations based on the Python-based deep learning toolkit "Theano".)

Sequential Convolution. [Slide figure, repeated over several animation steps: the words "What is Hawaii 's state flower" at positions 1 to 6, their word representations, and the convolution direction.] Try different convolution filters and repeat the same process. -

Automatic Text Summarization Using Reinforcement Learning with Embedding Features

Automatic Text Summarization Using Reinforcement Learning with Embedding Features. Gyoung Ho Lee, Kong Joo Lee. Information Retrieval & Knowledge Engineering Lab., Chungnam National University, Daejeon, Korea.

Abstract: An automatic text summarization system can automatically generate a short and brief summary that contains a main concept of an original document. In this work, we explore the advantages of simple embedding features in a Reinforcement Learning approach to automatic text summarization tasks. In addition, we propose a novel deep learning network for estimating Q-values used in Reinforcement Learning. We evaluate our model by using ROUGE scores with DUC 2001, 2002, Wikipedia, and ACL-ARC data. Evaluation results show that our model is competitive with the previous models.

[...] so they are not applicable for text summarization tasks. A Reinforcement Learning method would be an alternative approach to optimize a score function in the extractive text summarization task. Reinforcement learning is to learn how an agent in an environment behaves in order to receive optimal rewards in a given current state (Sutton, 1998). The system learns the optimal policy that can choose a next action with the most reward value in a given state. That is to say, the system can evaluate the quality of a partial summary and determine the sentence to insert in the summary to get the most reward. It can produce a summary by inserting sentences one by one while considering the quality of the hypothetical summary. In this work, we propose an extractive text summarization model for a single document based on an RL method. -
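The idea of building a summary by inserting one sentence at a time, each time picking the sentence whose addition yields the best-scoring partial summary, can be sketched as a greedy loop. This is only an illustration of that selection step, not the paper's method: the paper learns Q-values with a deep network and evaluates with ROUGE, while the sketch below swaps in a hand-written word-coverage reward, and all function names and example sentences are hypothetical.

```python
def coverage_reward(summary, document_words):
    """Stand-in reward: fraction of the document's distinct words the summary covers."""
    covered = set()
    for sentence in summary:
        covered.update(sentence.lower().split())
    return len(covered & document_words) / len(document_words)

def greedy_summary(sentences, max_sentences=2):
    """Insert sentences one by one, always taking the highest-reward next action."""
    document_words = set(w for s in sentences for w in s.lower().split())
    summary = []
    remaining = list(sentences)
    while remaining and len(summary) < max_sentences:
        # Score each hypothetical summary obtained by adding one more sentence.
        best = max(remaining,
                   key=lambda s: coverage_reward(summary + [s], document_words))
        summary.append(best)
        remaining.remove(best)
    return summary

document = ["the cat sat", "the cat sat on the mat", "dogs bark loudly"]
print(greedy_summary(document))
```

A learned policy would replace `coverage_reward` with an estimated Q-value for each (partial summary, candidate sentence) pair, so that a choice can account for its effect on later insertions rather than only the immediate gain.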

Dependency-Based Convolutional Neural Networks for Sentence Embedding∗

Dependency-based Convolutional Neural Networks for Sentence Embedding. Mingbo Ma†, Liang Huang†‡, Bing Xiang‡, Bowen Zhou‡. †Graduate Center & Queens College, City University of New York. ‡IBM Watson Group, T. J. Watson Research Center, IBM. {mma2,lhuang} gc.cuny.edu, {lhuang,bingxia,zhou}@us.ibm.com

Abstract: In sentence modeling and classification, convolutional neural network approaches have recently achieved state-of-the-art results, but all such efforts process word vectors sequentially and neglect long-distance dependencies. To combine deep learning with linguistic structures, we propose a dependency-based convolution approach, making use of tree-based n-grams rather than surface ones, thus utilizing non-local interactions between words. Our model improves sequential baselines on all four sentiment and question classification tasks, and achieves the highest published accuracy on TREC.

1 Introduction. Convolutional neural networks (CNNs), originally invented in computer vision (LeCun et al., 1995), has recently attracted much attention in natural [...]

Indeed, in the sentiment analysis literature, researchers have incorporated long-distance information from syntactic parse trees, but the results are somewhat inconsistent: some reported small improvements (Gamon, 2004; Matsumoto et al., 2005), while some otherwise (Dave et al., 2003; Kudo and Matsumoto, 2004). As a result, syntactic features have yet to become popular in the sentiment analysis community. We suspect one of the reasons for this is data sparsity (according to our experiments, tree n-grams are significantly sparser than surface n-grams), but this problem has largely been alleviated by the recent advances in word embedding. Can we combine the advantages of both worlds?

So we propose a very simple dependency-based convolutional neural networks (DCNNs). Our model is similar to Kim (2014), but while his sequential CNNs put a word in its sequential context, ours considers a word and its parent, grand-parent, great-grand-parent, and siblings on the dependency tree. -
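The contrast between a word's sequential context and its context on the dependency tree can be made concrete by listing, for each word, the chain of itself, its parent, and its grandparent. The sketch below covers only these ancestor paths (siblings are left out), and it is an illustration rather than the authors' implementation: the `heads` array is a hand-written, hypothetical parse in which `-1` marks the root, and the function names are invented for this example.

```python
def ancestor_path(heads, index, length):
    """Return [index, parent, grandparent, ...], at most `length` nodes, stopping at the root."""
    path = [index]
    while len(path) < length and heads[path[-1]] != -1:
        path.append(heads[path[-1]])
    return path

def tree_ngrams(words, heads, length=3):
    """One tree-based n-gram per word: the word followed by its ancestors."""
    return [[words[i] for i in ancestor_path(heads, k, length)]
            for k in range(len(words))]

words = ["What", "is", "Hawaii", "'s", "state", "flower"]
# Hypothetical parse: heads[i] is the index of word i's parent, -1 for the root.
heads = [-1, 0, 5, 2, 5, 0]

for ngram in tree_ngrams(words, heads):
    print(ngram)
```

Under this parse "Hawaii" is grouped with "flower" and "What", words that are not adjacent to it on the surface, which is the kind of non-local interaction a sequential window of the same width would miss.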

SBERT-WK: A Sentence Embedding Method by Dissecting BERT-Based Word Models

SBERT-WK: A Sentence Embedding Method by Dissecting BERT-based Word Models. Bin Wang, Student Member, IEEE, and C.-C. Jay Kuo, Fellow, IEEE.

Abstract: Sentence embedding is an important research topic in natural language processing (NLP) since it can transfer knowledge to downstream tasks. Meanwhile, a contextualized word representation, called BERT, achieves the state-of-the-art performance in quite a few NLP tasks. Yet, it is an open problem to generate a high quality sentence representation from BERT-based word models. It was shown in a previous study that different layers of BERT capture different linguistic properties. This allows us to fuse information across layers to find better sentence representations. In this work, we study the layer-wise pattern of the word representation of deep contextualized models. Then, we propose a new sentence embedding method by dissecting BERT-based word models through geometric analysis of the space spanned by the word representation. It is called the SBERT-WK method.

[...] intermediate layers encode the most transferable features, representation from higher layers are more expressive in high-level semantic information. Thus, information fusion across layers has its potential to provide a stronger representation. Furthermore, by conducting experiments on patterns of the isolated word representation across layers in deep models, we observe the following property. Words of richer information in a sentence have higher variation in their representations, while the token representation changes gradually, across layers. This finding helps define "salient" word representations and informative words in computing universal sentence embedding. One limitation of BERT is that due to the large model size, it [...]