Improving TCP Throughput for Cloud Applications

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

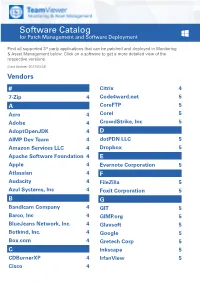

Software Catalog for Patch Management and Software Deployment

Software Catalog for Patch Management and Software Deployment. Find all supported 3rd party applications that can be patched and deployed in Monitoring & Asset Management below. Click on a software entry to get a more detailed view of the respective versions. (Last update: 2021/03/23) Vendors: 7-Zip; Acro; Adobe; AdoptOpenJDK; AIMP Dev Team; Amazon Services LLC; Apache Software Foundation; Apple; Atlassian; Audacity; Azul Systems, Inc; Bandicam Company; Barco, Inc; BlueJeans Network, Inc.; Botkind, Inc.; Box.com; CDBurnerXP; Cisco; Citrix; Code4ward.net; CoreFTP; Corel; CrowdStrike, Inc; dotPDN LLC; Dropbox; Evernote Corporation; FileZilla; Foxit Corporation; GIT; GIMP.org; Glavsoft; Google; Gretech Corp; Inkscape; IrfanView; Jabra; JAM Software; Juraj Simlovic; KeePass; LibreOffice; Lightning UK; LogMeIn, Inc.; Malwarebytes Corporation; Microsoft; MIT; Morphisec; Mozilla Foundation; Neevia Technology; NextCloud GmbH; Nitro Software, Inc.; Nmap Project; Node.js Foundation; Notepad++; PeaZip; Pidgin; Piriform; Plantronics, Inc.; Plex, Inc; Prezi Inc; Programmer's Notepad; PSPad; QSR International; Quest Software, Inc; R Foundation; RarLab; Real; RealVNC; RingCentral, Inc.; Scooter Software, Inc; Siber Systems; Simon Tatham; Skype Technologies S.A. -

Free Open Source Vnc

Free open source vnc click here to download TightVNC - VNC-Compatible Remote Control / Remote Desktop Software. free for both personal and commercial usage, with full source code available. TightVNC - VNC-Compatible Remote Control / Remote Desktop Software. It's completely free but it does not allow integration with closed-source products. UltraVNC: Remote desktop support software - Remote PC access - remote desktop connection software - VNC Compatibility - FileTransfer - Encryption plugins - Text chat - MS authentication. This leading-edge, cloud-based program offers Remote Monitoring & Management, Remote Access &. Popular open source Alternatives to VNC Connect for Linux, Windows, Mac, Self-Hosted, BSD and Free Open Source Mac Windows Linux Android iPhone. Download the original open source version of VNC® remote access technology. Undeniably, TeamViewer is the best VNC in the market. Without further ado, here are 8 free and some are open source VNC client/server. VNC remote access software, support server and viewer software for on demand remote computer support. Remote desktop support software for remote PC control. Free. All VNCs Start from the one piece of source (See History of VNC), and. TigerVNC is a high-performance, platform-neutral implementation of VNC (Virtual Network Computing), Besides the source code we also provide self-contained binaries for bit and bit Linux, installers for Current list of open bounties. VNC (Virtual Network Computing) software makes it possible to view and fully interact with one computer from any other computer or mobile. Find other free open source alternatives for VNC. Open source is free to download and remember that open source is also a shareware and freeware alternative. -

VNC User Guide 7 About This Guide

VNC® User Guide Version 5.3 December 2015. Trademarks: RealVNC, VNC and RFB are trademarks of RealVNC Limited and are protected by trademark registrations and/or pending trademark applications in the European Union, United States of America and other jurisdictions. Other trademarks are the property of their respective owners. Protected by UK patent 2481870; US patent 8760366. Copyright: Copyright © RealVNC Limited, 2002-2015. All rights reserved. No part of this documentation may be reproduced in any form or by any means or be used to make any derivative work (including translation, transformation or adaptation) without explicit written consent of RealVNC. Confidentiality: All information contained in this document is provided in commercial confidence for the sole purpose of use by an authorized user in conjunction with RealVNC products. The pages of this document shall not be copied, published, or disclosed wholly or in part to any party without RealVNC’s prior permission in writing, and shall be held in safe custody. These obligations shall not apply to information which is published or becomes known legitimately from some source other than RealVNC. Contact: RealVNC Limited, Betjeman House, 104 Hills Road, Cambridge CB2 1LQ, United Kingdom, www.realvnc.com. Contents: About This Guide; Chapter 1: Introduction; Principles of VNC remote control; Getting two computers ready to use; Connectivity and feature matrix; What to read next; Chapter 2: Getting Connected; Step 1: Ensure VNC Server is running on the host computer; Step 2: Start VNC -

K1000 Ultravnc Guide

K1000 UltraVNC Guide. What is UltraVNC? UltraVNC (sometimes written uVNC) is an open source application for the Microsoft Windows operating system that uses the VNC protocol to control another computer's screen remotely. How it works: A VNC system consists of a client, a server, and a communication protocol. • The VNC server is the program on the machine that shares its screen. The server passively allows the client to take control of it. • The VNC client (or viewer) is the program that watches, controls, and interacts with the server. The client controls the server. • The VNC protocol (RFB) is very simple, based on one graphic primitive from server to client ("Put a rectangle of pixel data at the specified X,Y position") and event messages from client to server. Example tutorial in this document: creating a complete UltraVNC MSI package for the K1000. To create a complete MSI package, this tutorial uses a simple third-party tool that collects all the settings you require and bundles them into an MSI file, which can then be deployed from the K1000. Tutorial - UltraVNC MSI Package. I. Install the UltraVNC MSI creator. A. Download the VNCed MSI creator from here (http://prdownloads.sourceforge.net/vnced/VNCed_UltraVNC_MSI_CREATOR_121.zip?download). B. Unzip the VNCed MSI creator zip file. Indigo Mountain Co Reg No: 7039194 Registered VAT No: 113 2991 31. II. Configure UltraVNC Settings. A. Inside the VNCed folder run: UltraVNC 1.0.9.6.1 - STEP1.config_ultravnc_64bit_settings.bat for x64; UltraVNC 1.0.9.6.1 - STEP1.config_ultravnc_settings.bat for x86. B. -

Unpermitted Resources

Process Check and Unpermitted Resources Common and Important Virtual Machines Parallels VMware VirtualBox CVMCompiler Windows Virtual PC Other Python Citrix Screen/File Sharing/Saving .exe File Name VNC, VPN, RFS, P2P and SSH Virtual Drives ● Dropbox.exe ● Dropbox ● OneDrive.exe ● OneDrive ● <name>.exe ● Google Drive ● etc. ● iCloud ● etc. Evernote / One Note ● Evernote_---.exe ● onenote.exe Go To Meeting ● gotomeeting launcher.exe / gotomeeting.exe TeamViewer ● TeamViewer.exe Chrome Remote ● remoting_host.exe www.ProctorU.com ● [email protected] ● 8883553043 Messaging / Video (IM, IRC) / .exe File Name Audio Bonjour Google Hangouts (chrome.exe - shown as a tab) (Screen Sharing) Skype SkypeC2CPNRSvc.exe Music Streaming ● Spotify.exe (Spotify, Pandora, etc.) ● PandoraService.exe Steam Steam.exe ALL Processes Screen / File Sharing / Messaging / Video (IM, Virtual Machines (VM) Other Saving IRC) / Audio Virtual Box Splashtop Bonjour ● iChat ● iTunes ● iPhoto ● TiVo ● SubEthaEdit ● Contactizer ● Things ● OmniFocus phpVirtualBox TeamViewer MobileMe Parallels Sticky Notes Team Speak VMware One Note Ventrilo Windows Virtual PC Dropbox Sandboxd QEM (Linux only) Chrome Remote iStumbler HYPERBOX SkyDrive MSN Chat Boot Camp (dual boot) OneDrive Blackboard Chat CVMCompiler Google Drive Yahoo Messenger Office (Word, Excel, Skype etc.) 2X Software Notepad Steam AeroAdmin Paint Origin AetherPal Go To Meeting Spotify Ammyy Admin Jing Facebook Messenger AnyDesk -

Collabkit – a Multi-User Multicast Collaboration System Based on VNC

Humboldt-Universität zu Berlin Institut für Informatik Lehrstuhl für Rechnerorganisation und Kommunikation Diplomarbeit CollabKit – A Multi-User Multicast Collaboration System based on VNC Christian Beier 19. April 2011 Gutachter Prof. Dr. Miroslaw Malek Prof. Dr. Jens-Peter Redlich Betreuer Peter Ibach <[email protected]> Abstract Computer-supported real-time collaboration systems offer functionality to let two or more users work together at the same time, allowing them to jointly create, modify and exchange electronic documents, use applications, and share information location-independently and in real-time. For these reasons, such collaboration systems are often used in professional and academic contexts by teams of knowledge workers located in different places. But also when used as computer-supported learning environments – electronic classrooms – these systems prove useful by offering interactive multi-media teaching possibilities and allowing for location-independent collaborative learning. Commonly, computer-supported real-time collaboration systems are realised using remote desktop technology or are implemented as web applications. However, none of the examined existing commercial and academic solutions were found to support concurrent multi-user interaction in an application-independent manner. When used in low-throughput shared-medium computer networks such as WLANs or cellular networks, most of the investigated systems furthermore do not scale well with an increasing number of users, making them unsuitable for multi-user collaboration of a high number of participants in such environments. For these reasons this work focuses on the design of a collaboration system that supports concurrent multi-user interaction with standard desktop applications and is able to serve a high number of users on low-throughput shared-medium computer networks by making use of multicast data transmission. -

Smartcode VNC Manager V5.0 Brochure

SmartCode™ VNC Manager v5.0. Award Winning Remote Computer Management Software. Powerful tool to control all your computers from one program. WELCOME TO NETWORK MANAGEMENT WITHOUT BARRIERS. In today’s large corporate computer networks, with hundreds of computers located across the globe, the ability to effectively manage all of these computers is the key to the efficient use of staff time and can be a major cost saving factor. With SmartCode VNC Manager 5.0 you can access and control computers from anywhere. It is a powerful remote administration and monitoring software application for both the business and home network environments. It is typically used for remote network management, remote system administration and in helpdesk environments. The application allows you to take remote control of any computer running VNC, Remote Desktop Services, Citrix ICA, Microsoft Hyper-V, Microsoft Virtual Server 2005, RAdmin, SSH, or Telnet servers. SmartCode VNC Manager was created to give you an easy-to-use yet powerful tool to manage, monitor and remotely control your computers. The VNC Manager software is used by thousands of small, midsized and large businesses worldwide. CENTRALIZED ADMINISTRATION: Administrators have quick access to all the management and administration features available in a single console. SmartCode VNC Manager includes over twenty tools that simplify management of Windows NT/XP/Vista/Windows 7 computers. VNC & Hyper-V THUMBNAILS VIEWS: SmartCode VNC Manager was the first application to introduce VNC thumbnails view. The new v5.0 release takes this feature one step further by adding support for monitoring of Microsoft Hyper-V virtual machines -

Employee Manual Is the Basis for Creating This Culture and Is Provided to All Employees

SOUTHERN ARKANSAS UNIVERSITY TECH EMPLOYEE HANDBOOK. NOTICE: This handbook does not create a contract of employment. None of the benefits or policies in this manual is intended by reason of their distribution to confer any rights or privileges upon any faculty or staff member, or to entitle any employee to remain employed by SAU Tech. The contents of this manual are presented for information only. While SAU Tech believes wholeheartedly in the plans, policies, and procedures described herein, they are not conditions of employment and may not be relied upon by any employee as a contract or other right. The provisions of the manual are subject to change at any time by SAU Tech, without notice. Changes to the manual may also result from, among other reasons, changes in federal and state laws, rules and regulations of state and federal agencies, or changes deemed necessary by the Board to meet changing needs of the college. Dear Southern Arkansas University Tech Employee, As a college, we seek to create and maintain a culture of mutual respect and cooperation among employees and students. Our goal is to foster a culture that takes pride in our work and in our relationships with each other, our students, our donors, and our community. This employee manual is the basis for creating this culture and is provided to all employees. We, as SAU Tech staff and faculty, are a team. We work together to create a mutually beneficial working and learning environment that promotes our programs, students, and community. Your individual opinions and ideas are important to our team and we encourage you to share them with us as an employee of SAU Tech. -

Interactive HPC Computation with Open Ondemand and Fastx

Interactive HPC Computation with Open OnDemand and FastX. eResearchNZ, February, 2021 [email protected] More Data, More Complexity ● Datasets and problem complexity [1] are growing faster than the performance of personal computational systems. ● In late 2018 a predictive study [2] of what was described as "the Global Datasphere" estimated that data that is "created, captured, or replicated ... will grow from 33 Zettabytes (ZB) in 2018 to 175 ZB by 2025". ● Performance improvements gained by increased clock-speed on a processor come at a cost of additional heat, causing a breakdown in Dennard scaling in the early 2000s [3] that has continued to contemporary times. ● There have been several technological improvements over the decades that have mitigated what would otherwise be a very unfortunate situation for the processing of large datasets (multicore, GPUs, parallel programming, SSD, NVMe, high-speed interconnect, etc.). ● A preferred choice is high performance computing (HPC) which, due to its physical architecture, operating system, and optimised application installations, is best suited for such processing. ● Command-line Tools can be 235x Faster than your Hadoop Cluster (https://adamdrake.com/command-line-tools-can-be-235x-faster-than-your-hadoop-cluster.html). SQL query runtime from 380 hours to 12 with two Unix commands (https://www.spinellis.gr/blog/20180805/). Compute on HPC, visualise locally ● The motto of Richard Hamming's Numerical Methods for Scientists and Engineers (1962) was "The purpose of computing is insight, not numbers". How is this insight to be achieved? From raw data, can you mentally create a real-world image of what the data represents? ● Complexity will be beyond most people's cognitive capacity, leading to the need for computer graphics as a requisite heuristic. -

How to Cheat at Configuring Open Source Security Tools

Visit us at www.syngress.com. Syngress is committed to publishing high-quality books for IT Professionals and delivering those books in media and formats that fit the demands of our customers. We are also committed to extending the utility of the book you purchase via additional materials available from our Web site. SOLUTIONS WEB SITE: To register your book, visit www.syngress.com/solutions. Once registered, you can access our [email protected] Web pages. There you may find an assortment of value-added features such as free e-books related to the topic of this book, URLs of related Web sites, FAQs from the book, corrections, and any updates from the author(s). ULTIMATE CDs: Our Ultimate CD product line offers our readers budget-conscious compilations of some of our best-selling backlist titles in Adobe PDF form. These CDs are the perfect way to extend your reference library on key topics pertaining to your area of expertise, including Cisco Engineering, Microsoft Windows System Administration, CyberCrime Investigation, Open Source Security, and Firewall Configuration, to name a few. DOWNLOADABLE E-BOOKS: For readers who can’t wait for hard copy, we offer most of our titles in downloadable Adobe PDF form. These e-books are often available weeks before hard copies, and are priced affordably. SYNGRESS OUTLET: Our outlet store at syngress.com features overstocked, out-of-print, or slightly hurt books at significant savings. SITE LICENSING: Syngress has a well-established program for site licensing our e-books onto servers in corporations, educational institutions, and large organizations. -

Remote Desktop Roundup: Teamviewer Vs. Splashtop Vs. Windows RDP

9/29/2020 Remote Desktop Roundup: TeamViewer vs. Splashtop vs. Windows RDP (https://www.howtogeek.com/257655/remote-desktop-roundup-teamviewer-vs.-splashtop-vs.-windows-rdp/). How-To Geek. Remote Desktop Roundup: TeamViewer vs. Splashtop vs. Windows RDP. JASON FITZPATRICK @jasonfitzpatric UPDATED DECEMBER 7, 2017, 1:01PM EDT. There are a plethora of remote desktop solutions on the market, and it can be tricky to pick the right one for your needs. Don’t worry though, we’ve done the legwork for you, cataloging and comparing the most popular remote desktop solutions so you can easily pick the right one. After the recent flurry of news stories regarding TeamViewer and compromised computers (you can read their press release here and our article about properly securing TeamViewer here), there’s been quite a bit of interest in alternative remote desktop programs. Fortunately, there’s practically as many ways to set up a remote desktop session as there are motivations for doing so. RELATED: How to Lock Down TeamViewer for More Secure Remote Access. Rather than simply throw a laundry list of remote desktop solutions at you, we’ve opted to group them into two major categories: operating-system-based remote desktop solutions, provided by the same company behind your operating system, and third-party remote desktop clients. Each of these solution categories offers something of value depending on your needs. Let’s take a look at each category now and highlight the benefits, shortcomings, and differences. As we run through the features of the different remote desktop solutions, keep a few important questions in mind. -

ECE 5745 Complex Digital ASIC Design Tutorial 0: Remote Access to Ecelinux

ECE 5745 Complex Digital ASIC Design Tutorial 0: Remote Access to ecelinux. School of Electrical and Computer Engineering, Cornell University. Revision: 2021-02-27-18-20. Contents: 1 Introduction; 2 Connecting to the Cornell VPN; 3 Remote Access via PowerShell; 3.1 Starting and Configuring PowerShell; 3.2 Logging into ecelinux Servers with PowerShell; 4 Remote Access via Mac Terminal; 4.1 Starting and Configuring Mac Terminal; 4.2 Logging into ecelinux Servers with Mac Terminal; 5 Remote Access via VS Code; 5.1 Installing VS Code on Your Laptop/Workstation; 5.2 Starting and Configuring VS Code; 5.3 Logging into ecelinux Servers with VS Code; 5.4 Using the VS Code Text Editor and File Explorer; 5.5 Troubleshooting Remote Access via VS Code; 6 Remote Access via X2Go; 6.1 Installing X2Go on Your Laptop/Workstation; 6.2 Starting and Configuring X2Go; 6.3 Logging into ecelinux Servers with X2Go; 6.4 Logging out of ecelinux Servers with X2Go; 6.5 Troubleshooting Remote Access via X2Go; 7 Remote Access via MobaXterm; 7.1 Installing MobaXterm on Your Windows Laptop/Workstation; 7.2 Starting and Configuring MobaXterm; 7.3 Logging into ecelinux Servers with MobaXterm; 8 Remote Access via Mac Terminal with XQuartz; 8.1 Installing XQuartz on Your Mac OS X Laptop/Workstation; 8.2 Starting and Configuring Mac Terminal