Estimating Risk Using Stochastic Volatility Models and Particle

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

ANNUAL REPORT 2018 Toward Industry-Leading Profitability CONTENTS

ANNUAL REPORT 2018 Toward industry-leading profitability CONTENTS 28 Businesses 60 Sustainable offering 145 Consolidated statement 29 SSAB Special Steels 61 Environmental benefits from of comprehensive income 33 SSAB Europe high-strength steels 146 Consolidated balance sheet 37 SSAB Americas 63 SSAB EcoUpgraded concept 147 Consolidated statement 41 Tibnor of changes in equity 44 Ruukki Construction 65 Sustainable operations 148 Consolidated cash flow 47 How we work with 66 Minimizing environmental statement BUSINESS REVIEW 2018 customers impact CORPORATE GOVERNANCE 49 Swedish Steel Prize 67 Material efficiency REPORT 2018 149 Parent Company 3 Introduction and recycling 149 Parent Company’s income 3 SSAB in brief 69 Energy consumption 106 Corporate Governance statement 5 2018 in brief and efficiency Report 2018 149 Parent Company’s other 6 Vision and values 71 Water recirculation 110 Board of Directors comprehensive income 7 SSAB’s value creation in the processes 114 Group Executive Committee 150 Parent Company’s balance 8 CEO’s review 72 CO2 efficient steel production sheet 10 SSAB as an investment 151 Parent Company’s statements 76 Responsible partner of changes in equity 11 Operating environment 77 High-performing 152 Parent Company’s cash flow 12 SSAB’s global presence organization statement 13 Global megatrends 84 Occupational health affecting SSAB SUSTAINABILITY and safety 153 5-year summary, Group 14 Steel market and REPORT 2018 87 Business ethics 154 Accounting and SSAB’s position and anticorruption valuation principles 17 Market -

SSAB Announces the Terms of Its Rights Issue

Not for release, publication or distribution, directly or indirectly, in or into Australia, Canada, Hong Kong, Japan, the United States or any other jurisdiction where such distribution of this press release would be subject to legal restrictions. PRESS RELEASE May 24, 2016 SSAB announces the terms of its rights issue SSAB AB (publ) (“SSAB” or the “Company”) announced on April 22, 2016 that the Board of Directors had resolved, subject to approval at the Extraordinary General Meeting (the “EGM”) on May 27, 2016, to launch a rights issue of approximately SEK 5 billion with preferential rights for existing shareholders. SSAB’s Board of Directors today announces the terms of the rights issue. The rights issue in brief Rights issue of Class B shares of approximately SEK 5 billion with preferential rights for existing shareholders in SSAB Shareholders in SSAB are entitled to subscribe for 7 new B shares for 8 old A and/or B shares held The subscription price is SEK 10.50 per share. For subscription of shares that will be registered with Euroclear Finland and listed on Nasdaq Helsinki, the subscription price is to be paid in EUR based on the European Central Bank EUR/SEK reference rate on May 31, 2016. The subscription price in EUR will be announced by way of a press release on or about May 31, 2016 The rights issue is subject to approval at the EGM on May 27, 2016 The record date is May 31, 2016 and the subscription period runs from June 3, 2016 until June 17, 2016 (both dates inclusive) or until such later date as decided by the Board of Directors SSAB’s two largest shareholders, Industrivärden and Solidium, together representing 28.7 per cent of the capital and 29.3 per cent of the votes, have undertaken to subscribe for their pro rata shares of the rights issue. -

Write the Title

Aktienytt Aktieanalys - fredag den 13 april 2018 Nyhetsrik vecka i väntan på rapportsäsongen Marknadskommentar Stockholmsbörsen bjöd sammantaget på en positiv utveckling under veckan och var vid fredagseftermiddagen kl 14:00 upp med 0,4% för veckan. I takt med att oron för handelskrig lugnade sig något så övertog geopolitisk oro marknadens huvudfokus. Tele2 Com Hem minskar risken i Tele2 Sverige Rek: KÖP Riktkurs: 125 SEK Vi anser att Com Hem passar mycket bra för Tele2, eftersom man har en Pris: 105 SEK fast B2C-verksamhet som Tele2 Sverige för närvarande saknar. Vi esti- Analytiker: Stefan Billing merar att affären kan addera 10 SEK per utspädd aktie till vår värdering. Vi estimerar en FCFPS-tillväxt på 15-20%, baserat på fulla synergier. Vi uppgraderar till Köp (Behåll). Riktkursen höjs till 125 SEK (110). Fokus på specialstål och Amerika SSAB Rek: KÖP Fortsatt stark utveckling och goda utsikter förväntas när SSAB släpper Riktkurs: 53 SEK sitt resultat för det första kvartalet. De största förbättringarna framöver Pris: 49 SEK väntas inom SSAB specialstål (volym och försäljningsmix) samt SSAB Analytiker: Ola Soedermark Amerika från den starka prisutvecklingen i USA. Vi upprepar vår Köpre- kommendation och vår riktkurs på 53 SEK. Bolag Rek. Riktkurs Pris Analytiker Veckans bolag Tele2 Köp 125 SEK 105 SEK Stefan Billing SSAB Köp 53 SEK 49 SEK Ola Soedermark Nordea Bank Köp 95 SEK 85 SEK Robin Rane Danske Bank Köp 253 DKK 221 DKK Robin Rane SHB Minska 91 SEK 98 SEK Robin Rane SKF Behåll 197 SEK 176 SEK Markus Almerud Boliden Köp 320 SEK 290 SEK Ola Soedermark Com Hem Köp 165 SEK 142 SEK Stefan Billing Nordic Semicon. -

Svenska Handelsbanken

SVENSKA HANDELSBANKEN Meeting Date: Wed, 25 Mar 2015 9:30am Type: AGM Issue date: Tue, 10 Mar 2015 Meeting Location: Hôtel’s Winter Garden, Royal entrance, Stallgatan 4, Stockholm Current Indices: FTSE EuroFirst Sector: Banks PROPOSALS ADVICE 1 Opening of the meeting Non-Voting Non-voting agenda item. 2 Elect Mr Sven Unger be chairman of the meeting Non-Voting Non-voting agenda item. 3 Establishment and approval of the list of voters Non-Voting Non-voting agenda item. 4 Approval of the agenda Non-Voting Non-voting agenda item. 5 Election of two persons to countersign the minutes Non-Voting Non-voting agenda item. 6 Determining whether the meeting has been duly called Non-Voting Non-voting agenda item. 7 A presentation of the annual accounts, Board’s report and auditors’ report Non-Voting Non-voting agenda item. 8 Receive the Annual Report For Disclosure is acceptable and the report was made available sufficiently before the meeting. However, the Company has been involved in alleged improper use of corporate resources; namely SCA’s corporate jet. Handelsbanken is one of SCA’s major shareholders. Said involvement led the Chairman of Handelsbanken Mr. Nyren to resign and was replaced by the CEO, Mr. Boman, who is candidate as Chairman at this AGM. It is considered that the Company should have discussed publicly appropriate use of corporate resources or acceptance of excessive gifts, which is however covered by their ethical guidelines. There seem to be insufficient checks and balances that could prevent such alleged improper use of resources from happening again. 9 Approve the dividend For The Board proposes a dividend of SEK 17.5 per share. -

Appendix A:1

Appendix A:1 Determination of the number of Directors and Deputy Directors to be elected and proposal for election of Directors and Deputies Number of Directors and Deputy Directors The Nomination Committee proposes that the Board of Directors shall comprise nine (9) Directors without Deputies. Board The Nomination Committee proposes re-election of the Directors Lars Westerberg, Bengt Andersson, Peggy Bruzelius, Börje Ekholm, Tom Johnstone, Anders Moberg, Gun Nilsson, Peder Ramel and Robert F. Connolly. The reason for not proposing new Board Members to be elected is that the Nomination Committee considers that the nine Board Members proposed by the Nomination Committee are very well suited for carrying out Husqvarna’s Board work over their coming term of office. The Nomination Committee proposes that Lars Westerberg is appointed chairman of the Board. Lars Westerberg Born 1948. M. Sc. Eng., MBA. Elected 2006. Member of the Remuneration Committee. President and CEO and Board Member of Autoliv Inc. Other major assignments: Board member of Haldex AB, Plastal Holding AB and SSAB. Previous positions: Senior management positions in Esab 1984–1994. President and CEO of Esab AB 1991. President and CEO of Gränges AB 1994. Holding in Husqvarna: 120,000 B-shares. Bengt Andersson Born 1944. Mech. Eng. President and CEO of Husqvarna AB Other major assignments: Board member of KABE AB, Chairman of Jönköping University Foundation, Sweden. Previous positions: Joined Electrolux in 1973. Sector manager in Facit-Addo 1976. Senior management positions within Electrolux Outdoor Products since 1979. Product-line Manager for Outdoor Products North America 1987. Product- line Manager for Forestry and Garden Products 1991, and Flymo 1996. -

SSAB in 'Pole Position' in Green Steel Race with New Plant

SSAB in ‘Pole Position’ in Green Steel Race With New Plant Hanna Hoikkala 3/24/2021 (Bloomberg) -- A Swedish joint venture that aims to remove fossil fuels from steelmaking is planning on establishing its first demonstration plant in 2026. Hybrit, an initiative run by steelmaker SSAB AB, iron ore producer LKAB and energy supplier Vattenfall AB, will locate the factory in the northern Swedish town of Gallivare, according to a statement. The demonstration plant will initially produce 1.3 million metric tons of fossil-free sponge iron, with a target to expand to 2.7 million tons by 2030, the companies said on Wednesday. That feedstock will be supplied to SSAB, among others, to help make fossil-free steel. Swedish Prime Minister Stefan Lofven described the announcement as “a huge industrial investment.” It shows “not only the whole of Sweden, but the whole world, that there is hope in climate change,” he said on social media. SSAB will also explore the prerequisites for converting to fossil-free steel production at its Lulea factory faster than planned under the Hybrit partnership. The company’s target of being the first to market with fossil-free steel remains in place, it said. The steelmaker’s Chief Executive Officer, Martin Lindqvist, told Bloomberg that it’s a positive development to see other business ventures now exploring this new type of production. “It is good for the climate,” he said of the development. “It also makes us on the margin even more confident that we have chosen the right path.” Analysts at Handelsbanken said in a note that the announcement keeps SSAB “in pole position” for the race to deliver green steel. -

Annual Report 2001 Annual Report 2001

31998_ASSA_Omslag_E_DS 02-03-14 13.25 Sida 1 ASSA ABLOY Annual Report 2001 Annual Report 2001 ASSA ABLOY AB (publ.) Postal Address: P.O. Box 70340, SE-107 23 Stockholm • Visiting Address: Klarabergsviadukten 90 Phone: +46 (0)8 506 485 00 • Fax: +46 (0)8 506 485 85 Registered No.: SE.556059-3575 • Registered Office: Stockholm, Sweden • www.assaabloy.com © Thierry Martinez 31998_ASSA_Omslag_E_DS 02-03-14 13.25 Sida 2 31998_ASSA_Fram_E_DS 02-03-14 13.12 Sida 1 Contents The year 2001 in brief 3 The President and CEO, Carl-Henric Svanberg 4 Group development 8 The ASSA ABLOY share 10 ASSA ABLOY and the lock industry 12 Strategy and financial objectives 14 Management philosophy 16 Environmental management philosophy 18 The trend towards higher security 20 ASSA ABLOY brand platform 22 ASSA ABLOY technology platforms 24 Integration Project – Volvo Ocean Race 26 Scandinavia 28 Finland 30 Central Europe 32 South Europe 34 United Kingdom 36 North America 38 South Pacific 42 New Markets 44 Hotel locks 48 Identification 49 Report of the Board of Directors 50 Consolidated income statement and cash flow statement 56 Consolidated balance sheet 57 Parent Company income statement and cash flow statement 58 Parent Company balance sheet 59 Accounting and valuation principles 60 Financial risk management 62 Notes 63 Audit report 72 ASSA ABLOY’s Board of Directors 73 ASSA ABLOY’s Group Management 74 Addresses 76 ASSA ABLOY / 2001 • 1 31998_ASSA_Fram_E_DS 02-03-14 13.12 Sida 2 The Annual General Meeting of ASSA ABLOY AB will be held at ‘Cirkus’, Djurgårdsslätten, Djurgården, Stockholm at 3 p.m. -

CREDIT RESEARCH June 4, 2021, 11:31 CET Real Estate | Sweden

[3,533 CREDIT RESEARCH June 4, 2021, 11:31 CET Real Estate | Sweden Diös No recommendation Heading for the Northern Lights MARKETING COMMUNICATION . A leader in stable and growing cities of northern Sweden . Strengthening credit profile . Handelsbanken has a mandate to issue bonds for Diös Leading market position in a growing, dynamic region Diös Fastigheter AB (Diös) has a market-leading position in 10 larger, growing cities in northern Sweden, as well as in Gävleborg County and Dalarna County. In our view, its property portfolio is well-diversified in terms of regional presence, property types and tenants, of which around 31% are related to government and municipalities, and 8% are residential rental properties. Based on data from Statistics Sweden and the regional municipalities, we expect the markets where Diös is active to continue to show healthy population and economic growth, which should in turn support the local property markets. About the company Strengthening credit profile We expect Diös to continue to strengthen its credit profile, including a sustained Profile: LTV of less than 55%, a lengthening of the debt maturity profile and an increased Diös Fastigheter AB, owns, manages and develops share of unsecured capital markets funding. Thanks to its cash-generative property commercial and residential properties, primarily in the larger cities of northern Sweden. The company was portfolio, we find that Diös’ credit metrics, such as debt-to-EBITDA and interest founded in 2005 and is headquartered in Östersund. coverage, are typically stronger than many of its Swedish real estate peers’. Total assets amounted to SEK 25bn as of March 31, 2021. -

Our Operations Are Based on Our Own Methodology for Developing and Refining the Companies in Which Traction Is an Owner

Our operations are based on our own methodology for developing and refining the companies in which Traction is an owner. ANNUAL REPORT 2009 Traction in brief raction is a publicly traded investment GOALS company with ownership interests in To achieve average annual growth of shareholders’ listed and unlisted companies. Our opera- equity of at least 25 percent. tions are based on our own methodology To create profitable growth in our wholly owned for developing and refining the compa- and partially owned companies. nies in which Traction is an owner. The To minimise the risk and increase the return on our Tprimary focus of the methodology is customer relation- projects. ships, capital flows and risk management. This metho- dology has evolved over Traction’s more than 35-year STRATEGY history. Traction does not focus on specific industries, To achieve Traction’s goals, the following are required: because our method is based on business acumen, which The ability to choose the right projects, in reality, the is applicable regardless of industry affiliation. Traction’s right partner – corporate managers. role as owner is based on an active and long-term enga- Project Managers who can provide corporate mana- gement, together with an entrepreneur or corporate ma- gers with the support and complementary expertise nagement. In addition hereto Traction conducts invest- they require to carry out the business project. ment operations aimed at achieving a good return on the Project Managers with varying expertise and back- Company’s capital. ground to cover the varying needs of each company. Project Managers with the ability to step in, when BUSINESS CONCEPT necessary, as corporate managers during transitional To apply Traction’s business development method in periods, until a new manager has been appointed. -

Ssab Annual Report 2015 Toward Industry-Leading Profitability Ssab 2015 Business Review Corporate Governance Report Gri Report Financial Reports 2015

SSAB ANNUAL REPORT 2015 TOWARD INDUSTRY-LEADING PROFITABILITY SSAB 2015 BUSINESS REVIEW CORPORATE GOVERNANCE REPORT GRI REPORT FINANCIAL REPORTS 2015 2 CONTENTS BUSINESS CORPORATE GOVERNANCE FINANCIAL REVIEW REPORT REPORTS 2015 4 Introduction 43 Sustainable offering 1 Corporate governance report 2015 2 Board of Directors´ Report 4 SSAB in brief 44 How we work with customers 5 Board of Directors 6 Year 2015 in brief 46 Environmental benefits 11 Group Executive Committee 23 Group 7 Vision and values with special steels 23 Consolidated income statement 8 SSAB in the value chain 52 Energy-efficient construction 23 Consolidated statement of comprehensive income 10 CEO’s review solutions 24 Consolidated balance sheet 53 Corporate identity and brands GRI REPORT 25 Consolidated statement of changes in equity 12 Operating context 26 Consolidated cash flow statement 13 Market development 55 Sustainable operations 14 Global megatrends and 56 Production sites 27 Parent Company SSAB’s response 57 Sustainable and efficient 27 Income statement production 27 Other comprehensive income 16 Our strategy 60 High-performing organization 28 Balance sheet 17 Taking the Lead 63 Health and safety 29 Changes in equity 22 Financial targets 30 Cash flow statement 23 Sustainability strategy 66 Responsible partner 2 SSAB’s sustainability approach 24 Sustainability targets 67 Responsible business practices 2 Sustainability reporting 2015 31 5-year summary, Group 71 Responsible sourcing 6 Sustainability management 32 Accounting and valuation principles 25 Our businesses -

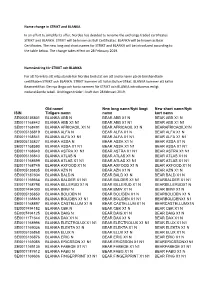

Name Change in STRIKT and BLANKA in an Effort to Simplify Its

Name change in STRIKT and BLANKA In an effort to simplify its offer, Nordea has decided to rename the exchange traded certificates STRIKT and BLANKA. STRIKT will be known as Bull Certificates. BLANKA will be known as Bear Certificates. The new long and short names for STRIKT and BLANKA will be introduced according to the table below. The change takes effect on 28 February 2019. Namnändring för STRIKT och BLANKA För att förenkla sitt erbjudande har Nordea beslutat om att ändra namn på de börshandlade certifikaten STRIKT och BLANKA. STRIKT kommer att kallas Bullcertifikat. BLANKA kommer att kallas Bearcertifikat. De nya långa och korta namnen för STRIKT och BLANKA introduceras enligt nedanstående tabell. Ändringen träder i kraft den 28 februari 2019. Old name/ New long name/Nytt långt New short name/Nytt ISIN Tidigare namn namn kort namn SE0005136801 BLANKA ABB N BEAR ABB X1 N BEAR ABB X1 N SE0011168442 BLANKA ABB X1 N1 BEAR ABB X1 N1 BEAR ABB X1 N1 SE0011168491 BLANKA AFRICAOIL X1 N BEAR AFRICAOIL X1 N BEARAFRICAOILX1N SE0005136819 BLANKA ALFA N BEAR ALFA X1 N BEAR ALFA X1 N SE0011168541 BLANKA ALFA X1 N1 BEAR ALFA X1 N1 BEAR ALFA X1 N1 SE0005136827 BLANKA ASSA N BEAR ASSA X1 N BEAR ASSA X1 N SE0011168590 BLANKA ASSA X1 N1 BEAR ASSA X1 N1 BEAR ASSA X1 N1 SE0011168640 BLANKA ASTRA X1 N1 BEAR ASTRA X1 N1 BEAR ASTRA X1 N1 SE0005136843 BLANKA ATLAS N BEAR ATLAS X1 N BEAR ATLAS X1 N SE0011168699 BLANKA ATLAS X1 N1 BEAR ATLAS X1 N1 BEAR ATLAS X1 N1 SE0011168749 BLANKA AXFOOD X1 N BEAR AXFOOD X1 N BEAR AXFOOD X1 N SE0005136835 BLANKA AZN N BEAR -

Annual Report 2005 (PDF)

Annual Report 2005 Contents The Year in Brief 1 Comments from the CEO 2 This is TeliaSonera 4 Strategy for Profitable Growth 5 The Market for Telecom Services 6 Growth Initiatives 7 Cost Efficiency 10 Personnel and Competence 12 TeliaSonera in Society 13 The TeliaSonera Share 14 Corporate Governance Report 16 Report of the Directors 23 Risk Factors 33 Consolidated Financial Statements 36 Parent Company Financial Statements 80 Proposed Appropriation of Earnings 92 Auditors’ Report 93 Ten-Year Summary 94 Forward-Looking Statements 97 Annual General Meeting 2006 98 TeliaSonera’s Annual Report is available at www.teliasonera.com/investorrelations under the Report section. Hardcopies of the Annual Report can be printed from the web or be ordered from the web or by phone at +46 (0) 372 851 42. We also publish an Annual Review, which is a summary of the year. The Annual Review is available at www.teliasonera.com/investorrelations under the Report section. A printed version can be ordered from the web or by phone at +46 (0) 372 851 42. TeliaSonera AB is a public limited liability company incorporated under the laws of Sweden. TeliaSonera was created as a result of the merger of Telia AB and Sonera Corporation in December 2002. In this annual report, references to “Group,” “Company,” “we,” “our,” “TeliaSonera” and “us” refer to TeliaSonera AB or TeliaSonera AB together with its subsidiaries, depending upon the context. The Year in Brief The Year in Brief Net sales increased 7.0 percent to SEK 87,661 million (81,937) driven by strong mobile and broadband growth.