Algorithms and Data Structures for New Models of Computation

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Software for Numerical Computation

Purdue University, Purdue e-Pubs. Department of Computer Science Technical Reports, Department of Computer Science, 1977. Software for Numerical Computation. John R. Rice, Purdue University, [email protected]. Report Number: 77-214. Rice, John R., "Software for Numerical Computation" (1977). Department of Computer Science Technical Reports. Paper 154. https://docs.lib.purdue.edu/cstech/154 This document has been made available through Purdue e-Pubs, a service of the Purdue University Libraries. Please contact [email protected] for additional information. John R. Rice, Department of Computer Sciences (Mathematical Sciences), Purdue University, West Lafayette, IN 47907. CSD-TR 214, January 12, 1977. Article to appear in the book: Research Directions in Software Technology. INTRODUCTION AND MOTIVATING PROBLEMS. The purpose of this article is to examine the research developments in software for numerical computation. Research and development of numerical methods is not intended to be discussed for two reasons. First, a reasonable survey of the research in numerical methods would require a book. The COSERS report [Rice et al, 1977] on Numerical Computation does such a survey in about 100 printed pages and even so the discussion of many important fields (never mind topics) is limited to a few paragraphs. Second, the present book is focused on software and thus it is natural to attempt to separate software research from numerical computation research. This, of course, is not easy as the two are intimately intertwined. -

Quantum Hypercomputation—Hype Or Computation?

Amit Hagar (HPS Department, Indiana University, Bloomington, IN, 47405, [email protected]) and Alex Korolev (Philosophy Department, University of BC, Vancouver, BC, [email protected]). February 19, 2007. Abstract: A recent attempt to compute a (recursion-theoretic) non-computable function using the quantum adiabatic algorithm is criticized and found wanting. Quantum algorithms may outperform classical algorithms in some cases, but so far they retain the classical (recursion-theoretic) notion of computability. A speculation is then offered as to where the putative power of quantum computers may come from. 1 Introduction. Combining physics, mathematics and computer science, quantum computing has developed in the past two decades from a visionary idea (Feynman 1982) to one of the most exciting areas of quantum mechanics (Nielsen and Chuang 2000). The recent excitement in this lively and fashionable domain of research was triggered by Peter Shor (1994) who showed how a quantum computer could exponentially speed up classical computation and factor numbers much more rapidly (at least in terms of the number of computational steps involved) than any known classical algorithm. Shor's algorithm was soon followed by several other algorithms that aimed to solve combinatorial and algebraic problems, and in the last few years theoretical study of quantum systems serving as computational devices has achieved tremendous progress. According to one authority in the field (Aharonov 1998, Abstract), we now have strong theoretical evidence that quantum computers, if built, might be used as powerful computational tool, capable of performing tasks which seem intractable for classical computers. -

Mathematics and Computation

Mathematics and Computation: Ideas Revolutionizing Technology and Science. Avi Wigderson. Princeton University Press, Princeton and Oxford. Copyright © 2019 by Avi Wigderson. Requests for permission to reproduce material from this work should be sent to [email protected]. Published by Princeton University Press, 41 William Street, Princeton, New Jersey 08540. In the United Kingdom: Princeton University Press, 6 Oxford Street, Woodstock, Oxfordshire OX20 1TR. press.princeton.edu. All Rights Reserved. Library of Congress Control Number: 2018965993. ISBN: 978-0-691-18913-0. British Library Cataloging-in-Publication Data is available. Editorial: Vickie Kearn, Lauren Bucca, and Susannah Shoemaker. Production Editorial: Nathan Carr. Jacket/Cover Credit: THIS INFORMATION NEEDS TO BE ADDED WHEN IT IS AVAILABLE. WE DO NOT HAVE THIS INFORMATION NOW. Production: Jacquie Poirier. Publicity: Alyssa Sanford and Kathryn Stevens. Copyeditor: Cyd Westmoreland. This book has been composed in LaTeX. The publisher would like to acknowledge the author of this volume for providing the camera-ready copy from which this book was printed. Printed on acid-free paper. Printed in the United States of America. Dedicated to the memory of my father, Pinchas Wigderson (1921–1988), who loved people, loved puzzles, and inspired me. Ashgabat, Turkmenistan, 1943. Contents: Acknowledgments; 1 Introduction; 1.1 On the interactions of math and computation; 1.2 Computational complexity theory; 1.3 The nature, purpose, and style of this book; 1.4 Who is this book for?; 1.5 Organization of the book; 1.6 Notation and conventions -

Introduction to the Theory of Computation Computability, Complexity, and the Lambda Calculus Some Notes for CIS262

Introduction to the Theory of Computation: Computability, Complexity, and the Lambda Calculus. Some Notes for CIS262. Jean Gallier and Jocelyn Quaintance, Department of Computer and Information Science, University of Pennsylvania, Philadelphia, PA 19104, USA, e-mail: [email protected]. © Jean Gallier. Please, do not reproduce without permission of the author. April 28, 2020.
Contents
1 RAM Programs, Turing Machines: 1.1 Partial Functions and RAM Programs; 1.2 Definition of a Turing Machine; 1.3 Computations of Turing Machines; 1.4 Equivalence of RAM Programs and Turing Machines; 1.5 Listable Languages and Computable Languages; 1.6 A Simple Function Not Known to be Computable; 1.7 The Primitive Recursive Functions; 1.8 Primitive Recursive Predicates; 1.9 The Partial Computable Functions
2 Universal RAM Programs and the Halting Problem: 2.1 Pairing Functions; 2.2 Equivalence of Alphabets; 2.3 Coding of RAM Programs; The Halting Problem; 2.4 Universal RAM Programs; 2.5 Indexing of RAM Programs; 2.6 Kleene's T-Predicate; 2.7 A Non-Computable Function; Busy Beavers
3 Elementary Recursive Function Theory: 3.1 Acceptable Indexings; 3.2 Undecidable Problems; 3.3 Reducibility and Rice's Theorem; 3.4 Listable (Recursively Enumerable) Sets; 3.5 Reducibility and Complete Sets
4 The Lambda-Calculus: 4.1 Syntax of the Lambda-Calculus; 4.2 β-Reduction and β-Conversion; the Church–Rosser Theorem; 4.3 Some Useful Combinators -

What Is Computation? The Enduring Legacy of the Turing Machine by Lance Fortnow, Northwestern University

Ubiquity, an ACM publication, December 2010. Ubiquity Symposium: What is Computation? The Enduring Legacy of the Turing Machine, by Lance Fortnow, Northwestern University. Editor's Introduction: In this eighth article in the ACM Ubiquity symposium discussing "What is Computation?", Lance Fortnow invokes the significance of the Turing machine in addressing the question. Editor. http://ubiquity.acm.org ©2010 Association for Computing Machinery. Supported in part by NSF grants CCF-0829754 and DMS-0652521. This is one of a series of Ubiquity articles addressing the question "What is Computation?" Alan Turing, in his seminal 1936 paper On computable numbers, with an application to the Entscheidungsproblem [4], directly answers this question by describing the now classic Turing machine model. The Church-Turing thesis is simply stated. Everything computable is computable by a Turing machine. The Church-Turing thesis has stood the test of time, capturing computation models Turing could not have conceived of, including digital computation, probabilistic, parallel and quantum computers and the Internet. The thesis has become accepted doctrine in computer science and the ACM has named its highest honor after Turing. Many now view computation as a fundamental part of nature, like atoms or the integers. So why are we having a series now asking a question that was settled in the 1930s? A few computer scientists nevertheless try to argue that the thesis fails to capture some aspects of computation. Some of these have been published in prestigious venues such as Science [2], the Communications of the ACM [5] and now as a whole series of papers in ACM Ubiquity. -

A Game Plan for Quantum Computing

February 2020 A game plan for quantum computing Thanks to technology advances, some companies may reap real gains from quantum computing within five years. What should you do to prepare for this next big wave in computers? by Alexandre Ménard, Ivan Ostojic, Mark Patel, and Daniel Volz Pharmaceutical companies have an abiding interest in enzymes. These proteins catalyze all kinds of biochemical interactions, often by targeting a single type of molecule with great precision. Harnessing the power of enzymes may help alleviate the major diseases of our time. Unfortunately, we don’t know the exact molecular structure of most enzymes. In principle, chemists could use computers to model these molecules in order to identify how the molecules work, but enzymes are such complex structures that most are impossible for classical computers to model. A sufficiently powerful quantum computer, however, could accurately predict in a matter of hours the properties, structure, and reactivity of such substances—an advance that could revolutionize drug development and usher in a new era in healthcare. Quantum computers have the potential to resolve problems of this complexity and magnitude across many different industries and applications, including finance, transportation, chemicals, and cybersecurity. Solving the impossible in a few hours of computing time, finding answers to problems that have bedeviled science and society for years, unlocking unprecedented capabilities for businesses of all kinds—those are the promises of quantum computing, a fundamentally different approach to computation. None of this will happen overnight. In fact, many companies and businesses won’t be able to reap significant value from quantum computing for a decade or more, although a few will see gains in the next five years. -

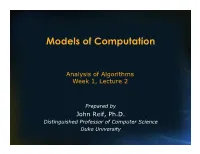

Models of Computation (RAM)

Models of Computation. Analysis of Algorithms, Week 1, Lecture 2. Prepared by John Reif, Ph.D., Distinguished Professor of Computer Science, Duke University.
Models of Computation (RAM): a) Random Access Machines; b) Straight Line Programs and Circuits; c) Vector Machines; d) Turing Machines; e) Pointer Machines; f) Decision Trees; g) Machines That Make Random Choices.
Readings • Main Reading Selection: CLR, Chapters 1 and 2.
Random Access Machine (RAM). RAM Assumptions: 1) Each register holds an integer. 2) Program can't modify itself. 3) Memory instructions involve simple arithmetic: a) addition, subtraction; b) multiplication, division; and control states (goto, if-then, etc.).
Simplified Program Style.
Complexity Measures of Algorithms. Input X (size n = |X|) ⇒ Algorithm A ⇒ output.
• $\mathrm{Time}_A(X)$ = time cost of Algorithm A on input X
• $\mathrm{Space}_A(X)$ = space cost of Algorithm A on input X
• Note: "time" and "space" depend on the machine.
Complexity Measures of Algorithms (cont'd)
• Worst case time complexity: $T_A(n) = \max_{\{X : |X| = n\}} \mathrm{Time}_A(X)$
• Average case time complexity for random inputs: $E(T_A(n)) = \sum_{\{X : |X| = n\}} \mathrm{Time}_A(X)\,\mathrm{Prob}(X)$
• Worst case space complexity: $S_A(n) = \max_{\{X : |X| = n\}} \mathrm{Space}_A(X)$
• Average case space complexity for random inputs: $E(S_A(n)) = \sum_{\{X : |X| = n\}} \mathrm{Space}_A(X)\,\mathrm{Prob}(X)$
Cost Criteria
• Uniform Cost Criteria: time = number of RAM instructions; space = number of RAM memory registers.
• Logarithmic Cost Criteria: time = L(i) units per RAM instruction on an integer of size i; space = L(i) units per RAM register on an integer of size i.
Cost Criteria (cont'd), where $L(i) = \lfloor \log |i| \rfloor$ if $i \neq 0$ and $L(i) = 1$ if $i = 0$.
• Example: $Z \leftarrow 2$; for $k = 1$ to $n$ do $Z \leftarrow Z \cdot Z$; output $Z = 2^{2^n}$. Uniform time cost = $n$. Logarithmic time cost > $2^n$.
Varieties of Computing Machine Models: RAMs; Straight line programs; Circuits; Bit vectors; Lisp machines; …; Turing Machines.
Straight Line Programs
• Idea: fix n = input size; unroll each iteration loop until the result is a loop-free program $P_n$.
• For each n > 0, get a distinct program $P_n$. Note: this is only possible if we can eliminate all branching and all indirect addressing.
Example • Given polynomial $p(x) = a_n x^n + a_{n-1} x^{n-1} + \dots$ -
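The repeated-squaring example in the cost-criteria slides above can be checked numerically. The following is a minimal Python sketch, not taken from the lecture: the function names `log_cost` and `repeated_squaring_costs` are hypothetical, the logarithm is assumed to be base 2, and the charging rule (each multiplication pays L for both operands and for the stored result) is one plausible reading of the logarithmic cost criterion.

```python
def log_cost(i: int) -> int:
    """L(i) as defined on the slide: floor(log2 |i|) for i != 0, and 1 for i = 0."""
    if i == 0:
        return 1
    # For a nonzero integer, bit_length() - 1 equals floor(log2 |i|).
    return abs(i).bit_length() - 1


def repeated_squaring_costs(n: int) -> tuple[int, int, int]:
    """Run Z <- 2; repeat n times: Z <- Z * Z, tracking both cost measures.

    Returns (Z, uniform_cost, logarithmic_cost).
    Assumption: one multiplication costs 1 unit under the uniform criterion,
    and L(operand) + L(operand) + L(result) under the logarithmic criterion.
    """
    z = 2
    uniform = 0
    logarithmic = 0
    for _ in range(n):
        operand_cost = 2 * log_cost(z)  # both operands are the current Z
        z = z * z
        logarithmic += operand_cost + log_cost(z)  # plus the stored result
        uniform += 1
    return z, uniform, logarithmic


if __name__ == "__main__":
    for n in range(1, 6):
        z, uniform, logarithmic = repeated_squaring_costs(n)
        print(n, uniform, logarithmic, logarithmic > 2 ** n)
```

Under these assumptions the uniform cost grows linearly in n while the logarithmic cost grows like 2^n, because the operand held in Z doubles in bit length on every iteration.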

Foundations of Computation, Version 2.3.2 (Summer 2011)

Foundations of Computation, Second Edition (Version 2.3.2, Summer 2011). Carol Critchlow and David Eck, Department of Mathematics and Computer Science, Hobart and William Smith Colleges, Geneva, New York 14456. © 2011, Carol Critchlow and David Eck. Foundations of Computation is a textbook for a one semester introductory course in theoretical computer science. It includes topics from discrete mathematics, automata theory, formal language theory, and the theory of computation, along with practical applications to computer science. It has no prerequisites other than a general familiarity with computer programming. Version 2.3, Summer 2010, was a minor update from Version 2.2, which was published in Fall 2006. Version 2.3.1 is an even smaller update of Version 2.3. Version 2.3.2 is identical to Version 2.3.1 except for a change in the license under which the book is released. This book can be redistributed in unmodified form, or in modified form with proper attribution and under the same license as the original, for non-commercial uses only, as specified by the Creative Commons Attribution-Noncommercial-ShareAlike 4.0 License. (See http://creativecommons.org/licenses/by-nc-sa/4.0/) This textbook is available for on-line use and for free download in PDF form at http://math.hws.edu/FoundationsOfComputation/ and it can be purchased in print form for the cost of reproduction plus shipping at http://www.lulu.com Contents: 1 Logic and Proof; 1.1 Propositional Logic; 1.2 Boolean Algebra -

Introduction to Theory of Computation

Introduction to Theory of Computation. Anil Maheshwari and Michiel Smid, School of Computer Science, Carleton University, Ottawa, Canada, {anil,michiel}@scs.carleton.ca. April 17, 2019.
Contents
Preface
1 Introduction: 1.1 Purpose and motivation (1.1.1 Complexity theory; 1.1.2 Computability theory; 1.1.3 Automata theory; 1.1.4 This course); 1.2 Mathematical preliminaries; 1.3 Proof techniques (1.3.1 Direct proofs; 1.3.2 Constructive proofs; 1.3.3 Nonconstructive proofs; 1.3.4 Proofs by contradiction; 1.3.5 The pigeon hole principle; 1.3.6 Proofs by induction; 1.3.7 More examples of proofs); Exercises
2 Finite Automata and Regular Languages: 2.1 An example: Controlling a toll gate; 2.2 Deterministic finite automata (2.2.1 A first example of a finite automaton; 2.2.2 A second example of a finite automaton; 2.2.3 A third example of a finite automaton); 2.3 Regular operations; 2.4 Nondeterministic finite automata (2.4.1 A first example; 2.4.2 A second example; 2.4.3 A third example; 2.4.4 Definition of nondeterministic finite automaton); 2.5 Equivalence of DFAs and NFAs (2.5.1 An example); 2.6 Closure under the regular operations; 2.7 Regular expressions; 2.8 Equivalence of regular expressions and regular languages (2.8.1 Every regular expression describes a regular language; 2.8.2 Converting a DFA to a regular expression); 2.9 The pumping lemma and nonregular languages (2.9.1 Applications of the pumping lemma); 2.10 Higman's Theorem (2.10.1 Dickson's Theorem) -

What Is Computation? Peter J

A Ubiquity Symposium Opening Statement FINAL v5– 8/26/10 What is Computation? Peter J. Denning Peter J. Denning ([email protected]) is Director of the Cebrowski Institute for innovation and information superiority at the Naval Postgraduate School in Monterey, California, and is a past president of ACM. He is currently the editor in chief of Ubiquity. Abstract: Most people understand a computation as a process evoked when a computational model (or computational agent) acts on its inputs under the control of an algorithm to produce its results. The classical Turing machine model has long served as the fundamental reference model because an appropriate Turing machine can simulate every other computational model known. The Turing model is a good abstraction for most digital computers because the number of steps to execute a Turing machine algorithm is predictive of the running time of the computation on a digital computer. However, the Turing model is not as well matched for the natural, interactive, and continuous information processes frequently encountered today; other models more closely match the information processes involved and give better predictions of running time and space. The question before us -- what is computation? -- is at least as old as computer science. It is one of those questions that will never be fully settled because new discoveries and maturing understandings constantly lead to new insights. It is like the fundamental questions in other fields -- for example, “what is life?” in biology and “what are the fundamental forces?” in physics -- that will never be fully resolved. Engaging with the question is more valuable than finding a definitive answer. -

Computation Vs. Information Processing: How They Are Different and Why It Matters

Studies in History and Philosophy of Science 41 (2010) 237–246. Contents lists available at ScienceDirect. Journal homepage: www.elsevier.com/locate/shpsa. Computation vs. information processing: why their difference matters to cognitive science. Gualtiero Piccinini (a), Andrea Scarantino (b). (a) Department of Philosophy, University of Missouri St. Louis, 599 Lucas Hall (MC 73), One University Boulevard, St. Louis, MO 63121-4499, USA. (b) Department of Philosophy, Neuroscience Institute, Georgia State University, PO Box 4089, Atlanta, GA 30302-4089, USA. Keywords: Computation; Information processing; Computationalism; Computational theory of mind; Cognitivism. Abstract: Since the cognitive revolution, it has become commonplace that cognition involves both computation and information processing. Is this one claim or two? Is computation the same as information processing? The two terms are often used interchangeably, but this usage masks important differences. In this paper, we distinguish information processing from computation and examine some of their mutual relations, shedding light on the role each can play in a theory of cognition. We recommend that theorists of cognition be explicit and careful in choosing notions of computation and information and connecting them together. © 2010 Elsevier Ltd. All rights reserved. When citing this paper, please use the full journal title Studies in History and Philosophy of Science. 1. Introduction cessing, and the manipulation of representations does more -

Neural Computation and the Computational Theory of Cognition

Cognitive Science 34 (2013) 453–488. Copyright © 2012 Cognitive Science Society, Inc. All rights reserved. ISSN: 0364-0213 print / 1551-6709 online. DOI: 10.1111/cogs.12012. Neural Computation and the Computational Theory of Cognition. Gualtiero Piccinini (a), Sonya Bahar (b). (a) Center for Neurodynamics, Department of Philosophy, University of Missouri – St. Louis. (b) Center for Neurodynamics, Department of Physics and Astronomy, University of Missouri – St. Louis. Received 29 August 2011; received in revised form 20 March 2012; accepted 24 May 2012. Abstract: We begin by distinguishing computationalism from a number of other theses that are sometimes conflated with it. We also distinguish between several important kinds of computation: computation in a generic sense, digital computation, and analog computation. Then, we defend a weak version of computationalism: neural processes are computations in the generic sense. After that, we reject on empirical grounds the common assimilation of neural computation to either analog or digital computation, concluding that neural computation is sui generis. Analog computation requires continuous signals; digital computation requires strings of digits. But current neuroscientific evidence indicates that typical neural signals, such as spike trains, are graded like continuous signals but are constituted by discrete functional elements (spikes); thus, typical neural signals are neither continuous signals nor strings of digits. It follows that neural computation is sui generis. Finally, we highlight three important consequences of a proper understanding of neural computation for the theory of cognition. First, understanding neural computation requires a specially designed mathematical theory (or theories) rather than the mathematical theories of analog or digital computation. Second, several popular views about neural computation turn out to be incorrect.