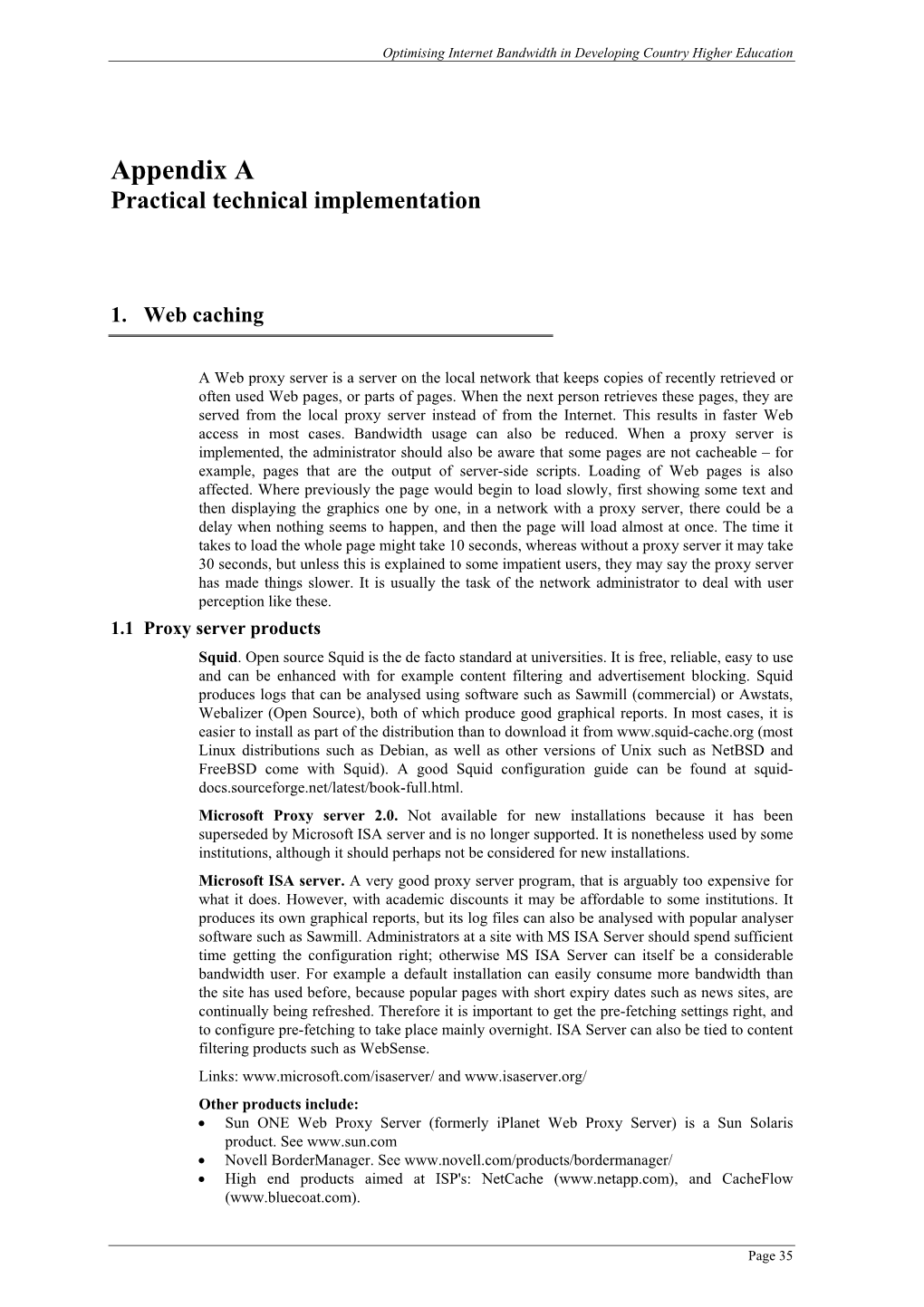

Appendix A: Practical Technical Implementation

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


ISPConfig 3 Manual

ISPConfig 3 Manual, Version 1.0 for ISPConfig 3.0.3. Author: Falko Timme <[email protected]>. Last edited 09/30/2010.

The ISPConfig 3 manual is protected by copyright. No part of the manual may be reproduced, adapted, translated, or made available to a third party in any form by any process (electronic or otherwise) without the written specific consent of projektfarm GmbH. You may keep backup copies of the manual in digital or printed form for your personal use. All rights reserved. This copy was issued to: Thomas CARTER - [email protected] - Date: 2010-11-20

ISPConfig 3 is an open source hosting control panel for Linux and is capable of managing multiple servers from one control panel. ISPConfig 3 is licensed under the BSD license.

Managed Services and Features

- Manage one or more servers from one control panel (multiserver management)
- Different permission levels (administrators, resellers and clients) + email user level provided by a roundcube plugin for ISPConfig
- Httpd (virtual hosts, domain- and IP-based)
- FTP, SFTP, SCP
- WebDAV
- DNS (A, AAAA, ALIAS, CNAME, HINFO, MX, NS, PTR, RP, SRV, TXT records)
- POP3, IMAP
- Email autoresponder
- Server-based mail filtering
- Advanced email spamfilter and antivirus filter
- MySQL client-databases
- Webalizer and/or AWStats statistics
- Harddisk quota
- Mail quota
- Traffic limits and statistics
- IP addresses

Updating Systems and Adding Software in Oracle® Solaris 11.4

Updating Systems and Adding Software in Oracle® Solaris 11.4. Part No: E60979. November 2020. Copyright © 2007, 2020, Oracle and/or its affiliates.

License Restrictions Warranty/Consequential Damages Disclaimer: This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited.

Warranty Disclaimer: The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing.

Restricted Rights Notice: If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs) and Oracle computer documentation or other Oracle data delivered to or accessed by U.S. Government end users are "commercial

Automated IT Service Fault Diagnosis Based on Event Correlation Techniques

Automated IT Service Fault Diagnosis Based on Event Correlation Techniques. Dissertation submitted to the Faculty of Mathematics, Computer Science and Statistics of Ludwig-Maximilians-Universität München by Andreas Hanemann. Date of submission: 22 May 2007. Date of oral examination: 19 July 2007. First reviewer: Professor Dr. Heinz-Gerd Hegering, Ludwig-Maximilians-Universität München. Second reviewer: Professor Dr. Gabrijela Dreo Rodosek, Universität der Bundeswehr München.

Acknowledgments

This thesis has been written as part of my work as a researcher at the Leibniz Supercomputing Center (Leibniz-Rechenzentrum, LRZ) of the Bavarian Academy of Sciences and Humanities, which was funded by the German Research Network (DFN-Verein), as well as in cooperation with the research group of Prof. Dr. Heinz-Gerd Hegering. Apart from the LRZ, this research group, called MNM-Team (Munich Network Management Team), is located at the University of Munich (LMU), the Munich University of Technology (TUM) and the University of Federal Armed Forces in Munich.

First, I would like to thank my doctoral advisor Prof. Dr. Heinz-Gerd Hegering for his constant support and helpful advice during the whole preparation time of this thesis. I would also like to express my special gratitude to my second advisor, Prof.

Linux Administrators Security Guide LASG - 0.1.1

Linux Administrators Security Guide LASG - 0.1.1, by Kurt Seifried ([email protected]), copyright 1999, all rights reserved. Available at: https://www.seifried.org/lasg/. This document is free for most non-commercial uses; the license follows the table of contents, so please read it if you have any concerns. If you have any questions, email [email protected]. A mailing list is available: send an email to [email protected] with "subscribe lasg-announce" in the body (no quotes) and you will be automatically added.

Table of contents

License Preface Foreword by the author Contributing What this guide is and isn't How to determine what to secure and how to secure it Safe installation of Linux Choosing your install media It ain't over 'til... General concepts, server versus workstations, etc Physical / Boot security Physical access The computer BIOS LILO The Linux kernel Upgrading and compiling the kernel Kernel versions Administrative tools Access Telnet SSH LSH REXEC NSH Slush SSL Telnet Fsh secsh Local YaST sudo Super Remote Webmin Linuxconf COAS System Files /etc/passwd /etc/shadow /etc/groups /etc/gshadow /etc/login.defs /etc/shells /etc/securetty Log files and other forms of monitoring General log security sysklogd / klogd secure-syslog next generation syslog Log monitoring logcheck colorlogs WOTS swatch Kernel logging auditd Shell logging bash Shadow passwords Cracking passwords John the ripper Crack Saltine cracker VCU PAM Software Management RPM dpkg tarballs / tgz Checking file integrity RPM dpkg PGP MD5 Automatic

ISPmail Tutorial for Debian Lenny

ISPmail tutorial for Debian Lenny

This tutorial is for the former stable version "Debian Lenny". If you are using "Debian Squeeze" then please follow the new tutorial. A Spanish translation of this tutorial is also available courtesy of José Ramón Magán Iglesias.

What this tutorial is about

You surely know the internet service providers that allow you to rent a domain and use it to receive emails. If you have a computer running Debian which is connected to the internet permanently, you can do that yourself. You do not even need to have a fixed IP address, thanks to dynamic DNS services like dyndns.org. All you need is this document, a cup of tea and a little time. When you are done your server will be able to...

- receive and store emails for your users from other mail servers
- let your users retrieve the email through IMAP and POP3, even with SSL to encrypt the connection
- receive and forward ("relay") email for your users if they are authenticated
- offer a webmail interface to read emails in a web browser
- detect most spam emails and filter them out or tag them

License/Copyright

This tutorial book is copyrighted 2009 Christoph Haas (email@christophhaas.de). It can be used freely under the terms of the GNU General Public License. Don't forget to refer to this URL when using it. Thank you.

Changelog

- 17.6.09: Lenny tutorial gets published.
- 19.6.09: The page on SPF checks is temporarily offline.

Linux Tips and Tricks

Linux Tips and Tricks, by Chris Karakas

This is a collection of various tips and tricks around Linux. Since they don't fit anywhere else, they are presented here in a more or less loose form. We start on how to get an attractive vi by using syntax highlighting, multiple search and the smartcase, incsearch, scrolloff, wildmode options in the vimrc file. We continue on various configuration subjects regarding Netscape, like positioning and sizing of windows and roaming profiles. Further on, I present a CSS file for HTML documents that were created automatically from DocBook SGML. Also a chapter on transparent proxying with Squid.

Copyright © 2002-2003 Chris Karakas. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation; with no Invariant Sections, with no Front-Cover Texts, and with no Back-Cover Texts. A copy of the license can be found at the Free Software Foundation.

Revision History

- Revision 0.04, 04.06.2003, revised by CK: Added chapter on transparent proxying. Added Index. Alt text and captions for images.
- Revision 0.03, 29.05.2003, revised by CK: Added chapters on Netscape and CSS for DocBook.
- Revision 0.02, 18.12.2002, revised by CK: first version.

Table of Contents

- 1. Introduction
- 1.1. Disclaimer

Final Project

Universidade Católica de Brasília, Office of the Dean of Undergraduate Studies. Final course project, Bachelor's degree in Computer Science and Information Systems. Creating a Corporate E-Mail Server with Postfix (original title: Criação de um Servidor de Correio Eletrônico Corporativo com Postfix). Authors: Davi Eduardo R. Domingues, Luiz Carlos G. P. C. Branco, Rafael Bispo Silva. Advisor: MSc. Eduardo Lobo. Brasília, 2007.

Monograph presented to the Undergraduate Program of the Universidade Católica de Brasília as a requirement for the degree of Bachelor of Computer Science. Advisor: MSc. Eduardo Lobo.

Statement of Approval

Dissertation defended and approved on 5 December 2007, as a partial requirement for the degree of Bachelor of Computer Science, by an examining committee consisting of:

- Professor Eduardo Lobo (project advisor)
- Professor Mário de Oliveira Braga Filho (internal member)
- Professor Giovanni (internal member)

I dedicate this work first to God, who gave me life and the patience to reach this level of study. In particular to my mother, who believed in me, for the good values she taught me, for her support throughout my academic life, and for her understanding during the times I was away from her side. Davi Eduardo R. Domingues

I dedicate this to my family, who always believed in me, and also to my great friends and to the great friends who have passed on, those whom we miss and who leave us wanting to continue their work.

Protocol Support

Reference Guide, WinRoute Pro 4.1 SE. For version 4.1 Build 22 and later. Tiny Software Inc.

Contents

- Read me first
- Description of WinRoute
- WinRoute summary
- Comprehensive protocol support
- NAT router
- Introduction to NAT
- How NAT works
- WinRoute's structure
- Setting up NAT on both interfaces
- Port mapping: packet forwarding
- Port mapping for multi-homed systems (multiple IP addresses)
- Multi-NAT
- Interface table
- VPN support
- Firewall with packet filter
- Overview

An E-Mail Quarantine with Open Source Software

An e-mail quarantine with open source software: using amavis, qpsmtpd and MariaDB for e-mail filtering. Daniel Knittel, Dirk Jahnke-Zumbusch. HEPiX fall 2016, NERSC, Lawrence Berkeley National Laboratory, United States of America, October 2016.

e-mail services at DESY

- DESY is hosting 70+ e-mail domains, most prominent:
  - desy.de: of course :)
  - xfel.eu: European XFEL
  - belle2.org: since summer 2016
  - cfel.de: Center for Free-Electron Laser Science
  - cssb-hamburg.de: Center for Structural Systems Biology
- mixed environment of open source software and commercial products
  - Zimbra network edition with web access and standard clients (Outlook, IMAP, SMTP)
  - Postfix for MTAs
  - SYMPA for mailing list services
  - Sophos and Clearswift's MIMEsweeper for SMTP
- currently ~6,500 fully-fledged mailboxes, several thousand more with reduced functionality (e.g. no Outlook/ActiveSync/EWS access)
- daily ~300,000 delivered e-mails

DESY e-mail infrastructure

- 1: DMZ filtering
  - restrictive filtering, reject e-mails from very suspicious MTAs
  - 1b (soon): DESY's NREN (DFN) will be integrated into e-mail flow with virus- and SPAM-scanning
- 2: filter for bad content ➔ suspicious e-mails into quarantine
- 3: 2nd-level SPAM-scan based on mail text and own rules
- 4: distribution of e-mails to mailbox servers, mailing list servers or DESY-external destinations
- 5: throttling of e-mail flow to acceptable rates (individual vs. newsletter)
  - think "phishing" ➔ high rates trigger an alarm
- mixed HW/VM environment

e-mail at DESY: attachment filtering & quarantine

- policy: e-mail traffic is filtered
  - block "bad" e-mails in the first place ➔ viruses are blocked ➔ executable content is blocked
  - also block e-mails originating from DESY if they contain malicious or suspicious content
  - up to now: commercial solution
- additional measures
  - mark e-mails with a high SPAM-score (2nd-level SPAM-filtering)
  - monitor outgoing e-mail-flow
  - throttle if over a specific rate ➔ this is sender-specific and customizable (e.g.

The Squid Caching Proxy

The Squid caching proxy. Chris Wichura [email protected]

What is Squid?

- A caching proxy for
  - HTTP, HTTPS (tunnel only)
  - FTP
  - Gopher
  - WAIS (requires additional software)
  - WHOIS (Squid version 2 only)
- Supports transparent proxying
- Supports proxy hierarchies (ICP protocol)
- Squid is not an origin server!

Other proxies

- Freeware
  - Apache 1.2+ proxy support (abysmally bad!)
- Commercial
  - Netscape Proxy
  - Microsoft Proxy Server
  - NetAppliance's NetCache (shares some code history with Squid in the distant past)
  - CacheFlow (http://www.cacheflow.com/)
  - Cisco Cache Engine

What is a proxy?

- Firewall device; internal users communicate with the proxy, which in turn talks to the big bad Internet
  - Gate private address space (RFC 1918) into publicly routable address space
- Allows one to implement policy
  - Restrict who can access the Internet
  - Restrict what sites users can access
  - Provides detailed logs of user activity

What is a caching proxy?

- Stores a local copy of objects fetched
  - Subsequent accesses by other users in the organization are served from the local cache, rather than the origin server
  - Reduces network bandwidth
  - Users experience faster web access

How proxies work (configuration)

- User configures web browser to use proxy instead of connecting directly to origin servers
  - Manual configuration for older PC based browsers, and many UNIX browsers (e.g., Lynx)
  - Proxy auto-configuration file for Netscape 2.x+ or Internet Explorer 4.x+
    - Far more flexible caching policy
    - Simplifies user configuration, help desk support,

Installation Guide Supplement for Use with Squid Web Proxy Cache (WWS

Installation Guide Supplement for use with Squid Web Proxy Cache. Websense® Web Security, Websense Web Filter v7.5.

©1996 - 2010, Websense, Inc. 10240 Sorrento Valley Rd., San Diego, CA 92121, USA. All rights reserved. Published 2010. Printed in the United States of America and Ireland.

The products and/or methods of use described in this document are covered by U.S. Patent Numbers 5,983,270; 6,606,659; 6,947,985; 7,185,015; 7,194,464 and RE40,187 and other patents pending. This document may not, in whole or in part, be copied, photocopied, reproduced, translated, or reduced to any electronic medium or machine-readable form without prior consent in writing from Websense, Inc.

Every effort has been made to ensure the accuracy of this manual. However, Websense, Inc., makes no warranties with respect to this documentation and disclaims any implied warranties of merchantability and fitness for a particular purpose. Websense, Inc., shall not be liable for any error or for incidental or consequential damages in connection with the furnishing, performance, or use of this manual or the examples herein. The information in this documentation is subject to change without notice.

Trademarks: Websense is a registered trademark of Websense, Inc., in the United States and certain international markets. Websense has numerous other unregistered trademarks in the United States and internationally. All other trademarks are the property of their respective owners. Microsoft, Windows, Windows NT, Windows Server, and Active Directory are either registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries. Red Hat is a registered trademark of Red Hat, Inc., in the United States and other countries.

Running SQUID In

Running SQUID in FreeBSD. Sufi Faruq Ibne Abubakar, AKTEL, TMIB

What is Squid?

- A full-featured Web proxy cache
- Designed to run on Unix systems
- Free, open-source software
- The result of many contributions by unpaid (and paid) volunteers

Why will I use Squid?

- Save 30% Internet bandwidth
- Access control
- Low cost proxy
- The proxy keeps a database of each request that comes from a client
- The proxy itself fetches the resource from the Internet to satisfy a first-time request
- The proxy caches a resource immediately after obtaining it from the Internet
- The proxy serves the resource from cache on the second request for the same resource

Considerations for deployment

- User calculation
- System memory (min 256 MB RAM)
- Speedy storage (SCSI preferred)
- Faster CPU
- Functionality expectations

Supports

- Proxying and caching of HTTP, FTP, and other URLs
- Proxying for SSL
- Cache hierarchies
- ICP, HTCP, CARP, Cache Digests
- Transparent caching
- WCCP (Squid v2.3 and above)
- Extensive access controls
- HTTP server acceleration
- SNMP
- Caching of DNS lookups

Obtaining

- Obtain package source from:
  - http://www.squid-cache.org
  - Squid Mirror Sites (http://www.squid-cache.org/Mirrors/http-mirrors.html)
  - Binary download for FreeBSD also available (http://www.squid-cache.org/binaries.html)
- "STABLE" releases, suitable for production use
- "PRE" releases, suitable for testing

Installing

- tar zxvf squid-2.4.STABLE6-src.tar.gz
- cd squid-2.4.STABLE6
- ./configure --enable-removal-policies --enable-delay-pools --enable-ipf-transparent --enable-snmp --enable-storeio=diskd,ufs