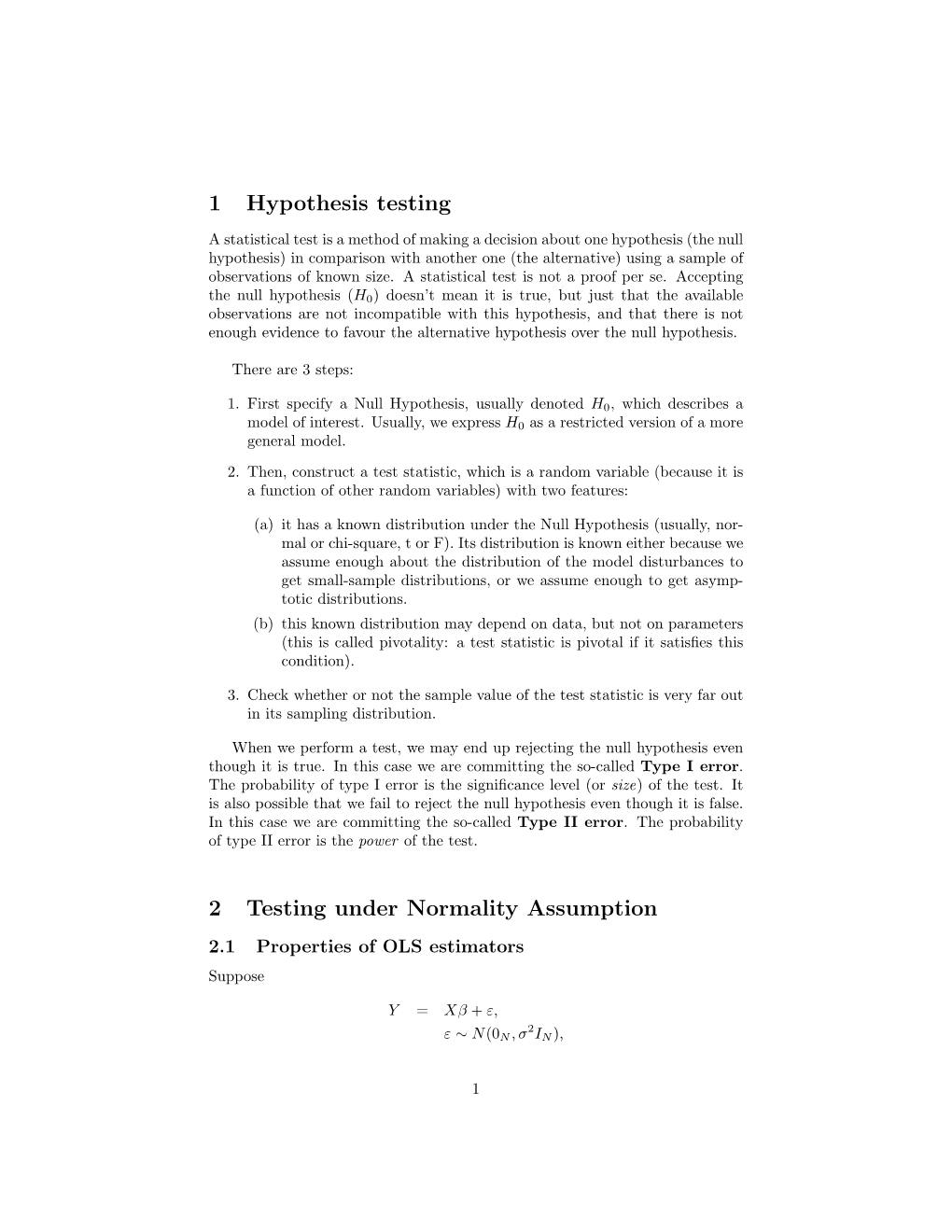

1 Hypothesis Testing 2 Testing Under Normality Assumption



Recommended publications


Three Statistical Testing Procedures in Logistic Regression: Their Performance in Differential Item Functioning (DIF) Investigation

Research Report ETS RR-09-35, December 2009. Three Statistical Testing Procedures in Logistic Regression: Their Performance in Differential Item Functioning (DIF) Investigation. Insu Paek, ETS, Princeton, New Jersey. As part of its nonprofit mission, ETS conducts and disseminates the results of research to advance quality and equity in education and assessment for the benefit of ETS’s constituents and the field. To obtain a PDF or a print copy of a report, please visit: http://www.ets.org/research/contact.html Copyright © 2009 by Educational Testing Service. All rights reserved. ETS, the ETS logo, GRE, and LISTENING. LEARNING. LEADING. are registered trademarks of Educational Testing Service (ETS). SAT is a registered trademark of the College Board.

Abstract: Three statistical testing procedures well-known in the maximum likelihood approach are the Wald, likelihood ratio (LR), and score tests. Although well-known, the application of these three testing procedures in the logistic regression method to investigate differential item functioning (DIF) has not been rigorously made yet. Employing a variety of simulation conditions, this research (a) assessed the three tests’ performance for DIF detection and (b) compared DIF detection in different DIF testing modes (targeted vs. general DIF testing). Simulation results showed small differences between the three tests and different testing modes. However, targeted DIF testing consistently performed better than general DIF testing; the three tests differed more in performance in general DIF testing and nonuniform DIF conditions than in targeted DIF testing and uniform DIF conditions; and the LR and score tests consistently performed better than the Wald test.

Chapter 8 Large Sample Theory

Chapter 8 Large Sample Theory. 8.1 The CLT, Delta Method and an Exponential Family Limit Theorem. Large sample theory, also called asymptotic theory, is used to approximate the distribution of an estimator when the sample size $n$ is large. This theory is extremely useful if the exact sampling distribution of the estimator is complicated or unknown. To use this theory, one must determine what the estimator is estimating, the rate of convergence, the asymptotic distribution, and how large $n$ must be for the approximation to be useful. Moreover, the (asymptotic) standard error (SE), an estimator of the asymptotic standard deviation, must be computable if the estimator is to be useful for inference.

Theorem 8.1: the Central Limit Theorem (CLT). Let $Y_1, \dots, Y_n$ be iid with $E(Y) = \mu$ and $\mathrm{VAR}(Y) = \sigma^2$. Let the sample mean $\bar{Y}_n = \frac{1}{n}\sum_{i=1}^{n} Y_i$. Then

$$\sqrt{n}\,(\bar{Y}_n - \mu) \xrightarrow{D} N(0, \sigma^2).$$

Hence

$$\sqrt{n}\left(\frac{\bar{Y}_n - \mu}{\sigma}\right) = \sqrt{n}\left(\frac{\sum_{i=1}^{n} Y_i - n\mu}{n\sigma}\right) \xrightarrow{D} N(0, 1).$$

Note that the sample mean is estimating the population mean $\mu$ with a $\sqrt{n}$ convergence rate, the asymptotic distribution is normal, and the SE $= S/\sqrt{n}$ where $S$ is the sample standard deviation. For many distributions the central limit theorem provides a good approximation if the sample size $n > 30$. A special case of the CLT is proven at the end of Section 4.

Notation. The notation $X \sim Y$ and $X \overset{D}{=} Y$ both mean that the random variables $X$ and $Y$ have the same distribution.
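The central limit theorem stated in this excerpt can be checked by simulation. The following is a minimal sketch, not part of the cited chapter: it assumes Exponential(1) data (so that $\mu = \sigma = 1$), and the function name `standardized_means`, the sample size, and the number of replications are illustrative choices.

```python
import math
import random
import statistics


def standardized_means(n, reps, seed=0):
    """Return `reps` draws of sqrt(n) * (Ybar_n - mu) / sigma for Exponential(1) data."""
    rng = random.Random(seed)
    mu, sigma = 1.0, 1.0  # mean and standard deviation of Exponential(rate=1)
    draws = []
    for _ in range(reps):
        ybar = sum(rng.expovariate(1.0) for _ in range(n)) / n
        draws.append(math.sqrt(n) * (ybar - mu) / sigma)
    return draws


z = standardized_means(n=50, reps=2000)
z_mean = statistics.fmean(z)
z_sd = statistics.stdev(z)
# If the normal approximation is reasonable, these are close to 0 and 1.
print(f"mean = {z_mean:.3f}, sd = {z_sd:.3f}")
```

Matching the first two moments is only a rough check; a histogram or a quantile comparison would say more about how close the standardized means are to $N(0, 1)$ for a skewed distribution such as the exponential.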

Wald (And Score) Tests

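Wald (and Score) Tests.

Vector of MLEs is Asymptotically Normal. That is, Multivariate Normal. This yields:
- Confidence intervals
- Z-tests of $H_0: \theta_j = \theta_0$
- Wald tests
- Score tests
- Indirectly, the Likelihood Ratio tests

Under Regularity Conditions (Thank you, Mr. Wald):
- $\hat{\theta}_n \xrightarrow{a.s.} \theta$
- $\sqrt{n}\,(\hat{\theta}_n - \theta) \xrightarrow{d} T \sim N_k\!\left(0, I(\theta)^{-1}\right)$
- So we say that $\hat{\theta}_n$ is asymptotically $N_k\!\left(\theta, \frac{1}{n} I(\theta)^{-1}\right)$.
- $I(\theta)$ is the Fisher Information in one observation.
- A $k \times k$ matrix $I(\theta) = \left[ E\!\left( -\frac{\partial^2}{\partial\theta_i \partial\theta_j} \log f(Y; \theta) \right) \right]$
- The Fisher Information in the whole sample is $n I(\theta)$.

$H_0: C\theta = h$. Suppose $\theta = (\theta_1, \dots, \theta_7)$, and the null hypothesis is
- $\theta_1 = \theta_2$
- $\theta_6 = \theta_7$
- $\frac{1}{3}(\theta_1 + \theta_2 + \theta_3) = \frac{1}{3}(\theta_4 + \theta_5 + \theta_6)$

We can write the null hypothesis in matrix form as

$$\begin{pmatrix} 1 & -1 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 1 & -1 \\ 1 & 1 & 1 & -1 & -1 & -1 & 0 \end{pmatrix} \begin{pmatrix} \theta_1 \\ \theta_2 \\ \theta_3 \\ \theta_4 \\ \theta_5 \\ \theta_6 \\ \theta_7 \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \\ 0 \end{pmatrix}$$

Suppose $H_0: C\theta = h$ is true, and $\widehat{I(\theta)}_n \xrightarrow{p} I(\theta)$. By Slutsky 6a (Continuous mapping),

$$\sqrt{n}\,(C\hat{\theta}_n - C\theta) = \sqrt{n}\,(C\hat{\theta}_n - h) \xrightarrow{d} CT \sim N_r\!\left(0, C I(\theta)^{-1} C'\right)$$

and $\widehat{I(\theta)}_n^{-1} \xrightarrow{p} I(\theta)^{-1}$. Then by Slutsky’s (6c) Stack Theorem,

$$\begin{pmatrix} \sqrt{n}\,(C\hat{\theta}_n - h) \\ \widehat{I(\theta)}_n^{-1} \end{pmatrix} \xrightarrow{d} \begin{pmatrix} CT \\ I(\theta)^{-1} \end{pmatrix}.$$

Finally, by Slutsky 6a again,

$$W_n = n\,(C\hat{\theta}_n - h)' \left(C\,\widehat{I(\theta)}_n^{-1} C'\right)^{-1} (C\hat{\theta}_n - h) \xrightarrow{d} W = (CT - 0)' \left(C I(\theta)^{-1} C'\right)^{-1} (CT - 0) \sim \chi^2(r)$$

The Wald Test Statistic: $W_n = n\,(C\hat{\theta}_n - h)' \left(C\,\widehat{I(\theta)}_n^{-1} C'\right)^{-1} (C\hat{\theta}_n - h)$
- Again, the null hypothesis is $H_0: C\theta = h$
- Matrix $C$ is $r \times k$, $r \le k$, rank $r$
- All we need is a consistent estimator of $I(\theta)$
- $I(\hat{\theta})$ would do
- But it’s inconvenient
- Need to compute partial derivatives and expected values in $I(\theta) = \left[ E\!\left( -\frac{\partial^2}{\partial\theta_i \partial\theta_j} \log f(Y; \theta) \right) \right]$

Observed Fisher Information: To find $\hat{\theta}_n$, minimize the minus log likelihood.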
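The Wald statistic in this excerpt reduces to a one-line formula when there is a single parameter. The sketch below is an illustration under stated assumptions, not the slides' own code: it takes iid Bernoulli data, sets $C = 1$ and $h = p_0$, and plugs in the Fisher information of one Bernoulli observation, $I(p) = 1/(p(1-p))$, evaluated at the MLE. The function name `wald_test_bernoulli` and the counts are hypothetical.

```python
import math


def wald_test_bernoulli(successes, n, p0):
    """Wald statistic and chi-square(1) p-value for H0: p = p0 with Bernoulli data.

    Assumes 0 < successes < n so that the estimated information is finite.
    """
    p_hat = successes / n                      # MLE of p
    info_hat = 1.0 / (p_hat * (1.0 - p_hat))   # Fisher information in one observation, at p_hat
    w = n * (p_hat - p0) ** 2 * info_hat       # W_n with C = 1 and h = p0
    p_value = math.erfc(math.sqrt(w / 2.0))    # P(chi2_1 > w) = erfc(sqrt(w / 2))
    return w, p_value


w, p_value = wald_test_bernoulli(successes=60, n=100, p0=0.5)
print(f"W = {w:.4f}, p-value = {p_value:.4f}")
```

With one restriction ($r = 1$) the reference distribution is $\chi^2(1)$, whose upper tail can be written with the complementary error function, so no statistics library is needed here. For $r > 1$ a general chi-square tail function would be required.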

Comparison of Wald, Score, and Likelihood Ratio Tests for Response Adaptive Designs

Journal of Statistical Theory and Applications, Volume 10, Number 4, 2011, pp. 553-569, ISSN 1538-7887. Comparison of Wald, Score, and Likelihood Ratio Tests for Response Adaptive Designs. Yanqing Yi (1, corresponding author) and Xikui Wang (2). 1: Division of Community Health and Humanities, Faculty of Medicine, Memorial University of Newfoundland, St. John's, Newfoundland, Canada A1B 3V6. 2: Department of Statistics, University of Manitoba, Winnipeg, Manitoba, Canada R3T 2N2.

Abstract: Data collected from response adaptive designs are dependent. Traditional statistical methods need to be justified for use in response adaptive designs. This paper generalizes Rao's score test to response adaptive designs and introduces a generalized score statistic. Simulation is conducted to compare the statistical powers of the Wald, the score, the generalized score and the likelihood ratio statistics. The overall statistical power of the Wald statistic is better than the score, the generalized score and the likelihood ratio statistics for small to medium sample sizes. The score statistic does not show good sample properties for adaptive designs and the generalized score statistic is better than the score statistic under the adaptive designs considered. When the sample size becomes large, the statistical power is similar for the Wald, the score, the generalized score and the likelihood ratio test statistics.

MSC: 62L05, 62F03. Keywords and Phrases: Response adaptive design, likelihood ratio test, maximum likelihood estimation, Rao's score test, statistical power, the Wald test. Corresponding author fax: 1-709-777-7382. E-mail addresses: [email protected] (Yanqing Yi), xikui [email protected] (Xikui Wang).

Statistical Asymptotics Part II: First-Order Theory

Statistical Asymptotics Part II: First-Order Theory. Andrew Wood, School of Mathematical Sciences, University of Nottingham. APTS, April 15-19 2013.

Structure of the Chapter. This chapter covers asymptotic normality and related results. Topics: MLEs, log-likelihood ratio statistics and their asymptotic distributions; M-estimators and their first-order asymptotic theory. Initially we focus on the case of the MLE of a scalar parameter $\theta$. Then we study the case of the MLE of a vector $\theta$, first without and then with nuisance parameters. Finally, we consider the more general setting of M-estimators.

Motivation. Statistical inference typically requires approximations because exact answers are usually not available. Asymptotic theory provides useful approximations to densities or distribution functions. These approximations are based on results from probability theory. The theory underlying these approximation techniques is valid as some quantity, typically the sample size $n$ [or more generally some measure of information], goes to infinity, but the approximations obtained are often accurate even for small sample sizes.

Test statistics. Consider testing the null hypothesis $H_0: \theta = \theta_0$, where $\theta_0$ is an arbitrary specified point in $\Omega_\theta$. If desired, we may …

Applying the Delta Method in Metric Analytics

Applying the Delta Method in Metric Analytics: A Practical Guide with Novel Ideas. Alex Deng (Microsoft Corporation, Redmond, WA, [email protected]), Ulf Knoblich (Microsoft Corporation, Redmond, WA, [email protected]), Jiannan Lu (Microsoft Corporation, Redmond, WA, [email protected]).

ABSTRACT. During the last decade, the information technology industry has adopted a data-driven culture, relying on online metrics to measure and monitor business performance. Under the setting of big data, the majority of such metrics approximately follow normal distributions, opening up potential opportunities to model them directly without extra model assumptions and solve big data problems via closed-form formulas using distributed algorithms at a fraction of the cost of simulation-based procedures like bootstrap. However, certain attributes of the metrics, such as their corresponding data generating processes and aggregation levels, pose numerous challenges for constructing trustworthy estimation and inference procedures.

… variance of a normal distribution from independent and identically distributed (i.i.d.) observations, we only need to obtain their sum and sum of squares, which are the corresponding summary statistics and can be trivially computed in a distributed fashion. In data-driven businesses such as information technology, these summary statistics are often referred to as metrics, and used for measuring and monitoring key performance indicators [15, 18]. In practice, it is often the changes or differences between metrics, rather than measurements at the most granular level, that are of greater interest. In the context of randomized controlled experimentation (or A/B testing), inferring the changes of metrics can establish causality [36, 44, 50], e.g., whether a new user interface design causes …
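The remark in this excerpt about the sum and the sum of squares can be made concrete. The sketch below is an illustration only, not code from the paper: each data shard reports a count, a sum, and a sum of squares, and the overall mean and sample variance are recovered from the merged totals. The names `summarize` and `combine` and the shard contents are hypothetical.

```python
def summarize(chunk):
    """Per-chunk summary statistics: count, sum, and sum of squares."""
    return len(chunk), sum(chunk), sum(x * x for x in chunk)


def combine(summaries):
    """Merge per-chunk summaries and return the overall mean and sample variance."""
    n = sum(s[0] for s in summaries)
    total = sum(s[1] for s in summaries)
    total_sq = sum(s[2] for s in summaries)
    mean = total / n
    variance = (total_sq - n * mean * mean) / (n - 1)  # unbiased sample variance
    return mean, variance


chunks = [[2.0, 4.0, 4.0], [4.0, 5.0], [5.0, 7.0, 9.0]]  # hypothetical data shards
mean, variance = combine([summarize(c) for c in chunks])
print(mean, variance)
```

Because the three summaries are plain sums, they can be computed on separate machines and added in any order. One caveat with this textbook formula is that subtracting two large, nearly equal quantities can lose precision in floating point when the mean is large relative to the spread.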

Chapter 6 Asymptotic Distribution Theory

RS – Chapter 6: Asymptotic Distribution Theory.

Asymptotic Distribution Theory
• Asymptotic distribution theory studies the hypothetical distribution (the limiting distribution) of a sequence of distributions.
• Do not confuse with asymptotic theory (or large sample theory), which studies the properties of asymptotic expansions.
• Definition: Asymptotic expansion. An asymptotic expansion (asymptotic series or Poincaré expansion) is a formal series of functions, which has the property that truncating the series after a finite number of terms provides an approximation to a given function as the argument of the function tends towards a particular, often infinite, point. (In asymptotic distribution theory, we do use asymptotic expansions.)

Asymptotic Distribution Theory
• In Chapter 5, we derive exact distributions of several sample statistics based on a random sample of observations.
• In many situations an exact statistical result is difficult to get. In these situations, we rely on approximate results that are based on what we know about the behavior of certain statistics in large samples.
• Example from basic statistics: What can we say about $1/\bar{x}$? We know a lot about $\bar{x}$. What do we know about its reciprocal? Maybe we can get an approximate distribution of $1/\bar{x}$ when $n$ is large.

Convergence
• Convergence of a non-random sequence. Suppose we have a sequence of constants, indexed by $n$: $f(n) = \dfrac{n(n+1)+3}{2n + 3n^2 + 5}$, $n = 1, 2, 3, \dots$ Ordinary limit: $\lim_{n\to\infty} \dfrac{n(n+1)+3}{2n + 3n^2 + 5} = \dfrac{1}{3}$. There is nothing stochastic about the limit above. The limit will always be 1/3.
• In econometrics, we are interested in the behavior of sequences of real-valued random scalars or vectors.
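The ordinary limit in the convergence example can be checked numerically. This is a small sketch for illustration; it evaluates the non-random sequence $f(n) = (n(n+1)+3)/(2n + 3n^2 + 5)$ from the excerpt at a few arbitrarily chosen values of $n$.

```python
def f(n):
    """The non-random sequence f(n) = (n(n+1) + 3) / (2n + 3n^2 + 5)."""
    return (n * (n + 1) + 3) / (2 * n + 3 * n**2 + 5)


for n in (1, 10, 1_000, 1_000_000):
    print(n, f(n))  # the values approach 1/3 as n grows
```

Dividing numerator and denominator by $n^2$ gives $(1 + 1/n + 3/n^2)/(3 + 2/n + 5/n^2)$, which makes the limit of 1/3 visible without any computation.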

The 'Delta Method'

Appendix B: The ‘Delta method’. Suppose you have done a study, over 4 years, which yields 3 estimates of survival (say, $\hat{\varphi}_1$, $\hat{\varphi}_2$, and $\hat{\varphi}_3$). But, suppose what you are really interested in is the estimate of the product of the three survival values (i.e., the probability of surviving from the beginning of the study to the end of the study)? While it is easy enough to derive an estimate of this product (as $[\hat{\varphi}_1 \times \hat{\varphi}_2 \times \hat{\varphi}_3]$), how do you derive an estimate of the variance of the product? In other words, how do you derive an estimate of the variance of a transformation of one or more random variables (in this case, we transform the three random variables $\hat{\varphi}_i$ by considering their product)? One commonly used approach which is easily implemented, not computer-intensive, and can be robustly applied in many (but not all) situations is the so-called Delta method (also known as the method of propagation of errors). In this appendix, we briefly introduce the underlying background theory, and the implementation of the Delta method, to fairly typical scenarios.

B.1. Mean and variance of random variables. Our primary interest here is developing a method that will allow us to estimate the mean and variance for functions of random variables. Let’s start by considering the formal approach for deriving these values explicitly, based on the method of moments. For continuous random variables, consider a continuous function $f(x)$ on the interval $[-\infty, +\infty]$. The first three moments of $f(x)$ can be written as

$$M_0 = \int_{-\infty}^{+\infty} f(x)\,dx, \qquad M_1 = \int_{-\infty}^{+\infty} x f(x)\,dx, \qquad M_2 = \int_{-\infty}^{+\infty} x^2 f(x)\,dx.$$

In the particular case that the function is a probability density (as for a continuous random variable), then $M_0 = 1$ (i.e., the area under the PDF must equal 1).
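The variance question posed in this excerpt has a standard first-order answer: approximate the variance of a function of the estimates by $\nabla g' \, \Sigma \, \nabla g$, where $\nabla g$ is the gradient of the function and $\Sigma$ is the covariance matrix of the estimates. The sketch below applies that formula to a product of three estimates. It assumes the estimates are independent (a simplification; estimates from one fitted model are often correlated, in which case the off-diagonal covariances must be included), and all numbers and the function name `product_variance_delta` are hypothetical.

```python
import math


def product_variance_delta(estimates, variances):
    """First-order (delta method) variance of a product of independent estimates.

    Assumes every estimate is nonzero.
    """
    product = math.prod(estimates)
    # The partial derivative of the product with respect to estimate i is product / estimate_i.
    gradient = [product / e for e in estimates]
    # With independent estimates the covariance matrix is diagonal.
    return sum(g * g * v for g, v in zip(gradient, variances))


phi_hat = [0.8, 0.7, 0.9]            # hypothetical survival estimates
var_hat = [0.0016, 0.0025, 0.0009]   # hypothetical sampling variances
product = math.prod(phi_hat)
var_product = product_variance_delta(phi_hat, var_hat)
print(product, var_product, math.sqrt(var_product))
```

The square root of the result is an approximate standard error for the estimated probability of surviving the whole study period.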

Econometrics-I-11.Pdf

Econometrics I. Professor William Greene, Stern School of Business, Department of Economics. Part 11: Hypothesis Testing.

Classical Hypothesis Testing. We are interested in using the linear regression to support or cast doubt on the validity of a theory about the real world counterpart to our statistical model. The model is used to test hypotheses about the underlying data generating process.

Types of Tests.
- Nested models: restriction on the parameters of a particular model. $y = \beta_1 + \beta_2 x + \beta_3 T + \varepsilon$, $\beta_3 = 0$. (The “treatment” works; $\beta_3 \neq 0$.)
- Nonnested models: e.g., different RHS variables. $y_t = \beta_1 + \beta_2 x_t + \beta_3 x_{t-1} + \varepsilon_t$ versus $y_t = \beta_1 + \beta_2 x_t + \beta_3 y_{t-1} + w_t$. (Lagged effects occur immediately or spread over time.)
- Specification tests: $\varepsilon \sim N[0, \sigma^2]$ vs. some other distribution. (The “null” spec. is true or some other spec. is true.)

Hypothesis Testing. Nested vs. nonnested specifications:
- $y = b_1 x + e$ vs. $y = b_1 x + b_2 z + e$: Nested
- $y = bx + e$ vs. $y = cz + u$: Not nested
- $y = bx + e$ vs. $\log y = c \log x$: Not nested
- $y = bx + e$; $e \sim$ Normal vs. $e \sim t[.]$: Not nested
- Fixed vs. random effects: Not nested
- Logit vs. probit: Not nested
- $x$ is (not) endogenous: Maybe nested. We’ll see …

Parametric restrictions:
- Linear: $R\beta - q = 0$, $R$ is $J \times K$, $J < K$, full row rank
- General: $r(\beta, q) = 0$, $r$ = a vector of $J$ functions, $R(\beta, q) = \partial r(\beta, q)/\partial \beta'$.
- Use $r(\beta, q) = 0$ for linear and nonlinear cases.

Broad Approaches. Bayesian: Does not reach a firm conclusion.

Chapter 5 the Delta Method and Applications

Chapter 5 The Delta Method and Applications. 5.1 Linear approximations of functions. In the simplest form of the central limit theorem, Theorem 4.18, we consider a sequence $X_1, X_2, \dots$ of independent and identically distributed (univariate) random variables with finite variance $\sigma^2$. In this case, the central limit theorem states that

$$\sqrt{n}\,(\bar{X}_n - \mu) \xrightarrow{d} \sigma Z, \qquad (5.1)$$

where $\mu = E\,X_1$ and $Z$ is a standard normal random variable. In this chapter, we wish to consider the asymptotic distribution of, say, some function of $\bar{X}_n$. In the simplest case, the answer depends on results already known: Consider a linear function $g(t) = at + b$ for some known constants $a$ and $b$. Since $E\,\bar{X}_n = \mu$, clearly $E\,g(\bar{X}_n) = a\mu + b = g(\mu)$ by the linearity of the expectation operator. Therefore, it is reasonable to ask whether $\sqrt{n}\,[g(\bar{X}_n) - g(\mu)]$ tends to some distribution as $n \to \infty$. But the linearity of $g(t)$ allows one to write

$$\sqrt{n}\,\left[g(\bar{X}_n) - g(\mu)\right] = a\sqrt{n}\,\left(\bar{X}_n - \mu\right).$$

We conclude by Theorem 2.24 that

$$\sqrt{n}\,\left[g(\bar{X}_n) - g(\mu)\right] \xrightarrow{d} a\sigma Z.$$

Of course, the distribution on the right hand side above is $N(0, a^2\sigma^2)$. None of the preceding development is especially deep; one might even say that it is obvious that a linear transformation of the random variable $\bar{X}_n$ alters its asymptotic distribution by a constant multiple. Yet what if the function $g(t)$ is nonlinear? It is in this nonlinear case that a strong understanding of the argument above, as simple as it may be, pays real dividends.


Stat 8931 (Aster Models) Lecture Slides Deck 4: Large Sample

Stat 8931 (Aster Models) Lecture Slides Deck 4: Large Sample Theory and Estimating Population Growth Rate. Charles J. Geyer, School of Statistics, University of Minnesota. October 8, 2018.

R and License. The version of R used to make these slides is 3.5.1. The version of R package aster used to make these slides is 1.0.2. This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License (http://creativecommons.org/licenses/by-sa/4.0/).

The Delta Method. The delta method is a method (duh!) of deriving the approximate distribution of a nonlinear function of an estimator from the approximate distribution of the estimator itself. What it does is linearize the nonlinear function. If $g$ is a nonlinear, differentiable vector-to-vector function, the best linear approximation, which is the Taylor series up through linear terms, is

$$g(y) - g(x) \approx \nabla g(x)\,(y - x),$$

where $\nabla g(x)$ is the matrix of partial derivatives, sometimes called the Jacobian matrix. If $g_i(x)$ denotes the $i$-th component of the vector $g(x)$, then the $(i, j)$-th component of the Jacobian matrix is $\partial g_i(x)/\partial x_j$.

The Delta Method (cont.). The delta method is particularly useful when $\hat{\theta}$ is an estimator and $\theta$ is the unknown true (vector) parameter value it estimates, and the delta method says

$$g(\hat{\theta}) - g(\theta) \approx \nabla g(\theta)\,(\hat{\theta} - \theta).$$

It is not necessary that $\theta$ and $g(\theta)$ be vectors of the same dimension. Hence it is not necessary that $\nabla g(\theta)$ be a square matrix.

The Delta Method (cont.). The delta method gives good or bad approximations depending on whether the spread of the distribution of $\hat{\theta} - \theta$ is small or large compared to the nonlinearity of the function $g$ in the neighborhood of $\theta$.
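The closing remark of this excerpt, that the linearization is good or bad depending on how far the estimator strays relative to the curvature of $g$, can be seen in a scalar example. The sketch below is an illustration under simple assumptions, not code from the slides: it takes $g(x) = e^x$ (so the derivative is also $e^x$) and compares the first-order Taylor error for a small and a large deviation from $x = 1$. The helper name `linearization_error` is hypothetical.

```python
import math


def linearization_error(g, dg, x, y):
    """Absolute error of the first-order Taylor approximation g(y) ~ g(x) + dg(x) * (y - x)."""
    return abs(g(y) - (g(x) + dg(x) * (y - x)))


x = 1.0
small = linearization_error(math.exp, math.exp, x, x + 0.01)  # y close to x
large = linearization_error(math.exp, math.exp, x, x + 1.0)   # y far from x
print(small, large)
```

The error grows roughly with the square of the deviation, which is why the delta method works well when the sampling distribution of the estimator is tightly concentrated around the true value.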

Package 'Lmtest'

Package ‘lmtest’, September 9, 2020.

Title: Testing Linear Regression Models. Version: 0.9-38. Date: 2020-09-09. Description: A collection of tests, data sets, and examples for diagnostic checking in linear regression models. Furthermore, some generic tools for inference in parametric models are provided. LazyData: yes. Depends: R (>= 3.0.0), stats, zoo. Suggests: car, strucchange, sandwich, dynlm, stats4, survival, AER. Imports: graphics. License: GPL-2 | GPL-3. NeedsCompilation: yes. Author: Torsten Hothorn [aut] (<https://orcid.org/0000-0001-8301-0471>), Achim Zeileis [aut, cre] (<https://orcid.org/0000-0003-0918-3766>), Richard W. Farebrother [aut] (pan.f), Clint Cummins [aut] (pan.f), Giovanni Millo [ctb], David Mitchell [ctb]. Maintainer: Achim Zeileis <[email protected]>. Repository: CRAN. Date/Publication: 2020-09-09 05:30:11 UTC.

R topics documented: bgtest, bondyield, bptest, ChickEgg, coeftest, coxtest, currencysubstitution, dwtest, encomptest, ftemp, gqtest, grangertest, growthofmoney, harvtest, hmctest, jocci, jtest, lrtest, Mandible, moneydemand, petest, raintest, resettest, unemployment, USDistLag, valueofstocks, wages, waldtest.

bgtest: Breusch-Godfrey Test. Description: `bgtest` performs the Breusch-Godfrey test for higher-order serial correlation. Usage: `bgtest(formula, order = 1, order.by = NULL, type = c("Chisq", "F"), data = list(), fill = 0)`. Arguments: `formula`: a symbolic description for the model to be tested (or a fitted "lm" object). `order`: integer, maximal order of serial correlation to be tested. `order.by`: either a vector `z` or a formula with a single explanatory variable like `~ z`.