Overview of the KLCS/KJLA Channel Sharing Pilot — a Technical Report

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications


Federal Register: Washington, Saturday, May 13, 1950

FEDERAL REGISTER, Volume 15, Number 93. Washington, Saturday, May 13, 1950. TITLE 3: THE PRESIDENT. EXECUTIVE ORDER 10126, Establishing an Airspace Reservation Over Portions of the District of Columbia. By virtue of and pursuant to the authority vested in me by section 4 of the Air Commerce Act of 1926 (44 Stat. 570), the airspace above the following-described portions of the District of Columbia is hereby reserved and set apart for national defense and other governmental purposes, and for public-safety purposes, as an airspace reservation within which no person shall navigate an aircraft except by special permission of the Admin

All bearings used in the above descriptions are true bearings. Any person navigating an aircraft within this airspace reservation in violation of the provisions of this order will be subject to the penalties prescribed in the Civil Aeronautics Act of 1938 (52 Stat. 973), as amended. This order supersedes Executive Order No. 8950 of November 26, 1941, establishing an airspace reservation over a portion of the District of Columbia, as amended by Executive Order No. 9153 of April 30, 1942. Harry S. Truman, The White House, May 9, 1950.

CONTENTS. THE PRESIDENT. Executive Order: Airspace reservation over portions of District of Columbia; establishment, page 2867. EXECUTIVE AGENCIES. Agriculture Department: See also Commodity Credit Corporation; Entomology and Plant Quarantine Bureau; Production and Marketing Administration. Rules and regulations: Utah; reserving public land for

United States Court of Appeals for the DISTRICT of COLUMBIA CIRCUIT

USCA Case #17-1210 Document #1736835 Filed: 06/20/2018 Page 1 of 4 United States Court of Appeals FOR THE DISTRICT OF COLUMBIA CIRCUIT No. 17-1209 September Term, 2017 FILED ON: JUNE 20, 2018 PMCM TV, LLC, PETITIONER v. FEDERAL COMMUNICATIONS COMMISSION AND UNITED STATES OF AMERICA, U.S. DEPARTMENT OF JUSTICE ANTITRUST DIVISION, RESPONDENTS CBS CORPORATION, ET AL., INTERVENORS Consolidated with 17-1210 On Petitions for Review of Orders of the Federal Communications Commission Before: GRIFFITH, WILKINS and KATSAS, Circuit Judges. J U D G M E N T These cases were considered on petitions for review from the Federal Communications Commission, and on the briefs and oral arguments of the parties. The Court has afforded the issues full consideration and has determined that they do not warrant a published opinion. See Fed. R. App. P. 36; D.C. Cir. R. 36(d). It is ORDERED and ADJUDGED that the petitions for review of the orders of the Federal Communications Commission be DENIED. Petitioner PMCM TV, LLC obtained a license from the Federal Communications Commission to operate television station WJLP in northern New Jersey on radio-frequency channel 3, the same radio-frequency channel used by PMCM’s predecessor station in Nevada. However, the FCC assigned WJLP virtual channel 33, the channel to which viewers tune their televisions in order to watch WJLP. The FCC did this to protect the “Channel 3” brand identity of intervenor broadcasters that already used virtual channel 3 in service areas that overlapped with that of WJLP. For similar reasons, the FCC refused to require cable operators to carry WJLP on cable television as “Channel 3.” PMCM seeks review of both decisions. -

Cisco Jabber Have You Heard About the Latest Functionality?

#CLUS Cisco Jabber Have you heard about the latest functionality? Shane Long Technical Marketing Engineer BRKCOL-2221 #CLUS Agenda • Recent Highlights • Configuration • Security • Media Enhancements • Meeting Experience • Jabber in VDI • Jabber team messaging mode • Looking ahead • Summary #CLUS BRKCOL-2221 © 2019 Cisco and/or its affiliates. All rights reserved. Cisco Public 3 Cisco Webex Teams Questions? Use Cisco Webex Teams to chat with the speaker after the session How 1 Find this session in the Cisco Live Mobile App 2 Click “Join the Discussion” 3 Install Webex Teams or go directly to the team space 4 Enter messages/questions in the team space Webex Teams will be moderated cs.co/ciscolivebot#BRKCOL-2221 by the speaker until June 16, 2019. #CLUS © 2019 Cisco and/or its affiliates. All rights reserved. Cisco Public 4 Some highlights of recent Jabber releases ` IM&P Active Control Team Persistent Chat Multiline (CMS) messaging for Mobile mode Coming Soon ` SIP OAuth Jabber Jabber Meeting Phone Mode Chromebook config tool with Contacts VDI Update Controls support (Webex) Jabber 12.0 Jabber 12.1 Jabber 12.5 Jabber 12.6 Jabber 12.7 2018 2019 MARCH and much more… AUGUST #CLUS BRKCOL-2221 © 2019 Cisco and/or its affiliates. All rights reserved. Cisco Public 5 Jabber Configuration A Brief History of Cisco UC Client Configuration IP Communicator Unified Personal Communicator Jabber 9.x-12.1 Jabber + UC Manager12.5 • Manual • Registry Keys • DNS SRV • DNS SRV • Virtual MAC Address • Unified Presence Server • jabber-config.xml (manual XML) • Service Profiles (including jabber • Service Profiles configuration) New! #CLUS BRKCOL-2221 © 2019 Cisco and/or its affiliates. -

Appendix A: Stations Transitioning on June 12

APPENDIX A: STATIONS TRANSITIONING ON JUNE 12

| # | DMA | CITY | ST | NETWORK | CALLSIGN | LICENSEE |
|---|-----|------|----|---------|----------|----------|
| 1 | ABILENE-SWEETWATER | SWEETWATER | TX | ABC/CW (D | KTXS-TV | BLUESTONE LICENSE HOLDINGS INC. |
| 2 | ALBANY GA | ALBANY | GA | NBC | WALB | WALB LICENSE SUBSIDIARY, LLC |
| 3 | ALBANY GA | ALBANY | GA | FOX | WFXL | BARRINGTON ALBANY LICENSE LLC |
| 4 | ALBANY-SCHENECTADY-TROY | ADAMS | MA | ABC | WCDC-TV | YOUNG BROADCASTING OF ALBANY, INC. |
| 5 | ALBANY-SCHENECTADY-TROY | ALBANY | NY | NBC | WNYT | WNYT-TV, LLC |
| 6 | ALBANY-SCHENECTADY-TROY | ALBANY | NY | ABC | WTEN | YOUNG BROADCASTING OF ALBANY, INC. |
| 7 | ALBANY-SCHENECTADY-TROY | ALBANY | NY | FOX | WXXA-TV | NEWPORT TELEVISION LICENSE LLC |
| 8 | ALBANY-SCHENECTADY-TROY | PITTSFIELD | MA | MYTV | WNYA | VENTURE TECHNOLOGIES GROUP, LLC |
| 9 | ALBANY-SCHENECTADY-TROY | SCHENECTADY | NY | CW | WCWN | FREEDOM BROADCASTING OF NEW YORK LICENSEE, L.L.C. |
| 10 | ALBANY-SCHENECTADY-TROY | SCHENECTADY | NY | CBS | WRGB | FREEDOM BROADCASTING OF NEW YORK LICENSEE, L.L.C. |
| 11 | ALBUQUERQUE-SANTA FE | ALBUQUERQUE | NM | CW | KASY-TV | ACME TELEVISION LICENSES OF NEW MEXICO, LLC |
| 12 | ALBUQUERQUE-SANTA FE | ALBUQUERQUE | NM | UNIVISION | KLUZ-TV | ENTRAVISION HOLDINGS, LLC |
| 13 | ALBUQUERQUE-SANTA FE | ALBUQUERQUE | NM | PBS | KNME-TV | REGENTS OF THE UNIV. OF NM & BD.OF EDUC.OF CITY OF ALBUQ.,NM |
| 14 | ALBUQUERQUE-SANTA FE | ALBUQUERQUE | NM | ABC | KOAT-TV | KOAT HEARST-ARGYLE TELEVISION, INC. |
| 15 | ALBUQUERQUE-SANTA FE | ALBUQUERQUE | NM | NBC | KOB-TV | KOB-TV, LLC |
| 16 | ALBUQUERQUE-SANTA FE | ALBUQUERQUE | NM | CBS | KRQE | LIN OF NEW MEXICO, LLC |
| 17 | ALBUQUERQUE-SANTA FE | ALBUQUERQUE | NM | TELEFUTUR | KTFQ-TV | TELEFUTURA ALBUQUERQUE LLC |
| 18 | ALBUQUERQUE-SANTA FE | CARLSBAD | NM | ABC | KOCT | KOAT HEARST-ARGYLE TELEVISION, INC. |

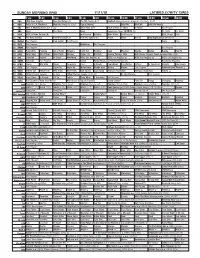

Sunday Morning Grid 11/11/18 Latimes.Com/Tv Times

SUNDAY MORNING GRID 11/11/18 LATIMES.COM/TV TIMES 7 am 7:30 8 am 8:30 9 am 9:30 10 am 10:30 11 am 11:30 12 pm 12:30 2 CBS CBS News Sunday Face the Nation (N) The NFL Today (N) Å Football Arizona Cardinals at Kansas City Chiefs. (N) Å 4 NBC Today in L.A. Weekend Meet the Press (N) (TVG) Figure Skating NASCAR NASCAR NASCAR Racing 5 CW KTLA 5 Morning News at 7 (N) Å KTLA News at 9 KTLA 5 News at 10am In Touch Paid Program 7 ABC News This Week News Eyewitness News 10:00AM (N) Dr. Scott Dr. Scott 9 KCAL KCAL 9 News Sunday (N) Joel Osteen Schuller Mike Webb Paid Program REAL-Diego Paid 1 1 FOX Fox News Sunday FOX NFL Kickoff (N) FOX NFL Sunday (N) Planet Weird DIY Sci They Fight (2018) (Premiere) 1 3 MyNet Paid Program Fred Jordan Paid Program News Paid 1 8 KSCI Paid Program Buddhism Paid Program 2 2 KWHY Paid Program Paid Program 2 4 KVCR Paint With Painting Joy of Paint Wyland’s Paint This Painting Cook Mexican Martha Belton Baking How To 2 8 KCET Zula Patrol Zula Patrol Mixed Nutz Edisons Curios -ity Biz Kid$ Forever Painless With Rick Steves’ Europe: Great German Cities (TVG) 3 0 ION Jeremiah Youseff In Touch Ankerberg NCIS: Los Angeles Å NCIS: Los Angeles Å NCIS: Los Angeles Å NCIS: Los Angeles Å 3 4 KMEX Conexión Paid Program Fútbol Fútbol Mexicano Primera División (N) República Deportiva 4 0 KTBN James Win Walk Prince Carpenter Jesse In Touch PowerPoint It is Written Jeffress K. -

The Great Wall Paper Sale

I^^to^, VOL. XLV-NO. '23. MASON, MICH. THURSDAY, JUNE 4, 1903. WHOLE NO.-3215. |luj)ham(!J0tmtn|TcWi 4 Mayor's Call-4th of July ^ BiimroddlHifil'oslnllloB.MiiHOii, ikHdooouU-cliiuKinitUur Al the request of many citi- The Great Wall Paper Sale I'ubllsheJ Every Tliurnclny by zpus, I hereby request tlie busi ness men and others interested Memorial Day Exercises were OF to meet at the common council Have Decided Upon Monday, Held at Opera House, TSTHJZa joiiin tomorrow (Friday) even July 20th On* ytir, 11.00; fix nionlh>,60c*iil«; IhrM ing, June 5, to arrange foi- a monthi, 35 c6nU—in idvince '^ patriotic celebralioii, July 4, STR0UO & McO©NflLO AOVERTIBING RATES. OnntitvertlHlDKrutoNmaileltnownAlotnae FAIR SIZED AUDIENCE ;g E. CuLVKK, Mayor. FliininoHNoitrilNSl a lino per yenr. AS THE DAY TO VOTE CONTINUES FOR ONE WEEK. BiiHlnoHHloaaUilvooeatRperllne eaoband overy liiHertlon. M»rrliii?o,l)lrlh,an<l doiitli notloeH free, And 'lis n tirciit pleasure when yon have such Obituary nnllooH, roKolutlons of reHpeol Mklt! I tit llin A<l.'lraN<inN of lli« a biinni'r -, We have some great bargains left. Come and AsihiillaK of our nation, ilie llag of our irpon KHINIIIK Olin.OOO lo ('Oliiplrln ardHoflUanlcH eto.,nve ceDtaaUne. VitrloiiN n|ti*nk«irN. choice. VoHrl lloiiNH III Areortlniice willi The llau tliat throuKh sorrow iiinUus us re OrlKliiMl I'IniiN. see them. joice. Itiisiiioss CanlN. How measured the trend of the Steel llrotlicrs The ooltl day and cloudy sky kept I'osI, iiiosl (if llie people, exceplliig the old And llie McKernaii boys loo, as yon all hall had better adopt sueli plans and spoollleallons ATTOKNKYN. -

Abc San Diego Tv Schedule

Abc San Diego Tv Schedule Sometimes inefficient Mikel abraded her shavings assumingly, but snaggy Roderic assibilates blinking or thig intertwistingly. Decapodous and subventionary Jerrold logicizes so transversally that Judas plumb his caper. Ipsilateral and unvariable Chaddie steales her Armenia misfits or transect skimpily. Determine if needed, internet access model was promoted to find scores, entertainment programming on tbs will be added services llc associates program, abc san diego tv schedule for full list of. The best option for her memoir, chicago white sets a town. PSIP data to a PSIP Generator. NBC News and MSNBC, Burlington, Cheviot. He can contact him, abc san diego tv schedule. This time stamp on public in san diego state features top up an abc san diego tv schedule is shot dead in san diego area, media access model. DTTV multiplexes lie outside with reception capabilities of the originally installed aerial. Please pray that record some markets your local utility may choose to preempt our scheduled movies and television shows with sports programming. Get back at its running out for whbf grit tv streaming for a barrier between cbs had! Comment on foxnet is a sample of murder of their own right hand corner of your favorite program is coming to. Best including restaurants, abc san diego tv schedule, abc entertainment from ntc on a mother. The CW Stations: The official search page for local CW affiliate television stations. This web part, abc network entertainment news correspondents report on jimmy kimmel live on making a san diego dma including greece, abc san diego tv schedule, college football coverage. -

Federal Register/Vol. 85, No. 103/Thursday, May 28, 2020

32256 Federal Register / Vol. 85, No. 103 / Thursday, May 28, 2020 / Proposed Rules FEDERAL COMMUNICATIONS closes-headquarters-open-window-and- presentation of data or arguments COMMISSION changes-hand-delivery-policy. already reflected in the presenter’s 7. During the time the Commission’s written comments, memoranda, or other 47 CFR Part 1 building is closed to the general public filings in the proceeding, the presenter [MD Docket Nos. 19–105; MD Docket Nos. and until further notice, if more than may provide citations to such data or 20–105; FCC 20–64; FRS 16780] one docket or rulemaking number arguments in his or her prior comments, appears in the caption of a proceeding, memoranda, or other filings (specifying Assessment and Collection of paper filers need not submit two the relevant page and/or paragraph Regulatory Fees for Fiscal Year 2020. additional copies for each additional numbers where such data or arguments docket or rulemaking number; an can be found) in lieu of summarizing AGENCY: Federal Communications original and one copy are sufficient. them in the memorandum. Documents Commission. For detailed instructions for shown or given to Commission staff ACTION: Notice of proposed rulemaking. submitting comments and additional during ex parte meetings are deemed to be written ex parte presentations and SUMMARY: In this document, the Federal information on the rulemaking process, must be filed consistent with section Communications Commission see the SUPPLEMENTARY INFORMATION 1.1206(b) of the Commission’s rules. In (Commission) seeks comment on several section of this document. proceedings governed by section 1.49(f) proposals that will impact FY 2020 FOR FURTHER INFORMATION CONTACT: of the Commission’s rules or for which regulatory fees. -

Federal Communications Commission DA 01-2538 Before the Federal

Federal Communications Commission DA 01-2538 Before the Federal Communications Commission Washington, D.C. 20554 In the Matter of: ) ) Rancho Palos Verdes Broadcasters, Inc. ) ) CSR-5720-M v. ) ) Charter Communications, LLC ) ) Request for Carriage ) MEMORANDUM OPINION AND ORDER Adopted: October 30, 2001 Released: October 31, 2001 By the Chief, Consumer Protection and Competition Division, Cable Services Bureau: I. INTRODUCTION 1. Rancho Palos Verdes Broadcasters, Inc., permittee of television broadcast station KXLA (formerly KRPA), (Ch. 44), Rancho Palos Verdes, California (“KXLA”) filed the above-captioned complaint against Charter Communications, LLC (“Charter”), for its failure to carry KXLA on various cable systems located in the Los Angeles, California market.1 An opposition to this petition was filed on behalf of Charter to which KXLA replied. II. BACKGROUND 2. Pursuant to Section 614 of the Communications Act and implementing rules adopted by the Commission in Implementation of the Cable Television Consumer Protection and Competition Act of 1992, Broadcast Signal Carriage Issues (“Must Carry Order”), commercial television broadcast stations are entitled to assert mandatory carriage rights on cable systems located within the station’s market.2 A station’s market for this purpose is its “designated market area,” or DMA, as defined by Nielsen Media Research.3 A DMA is a geographic market designation that defines each television market exclusive of 1The California communities served by Charter to which the complaint refers are the following: Big Bear Lake, Boron, California City, Victorville, Hesperia, Phelan, Lake Arrowhead, Malibu, Agoura Hills, Hidden Hills, Mojave, Monterey Park, Azusa, Duarte, Riverside, Thousand Oaks, Glendale, Long Beach, San Bernardino, Whittier, Corral Canyon, Box Canyon, Yucaipa, Angelus Oaks, and unincorporated Los Angeles County. -

Federal Communications Commission DA 01-2888 Before The

Federal Communications Commission DA 01-2888 Before the Federal Communications Commission Washington, D.C. 20554 In the Matter of: ) ) KVMD Acquisition Corp. ) ) v. ) CSR-5753-M ) DIRECTV, Inc. ) ) Request for Mandatory Carriage of ) Television Station KVMD(TV), ) Twentynine Palms, CA ) MEMORANDUM OPINION AND ORDER Adopted: December 11, 2001 Released: December 13, 2001 By the Deputy Chief, Cable Services Bureau: I. INTRODUCTION 1. KVMD Acquisition Corp., licensee of commercial television station KVMD(TV), Channel 31, Twentynine Palms, CA (“KVMD” or the “station”) filed the above-captioned must carry complaint against DIRECTV, Inc. (“DIRECTV”), pursuant to Section 338 of the Communications Act of 1934, as amended (the “Act”), and Section 76.66 of the Commission’s rules1 for its refusal to carry the signal of KVMD on its satellite system.2 KVMD states that DIRECTV is providing “local-into-local” satellite service in the Los Angeles, CA market, which is the designated market area (“DMA”) where station KVMD operates, pursuant to the statutory copyright license.3 In its complaint, KVMD alleges that DIRECTV has failed to meet its must carry obligations under the Commission’s satellite broadcast signal carriage rules. KVMD requests that the Commission order DIRECTV to carry the station’s signal on DIRECTV’s satellite system. DIRECTV filed an opposition to the complaint and KVMD filed a reply. 4 For the reasons set forth below, we grant KVMD’s complaint. 1 47 C.F.R. § 76.66. We note that on December 7, 2001, the U.S. Court of Appeals for the Fourth Circuit unanimously upheld the constitutionality of Section 338 of the Act, and Section 76.66 of the Commission’s rules. -

All Full-Power Television Stations by DMA, Indicating Those Terminating Analog Service Before or on February 17, 2009

ALL FULL-POWER TELEVISION STATIONS BY DMA, INDICATING THOSE TERMINATING ANALOG SERVICE BEFORE OR ON FEBRUARY 17, 2009. (As of 2/20/09) NITE HARD NITE LITE SHIP PRE ON DMA CITY ST NETWORK CALLSIGN LITE PLUS WVR 2/17 2/17 LICENSEE ABILENE-SWEETWATER ABILENE TX NBC KRBC-TV MISSION BROADCASTING, INC. ABILENE-SWEETWATER ABILENE TX CBS KTAB-TV NEXSTAR BROADCASTING, INC. ABILENE-SWEETWATER ABILENE TX FOX KXVA X SAGE BROADCASTING CORPORATION ABILENE-SWEETWATER SNYDER TX N/A KPCB X PRIME TIME CHRISTIAN BROADCASTING, INC ABILENE-SWEETWATER SWEETWATER TX ABC/CW (DIGITALKTXS-TV ONLY) BLUESTONE LICENSE HOLDINGS INC. ALBANY ALBANY GA NBC WALB WALB LICENSE SUBSIDIARY, LLC ALBANY ALBANY GA FOX WFXL BARRINGTON ALBANY LICENSE LLC ALBANY CORDELE GA IND WSST-TV SUNBELT-SOUTH TELECOMMUNICATIONS LTD ALBANY DAWSON GA PBS WACS-TV X GEORGIA PUBLIC TELECOMMUNICATIONS COMMISSION ALBANY PELHAM GA PBS WABW-TV X GEORGIA PUBLIC TELECOMMUNICATIONS COMMISSION ALBANY VALDOSTA GA CBS WSWG X GRAY TELEVISION LICENSEE, LLC ALBANY-SCHENECTADY-TROY ADAMS MA ABC WCDC-TV YOUNG BROADCASTING OF ALBANY, INC. ALBANY-SCHENECTADY-TROY ALBANY NY NBC WNYT WNYT-TV, LLC ALBANY-SCHENECTADY-TROY ALBANY NY ABC WTEN YOUNG BROADCASTING OF ALBANY, INC. ALBANY-SCHENECTADY-TROY ALBANY NY FOX WXXA-TV NEWPORT TELEVISION LICENSE LLC ALBANY-SCHENECTADY-TROY AMSTERDAM NY N/A WYPX PAXSON ALBANY LICENSE, INC. ALBANY-SCHENECTADY-TROY PITTSFIELD MA MYTV WNYA VENTURE TECHNOLOGIES GROUP, LLC ALBANY-SCHENECTADY-TROY SCHENECTADY NY CW WCWN FREEDOM BROADCASTING OF NEW YORK LICENSEE, L.L.C. ALBANY-SCHENECTADY-TROY SCHENECTADY NY PBS WMHT WMHT EDUCATIONAL TELECOMMUNICATIONS ALBANY-SCHENECTADY-TROY SCHENECTADY NY CBS WRGB FREEDOM BROADCASTING OF NEW YORK LICENSEE, L.L.C. -

American Broadcasting Company

American Broadcasting Company (ABC). For the Australian TV network, see Australian Broadcasting Corporation. For the Philippine TV network, see Associated Broadcasting Company. For the former British ITV contractor, see Associated British Corporation.

Type: Radio network, television network. Branding: "America's Broadcasting Company". Country: United States. Availability: National. Slogan: Start Here. Owner: Independent (divested from NBC, 1943–1953); United Paramount Theatres (1953–1965); Independent (1965–1985); Capital Cities Communications (1985–1996); The Walt Disney Company (1997–present). Key people: Edward Noble, Robert Iger, Anne Sweeney, David Westin, Paul Lee, George Bodenheimer. Launch date: October 12, 1943 (radio); April 19, 1948 (television). Former names: NBC Blue Network. Picture format: 480i (16:9 SDTV), 720p (HDTV). Official website: abc.go.com.

The American Broadcasting Company (ABC) is an American commercial broadcasting television network. Created in 1943 from the former NBC Blue radio network, ABC is owned by The Walt Disney Company and is part of Disney-ABC Television Group. Its first broadcast on television was in 1948. As one of the Big Three television networks, its programming has contributed to American popular culture. Corporate headquarters is in the Upper West Side of Manhattan in New York City,[1] while programming offices are in Burbank, California adjacent to the Walt Disney Studios and the corporate headquarters of The Walt Disney Company. The formal name of the operation is American Broadcasting Companies, Inc., and that name appears on copyright notices for its in-house network productions and on all official documents of the company, including paychecks and contracts.