(I123)FP-CIT Reporting: Machine Learning, Effectiveness and Clinical Integration

Total Pages: 16

File Type: pdf, Size: 1020 Kb


Recommended publications


Managing Terrorism Or Accidental Nuclear Errors, Preparing for Iodine-131 Emergencies: a Comprehensive Review

Int. J. Environ. Res. Public Health 2014, 11, 4158-4200; doi:10.3390/ijerph110404158. Open Access. International Journal of Environmental Research and Public Health, ISSN 1660-4601, www.mdpi.com/journal/ijerph. Review: Managing Terrorism or Accidental Nuclear Errors, Preparing for Iodine-131 Emergencies: A Comprehensive Review. Eric R. Braverman 1,2,3, Kenneth Blum 1,2,*, Bernard Loeffke 2, Robert Baker 4,5, Florian Kreuk 2, Samantha Peiling Yang 6 and James R. Hurley 7. 1 Department of Psychiatry, College of Medicine, University of Florida and McKnight Brain Institute, Gainesville, FL 32610, USA; 2 Department of Clinical Neurology, PATH Foundation NY, New York, NY 10010, USA; 3 Department of Neurosurgery, Weill-Cornell Medical College, New York, NY 10065, USA; 4 Department of Biological Sciences, Texas Tech University, Lubbock, TX 79409, USA; 5 Natural Science Research Laboratory, Museum of Texas Tech University, Lubbock, TX 79409, USA; 6 Department of Endocrinology, National University Hospital of Singapore, Singapore 119228; 7 Department of Medicine, Weill Cornell Medical College, New York, NY 10065, USA. * Author to whom correspondence should be addressed; Tel.: +1-646-367-7411 (ext. 123); Fax: +1-212-213-6188. Received: 5 February 2014; in revised form: 26 March 2014 / Accepted: 28 March 2014 / Published: 15 April 2014. Abstract: Chernobyl demonstrated that iodine-131 (131I) released in a nuclear accident can cause malignant thyroid nodules to develop in children within a 300 mile radius of the incident.

Use of Radioactive Iodine As a Tracer in Water-Flooding Operations

USE OF RADIOACTIVE IODINE AS A TRACER IN WATER-FLOODING OPERATIONS. J. WADE WATKINS, BUREAU OF MINES, BARTLESVILLE, OKLA., MEMBER AIME; E. S. MARDOCK, WELL SURVEYS, INC., TULSA, OKLA., MEMBER AIME. T. P. 3894. ABSTRACT: The accurate evaluation of reservoir-performance characteristics in the secondary recovery of petroleum by water flooding requires use of a water tracer that may be injected into water-input wells and detected at oil-production wells to supplement data obtained from core analyses, wellhead tests, and subsurface measurements. Radioactive iodine has been used successfully as a water tracer in field tests to determine: (1) relative rates and patterns of flow of injected water between water-input and oil-production wells and (2) zones of excessive water entry into oil-production wells. Laboratory evaluations of potential water tracers, previous tracer studies, the value of using a radioactive tracer, general field procedures, and the use of surface [...] assumed that the physical conditions in the productive formation are homogeneous. Unfortunately, homogeneous conditions rarely, if ever, exist in oil-productive formations. The use of a tracer that may be injected into an oil sand and detected quantitatively, or even qualitatively, at offsetting oil-production wells provides basic data that may be used in determining more accurately the subsurface rates and patterns of flow of injected water between wells than is possible by theoretical calculations based on assumed conditions. Consideration of the data obtained by using a water tracer assists in the application of remedial measures to water-input wells, such as plugging of channels, or selective plugging of highly permeable zones, thereby effecting a more uniform flood and a greater ultimate oil recovery.

Procedure Guideline for Tumor Imaging with 18F-FDG PET/CT 1.0*

Procedure Guideline for Tumor Imaging with 18F-FDG PET/CT 1.0* Dominique Delbeke1, R. Edward Coleman2, Milton J. Guiberteau3, Manuel L. Brown4, Henry D. Royal5, Barry A. Siegel5, David W. Townsend6, Lincoln L. Berland7, J. Anthony Parker8, Karl Hubner9, Michael G. Stabin10, George Zubal11, Marc Kachelriess12, Valerie Cronin13, and Scott Holbrook14 1Vanderbilt University Medical Center, Nashville, Tennessee; 2Duke University Medical Center, Durham, North Carolina; 3Christus St. Joseph Hospital, Houston, Texas; 4Henry Ford Hospital, Detroit, Michigan; 5Mallinckrodt Institute of Radiology, St. Louis, Missouri; 6University of Tennessee, Knoxville, Tennessee; 7University of Alabama Hospital, Birmingham, Alabama; 8Beth Israel Deaconess Hospital, Boston, Massachusetts; 9University of Tennessee Medical Center, Knoxville, Tennessee; 10Vanderbilt University, Nashville, Tennessee; 11Yale University, New Haven, Connecticut; 12Institute of Medical Physics, University of Erlangen-Nurnberg, Erlangen, Germany; 13Mercy Hospital, Buffalo, New York; and 14Precision Nuclear, Gray, Tennessee. I. PURPOSE: The purpose of these guidelines is to assist physicians in recommending, performing, interpreting, and reporting the results of 18F-FDG PET/CT for oncologic imaging of adult and pediatric patients. II. BACKGROUND INFORMATION AND DEFINITIONS: PET is a tomographic scintigraphic technique in which a computer-generated image of local radioactive tracer distribution in tissues is produced through the detection of annihilation photons that are emitted when radionuclides [...] available for several years, the readily apparent and documented advantages of having PET and CT in a single device have resulted in the rapid dissemination of this technology in the United States. This Procedure Guideline pertains only to combined PET/CT devices. Definitions: A. A PET/CT scanner is an integrated device containing both a CT scanner and a PET scanner with a single patient table and therefore capable of obtaining a CT scan, a PET scan, or both.

Radionuclides As Tracers

XA9847600 Chapter 3 RADIONUCLIDES AS TRACERS R.D. Ganatra Nuclear Medicine is usually defined as a "clinical speciality devoted to diagnostic, therapeutic and research applications of internally administered radionuclides." Diagnostic implies both in vivo and in vitro uses. In modern times, there is hardly any medical research, where a radioactive tracer is not used in some form or other. Normally basic medical research is not considered as nuclear medicine, but clinical research applications of radioisotopes are considered as an integral part of this speciality. Radioisotopes are elements having the same atomic number but different atomic weights. For example, 131I, 125I, 123I are all isotopes of the same element. Their chemical and biological behaviours are expected to be identical. The slight differences in the weights, that they have, is due to differences in the number of particles that they hold inside the nucleus. Some isotopes are perturbed by this kind of change in the nuclear structure. They become unstable, and emit radiation till they reach stable state. These are called radioisotopes. Radioisotopes have few immutable characteristics: they are unstable, they all disintegrate at a specific rate, and they all emit radiations, which have a specific energy pattern. Importance of radioisotopes in medicine is because of their two characteristics: their biological behaviour is identical to their stable counterparts, and because they are radioactive their emissions can be detected by a suitable instrument. All isotopes of iodine will behave in the same way and will concentrate in the thyroid gland. There is no way of detecting the stable, natural iodine in the thyroid gland, but the presence of radioactive iodine can be detected externally in vivo by a detector.

THE USE OF TECHNETIUM 99m AS A CLINICAL TRACER ELEMENT

Postgrad Med J: first published as 10.1136/pgmj.41.481.656 on 1 November 1965. POSTGRAD. MED. J. (1965), 41, 656. THE USE OF TECHNETIUM 99m AS A CLINICAL TRACER ELEMENT. R. HERBERT, B.Sc., A.Inst.P.; W. KULKE, M.B., B.Ch., D.M.R.T., M.Rad.; R. T. H. SHEPHERD, B.M., B.Ch., D.M.R.T., F.F.R. The Liverpool Radium Institute, Liverpool, 7. THE VARIETY of radioactive tracer chemicals currently available for medical use is almost embarrassingly large and is still increasing. The value of any proposed new isotopes should therefore be carefully considered. No apology however, need be made for the introduction of Technetium 99m by Scheer and Maier-Borst (1963) also by Harper, Beck, Charleston and Lathrop (1964), since this substance has many advantages in its application to scanning and in the observation of transients. (Clark, Deegan, McKendrick, Herbert and Kulke, 1965). It has been evident for some time that many radioisotopes in common use in medicine are not best suited for scanning purposes. Production and Physical Properties: Tc99m is most conveniently produced carrier-free as a pertechnetate (TcO4) in isotonic saline from a Mo99 generator which has a half-life of 67 hours. These generators in which the Mo99 is incorporated in an alumina column, are regularly available from the Radiochemical Centre, Amersham, England and other suppliers. Tc99m decays to Tc99 by isomeric transition with a half-life of 6.0 hours emitting a 0.140 MeV gamma ray which is 8-11% converted. (Strominger, Hollander and Seaborg, 1958). Tc99 itself decays by beta emission to stable Ru99, and has a half-life of 2.1 x 10^5 years so

Thermal Properties of 18F-FDG Uptake and Imaging in Positron Emission Tomography Scans of Cancerous Cells

PANDION: The Osprey Journal of Research & Ideas, Volume 2, Number 1, Article 4, 2021. Thermal Properties of 18F-FDG Uptake and Imaging in Positron Emission Tomography Scans of Cancerous Cells. Carleigh R. Eagle, University of North Florida. Faculty Mentor: Dr. Jason T. Haraldsen, Associate Professor, Department of Physics. Part of the Biochemical Phenomena, Metabolism, and Nutrition Commons, Biological and Chemical Physics Commons, Biomedical Devices and Instrumentation Commons, Medical Biochemistry Commons, Medical Biophysics Commons, Medicinal-Pharmaceutical Chemistry Commons, and the Other Physics Commons. Recommended Citation: Eagle, Carleigh R. (2021) "Thermal Properties of 18F-FDG Uptake and Imaging in Positron Emission Tomography Scans of Cancerous Cells," PANDION: The Osprey Journal of Research and Ideas: Vol. 2: No. 1, Article 4. Available at: https://digitalcommons.unf.edu/pandion_unf/vol2/iss1/4. © 2021 All Rights Reserved. Cover Page Footnote: I would like to thank Dr. Jason T. Haraldsen for all of his help and guidance. Without his support, I would not have the pleasure of publishing in this journal.

18F-FDG Labeling of Mesenchymal Stem Cells and Multipotent Adult Progenitor Cells for PET Imaging: Effects on Ultrastructure and Differentiation Capacity

Journal of Nuclear Medicine, published on January 25, 2013 as doi:10.2967/jnumed.112.108316. 18F-FDG Labeling of Mesenchymal Stem Cells and Multipotent Adult Progenitor Cells for PET Imaging: Effects on Ultrastructure and Differentiation Capacity. Esther Wolfs1, Tom Struys2,3, Tineke Notelaers4, Scott J. Roberts5, Abhishek Sohni4, Guy Bormans6, Koen Van Laere1, Frank P. Luyten5, Olivier Gheysens1, Ivo Lambrichts2, Catherine M. Verfaillie4, and Christophe M. Deroose1 1Division of Nuclear Medicine and Molecular Imaging, Department of Imaging and Pathology, KU Leuven, Leuven, Belgium; 2Lab of Histology, Department of Functional Morphology, Biomedical Research Institute, Universiteit Hasselt, Diepenbeek, Belgium; 3Biomedical NMR Unit, Department of Imaging and Pathology, KU Leuven, Leuven, Belgium; 4Stem Cell Institute, Department of Development and Regeneration, KU Leuven, Leuven, Belgium; 5Laboratory for Skeletal Development and Joint Disorders, KU Leuven, Leuven, Belgium; and 6Laboratory for Radiopharmacy, KU Leuven, Leuven, Belgium. Because of their extended differentiation capacity, stem cells have gained great interest in the field of regenerative medicine. For the development of therapeutic strategies, more knowledge on the in vivo fate of these cells has to be acquired. Therefore, stem cells can be labeled with radioactive tracer molecules such as 18F-FDG, a positron-emitting glucose analog that is taken up and metabolically trapped by the cells. [...] influenced by 18F-FDG labeling. Small-animal PET studies confirmed the intracellular location of the tracer and the possibility of imaging injected prelabeled stem cell types in vivo. Therefore, direct labeling of MSCs and MAPCs with 18F-FDG is a suitable technique to noninvasively assess cell delivery and early retention with PET. Key Words: mesenchymal stem cell; multipotent adult progenitor

PRODUCT MONOGRAPH FluorOHmet

PRODUCT MONOGRAPH FluorOHmet ([18F]Fluorodeoxyglucose, 18F-FDG) Solution for Injection, 45 - 4240 mCi (1.665-156.88 GBq) per multi-dose vial. Diagnostic Radiopharmaceutical. University of Ottawa Heart Institute, National Cardiac PET Centre, 40 Ruskin Street, Ottawa, ON K1Y 4W7. Date of Revision: January 28, 2011. Submission Control No: 136101. Table of Contents: PART I: HEALTH PROFESSIONAL INFORMATION; SUMMARY PRODUCT INFORMATION; DESCRIPTION; INDICATIONS AND CLINICAL USE; CONTRAINDICATIONS; WARNINGS AND PRECAUTIONS; ADVERSE REACTIONS; DRUG INTERACTIONS; DOSAGE AND ADMINISTRATION; RADIATION DOSIMETRY; OVERDOSAGE

The Basic Science of Nuclear Medicine

BASIC SCIENCE. The basic science of nuclear medicine. Michael L Waller, Fahmid U Chowdhury. Abstract: In the era of increasing utilization of cross-sectional imaging in orthopaedic patients with computed tomography (CT) and magnetic resonance imaging (MRI), nuclear medicine (NM) techniques continue to occupy a valuable role in the investigation of these patients. It can provide crucial functional information in a variety of clinical settings that are encountered regularly in orthopaedic practice. It is important that the orthopaedic surgeon is aware of the modalities on offer and appreciates the relative strengths and limitations of these techniques. This article will describe the physical principles underlying NM imaging with single-photon and positron-emitting radiotracers. [...] tomography (CT) has been combined with the functional modalities of single-photon emission computed tomography (SPECT/CT) and positron emission tomography (PET/CT) in integrated hybrid scanners [2-4]. Overall, this has led to NM being at the vanguard of technological advancement in medical imaging at the present time. Many of these functional techniques have relevance to modern orthopaedic practice, and the orthopaedic surgeon should, therefore, possess some knowledge of the scope and limitations of modern NM modalities as applied to orthopaedic surgery. This article will describe the physical principles behind the basic techniques of NM imaging, and will then go on to demonstrate some of the main clinical applications of NM as applied to orthopaedic practice. The physics of nuclear medicine (NM) imaging. Common isotopes and radiopharmaceuticals: The ideal radiopharmaceutical should be specific to the pathway of interest, possess the requisite half-life, be sterile and pyrogen

A Radioactive Tracer Dilution Method for Mass Determination in LiCl-KCl Radioactive Eutectic Salts

A Radioactive Tracer Dilution Method for Mass Determination in LiCl-KCl Radioactive Eutectic Salts. THESIS Presented in Partial Fulfillment of the Requirements for the Degree of Masters of Science from the Graduate School of The Ohio State University. By Douglas Ernest Hardtmayer, B.Sc. Welding Engineering. Graduate Program in Nuclear Engineering, The Ohio State University, 2018. Thesis Committee: Dr. Lei Cao, Advisor; Dr. Vaibhav Sinha. Copyright by Douglas Ernest Hardtmayer 2018. ABSTRACT: Radioactive Tracer Dilution (RTD) is a new method where a radioactive tracer isotope is dissolved in a given substance, and the dilution thereof corresponds to the mass and volume of the substance in which the tracer was dissolved. This method is being considered commercially as a means of measuring the mass of Lithium Chloride-Potassium Chloride (LiCl-KCl) eutectic salt in electrorefiners where spent nuclear fuel has been reprocessed. Efforts have been ongoing to find an effective and efficient way of measuring the mass of this salt inside of an electrorefiner for nuclear material accountancy purposes. Various methods, including creating volume calibration curves with water and molten salt, have been tried but have numerous shortcomings, such as needing to recalibrate the fitted volume curve for a specific electrorefiner every time a new piece of equipment is added or removed from the device. Research at The Ohio State University has shown promise using Na22 as a tracer in LiCl-KCl eutectic salt, and that the interference from a common fission product found in an electrorefiner's salt, Eu154, could be accounted for, and an accurate mass measurement could be determined.

Radiopharmacy

RADIOPHARMACY. Radiopharmacy is an integral part of a Nuclear Medicine Department that deals largely with the preparation, compounding, Quality Control (QC), and dispensing of radiopharmaceuticals and radioisotopes for human use. Usually, the Radiopharmacy comprises a radiochemistry laboratory especially equipped with a dose calibrator, a biological safety class III cabinet for cell labelling or cold kit manufacturing, or a Biological Safety cabinet class II for cell labelling. Radiochemists or Radiopharmacists are the personnel who perform these functions at large hospitals or medical centres. They are involved in manufacturing cold kits and in developing new agents and procedures. Radiopharmaceuticals: A radiopharmaceutical may be defined as a pharmaceutical substance containing radioactive atoms (radionuclide) within its structure. It is generally used for diagnostic purposes but may also be used for therapeutic treatment. Radiopharmaceuticals are formulated in various chemical and physical forms to target specific organs of the body. The radiopharmaceutical is administered to the patient via a small injection, inhalation, or swallowed as a tablet or liquid, depending on which type of scan is ordered. Most radiopharmaceuticals used in diagnostic Nuclear Medicine procedures emit gamma radiation. The gamma radiation emitted from the radiopharmaceutical is detected or measured externally by a SPECT camera. The SPECT camera is used by Technologists to perform static or dynamic images enabling Nuclear Medicine Physicians to evaluate the functional and/or morphological characteristics of the organ of interest. SPECT cameras can also perform tomographic imaging where 3D slices are generated (similar to CT scanning). An ideal radiopharmaceutical is one that rapidly and avidly localises within the organ under investigation, remains in it for the duration of study, and is quickly eliminated from the body.

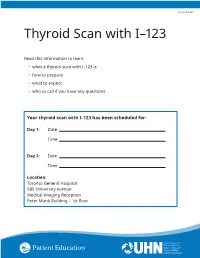

Thyroid Scan with I–123

Form: D-5567. Thyroid Scan with I–123. Read this information to learn: • what a thyroid scan with I–123 is • how to prepare • what to expect • who to call if you have any questions. Your thyroid scan with I–123 has been scheduled for: Day 1: Date, Time. Day 2: Date, Time. Location: Toronto General Hospital, 585 University Avenue, Medical Imaging Reception, Peter Munk Building – 1st floor. What is a thyroid scan with I–123? A thyroid scan with I–123 is a nuclear medicine test. It lets your doctor see what your thyroid gland looks like and how well it is working. How do nuclear medicine tests work? Nuclear medicine tests are different from x-rays. X-rays show what your body looks like. Nuclear medicine tests show how your body and organs are working. They can help find problems that other tests can’t find. Before a nuclear medicine test, you are given a medicine called a radiopharmaceutical (also called radioactive tracer). A radiopharmaceutical is radioactive. This means it gives off energy. The radioactive tracer is usually given through an intravenous (IV) line placed in a vein. But it can also be swallowed or breathed in through the lungs. The tracer travels to the part of the body that your doctor wants to see. When it reaches the right area, we take pictures. We use a special machine called a gamma camera. It takes pictures of the energy coming from the tracer. Why do I need this test? A thyroid scan is done to: • look for abnormal thyroid tissue • look for tumours or cancer cells in the neck area • assess any thyroid diseases you may have from birth • see if you have an underactive, normal or overactive thyroid. How do I prepare for the test? • Stop taking your thyroid medicines before the test.