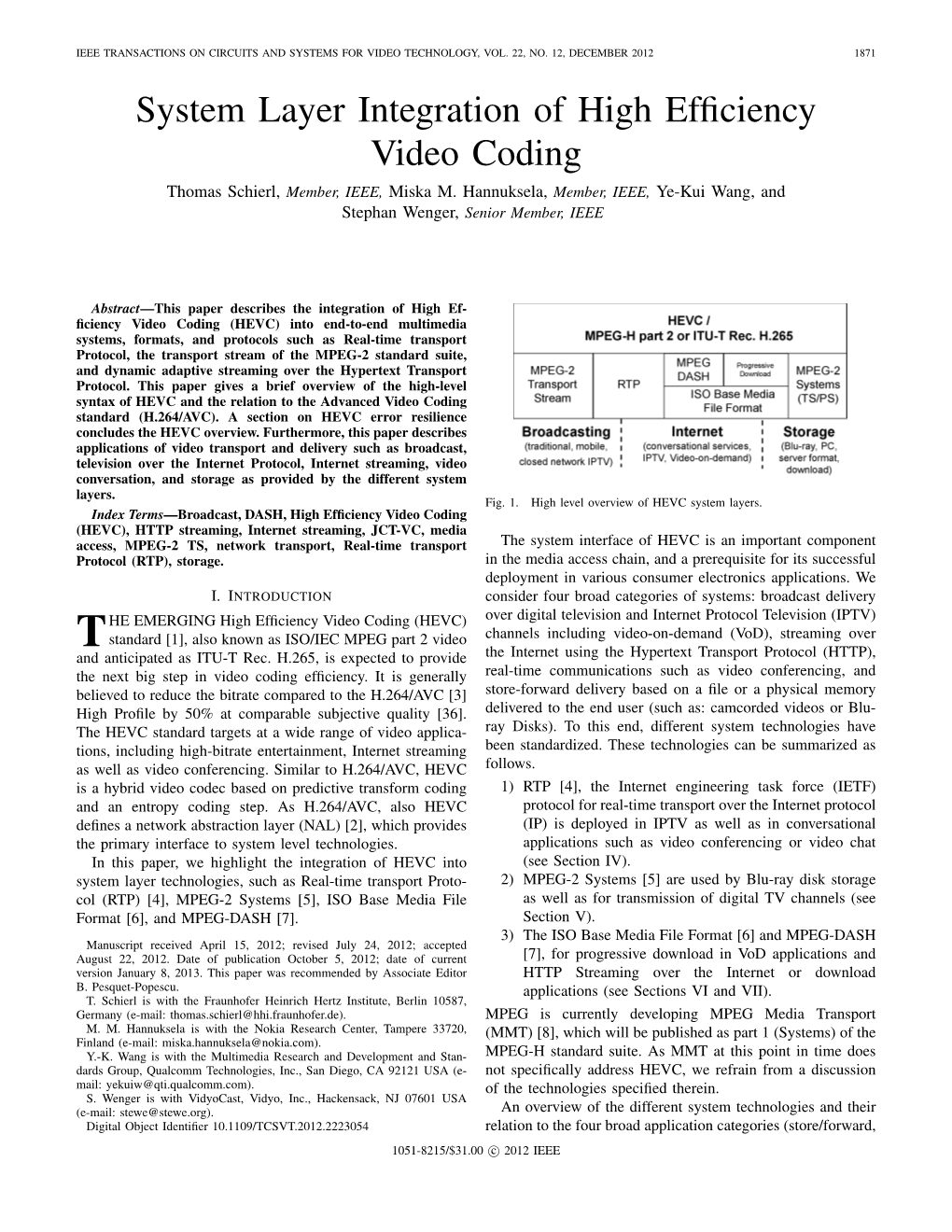

System Layer Integration of High Efficiency Video Coding

Total pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


PacketCable™ 2.0 Codec and Media Specification PKT-SP-CODEC

PacketCable™ 2.0
Codec and Media Specification
PKT-SP-CODEC-MEDIA-I10-120412
ISSUED

Notice

This PacketCable specification is the result of a cooperative effort undertaken at the direction of Cable Television Laboratories, Inc. for the benefit of the cable industry and its customers. This document may contain references to other documents not owned or controlled by CableLabs. Use and understanding of this document may require access to such other documents. Designing, manufacturing, distributing, using, selling, or servicing products, or providing services, based on this document may require intellectual property licenses from third parties for technology referenced in this document.

Neither CableLabs nor any member company is responsible to any party for any liability of any nature whatsoever resulting from or arising out of use or reliance upon this document, or any document referenced herein. This document is furnished on an "AS IS" basis and neither CableLabs nor its members provides any representation or warranty, express or implied, regarding the accuracy, completeness, noninfringement, or fitness for a particular purpose of this document, or any document referenced herein.

2006-2012 Cable Television Laboratories, Inc. All rights reserved.

Document Status Sheet

Document Control Number: PKT-SP-CODEC-MEDIA-I10-120412
Document Title: Codec and Media Specification
Revision History:
I01 - Released 04/05/06
I02 - Released 10/13/06
I03 - Released 09/25/07
I04 - Released 04/25/08
I05 - Released 07/10/08
I06 - Released 05/28/09
I07 - Released 07/02/09
I08 - Released 01/20/10
I09 - Released 05/27/10
I10 - Released 04/12/12
Date: April 12, 2012
Status: Work in Progress | Draft | Issued | Closed
Distribution Restrictions: Authors Only | CL/Member | CL/Member/Vendor | Public

Key to Document Status Codes:
Work in Progress: An incomplete document, designed to guide discussion and generate feedback, that may include several alternative requirements for consideration.

Audio/Video Transport Working Group

Audio/Video Transport Working Group
60th IETF – San Diego, 1-6 August 2004
Colin Perkins <[email protected]>
Magnus Westerlund <[email protected]>
Mailing list: <[email protected]>

Agenda - Tuesday
• Introduction and Status Update: 15
• RTCP XR MIB: 15
• RTP Payload/Generic FEC-Encoded Time-Sensitive Media: 15
• RTP Payload Formats for H.261 and H.263: 15
• RTP Payload Format for JPEG 2000: 15
• RTP Payload Format for VMR-WB: 15
• RTP Payload Format for AMR-WB+: 15
• RTP Payload Format for 3GPP Timed Text: 15
• RTP Payload Format for Text Conversation: 5
• RTP and MIME types: 25

Agenda - Wednesday
• Introduction: 5
• Framing RTP on Connection-Oriented Transport: 10
• RTCP Extensions for SSM: 15
• RTP Profile for RTCP-based Feedback: 15
• RTP Profile for TFRC: 15
• Req. for Transport of Video Control Commands: 15
• Header Compression over MPLS: 15
• A Multiplexing Mechanism for RTP: 15
• MRTP: Multi-Flow Real-time Transport Protocol: 15

Intellectual Property
When starting a presentation you MUST say if:
• There is IPR associated with your draft
• The restrictions listed in section 5 of RFC 3667 apply to your draft
When asking questions or commenting on a draft:
• You MUST disclose any IPR you know of relating to the technology under discussion
Reference: RFC 3667/3668 and the “Note Well” text

Document Status
Standards Published:
• STD 64: RTP: A Transport Protocol for Real-Time Application, RFC 3550
• STD 65: RTP Profile for Audio and Video Conferences with Minimal Control, RFC 3551
References MUST include STD number, example: H. Schulzrinne, et. al., "RTP: A Transport Protocol for Real-Time Applications", STD 64, RFC 3550, Internet Engineering Task Force, July 2003.

Versatile Video Coding – the Next-Generation Video Standard of the Joint Video Experts Team

Versatile Video Coding – The Next-Generation Video Standard of the Joint Video Experts Team
Mile High Video Workshop, Denver, July 31, 2018
Gary J. Sullivan, JVET co-chair
Acknowledgement: Presentation prepared with Jens-Rainer Ohm and Mathias Wien, Institute of Communication Engineering, RWTH Aachen University

1. Introduction

Video coding standardization organisations
• ISO/IEC MPEG = “Moving Picture Experts Group” (ISO/IEC JTC 1/SC 29/WG 11 = International Standardization Organization and International Electrotechnical Commission, Joint Technical Committee 1, Subcommittee 29, Working Group 11)
• ITU-T VCEG = “Video Coding Experts Group” (ITU-T SG16/Q6 = International Telecommunications Union – Telecommunications Standardization Sector (ITU-T, a United Nations Organization, formerly CCITT), Study Group 16, Working Party 3, Question 6)
• JVT = “Joint Video Team”, collaborative team of MPEG & VCEG, responsible for developing AVC (discontinued in 2009)
• JCT-VC = “Joint Collaborative Team on Video Coding”, team of MPEG & VCEG, responsible for developing HEVC (established January 2010)
• JVET = “Joint Video Experts Team”, responsible for developing VVC (established Oct. 2015) – previously called “Joint Video Exploration Team”

History of international video coding standardization

RFC 8872: Guidelines for Using the Multiplexing Features of RTP To

Stream: Internet Engineering Task Force (IETF)
RFC: 8872
Category: Informational
Published: January 2021
ISSN: 2070-1721
Authors: M. Westerlund (Ericsson), B. Burman (Ericsson), C. Perkins (University of Glasgow), H. Alvestrand (Google), R. Even

RFC 8872
Guidelines for Using the Multiplexing Features of RTP to Support Multiple Media Streams

Abstract

The Real-time Transport Protocol (RTP) is a flexible protocol that can be used in a wide range of applications, networks, and system topologies. That flexibility makes for wide applicability but can complicate the application design process. One particular design question that has received much attention is how to support multiple media streams in RTP. This memo discusses the available options and design trade-offs, and provides guidelines on how to use the multiplexing features of RTP to support multiple media streams.

Status of This Memo

This document is not an Internet Standards Track specification; it is published for informational purposes. This document is a product of the Internet Engineering Task Force (IETF). It represents the consensus of the IETF community. It has received public review and has been approved for publication by the Internet Engineering Steering Group (IESG). Not all documents approved by the IESG are candidates for any level of Internet Standard; see Section 2 of RFC 7841. Information about the current status of this document, any errata, and how to provide feedback on it may be obtained at https://www.rfc-editor.org/info/rfc8872.

Copyright Notice

Copyright (c) 2021 IETF Trust and the persons identified as the document authors. All rights reserved. This document is subject to BCP 78 and the IETF Trust's Legal Provisions Relating to IETF Documents (https://trustee.ietf.org/license-info) in effect on the date of publication of this document.

(L3) - Audio/Picture Coding

Committee: (L3) - Audio/Picture Coding

| National Designation | Title | ISO/IEC Document |
| --- | --- | --- |
| INCITS/ISO/IEC 9281-1:1990:[R2013] | Information technology - Picture Coding Methods - Part 1: Identification | IS 9281-1:1990 |
| INCITS/ISO/IEC 9281-2:1990:[R2013] | Information technology - Picture Coding Methods - Part 2: Procedure for Registration | IS 9281-2:1990 |
| INCITS/ISO/IEC 9282-1:1988:[R2013] | Information technology - Coded Representation of Computer Graphics Images - Part 1: Encoding principles for picture representation in a 7-bit or 8-bit environment | IS 9282-1:1988 |
| | Information technology - Coding of Multimedia and Hypermedia Information - Part 7: Interoperability and conformance testing for ISO/IEC 13522-5 (MHEG-7) | IS 13522-7:2001 |
| | Information technology - Coding of Multimedia and Hypermedia Information - Part 5: Support for Base-Level Interactive Applications (MHEG-5) | IS 13522-5:1997 |
| | Information technology - Coding of Multimedia and Hypermedia Information - Part 3: MHEG script interchange representation (MHEG-3) | IS 13522-3:1997 |
| | Information technology - Coding of Multimedia and Hypermedia Information - Part 6: Support for enhanced interactive applications (MHEG-6) | IS 13522-6:1998 |
| | Information technology - Coding of Multimedia and Hypermedia Information - Part 8: XML notation for ISO/IEC 13522-5 (MHEG-8) | IS 13522-8:2001 |
| | Information technology - Coding | |

Created: 11/16/2014

RFC 3016 RTP Payload Format for MPEG-4 Audio/Visual November 2000

Network Working Group
Request for Comments: 3016
Category: Standards Track
Authors: Y. Kikuchi (Toshiba), T. Nomura (NEC), S. Fukunaga (Oki), Y. Matsui (Matsushita), H. Kimata (NTT)
November 2000

RTP Payload Format for MPEG-4 Audio/Visual Streams

Status of this Memo

This document specifies an Internet standards track protocol for the Internet community, and requests discussion and suggestions for improvements. Please refer to the current edition of the "Internet Official Protocol Standards" (STD 1) for the standardization state and status of this protocol. Distribution of this memo is unlimited.

Copyright Notice

Copyright (C) The Internet Society (2000). All Rights Reserved.

Abstract

This document describes Real-Time Transport Protocol (RTP) payload formats for carrying each of MPEG-4 Audio and MPEG-4 Visual bitstreams without using MPEG-4 Systems. For the purpose of directly mapping MPEG-4 Audio/Visual bitstreams onto RTP packets, it provides specifications for the use of RTP header fields and also specifies fragmentation rules. It also provides specifications for Multipurpose Internet Mail Extensions (MIME) type registrations and the use of Session Description Protocol (SDP).

1. Introduction

The RTP payload formats described in this document specify how MPEG-4 Audio [3][5] and MPEG-4 Visual streams [2][4] are to be fragmented and mapped directly onto RTP packets. These RTP payload formats enable transport of MPEG-4 Audio/Visual streams without using the synchronization and stream management functionality of MPEG-4 Systems [6]. Such RTP payload formats will be used in systems that have intrinsic stream management functionality and thus require no such functionality from MPEG-4 Systems.

Dialogic® BorderNet™ Product Description

Dialogic® BorderNet™ Virtualized Session Border Controller Product Description
Release 3.3.1

Copyright and Legal Notice

Copyright © 2013-2016 Dialogic Corporation. All Rights Reserved. You may not reproduce this document in whole or in part without permission in writing from Dialogic Corporation at the address provided below.

All contents of this document are furnished for informational use only and are subject to change without notice and do not represent a commitment on the part of Dialogic Corporation and its affiliates or subsidiaries (“Dialogic”). Reasonable effort is made to ensure the accuracy of the information contained in the document. However, Dialogic does not warrant the accuracy of this information and cannot accept responsibility for errors, inaccuracies or omissions that may be contained in this document.

INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH DIALOGIC® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN A SIGNED AGREEMENT BETWEEN YOU AND DIALOGIC, DIALOGIC ASSUMES NO LIABILITY WHATSOEVER, AND DIALOGIC DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF DIALOGIC PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY INTELLECTUAL PROPERTY RIGHT OF A THIRD PARTY.

Dialogic products are not intended for use in certain safety-affecting situations. Please see http://www.dialogic.com/company/terms-of-use.aspx for more details.

Due to differing national regulations and approval requirements, certain Dialogic products may be suitable for use only in specific countries, and thus may not function properly in other countries.

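MMT (MPEG Media Transport)

TECH & TREND
Understanding the MMT (MPEG Media Transport) Standard
Kim Yong-han (김용한), Professor, School of Electrical and Computer Engineering, University of Seoul

MPEG (Moving Picture Experts Group) is the informal name of ISO/IEC JTC 1/SC 29/WG 11, the international standardization group that produced MPEG-1, MPEG-2, MPEG-4, and other standards widely used in digital broadcasting, Internet multimedia delivery, and multimedia storage media. Among the MPEG standards, the MPEG-2 Systems standard, officially numbered ISO/IEC 13818-1, has been widely used from the earliest digital broadcasting systems through HDTV and DMB to multiplex compressed video, audio, and ancillary data, to synchronize video with audio, and to carry the necessary control information from the sender to the receiver. The MPEG-2 Systems standard is also expected to be adopted in the Korean 4K UHDTV standards being established this year. Because a bitstream constructed according to the MPEG-2 Systems standard is called a Transport Stream (TS), many people also refer to this standard as the MPEG-2 TS standard.

The MPEG-2 TS standard was established in the early 1990s, before the Internet had become commonplace as a global network. At the time, many countries were concentrating their research and development efforts so that a next-generation network based on ATM (Asynchronous Transfer Mode) could replace the Internet and become the new global network. ATM-based networks had a structure that was better optimized than IP-based networks in several respects. Ironically, the arrival of Fast Ethernet, whose high transmission speed overshadowed the advantages of those optimized functions, led the IP-based Internet to be used far more widely than ATM-based networks, and today the Internet is effectively the only global network. As a result, IP continues to dominate all communication networks. Because of rapid advances in communication technology and changes in the environment, today's environment differs greatly from the one in which the MPEG-2 TS standard was established some 20 years ago.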

Security Solutions in MPEG Family of Standards

Security solutions in MPEG family of standards
Touradj Ebrahimi [email protected]
NET working? Broadcast Networks and their security, 16-17 June 2004, Geneva, Switzerland

MPEG: Moving Picture Experts Group
• MPEG-1 (1992): MP3, Video CD, first generation set-top box, …
• MPEG-2 (1994): Digital TV, HDTV, DVD, DVB, Professional, …
• MPEG-4 (1998, 99, ongoing): Coding of Audiovisual Objects
• MPEG-7 (2001, ongoing): Description of Multimedia Content
• MPEG-21 (2002, ongoing): Multimedia Framework

MPEG-1 - ISO/IEC 11172:1992
• Coding of moving pictures and associated audio for digital storage media at up to about 1,5 Mbit/s
– Part 1 Systems - Program Stream
– Part 2 Video
– Part 3 Audio
– Part 4 Conformance
– Part 5 Reference software

MPEG-2 - ISO/IEC 13818:1994
• Generic coding of moving pictures and associated audio
– Part 1 Systems - joint with ITU
– Part 2 Video - joint with ITU
– Part 3 Audio
– Part 4 Conformance
– Part 5 Reference software
– Part 6 DSM CC
– Part 7 AAC - Advanced Audio Coding
– Part 9 RTI - Real Time Interface
– Part 10 Conformance extension - DSM-CC
– Part 11 IPMP on MPEG-2 Systems

MPEG-4 - ISO/IEC 14496:1998
• Coding of audio-visual objects
– Part 1 Systems
– Part 2 Visual
– Part 3 Audio
– Part 4 Conformance
– Part 5 Reference

The H.264 Advanced Video Coding (AVC) Standard

Whitepaper: The H.264 Advanced Video Coding (AVC) Standard
What It Means to Web Camera Performance

Introduction

A new generation of webcams is hitting the market that makes video conferencing a more lifelike experience for users, thanks to adoption of the breakthrough H.264 standard. This white paper explains some of the key benefits of H.264 encoding and why cameras with this technology should be on the shopping list of every business.

The Need for Compression

Today, Internet connection rates average in the range of a few megabits per second. While VGA video requires 147 megabits per second (Mbps) of data, full high definition (HD) 1080p video requires almost one gigabit per second of data, as illustrated in Table 1.

Table 1. Display Resolution Format Comparison

| Format | Horizontal Pixels | Vertical Lines | Pixels | Megabits per second (Mbps) |
| --- | --- | --- | --- | --- |
| QVGA | 320 | 240 | 76,800 | 37 |
| VGA | 640 | 480 | 307,200 | 147 |
| 720p | 1280 | 720 | 921,600 | 442 |
| 1080p | 1920 | 1080 | 2,073,600 | 995 |

Video Compression Techniques

Digital video streams, especially at high definition (HD) resolution, represent huge amounts of data. In order to achieve real-time HD resolution over typical Internet connection bandwidths, video compression is required. The amount of compression required to transmit 1080p video over a three megabits per second link is 332:1! Video compression techniques use mathematical algorithms to reduce the amount of data needed to transmit or store video.

Lossless Compression

Lossless compression changes how data is stored without resulting in any loss of information. Zip files are losslessly compressed so that when they are unzipped, the original files are recovered.
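The bit rates in Table 1 of the H.264 whitepaper excerpt, and its 332:1 figure, can be reproduced with a short calculation. The excerpt does not state the pixel depth or frame rate it used; the sketch below assumes 24 bits per pixel and 20 frames per second, values inferred only because they match the published numbers. The function and constant names are illustrative.

```python
# Assumptions (not stated in the excerpt): 24 bits per pixel, 20 frames per second.
BITS_PER_PIXEL = 24
FRAMES_PER_SECOND = 20

# Resolutions taken from Table 1 of the excerpt: (horizontal pixels, vertical lines).
FORMATS = {
    "QVGA": (320, 240),
    "VGA": (640, 480),
    "720p": (1280, 720),
    "1080p": (1920, 1080),
}


def raw_bitrate_mbps(width, height,
                     bits_per_pixel=BITS_PER_PIXEL,
                     fps=FRAMES_PER_SECOND):
    """Uncompressed video bit rate in megabits per second (1 Mbps = 10**6 bit/s)."""
    return width * height * bits_per_pixel * fps / 1_000_000


def compression_ratio(width, height, link_mbps):
    """Ratio between the uncompressed bit rate and the available link rate."""
    return raw_bitrate_mbps(width, height) / link_mbps


if __name__ == "__main__":
    for name, (w, h) in FORMATS.items():
        print(f"{name:>5}: {w * h:>9,} pixels, {raw_bitrate_mbps(w, h):4.0f} Mbps")
    print(f"1080p over a 3 Mbps link: {compression_ratio(1920, 1080, 3):.0f}:1")
```

Under these assumptions the rounded results agree with the table (37, 147, 442, and 995 Mbps) and with the 332:1 ratio quoted for a 3 Mbps link. A different frame rate or pixel depth would scale every figure proportionally.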

Scene-Based Audio and Higher Order Ambisonics

Scene-Based Audio and Higher Order Ambisonics: A technology overview and application to Next-Generation Audio, VR and 360° Video
NOVEMBER 2019
Ferdinando Olivieri, Nils Peters, Deep Sen, Qualcomm Technologies Inc., San Diego, California, USA

The main purpose of an EBU Technical Review is to critically examine new technologies or developments in media production or distribution. All Technical Reviews are reviewed by 1 (or more) technical experts at the EBU or externally and by the EBU Technical Editions Manager. Responsibility for the views expressed in this article rests solely with the author(s). To access the full collection of our Technical Reviews, please see: https://tech.ebu.ch/publications . If you are interested in submitting a topic for an EBU Technical Review, please contact: [email protected]

1. Introduction

Scene Based Audio is a set of technologies for 3D audio that is based on Higher Order Ambisonics. HOA is a technology that allows for accurate capturing, efficient delivery, and compelling reproduction of 3D audio sound fields on any device, such as headphones, arbitrary loudspeaker configurations, or soundbars. We introduce SBA and we describe the workflows for production, transport and reproduction of 3D audio using HOA. The efficient transport of HOA is made possible by state-of-the-art compression technologies contained in the MPEG-H Audio standard. We discuss how SBA and HOA can be used to successfully implement Next Generation Audio systems, and to deliver any combination of TV, VR, and 360° video experiences using a single audio workflow.

1.1 List of abbreviations & acronyms

CBA: Channel-Based Audio
SBA: Scene-Based Audio
HOA: Higher Order Ambisonics
OBA: Object-Based Audio
HMD: Head-Mounted Display
MPEG: Motion Picture Experts Group (also the name of various compression formats)
ITU: International Telecommunications Union
ETSI: European Telecommunications Standards Institute

2.

Final Report of the ATSC Planning Team on Internet-Enhanced Television

Final Report of the ATSC Planning Team on Internet-Enhanced Television
Doc. PT3-028r14
27 September 2011

Advanced Television Systems Committee
1776 K Street, N.W., Suite 200
Washington, D.C. 20006
202-872-9160

The Advanced Television Systems Committee, Inc. is an international, non-profit organization developing voluntary standards for digital television. The ATSC member organizations represent the broadcast, broadcast equipment, motion picture, consumer electronics, computer, cable, satellite, and semiconductor industries. Specifically, ATSC is working to coordinate television standards among different communications media focusing on digital television, interactive systems, and broadband multimedia communications. ATSC is also developing digital television implementation strategies and presenting educational seminars on the ATSC standards.

ATSC was formed in 1982 by the member organizations of the Joint Committee on InterSociety Coordination (JCIC): the Electronic Industries Association (EIA), the Institute of Electrical and Electronic Engineers (IEEE), the National Association of Broadcasters (NAB), the National Cable Telecommunications Association (NCTA), and the Society of Motion Picture and Television Engineers (SMPTE). Currently, there are approximately 150 members representing the broadcast, broadcast equipment, motion picture, consumer electronics, computer, cable, satellite, and semiconductor industries.

ATSC Digital TV Standards include digital high definition television (HDTV),