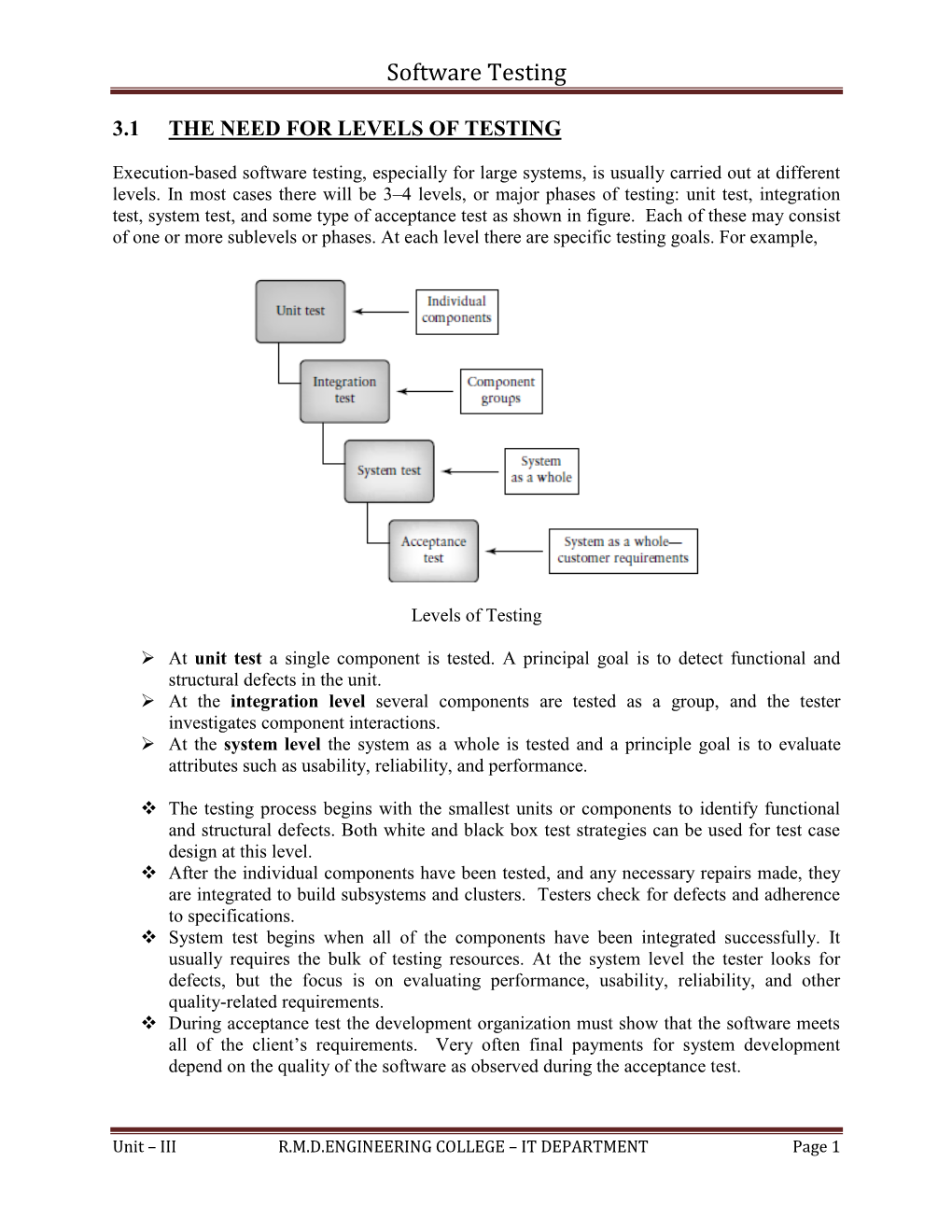

Unit III Software Testing

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


A Combinatorial Approach to Detecting Buffer Overflow Vulnerabilities

Wenhua Wang, Yu Lei, Donggang Liu, David Kung, Christoph Csallner, Dazhi Zhang. Department of Computer Science and Engineering, The University of Texas at Arlington, Arlington, Texas 76019, USA. {wenhuawang,ylei,dliu,kung,csallner,dazhi}@uta.edu

Raghu Kacker, Rick Kuhn. Information Technology Laboratory, National Institute of Standards and Technology, Gaithersburg, Maryland 20899, USA. {raghu.kacker,kuhn}@nist.gov

Abstract: Buffer overflow vulnerabilities are program defects that can cause a buffer to overflow at runtime. Many security attacks exploit buffer overflow vulnerabilities to compromise critical data structures. In this paper, we present a black-box testing approach to detecting buffer overflow vulnerabilities. Our approach is motivated by a reflection on how buffer overflow vulnerabilities are exploited in practice. In most cases the attacker can influence the behavior of a target system only by controlling its external parameters. Therefore, launching a successful attack often amounts to a clever way of tweaking the values of external parameters. We simulate the process performed by the attacker, but in a more systematic manner.

Many security attacks exploit buffer overflow vulnerabilities to compromise critical data structures, so that they can influence or even take control over the behavior of a target system [27][32][33]. Our approach is a specification-based or black-box testing approach. That is, we generate test data based on a specification of the subject program, without analyzing the source code of the program. The specification required by our approach is lightweight and does not have to be formal. In contrast, white-box testing approaches [5][13] derive test

Types of Software Testing

Software testing is the process of assessing a software product to detect differences between the given input and the expected result, and to evaluate the characteristics of the product. The testing process evaluates the quality of the software. You already know what testing does, but are you aware of the types of testing? There is a sea of them. Before we get to the types, let's look at the standards that need to be maintained.

Standards of Testing: All tests should meet the user requirements. Exhaustive testing is not possible, so the optimal amount of testing is chosen on the basis of a risk assessment of the application. All tests to be conducted should be planned before they are executed. Testing follows the 80/20 rule, which states that 80% of defects originate from 20% of program components. Start testing with small parts and extend it to larger components.

Software testers know about the different sorts of software testing. In this article, we have included nearly all the types of software testing that testers, developers, and QA teams use most often in their everyday testing work. Let's understand them.

Black box Testing: Black box testing is a strategy that disregards the internal workings of the system and focuses on the output produced for any input and on the performance of the system.

Software Testing: Essential Phase of SDLC and a Comparative Study Of

International Journal of System and Software Engineering, Volume 5 Issue 2, December 2017, ISSN: 2321-6107

Software Testing: Essential Phase of SDLC and a Comparative Study of Software Testing Techniques

Sushma Malik, Assistant Professor, Institute of Innovation in Technology and Management, Janak Puri, New Delhi, India. Email: [email protected]

Abstract: Software Development Life-Cycle (SDLC) follows the different activities that are used in the development of a software product. SDLC is also called the software process and it is the lifeline of any Software Development Model. Software Processes decide the survival of a particular software development model in the market as well as in software organization and Software testing is a process of finding software bugs while executing a program so that we get the zero defect software. The main objective of software testing is to evaluating the competence and usability of a software. Software testing is an important part of the SDLC because through software testing getting the quality of the software. Lots of advancements have been done through various verification techniques, but still we need software to be fully tested before handed to the customer.

In the software development process, the problem (Software) can be dividing in the following activities [3]:

- Understanding the problem
- Decide a plan for the solution
- Coding for the designed solution
- Testing the definite program

These activities may be very complex for large systems. So, each of the activity has to be broken into smaller sub-activities or steps. These steps are then handled effectively to produce a software project or system. The basic steps involved in software project development are: 1) Requirement Analysis and Specification: The goal of

Note 5. Testing

Computer Science and Software Engineering, University of Wisconsin - Platteville

Note 5. Testing. Yan Shi. Lecture Notes for SE 3730 / CS 5730

Outline

- Formal and Informal Reviews
- Levels of Testing
  - Unit: Structural Coverage Analysis; Input Coverage Testing (equivalence class testing, boundary value analysis testing); CRUD testing; All pairs
  - Integration
  - System
  - Acceptance
- Regression Testing

Static and Dynamic Testing

- Static testing: the software is not actually executed.
  - Generally not detailed testing
  - Reviews, inspections, walkthrough
- Dynamic testing: test the dynamic behavior of the software
  - Usually need to run the software.

Black, White and Grey Box Testing

- Black box testing: assume no knowledge about the code, structure or implementation.
- White box testing: fully based on knowledge of the code, structure or implementation.
- Grey box testing: test with only partial knowledge of implementation.
  - E.g., algorithm review.

Reviews

- Static analysis and dynamic analysis
- Black-box testing and white-box testing
- Reviews are static white-box (?) testing.
  - Formal design reviews: DR / FDR
  - Peer reviews: inspections and walkthrough

Formal Design Review

- The only reviews that are necessary for approval of the design product.
- The development team cannot continue to the next stage without this approval.
- May be conducted at any development milestone:
  - Requirement, system design, unit/detailed design, test plan, code, support manual, product release, installation plan, etc.

FDR Procedure

- Preparation:
  - find review members (5-10),
  - review in advance: could use the help of checklist.
- A short presentation of the document.
- Comments by the review team.
- Verification and validation based on comments.
- Decision:
  - Full approval: immediate continuation to the next phase.
  - Partial approval: immediate continuation for some part, major action items for the remainder.

Scenario Testing) Few Defects Found Few Defects Found

QUALITY ASSURANCE Michael Weintraub Fall, 2015 Unit Objective • Understand what quality assurance means • Understand QA models and processes Definitions According to NASA • Software Assurance: The planned and systematic set of activities that ensures that software life cycle processes and products conform to requirements, standards, and procedures. • Software Quality: The discipline of software quality is a planned and systematic set of activities to ensure quality is built into the software. It consists of software quality assurance, software quality control, and software quality engineering. As an attribute, software quality is (1) the degree to which a system, component, or process meets specified requirements. (2) The degree to which a system, component, or process meets customer or user needs or expectations [IEEE 610.12 IEEE Standard Glossary of Software Engineering Terminology]. • Software Quality Assurance: The function of software quality that assures that the standards, processes, and procedures are appropriate for the project and are correctly implemented. • Software Quality Control: The function of software quality that checks that the project follows its standards, processes, and procedures, and that the project produces the required internal and external (deliverable) products. • Software Quality Engineering: The function of software quality that assures that quality is built into the software by performing analyses, trade studies, and investigations on the requirements, design, code and verification processes and results to assure that reliability, maintainability, and other quality factors are met. 
• Software Reliability: The discipline of software assurance that 1) defines the requirements for software controlled system fault/failure detection, isolation, and recovery; 2) reviews the software development processes and products for software error prevention and/or controlled change to reduced functionality states; and 3) defines the process for measuring and analyzing defects and defines/derives the reliability and maintainability factors. -

Breeding Software Test Cases for Pairwise Testing Using GA

Global Journal of Computer Science and Technology, Vol. 10 Issue 4 Ver. 1.0, June 2010, Page 97

Dr. Rakesh Kumar, Surjeet Singh. GJCST Classification: D.2.5, D.2.12

Abstract: All-pairs testing or pairwise testing is a specification-based combinatorial testing method, which requires that for each pair of input parameters to a system (typically, a software algorithm), each combination of valid values of these two parameters be covered by at least one test case [TAI02]. Pairwise testing has become an indispensable tool in a software tester’s toolbox. This paper pays special attention to usability of the pairwise testing technique. In this paper, we propose a new test generation strategy for pairwise testing using Genetic Algorithm (GA). We compare the result with the random testing and pairwise testing strategy and find that applying GA for pairwise testing performs better result. Information on at least 20 tools that can generate pairwise test cases, have so far

has improved with testing, it is still not clear to the customers that they are receiving the return of the investment they demand. Software testing is an important activity of the software development process. It is a critical element of software quality assurance. A set of possible inputs for any software system can be too large to be tested exhaustively. Techniques like equivalence class partitioning and boundary-value analysis help convert even a large number of test levels into a much smaller set with comparable defect-detection capability. If software under test can be influenced by a number of such aspects, exhaustive testing again becomes impracticable.
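The pairwise criterion quoted in this abstract can be made concrete with a small sketch. The code below is not the genetic-algorithm strategy the paper proposes; it is a plain greedy generator, included only to show what "every pair of values covered by at least one test case" means in practice. The function names and the `os`/`browser`/`locale` parameters are hypothetical, chosen for illustration.

```python
from itertools import combinations, product


def required_pairs(params):
    """Every (parameter, value) pair combination that a pairwise suite must cover."""
    pairs = set()
    for a, b in combinations(list(params), 2):
        for va, vb in product(params[a], params[b]):
            pairs.add(((a, va), (b, vb)))
    return pairs


def pairs_of(test, names):
    """The value pairs that one concrete test case covers."""
    return {((a, test[a]), (b, test[b])) for a, b in combinations(names, 2)}


def greedy_pairwise(params):
    """Greedy sketch: repeatedly pick the full combination that covers
    the most still-uncovered pairs. Enumerates all combinations, so it
    is only suitable for small parameter spaces."""
    names = list(params)
    remaining = required_pairs(params)
    candidates = [dict(zip(names, values)) for values in product(*params.values())]
    suite = []
    while remaining:
        best = max(candidates, key=lambda t: len(pairs_of(t, names) & remaining))
        suite.append(best)
        remaining -= pairs_of(best, names)
    return suite


# Hypothetical parameters: 3 * 2 * 2 = 12 exhaustive combinations.
example_params = {
    "os": ["linux", "windows", "mac"],
    "browser": ["firefox", "chrome"],
    "locale": ["en", "de"],
}
for case in greedy_pairwise(example_params):
    print(case)
```

Because the sketch enumerates the full Cartesian product to choose each test, it illustrates the coverage criterion rather than a scalable generator; search-based strategies such as the GA approach described above aim to avoid that enumeration.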

A Test Case Suite Generation Framework of Scenario Testing

VALID 2011: The Third International Conference on Advances in System Testing and Validation Lifecycle

Ting Li (1, 3), Zhenyu Liu (2), Xu Jiang (2)

1) Shanghai Development Center of Computer Software Technology, Shanghai, China. [email protected]
2) Shanghai Key Laboratory of Computer Software Testing and Evaluating, Shanghai, China. {lzy, jiangx}@ssc.stn.sh.cn
3) Shanghai Software Industry Association, Shanghai, China. [email protected]

Abstract: This paper studies the software scenario testing, which is commonly used in black-box testing. In the paper, the workflow model based on task-driven, which is very common in scenario testing, is analyzed. According to test business model in scenario testing, the model is designed to corresponding test case suite. The test case suite that conforms to the scenario test can be obtained through test case generation and test item design. In the last part of the paper, framework of test case suite design is given to illustrate the effectiveness of the method.

Keywords: test case; software test; scenario testing; test suite.

emerged in recent years, few of them provide any consideration for business workflow verification. The research work on business scenario testing and validation is less. As for the actual development of the application system, the every operation in business is designed and developed well. However, business processes testing and requirements verification are very important and necessary for software quality. The scenario testing satisfies software requirement through the test design and test execution. Scenario testing is to judge software logic correction according to data behavior in finite test data and is to analyze results with all the possible input test data.

Evaluating Testing with a Test Level Matrix

FSW QA Testing Levels Definitions Copyright 2000 Freightliner LLC. All rights reserved. FSW QA Testing Levels Definitions 1. Overview This document is used to help determine the amount and quality of testing (or its scope) that is planned for or has been performed on a project. This analysis results in the testing effort being assigned to a particular Testing Level category. Categorizing the quality and thoroughness of the testing that has been performed is useful when analyzing the metrics for the project. For example if only minimum testing was performed, how come so many person-hours were spent testing? Or if the maximum amount of testing was performed, how come there are so many trouble calls coming into the help desk? 2. Testing Level Categories This section provides the basic definitions of the six categories of testing levels. The definitions of the categories are intentionally vague and high level. The Testing Level Matrix in the next section provides a more detailed definition of what testing tasks are typically performed in each category. The specific testing tasks assigned to each category are defined in a separate matrix from the basic category definitions so that the categories can easily be re-defined (for example because of QA policy changes or a particular project's scope - patch release versus new product development). If it is decided that a particular category of testing is required on a project, but a testing task defined for completion in that category is not performed, it will be noted in the Test Plan (if it exists) and in the Testing Summary Report. -

Tips for Success

Tips for Success EHR System Implementation Testing By this stage of your EHR system implementation, you have fully grasped the significant flexibility of your EHR system, and have devoted significant hours to making major set-up and configuration decisions to tailor the system to your specific needs and clinical practices. Most likely, you have spent many long hours and late nights designing new workflow processes, building templates, configuring tables and dictionaries, setting system triggers, designing interfaces, and building screen flow logic to make the system operate effectively within your practice. Now, it is absolutely critical to thoroughly test your “tailoring” to be sure the system and screens work as intended before moving forward with “live” system use. It is far easier to make changes to the system configuration while there are no patients and physicians sitting in the exam rooms growing impatient and frustrated. Testing (and more testing!) will help you be sure all your planning and hard work thus far pays off in a successful EHR “Go-Live”. The following outlines an effective, proven testing process for assuring your EHR system works effectively and as planned before initial “Go-Live”. 1. Assign and Dedicate the Right Testing Resources Effectively testing a system requires significant dedicated time and attention, and staff that have been sufficiently involved with planning the EHR workflows and processes, to fully assess the set up of the features and functions to support its intended use. Testing also requires extreme patience and attention to detail that are not necessarily skills all members of your practice staff possess. -

Standard Glossary of Terms Used in Software Testing Version 3.0 Advanced Test Analyst Terms

International Software Testing Qualifications Board

Copyright Notice: This document may be copied in its entirety, or extracts made, if the source is acknowledged. Copyright © International Software Testing Qualifications Board (hereinafter called ISTQB®).

acceptance criteria (Ref: IEEE 610): The exit criteria that a component or system must satisfy in order to be accepted by a user, customer, or other authorized entity.

acceptance testing (Ref: After IEEE 610; See Also: user acceptance testing): Formal testing with respect to user needs, requirements, and business processes conducted to determine whether or not a system satisfies the acceptance criteria and to enable the user, customers or other authorized entity to determine whether or not to accept the system.

accessibility testing (Ref: Gerrard): Testing to determine the ease by which users with disabilities can use a component or system.

accuracy (Ref: ISO 9126; See Also: functionality): The capability of the software product to provide the right or agreed results or effects with the needed degree of precision.

accuracy testing (See Also: accuracy): Testing to determine the accuracy of a software product.

actor: User or any other person or system that interacts with the test object in a specific way.

actual result (Synonyms: actual outcome): The behavior produced/observed when a component or system is tested.

adaptability (Ref: ISO 9126; See Also: portability): The capability of the software product to be adapted for different specified environments without applying actions or means other than those provided for this purpose for the software considered.

→Different Models(Waterfall,V Model,Spiral Etc…) →Maturity Models. → 1. Acceptance Testing: Formal Testing Conducted To

Different models (waterfall, V model, spiral, etc…), maturity models.

1. Acceptance Testing: Formal testing conducted to determine whether or not a system satisfies its acceptance criteria and to enable the customer to determine whether or not to accept the system. It is usually performed by the customer.
2. Accessibility Testing: Type of testing which determines the usability of a product for people with disabilities (deaf, blind, mentally disabled, etc.). The evaluation process is conducted by persons having disabilities.
3. Active Testing: Type of testing that consists of introducing test data and analyzing the execution results. It is usually conducted by the testing teams.
4. Agile Testing: Software testing practice that follows the principles of the agile manifesto, emphasizing testing from the perspective of customers who will utilize the system. It is usually performed by the QA teams.
5. Age Testing: Type of testing which evaluates a system's ability to perform in the future. The evaluation process is conducted by testing teams.
6. Ad-hoc Testing: Testing performed without planning and documentation; the tester tries to 'break' the system by randomly trying the system's functionality. It is performed by the testing teams.
7. Alpha Testing: Testing of a software product or system conducted at the developer's site. Usually it is performed by the end user.
8. Assertion Testing: Type of testing that consists of verifying whether the conditions confirm the product requirements. It is performed by the testing teams.
9. API Testing: Testing technique similar to unit testing in that it targets the code level. API Testing differs from unit testing in that it is typically a QA task and not a developer task.

The Nature of Exploratory Testing

The Nature of Exploratory Testing by Cem Kaner, J.D., Ph.D. Professor of Software Engineering Florida Institute of Technology and James Bach Principal, Satisfice Inc. These notes are partially based on research that was supported by NSF Grant EIA-0113539 ITR/SY+PE: "Improving the Education of Software Testers." Any opinions, findings and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation. Kaner & Bach grant permission to make digital or hard copies of this work for personal or classroom use, including use in commercial courses, provided that (a) Copies are not made or distributed outside of classroom use for profit or commercial advantage, (b) Copies bear this notice and full citation on the front page, and if you distribute the work in portions, the notice and citation must appear on the first page of each portion. Abstracting with credit is permitted. The proper citation for this work is ”Exploratory & Risk-Based Testing (2004) www.testingeducation.org", (c) Each page that you use from this work must bear the notice "Copyright (c) Cem Kaner and James Bach", or if you modify the page, "Modified slide, originally from Cem Kaner and James Bach", and (d) If a substantial portion of a course that you teach is derived from these notes, advertisements of that course should include the statement, "Partially based on materials provided by Cem Kaner and James Bach." To copy otherwise, to republish or post on servers, or to distribute to lists requires prior specific permission and a fee.