A Novel Tool for Standardizing Clinical Data in a Realism-Based Common Data Model

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Validating RDF Data Using Shapes

83 Validating RDF data using Shapes a Jose Emilio Labra Gayo a University of Oviedo, Spain Abstract RDF forms the keystone of the Semantic Web as it enables a simple and powerful knowledge representation graph based data model that can also facilitate integration between heterogeneous sources of information. RDF based applications are usually accompanied with SPARQL stores which enable to efficiently manage and query RDF data. In spite of its well known benefits, the adoption of RDF in practice by web programmers is still lacking and SPARQL stores are usually deployed without proper documentation and quality assurance. However, the producers of RDF data usually know the implicit schema of the data they are generating, but they don't do it traditionally. In the last years, two technologies have been developed to describe and validate RDF content using the term shape: Shape Expressions (ShEx) and Shapes Constraint Language (SHACL). We will present a motivation for their appearance and compare them, as well as some applications and tools that have been developed. Keywords RDF, ShEx, SHACL, Validating, Data quality, Semantic web 1. Introduction In the tutorial we will present an overview of both RDF is a flexible knowledge and describe some challenges and future work [4] representation language based of graphs which has been successfully adopted in semantic web 2. Acknowledgements applications. In this tutorial we will describe two languages that have recently been proposed for This work has been partially funded by the RDF validation: Shape Expressions (ShEx) and Spanish Ministry of Economy, Industry and Shapes Constraint Language (SHACL).ShEx was Competitiveness, project: TIN2017-88877-R proposed as a concise and intuitive language for describing RDF data in 2014 [1]. -

V a Lida T in G R D F Da

Series ISSN: 2160-4711 LABRA GAYO • ET AL GAYO LABRA Series Editors: Ying Ding, Indiana University Paul Groth, Elsevier Labs Validating RDF Data Jose Emilio Labra Gayo, University of Oviedo Eric Prud’hommeaux, W3C/MIT and Micelio Iovka Boneva, University of Lille Dimitris Kontokostas, University of Leipzig VALIDATING RDF DATA This book describes two technologies for RDF validation: Shape Expressions (ShEx) and Shapes Constraint Language (SHACL), the rationales for their designs, a comparison of the two, and some example applications. RDF and Linked Data have broad applicability across many fields, from aircraft manufacturing to zoology. Requirements for detecting bad data differ across communities, fields, and tasks, but nearly all involve some form of data validation. This book introduces data validation and describes its practical use in day-to-day data exchange. The Semantic Web offers a bold, new take on how to organize, distribute, index, and share data. Using Web addresses (URIs) as identifiers for data elements enables the construction of distributed databases on a global scale. Like the Web, the Semantic Web is heralded as an information revolution, and also like the Web, it is encumbered by data quality issues. The quality of Semantic Web data is compromised by the lack of resources for data curation, for maintenance, and for developing globally applicable data models. At the enterprise scale, these problems have conventional solutions. Master data management provides an enterprise-wide vocabulary, while constraint languages capture and enforce data structures. Filling a need long recognized by Semantic Web users, shapes languages provide models and vocabularies for expressing such structural constraints. -

The Opencitations Data Model

The OpenCitations Data Model Marilena Daquino1;2[0000−0002−1113−7550], Silvio Peroni1;2[0000−0003−0530−4305], David Shotton2;3[0000−0001−5506−523X], Giovanni Colavizza4[0000−0002−9806−084X], Behnam Ghavimi5[0000−0002−4627−5371], Anne Lauscher6[0000−0001−8590−9827], Philipp Mayr5[0000−0002−6656−1658], Matteo Romanello7[0000−0002−7406−6286], and Philipp Zumstein8[0000−0002−6485−9434]? 1 Digital Humanities Advanced research Centre (/DH.arc), Department of Classical Philology and Italian Studies, University of Bologna fmarilena.daquino2,[email protected] 2 Research Centre for Open Scholarly Metadata, Department of Classical Philology and Italian Studies, University of Bologna 3 Oxford e-Research Centre, University of Oxford [email protected] 4 Institute for Logic, Language and Computation (ILLC), University of Amsterdam [email protected] 5 Department of Knowledge Technologies for the Social Sciences, GESIS - Leibniz-Institute for the Social Sciences [email protected], [email protected] 6 Data and Web Science Group, University of Mannheim [email protected] 7 cole Polytechnique Fdrale de Lausanne [email protected] 8 Mannheim University Library, University of Mannheim [email protected] Abstract. A variety of schemas and ontologies are currently used for the machine-readable description of bibliographic entities and citations. This diversity, and the reuse of the same ontology terms with differ- ent nuances, generates inconsistencies in data. Adoption of a single data model would facilitate data integration tasks regardless of the data sup- plier or context application. In this paper we present the OpenCitations Data Model (OCDM), a generic data model for describing bibliographic entities and citations, developed using Semantic Web technologies. -

Using Shape Expressions (Shex) to Share RDF Data Models and to Guide Curation with Rigorous Validation B Katherine Thornton1( ), Harold Solbrig2, Gregory S

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Repositorio Institucional de la Universidad de Oviedo Using Shape Expressions (ShEx) to Share RDF Data Models and to Guide Curation with Rigorous Validation B Katherine Thornton1( ), Harold Solbrig2, Gregory S. Stupp3, Jose Emilio Labra Gayo4, Daniel Mietchen5, Eric Prud’hommeaux6, and Andra Waagmeester7 1 Yale University, New Haven, CT, USA [email protected] 2 Johns Hopkins University, Baltimore, MD, USA [email protected] 3 The Scripps Research Institute, San Diego, CA, USA [email protected] 4 University of Oviedo, Oviedo, Spain [email protected] 5 Data Science Institute, University of Virginia, Charlottesville, VA, USA [email protected] 6 World Wide Web Consortium (W3C), MIT, Cambridge, MA, USA [email protected] 7 Micelio, Antwerpen, Belgium [email protected] Abstract. We discuss Shape Expressions (ShEx), a concise, formal, modeling and validation language for RDF structures. For instance, a Shape Expression could prescribe that subjects in a given RDF graph that fall into the shape “Paper” are expected to have a section called “Abstract”, and any ShEx implementation can confirm whether that is indeed the case for all such subjects within a given graph or subgraph. There are currently five actively maintained ShEx implementations. We discuss how we use the JavaScript, Scala and Python implementa- tions in RDF data validation workflows in distinct, applied contexts. We present examples of how ShEx can be used to model and validate data from two different sources, the domain-specific Fast Healthcare Interop- erability Resources (FHIR) and the domain-generic Wikidata knowledge base, which is the linked database built and maintained by the Wikimedia Foundation as a sister project to Wikipedia. -

Shape Designer for Shex and SHACL Constraints Iovka Boneva, Jérémie Dusart, Daniel Fernández Alvarez, Jose Emilio Labra Gayo

Shape Designer for ShEx and SHACL Constraints Iovka Boneva, Jérémie Dusart, Daniel Fernández Alvarez, Jose Emilio Labra Gayo To cite this version: Iovka Boneva, Jérémie Dusart, Daniel Fernández Alvarez, Jose Emilio Labra Gayo. Shape Designer for ShEx and SHACL Constraints. ISWC 2019 - 18th International Semantic Web Conference, Oct 2019, Auckland, New Zealand. 2019. hal-02268667 HAL Id: hal-02268667 https://hal.archives-ouvertes.fr/hal-02268667 Submitted on 30 Sep 2019 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. Shape Designer for ShEx and SHACL Constraints∗ Iovka Boneva1, J´er´emieDusart2, Daniel Fern´andez Alvarez´ 3, and Jose Emilio Labra Gayo3 1 Univ. Lille, CNRS, Centrale Lille, Inria, UMR 9189 - CRIStAL - Centre de Recherche en Informatique Signal et Automatique de Lille, F-59000 Lille, France 2 Inria, France 3 University of Oviedo, Spain Abstract. We present Shape Designer, a graphical tool for building SHACL or ShEx constraints for an existing RDF dataset. Shape Designer allows to automatically extract complex constraints that are satisfied by the data. Its integrated shape editor and validator allow expert users to combine and modify these constraints in order to build an arbitrarily complex ShEx or SHACL schema. -

The Zebrafish Anatomy and Stage Ontologies: Representing the Anatomy and Development of Danio Rerio

Van Slyke et al. Journal of Biomedical Semantics 2014, 5:12 http://www.jbiomedsem.com/content/5/1/12 JOURNAL OF BIOMEDICAL SEMANTICS RESEARCH Open Access The zebrafish anatomy and stage ontologies: representing the anatomy and development of Danio rerio Ceri E Van Slyke1*†, Yvonne M Bradford1*†, Monte Westerfield1,2 and Melissa A Haendel3 Abstract Background: The Zebrafish Anatomy Ontology (ZFA) is an OBO Foundry ontology that is used in conjunction with the Zebrafish Stage Ontology (ZFS) to describe the gross and cellular anatomy and development of the zebrafish, Danio rerio, from single cell zygote to adult. The zebrafish model organism database (ZFIN) uses the ZFA and ZFS to annotate phenotype and gene expression data from the primary literature and from contributed data sets. Results: The ZFA models anatomy and development with a subclass hierarchy, a partonomy, and a developmental hierarchy and with relationships to the ZFS that define the stages during which each anatomical entity exists. The ZFA and ZFS are developed utilizing OBO Foundry principles to ensure orthogonality, accessibility, and interoperability. The ZFA has 2860 classes representing a diversity of anatomical structures from different anatomical systems and from different stages of development. Conclusions: The ZFA describes zebrafish anatomy and development semantically for the purposes of annotating gene expression and anatomical phenotypes. The ontology and the data have been used by other resources to perform cross-species queries of gene expression and phenotype data, providing insights into genetic relationships, morphological evolution, and models of human disease. Background function, development, and evolution. ZFIN, the zebrafish Zebrafish (Danio rerio) share many anatomical and physio- model organism database [10] manually curates these dis- logical characteristics with other vertebrates, including parate data obtained from the literature or by direct data humans, and have emerged as a premiere organism to submission. -

Human Disease Ontology 2018 Update: Classification, Content And

Published online 8 November 2018 Nucleic Acids Research, 2019, Vol. 47, Database issue D955–D962 doi: 10.1093/nar/gky1032 Human Disease Ontology 2018 update: classification, content and workflow expansion Lynn M. Schriml 1,*, Elvira Mitraka2, James Munro1, Becky Tauber1, Mike Schor1, Lance Nickle1, Victor Felix1, Linda Jeng3, Cynthia Bearer3, Richard Lichenstein3, Katharine Bisordi3, Nicole Campion3, Brooke Hyman3, David Kurland4, Connor Patrick Oates5, Siobhan Kibbey3, Poorna Sreekumar3, Chris Le3, Michelle Giglio1 and Carol Greene3 1University of Maryland School of Medicine, Institute for Genome Sciences, Baltimore, MD, USA, 2Dalhousie University, Halifax, NS, Canada, 3University of Maryland School of Medicine, Baltimore, MD, USA, 4New York University Langone Medical Center, Department of Neurosurgery, New York, NY, USA and 5Department of Medicine, Icahn School of Medicine at Mount Sinai, New York, NY, USA Received September 13, 2018; Revised October 04, 2018; Editorial Decision October 14, 2018; Accepted October 22, 2018 ABSTRACT INTRODUCTION The Human Disease Ontology (DO) (http://www. The rapid growth of biomedical and clinical research in re- disease-ontology.org), database has undergone sig- cent decades has begun to reveal novel cellular, molecular nificant expansion in the past three years. The DO and environmental determinants of disease (1–4). However, disease classification includes specific formal se- the opportunities for discovery and the transcendence of mantic rules to express meaningful disease mod- knowledge between research groups can only be realized in conjunction with the development of rigorous, standard- els and has expanded from a single asserted clas- ized bioinformatics tools. These tools should be capable of sification to include multiple-inferred mechanistic addressing specific biomedical data nomenclature and stan- disease classifications, thus providing novel per- dardization challenges posed by the vast variety of biomed- spectives on related diseases. -

The Evaluation of Ontologies: Editorial Review Vs

The Evaluation of Ontologies: Editorial Review vs. Democratic Ranking Barry Smith Department of Philosophy, Center of Excellence in Bioinformatics and Life Sciences, and National Center for Biomedical Ontology University at Buffalo from Proceedings of InterOntology 2008 (Tokyo, Japan, 26-27 February 2008), 127-138. ABSTRACT. Increasingly, the high throughput technologies used by biomedical researchers are bringing about a situation in which large bodies of data are being described using controlled structured vocabularies—also known as ontologies—in order to support the integration and analysis of this data. Annotation of data by means of ontologies is already contributing in significant ways to the cumulation of scientific knowledge and, prospectively, to the applicability of cross-domain algorithmic reasoning in support of scientific advance. This very success, however, has led to a proliferation of ontologies of varying scope and quality. We define one strategy for achieving quality assurance of ontologies—a plan of action already adopted by a large community of collaborating ontologists—which consists in subjecting ontologies to a process of peer review analogous to that which is applied to scientific journal articles. 1 From OBO to the OBO Foundry Our topic here is the use of ontologies to support scientific research, especially in the domains of biology and biomedicine. In 2001, Open Biomedical Ontologies (OBO) was created by Michael Ashburner and Suzanna Lewis as an umbrella body for the developers of such ontologies in the domain of the life sciences, applying the key principles underlying the success of the Gene Ontology (GO) [GOC 2006], namely, that ontologies be (a) open, (b) orthogonal, (c) instantiated in a well-specified syntax, and such as (d) to share a common space of identifiers [Ashburner et al. -

Applications of an Ontology Engineering Methodology Accessing Linked Data for Dialogue-Based Medical Image Retrieval

Applications of an Ontology Engineering Methodology Accessing Linked Data for Dialogue-Based Medical Image Retrieval Daniel Sonntag, Pinar Wennerberg, Sonja Zillner DFKI - German Research Center for AI, Siemens AG [email protected] [email protected] [email protected] Abstract of medication and treatment plan is foreseen. This requires This paper examines first ideas on the applicability of the medical images to be annotated accordingly so that the Linked Data, in particular a subset of the Linked Open Drug radiologists can obtain all the necessary information Data (LODD), to connect radiology, human anatomy, and starting with the image. Moreover, the image information drug information for improved medical image annotation needs to be interlinked in a coherent way to enable the and subsequent search. One outcome of our ontology radiologist to trace the dependencies between observations. engineering methodology is the semi-automatic alignment Linked Data has the potential to provide easier access to between radiology-related OWL ontologies (FMA and significantly growing, publicly available related data sets, RadLex). These can be used to provide new connections in such as those in the healthcare domain: in combination the medicine-related linked data cloud. A use case scenario with ontology matching and speech dialogue (as AI is provided that involves a full-fletched speech dialogue system as AI application and additionally demonstrates the systems) this should allow medical experts to explore the benefits of the approach by enabling the radiologist to query interrelated data more conveniently and efficiently without and explore related data, e.g., medical images and drugs. having to switch between many different applications, data The diagnosis is on a special type of cancer (lymphoma). -

Validating RDF with Shape Expressions

Validating RDF with Shape Expressions Iovka Boneva Jose Emilio Labra Gayo LINKS, INRIA & CNRS University of Oviedo University of Lille, France Spain Samuel Hym Eric G. Prud'hommeau 2XS W3C University of Lille, France Stata Center, MIT Harold Solbrig S lawek Staworko∗ Mayo Clinic College of Medicine LINKS, INRIA & CNRS Rochester, MN, USA University of Lille, France Abstract We propose shape expression schema (ShEx), a novel schema formalism for describing the topology of an RDF graph that uses regular bag expressions (RBEs) to define con- straints on the admissible neighborhood for the nodes of a given type. We provide two alternative semantics, multi- and single-type, depending on whether or not a node may have more than one type. We study the expressive power of ShEx and study the complexity of the validation problem. We show that the single-type semantics is strictly more ex- pressive than the multi-type semantics, single-type validation is generally intractable and multi-type validation is feasible for a small class of RBEs. To further curb the high com- putational complexity of validation, we propose a natural notion of determinism and show that multi-type validation for the class of deterministic schemas using single-occurrence regular bag expressions (SORBEs) is tractable. Finally, we consider the problem of val- idating only a fragment of a graph with preassigned types for some of its nodes, and argue that for deterministic ShEx using SORBEs, multi-type validation can be performed efficiently and single-type validation can be performed with a single pass over the graph. 1 Introduction Schemas have a number of important functions in databases. -

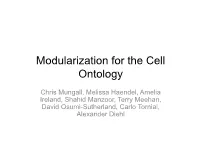

Modularization for the Cell Ontology

Modularization for the Cell Ontology Chris Mungall, Melissa Haendel, Amelia Ireland, Shahid Manzoor, Terry Meehan, David Osumi-Sutherland, Carlo Torniai, Alexander Diehl Outline • The Cell Ontology and its neighbors • Handling multiple species • Extracting modules for taxonomic contexts • The Oort – OBO Ontology Release Tool Inter-ontology axioms Source Ref Axiom cl go ‘mature eosinophil’ SubClassOf capable of some ‘respiratory burst’ go cl ‘eosinophil differentiation’ EquivalentTo differentiation and has_target some eosinophil * cl pro ‘Gr1-high classical monocyte’ EquivalentTo ‘classical monocyte’ and has_plasma_membrane_part some ‘integrin-alpha-M’ and …. cl chebi melanophage EquivalentTo ‘tissue-resident macrophage’ and has_part some melanin and … cl uberon ‘epithelial cell of lung’ EquivalentTo ‘epithelial cell’ and part_of some lung uberon cl muscle tissue EquivalentTo tissue and has_part some muscle cell clo cl HeLa cell SubClassOf derives_from some (‘epithelial cell’ and part_of some cervix) fbbt cl fbbt:neuron EquivalentTo cl:neuron and part_of some Drosophila ** * experimental extension ** taxon-context bridge axiom The cell ontology covers multiple taxonomic contexts Ontology Context # of links to cl MA adult mouse 1 FMA adult human 658 XAO frog 63 AAO amphibian ZFA zebrafish 391 TAO bony fish 385 FBbt fruitfly 53 PO plants * CL all cells - Xref macros: an easy way to formally connect to broader taxa treat-xrefs-as-genus-differentia: CL part_of NCBITaxon:7955! …! [Term]! id: ZFA:0009255! name: amacrine cell! xref: CL:0000561! -

Investigating Term Reuse and Overlap in Biomedical Ontologies Maulik R

Investigating Term Reuse and Overlap in Biomedical Ontologies Maulik R. Kamdar, Tania Tudorache, and Mark A. Musen Stanford Center for Biomedical Informatics Research, Department of Medicine, Stanford University ABSTRACT CT, to spare the effort in creating already existing quality We investigate the current extent of term reuse and overlap content (e.g., the anatomy taxonomy), to increase their among biomedical ontologies. We use the corpus of biomedical interoperability, and to support its use in electronic health ontologies stored in the BioPortal repository, and analyze three types records (Tudorache et al., 2010). Another benefit of the of reuse constructs: (a) explicit term reuse, (b) xref reuse, and (c) ontology term reuse is that it enables federated search Concept Unique Identifier (CUI) reuse. While there is a term label engines to query multiple, heterogeneous knowledge sources, similarity of approximately 14.4% of the total terms, we observed structured using these ontologies, and eliminates the need for that most ontologies reuse considerably fewer than 5% of their extensive ontology alignment efforts (Kamdar et al., 2014). terms from a concise set of a few core ontologies. We developed For the purpose of this work, we define as term reuse an interactive visualization to explore reuse dependencies among the situation in which the same term is present in two or biomedical ontologies. Moreover, we identified a set of patterns that more ontologies either by direct use of the same identifier, indicate ontology developers did intend to reuse terms from other or via explicit references and mappings. We define as term ontologies, but they were using different and sometimes incorrect overlap the situation in which the term labels in two or more representations.