Large-Scale Attribute-Object Compositions

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

The Power of Interoperability: Why Objects Are Inevitable

The Power of Interoperability: Why Objects Are Inevitable. Jonathan Aldrich, Carnegie Mellon University, Pittsburgh, PA, USA. [email protected]

Abstract. Three years ago in this venue, Cook argued that in their essence, objects are what Reynolds called procedural data structures. His observation raises a natural question: if procedural data structures are the essence of objects, has this contributed to the empirical success of objects, and if so, how? This essay attempts to answer that question. After reviewing Cook's definition, I propose the term service abstractions to capture the essential nature of objects. This terminology emphasizes, following Kay, that objects are not primarily about representing and manipulating data, but are more about providing services in support of higher-level goals. Using examples taken from object-oriented frameworks, I illustrate the unique design leverage that service abstractions provide: the ability to define abstractions that can be extended, and whose extensions are interoperable in a first-class way.

1. Introduction. Object-oriented programming has been highly successful in practice, and has arguably become the dominant programming paradigm for writing applications software in industry. This success can be documented in many ways. For example, of the top ten programming languages at the LangPop.com index, six are primarily object-oriented, and an additional two (PHP and Perl) have object-oriented features. The equivalent numbers for the top ten languages in the TIOBE index are six and three. SourceForge's most popular languages are Java and C++; GitHub's are JavaScript and Ruby. Furthermore, objects' influence is not limited to object-oriented languages; Cook [8] argues that Microsoft's Component Object Model (COM), which has a C language interface, is "one of the most pure object-oriented programming models yet defined." Academically, object-oriented programming is a primary focus

The Principles of Object-Oriented Javascript Zakas

The Principles of Object-Oriented JavaScript. Nicholas C. Zakas. Foreword by Cody Lindley, Best-selling Author and Principal Frontend Architect at TandemSeven.

Take Control of JavaScript Objects

If you've used a more traditional object-oriented language, such as C++ or Java, JavaScript probably doesn't seem object-oriented at all. It has no concept of classes, and you don't even need to define any objects in order to write code. But don't be fooled: JavaScript is an incredibly powerful and expressive object-oriented language that puts many design decisions right into your hands.

In The Principles of Object-Oriented JavaScript, Nicholas C. Zakas thoroughly explores JavaScript's object-oriented nature, revealing the language's unique implementation of inheritance and other key characteristics. You'll learn:

• The difference between primitive and reference values
• What makes JavaScript functions so unique
• How to define your own constructors
• How to work with and understand prototypes
• Inheritance patterns for types and objects

The Principles of Object-Oriented JavaScript will leave even experienced developers with a deeper understanding of JavaScript. Unlock the secrets behind how objects work in JavaScript so you can write clearer, more flexible, and more efficient code.

About the Author. Nicholas C. Zakas is a software engineer at Box and is known for writing on and speaking about the latest in JavaScript best practices. He honed his experience during his five years at Yahoo!, where he was principal frontend engineer for the Yahoo! home page.

Evolution and Composition of Object-Oriented Frameworks

Evolution and Composition of Object-Oriented Frameworks. Michael Mattsson, University of Karlskrona/Ronneby, Department of Software Engineering and Computer Science.

ISBN 91-628-3856-3. © Michael Mattsson, 2000. Cover background: Digital imagery® copyright 1999 PhotoDisc, Inc. Printed in Sweden, Kaserntryckeriet AB, Karlskrona, 2000.

To Nisse, my father-in-law, who never had the opportunity to study as much as he would have liked to.

This thesis is submitted to the Faculty of Technology, University of Karlskrona/Ronneby, in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Engineering.

Contact Information: Michael Mattsson, Department of Software Engineering and Computer Science, University of Karlskrona/Ronneby, Soft Center, SE-372 25 RONNEBY, SWEDEN. Tel.: +46 457 38 50 00. Fax.: +46 457 27 125. Email: [email protected] URL: http://www.ipd.hk-r.se/rise

Abstract. This thesis comprises studies of evolution and composition of object-oriented frameworks, a certain kind of reusable asset. An object-oriented framework is a set of classes that embodies an abstract design for solutions to a family of related problems. The work presented is based on and has its origin in industrial contexts where object-oriented frameworks have been developed, used, evolved and managed. Thus, the results are based on empirical observations. Both qualitative and quantitative approaches have been used in the studies performed, which cover both technical and managerial aspects of object-oriented framework technology. Historically, object-oriented frameworks are large monolithic assets which require several design iterations and are therefore costly to develop. With the requirement of building larger applications, software engineers have started to compose multiple frameworks, thereby encountering a number of problems.

Obstacl: a Language with Objects, Subtyping, and Classes

OBSTACL: A Language with Objects, Subtyping, and Classes. A dissertation submitted to the Department of Computer Science and the Committee on Graduate Studies of Stanford University in partial fulfillment of the requirements for the degree of Doctor of Philosophy. By Amit Jayant Patel, December 2001.

© Copyright 2002 by Amit Jayant Patel. All Rights Reserved.

I certify that I have read this dissertation and that in my opinion it is fully adequate, in scope and quality, as a dissertation for the degree of Doctor of Philosophy. John Mitchell (Principal Adviser)

I certify that I have read this dissertation and that in my opinion it is fully adequate, in scope and quality, as a dissertation for the degree of Doctor of Philosophy. Kathleen Fisher

I certify that I have read this dissertation and that in my opinion it is fully adequate, in scope and quality, as a dissertation for the degree of Doctor of Philosophy. David Dill

Approved for the University Committee on Graduate Studies.

Abstract. Widely used object-oriented programming languages such as C++ and Java support software engineering practices but do not have a clean theoretical foundation. On the other hand, most research languages with well-developed foundations are not designed to support software engineering practices. This thesis bridges the gap by presenting OBSTACL, an object-oriented extension of ML with a sound theoretical basis and features that lend themselves to efficient implementation. OBSTACL supports modular programming techniques with objects, classes, structural subtyping, and a modular object construction system. OBSTACL's parameterized inheritance mechanism can be used to express both single inheritance and most common uses of multiple inheritance.

Approaches to Composition and Refinement in Object-Oriented Design

Approaches to Composition and Refinement in Object-Oriented Design. Marcel Weiher, matriculation number 127367. Diploma thesis (Diplomarbeit), Department of Computer Science (Fachbereich Informatik), Technische Universität Berlin. Advisor: Professor Bernd Mahr. August 21, 1997.

Contents

1 Introduction
1.1 Object Orientation
1.2 Composition and Refinement

2 State of the Art
2.1 Frameworks
2.1.1 Framework Construction
2.1.2 Reusing Frameworks
2.2 Design Patterns
2.2.1 Reusing Patterns
2.2.2 Communicating Patterns
2.3 Commentary

3 Enhanced Interfaces
3.1 Interface Typing
3.2 The Refinement Interface
3.2.1 Protocol Dependencies
3.2.2 Reuse Contracts
3.3 Interaction Interfaces
3.3.1 Interaction Protocols
3.3.2 Protocol Specification and Matching
3.4 Commentary

4 Orthogonalization
4.1 Delegation
4.1.1 Prototypes Languages
4.1.2 Composition Filters
4.2 Mixins
4.2.1 Mixins and Multiple Inheritance
4.2.2 Nested Dynamic Mixins
4.3 Extension Hierarchies
4.3.1 Extension Operators
4.3.2 Conflicts: Detection, Resolution, Avoidance
4.4 Canonic Components
4.4.1 Components
4.4.2 Views
4.5 Commentary

5 Software Architecture
5.1 Architectural Styles
5.1.1 Pipes and Filters
5.1.2 Call and Return
5.1.3 Implicit Invocation
5.1.4 Using Styles
5.2 Architecture Description Languages
5.2.1 UniCon
5.2.2 RAPIDE
5.2.3 Wright
5.2.4 ACME
5.3 Commentary

6 Non-linear Refinement
6.1 Domain Specific Software Generators
6.1.1 A Domain Independent Model
6.1.2 Implementation
6.2 Subject Oriented

Object Oriented Languages

Object oriented languages. dr inż. Marcin Pietroń

Object oriented programming

• Object languages: Java (business applications, mobile software etc.), C++ (embedded, real-time, finance sector), Python (prototyping, testing), Smalltalk, Ruby (web), Objective-C (mobile), …
• Programming paradigm: data structures called "objects" contain data, in the form of fields, often known as attributes, and code, in the form of procedures, often known as methods.
• A feature of objects is that an object's procedures can access and often modify the data fields of the object with which they are associated.
• In OO programming, objects in a software system interact with one another.
• There is significant diversity in object-oriented programming, but most popular languages are class-based, meaning that objects are instances of classes, which typically also determine their type.
• Languages that support object-oriented programming use inheritance for code reuse and extensibility in the form of either classes or prototypes. Those that use classes support two main concepts:
  - Objects: structures that contain both data and procedures
  - Classes: definitions for the data format and available procedures for a given type or class of object; may also contain data and procedures (class methods)
• Objects sometimes correspond to things found in the real world (e.g. a graphics program may have objects such as "figure", "circle", "square" or "cursor"; an online shop may have objects such as "shopping cart", "customer", "basket" or "product").
• Objects can represent more abstract things and entities, like an object that represents an open file, or an object which provides some service (e.g.

Object Composition and Inheritance Prof Matthew Fricke

Object Composition and Inheritance. Prof Matthew Fricke

Recap: Procedural Programming

• Procedural programming uses:
  - Data structures (like integers, strings, lists)
  - Functions (like addints())
• In procedural programming, information must be passed to the function
• Functions and data structures are not linked

Recap: Object-Oriented Programming (OOP)

• Object-oriented programming uses classes!
• Classes combine the data and their relevant functions into one entity
• The data types we use are actually classes!
• Strings have built-in functions like lower(), join(), strip(), etc.

Classes vs Objects

• Classes are like the job description. The object is the person hired to do the job.
• Objects are instantiations of the class definition.

Has a… (composition of objects)

• A course has class sections
• Class sections have students

[Diagram: a course box (labeled "List" in the extracted text) holding course_number, course_name and several class_section entries; a ClassSection box holding section_number and several student entries; a Student box holding Name and ID_num.]

Is a… (inheritance of classes)

• A student is a person
• A person is a mammal
• A mammal is an animal

[Diagram: Student, Person, Mammal, Animal, each class inheriting from the next.]

Lots of names…

• Unfortunately there are lots of terms for the same thing:
  - Parent Class = Base Class = Superclass
  - Child Class = Derived Class = Subclass
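The has-a and is-a relationships from these slides can be sketched in a few lines of Python. This is only an illustrative sketch: the class names and the attributes course_number, course_name, section_number, name and id_num follow the slide diagrams, while the methods describe, enroll, add_section and roster are hypothetical helpers added here for demonstration.

```python
class Animal:
    def __init__(self, name):
        self.name = name

    def describe(self):
        # Hypothetical helper: reports the most specific class of the object.
        return f"{self.name} is a {type(self).__name__}"


class Mammal(Animal):      # a mammal is an animal
    pass


class Person(Mammal):      # a person is a mammal
    pass


class Student(Person):     # a student is a person
    def __init__(self, name, id_num):
        super().__init__(name)
        self.id_num = id_num


class ClassSection:        # a class section has students
    def __init__(self, section_number):
        self.section_number = section_number
        self.students = []

    def enroll(self, student):
        self.students.append(student)


class Course:              # a course has class sections
    def __init__(self, course_number, course_name):
        self.course_number = course_number
        self.course_name = course_name
        self.class_sections = []

    def add_section(self, section):
        self.class_sections.append(section)

    def roster(self):
        # Walk the composition: course -> sections -> students.
        return [s.name for sec in self.class_sections for s in sec.students]
```

Inheritance is expressed in the class headers (Student is a Person), whereas composition is expressed by storing other objects in attributes (a Course holds ClassSection objects, which hold Student objects).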

Object-Oriented Software Composition

Oscar Nierstrasz and Dennis Tsichritzis, Object-Oriented Software Composition, Prentice Hall, 1995. Reproduced with the permission of the Publisher, Prentice Hall (a Pearson Education company). This work is protected by copyright and may not be reproduced other than when downloaded and viewed on a single Central Processor Unit (CPU) for private use only. It is not otherwise to be reproduced or transmitted or made available on a network without prior written permission of Prentice Hall. All other rights reserved.

Object-Oriented Software Composition. Edited by Oscar Nierstrasz, University of Berne, and Dennis Tsichritzis, University of Geneva.

First published 1995 by Prentice Hall International (UK) Ltd, Campus 400, Maylands Avenue, Hemel Hempstead, Hertfordshire, HP2 7EZ. A division of Simon & Schuster International Group. © Prentice Hall 1995. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form, or by any means, electronic, mechanical, photocopying, recording or otherwise, without prior permission, in writing, from the publisher. For permission within the United States of America contact Prentice Hall Inc., Englewood Cliffs, NJ 07632. Printed and bound in Great Britain by T.J. Press (Padstow) Ltd, Padstow, Cornwall.

Library of Congress Cataloging-in-Publication Data: Object-oriented software composition / edited by Oscar Nierstrasz and Dennis Tsichritzis. p. cm. (The Object-oriented series). Includes bibliographical references and index. ISBN 0-13-220674-9. 1. Object-oriented

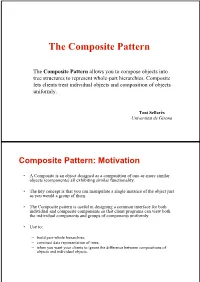

The Composite Pattern

The Composite Pattern. Toni Sellarès, Universitat de Girona

The Composite Pattern allows you to compose objects into tree structures to represent whole-part hierarchies. Composite lets clients treat individual objects and compositions of objects uniformly.

Composite Pattern: Motivation

• A Composite is an object designed as a composition of one or more similar objects (components), all exhibiting similar functionality.
• The key concept is that you can manipulate a single instance of the object just as you would a group of them.
• The Composite pattern is useful in designing a common interface for both individual and composite components, so that client programs can view both the individual components and groups of components uniformly.
• Use it to:
  - build part-whole hierarchies;
  - construct data representations of trees;
  - let your clients ignore the difference between compositions of objects and individual objects.

The Composite Pattern Defined

1. We can create arbitrarily complex trees.
2. We can treat them as a whole or as parts.
3. Operations can be applied to the whole or the part.

[Diagram: a tree whose inner elements are labeled "node" and whose terminal elements are labeled "leaf".]

Composite Pattern Structure

• The client uses the Component interface to manipulate the objects in the composition.
• The Component defines an interface for all objects in the composition: both the composite and the leaf nodes.
• The Component may implement a default behavior for add(), remove(), getChild() and its operations.
• Note that the leaf also inherits methods like add(), remove(), and getChild(), which don't make a lot of sense for a leaf node.
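The structure described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the slides' own code: the names Component, Leaf and Composite follow the usual pattern vocabulary, get_child is a Python-style spelling of getChild(), and the operation method (which simply collects names) and the choice to raise NotImplementedError as the default child-management behavior are assumptions made for the example.

```python
from abc import ABC, abstractmethod


class Component(ABC):
    """Common interface for both leaves and composites."""

    def __init__(self, name):
        self.name = name

    @abstractmethod
    def operation(self):
        """Return the names visited, depth first (illustrative operation)."""

    # Default child-management behavior: a plain component has no children.
    def add(self, child):
        raise NotImplementedError(f"{self.name} cannot hold children")

    def remove(self, child):
        raise NotImplementedError(f"{self.name} cannot hold children")

    def get_child(self, index):
        raise NotImplementedError(f"{self.name} cannot hold children")


class Leaf(Component):
    def operation(self):
        return [self.name]


class Composite(Component):
    def __init__(self, name):
        super().__init__(name)
        self._children = []

    def add(self, child):
        self._children.append(child)

    def remove(self, child):
        self._children.remove(child)

    def get_child(self, index):
        return self._children[index]

    def operation(self):
        # Apply the operation to this node, then to every part of the whole.
        names = [self.name]
        for child in self._children:
            names.extend(child.operation())
        return names
```

A client can call operation() on a single Leaf or on the root of an arbitrarily deep tree without checking which one it holds. The leaf still inherits add(), remove() and get_child(), and here they fail loudly, which is one common way of handling the mismatch the slides point out.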

Introduction to Design Patterns

Chapter 1: Introduction

• Designing object-oriented software is hard, and designing reusable object-oriented software is even harder.
• It takes a long time for novices to learn what good object-oriented design is all about. Experienced designers evidently know something inexperienced ones don't.
• Expert designers find recurring patterns of classes and communicating objects in many object-oriented systems, and can apply them immediately to design problems without having to rediscover them.
• Design patterns record experience in designing object-oriented software.
• Design patterns make it easier to reuse successful designs and architectures.

What is a Design Pattern?

• Christopher Alexander says, "Each pattern describes a problem which occurs over and over again in our environment, and then describes the core of the solution to that problem, in such a way that you can use this solution a million times over, without ever doing it the same way twice".
• Four essential elements of a pattern:
  1. The pattern name is a handle we can use to describe a design problem, its solutions, and consequences in a word or two.
  2. The problem describes when to apply the pattern.
  3. The solution describes the elements that make up the design, their relationships, responsibilities, and collaborations.
  4. The consequences are the results and trade-offs of applying the pattern.
• The design patterns are descriptions of communicating objects and classes that are customized to solve a general design problem in a particular context.

Design Patterns in Smalltalk MVC

• Model is the application object.
• View is the model's screen presentation.
• Controller defines the way the user interface reacts to user input.
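The three MVC roles listed above can be illustrated with a small Python sketch. It is a simplified illustration, not Smalltalk's actual MVC classes: the method names attach, set_value, update and handle_input, and the use of an integer value as the application state, are assumptions made for the example.

```python
class Model:
    """The application object: holds state and notifies attached views."""

    def __init__(self, value=0):
        self._value = value
        self._views = []

    @property
    def value(self):
        return self._value

    def attach(self, view):
        self._views.append(view)

    def set_value(self, value):
        self._value = value
        for view in self._views:
            view.update(self)   # keep every presentation in sync


class View:
    """The model's screen presentation (here just a rendered string)."""

    def __init__(self):
        self.rendered = ""

    def update(self, model):
        self.rendered = f"value = {model.value}"


class Controller:
    """Defines how user input is turned into changes on the model."""

    def __init__(self, model):
        self.model = model

    def handle_input(self, text):
        self.model.set_value(int(text))
```

The view never reads user input and the controller never renders anything; each role only talks to the model, which is what lets several views of the same model stay consistent.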

Operating-System Engineering: Object Orientation

Operating-System Engineering: Object Orientation

Classification [7]

• object-based
• class-based = object-based + classes
• object-oriented = class-based + inheritance

Object-Oriented Design [2]

Object-oriented design is a method of design encompassing the process of object-oriented decomposition and a notation for depicting both logical and physical as well as static and dynamic models of the systems under design.

Object-Based

• implies the capability of data abstraction
  - methods provide access to attributes
  - attributes represent instance variables
• methods define the object's external interface
  - similar to an abstract data type (ADT)
• objects are unique instances, having only in common the need for memory

Class-Based

• objects are instances of classes
  - a class defines a set of common properties
  - properties are attributes of a class
  - classes may be compared to types
• a meta-description specifies the interface
  - describing a uniform management
  - indicating further data abstractions
• depending on the programming language employed, a class may be an ADT

Object Orientation = Objects + Classes + Inheritance

• an object-oriented programming language must provide linguistic support
  - it must enable the programmers to describe common properties of objects (such a language is referred to as an object-based programming language)
  - it is called object-oriented only if class hierarchies can be built by inheritance, so that the objects can be instances of classes that have been inherited
  - it allows the modeling of an object as a polymorphous entity
• object orientation is unattainable using imperative programming languages [footnote 1]

Footnote 1: Notwithstanding, from time to time there are comments stating the development of object-oriented systems using e.g.

Object Behavioral Intent Structure Description

TEMPLATE METHOD (DP 325). Object Behavioral

Intent

Define the skeleton of an algorithm in an operation, deferring some steps to subclasses. Template Method lets subclasses redefine certain steps of an algorithm without changing the algorithm's structure.

Structure

[Diagram: AbstractClass defines templateMethod, primitiveMethod1 and primitiveMethod2; the body of templateMethod sends "self primitiveMethod1" and "self primitiveMethod2". ConcreteClassA and ConcreteClassB each implement primitiveMethod1 and primitiveMethod2. ConcreteClassC implements only primitiveMethod2.]

Description

Template Method may be #1 on the Design Patterns Pop Charts. Its structure and use are at the heart of much of object-oriented programming. Template Method turns on the fundamental object-oriented concept of inheritance. It relies on defining classes that differ only slightly from an existing class. It does this by relying on the ability of classes to provide for new behavior by overriding inherited methods.

The key to the Template Method pattern is a method that implements a broad message in terms of several narrower messages in the same receiver. The broader method, a Template Method, is implemented in a superclass and the narrower methods, Primitive Methods, are implemented in that class or its subclasses. The Template Method defines a general algorithm and the Primitive Methods specify the details. This way, when a subclass wants to use the algorithm but wishes to change some of the details, it can override the specific Primitive Methods without having to override the entire Template Method as well.

Reuse through Inheritance

Code and attribute sharing through inheritance is one of the most maligned techniques in object-oriented programming. It is deceptively easy to use, but often difficult to use well. Some authorities have dismissed implementation inheritance as confusing and unnecessary.
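The Template Method structure described above can be sketched in Python, where the original text uses Smalltalk. The class and method names mirror the structure (with Python-style spelling), but the string return values, the default implementation of primitive_method1, and the assumption that ConcreteClassC subclasses AbstractClass directly are illustrative choices, since the extracted diagram does not show them.

```python
from abc import ABC, abstractmethod


class AbstractClass(ABC):
    def template_method(self):
        # The broad message: a fixed skeleton built from narrower messages
        # sent to the same receiver.
        return [self.primitive_method1(), self.primitive_method2()]

    def primitive_method1(self):
        # Assumed default step; subclasses may override it.
        return "default step 1"

    @abstractmethod
    def primitive_method2(self):
        """Step that every concrete subclass must supply."""


class ConcreteClassA(AbstractClass):
    def primitive_method1(self):
        return "A step 1"

    def primitive_method2(self):
        return "A step 2"


class ConcreteClassC(AbstractClass):
    # Overrides only one primitive and inherits the other unchanged.
    def primitive_method2(self):
        return "C step 2"
```

Neither subclass redefines template_method, so the order of the steps stays fixed in the superclass while the details vary: ConcreteClassA().template_method() yields both of its own steps, and ConcreteClassC().template_method() mixes the inherited default with its single override.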