AI-KG: an Automatically Generated Knowledge Graph of Artificial Intelligence

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


One Knowledge Graph to Rule Them All? Analyzing the Differences Between Dbpedia, YAGO, Wikidata & Co

One Knowledge Graph to Rule them All? Analyzing the Differences between DBpedia, YAGO, Wikidata & co. Daniel Ringler and Heiko Paulheim, University of Mannheim, Data and Web Science Group. Abstract. Public Knowledge Graphs (KGs) on the Web are considered a valuable asset for developing intelligent applications. They contain general knowledge which can be used, e.g., for improving data analytics tools, text processing pipelines, or recommender systems. While the large players, e.g., DBpedia, YAGO, or Wikidata, are often considered similar in nature and coverage, there are, in fact, quite a few differences. In this paper, we quantify those differences, and identify the overlapping and the complementary parts of public KGs. From those considerations, we can conclude that the KGs are hardly interchangeable, and that each of them has its strengths and weaknesses when it comes to applications in different domains. 1 Knowledge Graphs on the Web. The term "Knowledge Graph" was coined by Google when they introduced their knowledge graph as a backbone of a new Web search strategy in 2012, i.e., moving from pure text processing to a more symbolic representation of knowledge, using the slogan "things, not strings"¹. Various public knowledge graphs are available on the Web, including DBpedia [3] and YAGO [9], both of which are created by extracting information from Wikipedia (the latter exploiting WordNet on top), the community edited Wikidata [10], which imports other datasets, e.g., from national libraries², as well as from the discontinued Freebase [7], the expert curated OpenCyc [4], and NELL [1], which exploits pattern-based knowledge extraction from a large Web corpus.

Wikipedia Knowledge Graph with Deepdive

The Workshops of the Tenth International AAAI Conference on Web and Social Media Wiki: Technical Report WS-16-17

Wikipedia Knowledge Graph with DeepDive
Thomas Palomares, Youssef Ahres, Juhana Kangaspunta, Christopher Ré

Abstract
Despite the tremendous amount of information on Wikipedia, only a very small amount is structured. Most of the information is embedded in unstructured text and extracting it is a non trivial challenge. In this paper, we propose a full pipeline built on top of DeepDive to successfully extract meaningful relations from the Wikipedia text corpus. We evaluated the system by extracting company-founders and family relations from the text. As a result, we extracted more than 140,000 distinct relations with an average precision above 90%.

This paper is organized as follows: first, we review the related work and give a general overview of DeepDive. Second, starting from the data preprocessing, we detail the general methodology used. Then, we detail two applications that follow this pipeline along with their specific challenges and solutions. Finally, we report the results of these applications and discuss the next steps to continue populating Wikidata and improve the current system to extract more relations with a high precision.

Background & Related Work
Until recently, populating the large knowledge bases relied on direct contributions from human volunteers as well as integration of existing repositories such as Wikipedia info boxes. These methods are limited by the available structured data and by human power.

Introduction
With the perpetual growth of web usage, the amount of unstructured data grows exponentially. Extracting facts and assertions to store them in a struc-

Knowledge Graphs on the Web – an Overview Arxiv:2003.00719V3 [Cs

January 2020. Knowledge Graphs on the Web – an Overview. Nicolas HEIST, Sven HERTLING, Daniel RINGLER, and Heiko PAULHEIM, Data and Web Science Group, University of Mannheim, Germany. arXiv:2003.00719v3 [cs.AI] 12 Mar 2020. Abstract. Knowledge Graphs are an emerging form of knowledge representation. While Google coined the term Knowledge Graph first and promoted it as a means to improve their search results, they are used in many applications today. In a knowledge graph, entities in the real world and/or a business domain (e.g., people, places, or events) are represented as nodes, which are connected by edges representing the relations between those entities. While companies such as Google, Microsoft, and Facebook have their own, non-public knowledge graphs, there is also a larger body of publicly available knowledge graphs, such as DBpedia or Wikidata. In this chapter, we provide an overview and comparison of those publicly available knowledge graphs, and give insights into their contents, size, coverage, and overlap. Keywords. Knowledge Graph, Linked Data, Semantic Web, Profiling. 1. Introduction. Knowledge Graphs are increasingly used as means to represent knowledge. Due to their versatile means of representation, they can be used to integrate different heterogeneous data sources, both within as well as across organizations. [8,9] Besides such domain-specific knowledge graphs which are typically developed for specific domains and/or use cases, there are also public, cross-domain knowledge graphs encoding common knowledge, such as DBpedia, Wikidata, or YAGO. [33] Such knowledge graphs may be used, e.g., for automatically enriching data with background knowledge to be used in knowledge-intensive downstream applications.

Knowledge Graph Identification

Knowledge Graph Identification. Jay Pujara¹, Hui Miao¹, Lise Getoor¹, and William Cohen². ¹ Dept of Computer Science, University of Maryland, College Park, MD 20742. ² Machine Learning Dept, Carnegie Mellon University, Pittsburgh, PA 15213. Abstract. Large-scale information processing systems are able to extract massive collections of interrelated facts, but unfortunately transforming these candidate facts into useful knowledge is a formidable challenge. In this paper, we show how uncertain extractions about entities and their relations can be transformed into a knowledge graph. The extractions form an extraction graph and we refer to the task of removing noise, inferring missing information, and determining which candidate facts should be included into a knowledge graph as knowledge graph identification. In order to perform this task, we must reason jointly about candidate facts and their associated extraction confidences, identify co-referent entities, and incorporate ontological constraints. Our proposed approach uses probabilistic soft logic (PSL), a recently introduced probabilistic modeling framework which easily scales to millions of facts. We demonstrate the power of our method on a synthetic Linked Data corpus derived from the MusicBrainz music community and a real-world set of extractions from the NELL project containing over 1M extractions and 70K ontological relations. We show that compared to existing methods, our approach is able to achieve improved AUC and F1 with significantly lower running time. 1 Introduction. The web is a vast repository of knowledge, but automatically extracting that knowledge at scale has proven to be a formidable challenge.

Google Knowledge Graph, Bing Satori and Wolfram Alpha * Farouk Musa Aliyu and Yusuf Isah Yahaya

International Journal of Scientific & Engineering Research, Volume 12, Issue 1, January-2021, ISSN 2229-5518

An Investigation of the Accuracy of Knowledge Graph-based Search Engines: Google Knowledge Graph, Bing Satori and Wolfram Alpha
Farouk Musa Aliyu and Yusuf Isah Yahaya

Abstract. In this paper, we carried out an investigation on the accuracy of two knowledge graph driven search engines (Google Knowledge Graph and Bing's Satori) and a computational knowledge system (Wolfram Alpha). We used a dataset consisting of a list of books and their correct authors and constructed queries that retrieve the author(s) of a book given the book's name. We evaluate the results from each search engine and measure their precision, recall and F1 score. We also compared the results of these two search engines to the results from the computational knowledge engine (Wolfram Alpha). Our results show that Google performs better than Bing, while both Google and Bing perform better than Wolfram Alpha.

Keywords: Knowledge Graphs, Evaluation, Information Retrieval, Semantic Search Engines.

1 INTRODUCTION
Search engines have played a significant role in helping web users find their search needs. Most traditional search engines answer their users by presenting a list of ranked documents which they believe are the most relevant to their ...

... leveraging knowledge graphs, or how reliable are the results output by the semantic search engines?
2. How do the accuracies of semantic search engines compare with those of computational knowledge engines?
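The three measures named in this abstract can be illustrated with a short sketch. The function name and the author strings below are hypothetical and are not taken from the paper's dataset; the formulas are the standard set-based definitions of precision, recall and F1, which may differ in detail from the evaluation protocol the authors used.

```python
def precision_recall_f1(retrieved, relevant):
    """Set-based precision, recall and F1 for one query.

    retrieved: authors returned by a search engine (hypothetical input)
    relevant:  the correct authors of the book (hypothetical input)
    """
    retrieved, relevant = set(retrieved), set(relevant)
    true_positives = len(retrieved & relevant)
    # Guard against empty sets so the ratios are always defined.
    precision = true_positives / len(retrieved) if retrieved else 0.0
    recall = true_positives / len(relevant) if relevant else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Made-up example: the engine returns two names, one of which is correct,
# and misses one of the two true authors.
p, r, f1 = precision_recall_f1(
    retrieved=["A. Author", "B. Writer"],
    relevant=["A. Author", "C. Novelist"],
)
print(p, r, f1)
```

In a study like the one described, such per-query values would typically be averaged over all book queries for each engine; the averaging scheme is not stated in the excerpt.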

Towards a Knowledge Graph for Science

Towards a Knowledge Graph for Science (Invited Article)

Sören Auer, TIB Leibniz Information Centre for Science and Technology and L3S Research Centre at University of Hannover, Hannover, Germany
Viktor Kovtun, L3S Research Centre, Leibniz University of Hannover, Hannover, Germany
Manuel Prinz, TIB Leibniz Information Centre for Science and Technology, Hannover, Germany
Anna Kasprzik, TIB Leibniz Information Centre for Science and Technology, Hannover, Germany
Markus Stocker, TIB Leibniz Information Centre for Science and Technology, Hannover, Germany

ABSTRACT
The document-centric workflows in science have reached (or already exceeded) the limits of adequacy. This is emphasized by recent discussions on the increasing proliferation of scientific literature and the reproducibility crisis. This presents an opportunity to rethink the dominant paradigm of document-centric scholarly information communication and transform it into knowledge-based information flows by representing and expressing information through semantically rich, interlinked knowledge graphs. At the core of knowledge-based information flows is the creation and evolution of information models that establish a common understanding of information communicated between stakeholders as

KEYWORDS
Knowledge Graph, Science and Technology, Research Infrastructure, Libraries, Information Science

ACM Reference Format:
Sören Auer, Viktor Kovtun, Manuel Prinz, Anna Kasprzik, and Markus Stocker. 2018. Towards a Knowledge Graph for Science: Invited Article. In WIMS '18: 8th International Conference on Web Intelligence, Mining and Semantics, June 25–27, 2018, Novi Sad, Serbia. ACM, New York, NY, USA, 6 pages. https://doi.org/10.1145/3227609.3227689

1 INTRODUCTION
The communication of scholarly information is document-centric.

Exploiting Semantic Web Knowledge Graphs in Data Mining

Exploiting Semantic Web Knowledge Graphs in Data Mining. Inaugural dissertation for the academic degree of Doctor of Natural Sciences at the Universität Mannheim, presented by Petar Ristoski, Mannheim, 2017. Dean: Dr. Bernd Lübcke, Universität Mannheim. Referee: Professor Dr. Heiko Paulheim, Universität Mannheim. Co-referee: Professor Dr. Simone Paolo Ponzetto, Universität Mannheim. Date of the oral examination: 15 January 2018. Abstract. Data Mining and Knowledge Discovery in Databases (KDD) is a research field concerned with deriving higher-level insights from data. The tasks performed in that field are knowledge intensive and can often benefit from using additional knowledge from various sources. Therefore, many approaches have been proposed in this area that combine Semantic Web data with the data mining and knowledge discovery process. Semantic Web knowledge graphs are a backbone of many information systems that require access to structured knowledge. Such knowledge graphs contain factual knowledge about real-world entities and the relations between them, which can be utilized in various natural language processing, information retrieval, and data mining applications. Following the principles of the Semantic Web, Semantic Web knowledge graphs are publicly available as Linked Open Data. Linked Open Data is an open, interlinked collection of datasets in machine-interpretable form, covering most real-world domains. In this thesis, we investigate the hypothesis that Semantic Web knowledge graphs can be exploited as background knowledge in different steps of the knowledge discovery process and in different data mining tasks. More precisely, we aim to show that Semantic Web knowledge graphs can be utilized for generating valuable data mining features that can be used in various data mining tasks.

Wembedder: Wikidata Entity Embedding Web Service

Wembedder: Wikidata entity embedding web service
Finn Årup Nielsen, Cognitive Systems, DTU Compute, Technical University of Denmark, Kongens Lyngby, Denmark

ABSTRACT
I present a web service for querying an embedding of entities in the Wikidata knowledge graph. The embedding is trained on the Wikidata dump using Gensim's Word2Vec implementation and a simple graph walk. A REST API is implemented. Together with the Wikidata API the web service exposes a multilingual resource for over 600'000 Wikidata items and properties.

Keywords
Wikidata, embedding, RDF, web service.

1. INTRODUCTION
The Word2Vec model [7] spawned an interest in dense word representation in a low-dimensional space, and there are now a considerable number of "2vec" models beyond the word level.¹ One recent avenue of research in the "2vec" domain uses knowledge graphs [13].

as the SPARQL-based Wikidata Query Service (WDQS). General functions for finding related items or generating fixed-length features for machine learning are to my knowledge not available.

There is some research that combines machine learning and Wikidata [9, 14], e.g., Mousselly-Sergieh and Gurevych have presented a method for aligning Wikidata items with FrameNet based on Wikidata labels and aliases [9].

Property suggestion is running live in the Wikidata editing interface, where it helps editors recall appropriate properties for items during manual editing, and as such a form of recommender system. Researchers have investigated various methods for this process [17].

Scholia at https://tools.wmflabs.org/scholia/ is our web service that presents scholarly profiles based on data in
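The abstract mentions training Word2Vec on "a simple graph walk" without giving details. The following is a minimal sketch of one way such walks could be generated from subject-property-object triples, assuming each walk alternates entity and property identifiers. The triples, the identifiers and both function names are made up for illustration, and the walk strategy actually used by Wembedder may differ.

```python
import random
from collections import defaultdict


def build_adjacency(triples):
    """Map each subject to its outgoing (property, object) pairs."""
    adjacency = defaultdict(list)
    for subject, prop, obj in triples:
        adjacency[subject].append((prop, obj))
    return adjacency


def random_walk(adjacency, start, max_hops, rng):
    """Follow up to max_hops random outgoing edges, recording the path."""
    walk = [start]
    current = start
    for _ in range(max_hops):
        edges = adjacency.get(current)
        if not edges:  # dead end: the entity has no outgoing statements
            break
        prop, current = rng.choice(edges)
        walk.extend([prop, current])
    return walk


# Placeholder triples in the style of Wikidata identifiers (not real data).
triples = [
    ("Q1", "P31", "Q2"),
    ("Q2", "P279", "Q3"),
    ("Q1", "P17", "Q4"),
]
adjacency = build_adjacency(triples)
rng = random.Random(0)  # fixed seed so repeated runs give the same walks
sentences = [
    random_walk(adjacency, start, max_hops=3, rng=rng)
    for start in sorted(adjacency)
    for _ in range(2)
]
for sentence in sentences:
    print(" ".join(sentence))
```

Each walk is a list of string tokens, which is the shape of input that Gensim's `Word2Vec` accepts through its `sentences` argument; the training call is left out here so the sketch runs with the standard library alone.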

How Much Is a Triple? Estimating the Cost of Knowledge Graph Creation

How much is a Triple? Estimating the Cost of Knowledge Graph Creation. Heiko Paulheim, Data and Web Science Group, University of Mannheim, Germany. Abstract. Knowledge graphs are used in various applications and have been widely analyzed. A question that is not very well researched is: what is the price of their production? In this paper, we propose ways to estimate the cost of those knowledge graphs. We show that the cost of manually curating a triple is between $2 and $6, and that the cost for automatically created knowledge graphs is by a factor of 15 to 250 cheaper (i.e., 1¢ to 15¢ per statement). Furthermore, we advocate for taking cost into account as an evaluation metric, showing the correspondence between cost per triple and semantic validity as an example. Keywords: Knowledge Graphs, Cost Estimation, Automation. 1 Estimating the Cost of Knowledge Graphs. With the increasing attention larger scale knowledge graphs, such as DBpedia [5], YAGO [12] and the like, have drawn towards themselves, they have been inspected under various angles, such as size, overlap [11], or quality [3]. However, one dimension that is underrepresented in those analyses is cost, i.e., the price of creating the knowledge graphs. 1.1 Manual Curation: Cyc and Freebase. For manually created knowledge graphs, we have to estimate the effort of providing the statements directly. Cyc [6] is one of the earliest general purpose knowledge graphs, and, at the same time, the one for which the development effort is known. At a 2017 conference, Douglas Lenat, the inventor of Cyc, denoted the cost of creation of Cyc at $120M.¹ In the same presentation, Lenat states that Cyc consists of 21M assertions, which makes a cost of $5.71 per statement.
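The per-statement figure for Cyc follows from dividing the stated total cost by the stated number of assertions. A minimal sketch of that arithmetic is given below; the function name is hypothetical, and the two inputs are the figures quoted in the excerpt.

```python
def cost_per_statement(total_cost_usd, num_statements):
    """Average creation cost of one statement in US dollars."""
    return total_cost_usd / num_statements


# Figures quoted in the excerpt: $120M total cost, 21M assertions.
cyc_cost = cost_per_statement(120_000_000, 21_000_000)
print(f"${cyc_cost:.2f} per statement")  # prints "$5.71 per statement"
```

The same function applies to any knowledge graph for which a total cost and a statement count can be estimated, which is how a per-triple cost can be compared across graphs.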

Wisdom of Enterprise Knowledge Graphs

Wisdom of Enterprise Knowledge Graphs | The path to collective intelligence within your company

"Intelligence is the ability to adapt to change." Stephen Hawking

Contents
Preface
Use Cases
Impact analysis
AI-based decision-making
Product DNA and root-cause analysis
Intelligent data governance
Context based knowledge transformation
Leverage knowledge from external sources
What are Knowledge Graphs?
The foundation for Knowledge Graphs
Conclusion
Contact

Preface
Today, companies are relying more and more on artificial intelligence in their decision-making processes. Semantic AI enables machines to solve complex problems and explain to users how their recommendations were derived. It is the key to collective intelligence within a company, manifested in Knowledge Graphs.

Unleashing the power of knowledge is one of the most crucial tasks for enterprises striving to stay competitive. Knowledge, however, is not just data thrown into a database. It is a complex, dynamic model that puts every piece of information into a larger frame, builds a world around it and reveals its connections and meaning in a specific context.

That said, it is extremely time consuming to share your domain knowledge. First you have to structure and adapt the information to fit into a pre-defined data model.

Knowledge Graphs connect knowledge from different domains, data models and heterogeneous data formats without

Sangrahaka: a Tool for Annotating and Querying Knowledge Graphs

Sangrahaka: A Tool for Annotating and Querying Knowledge Graphs
Hrishikesh Terdalkar and Arnab Bhattacharya, Indian Institute of Technology Kanpur, India

ABSTRACT
We present a web-based tool Sangrahaka for annotating entities and relationships from text corpora towards construction of a knowledge graph and subsequent querying using templatized natural language questions. The application is language and corpus agnostic, but can be tuned for specific needs of a language or a corpus. The application is freely available for download and installation. Besides having a user-friendly interface, it is fast, supports customization, and is fault tolerant on both client and server side. It outperforms other annotation tools in an objective evaluation metric. The framework has been successfully used in two annotation tasks. The code is available from https://github.com/hrishikeshrt/sangrahaka.

Semantic tasks are high-level tasks dealing with the meaning of linguistic units and are considered among the most difficult tasks in NLP for any language. Question Answering (QA) is an example of a semantic task that deals with answering a question asked in a natural language. The task requires a machine to 'understand' the language, i.e., identify the intent of the question, and then search for relevant information in the available text. This often encompasses other NLP tasks such as parts-of-speech tagging, named entity recognition, co-reference resolution, and dependency parsing [21]. Making use of knowledge bases is a common approach for the QA task [19, 22, 30, 32]. Construction of knowledge graphs (KGs)

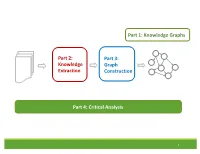

Knowledge Extraction Part 3: Graph

Part 1: Knowledge Graphs. Part 2: Knowledge Extraction. Part 3: Graph Construction. Part 4: Critical Analysis.

Tutorial Outline
1. Knowledge Graph Primer [Jay]
2. Knowledge Extraction from Text
   a. NLP Fundamentals [Sameer]
   b. Information Extraction [Bhavana]
Coffee Break
3. Knowledge Graph Construction
   a. Probabilistic Models [Jay]
   b. Embedding Techniques [Sameer]
4. Critical Overview and Conclusion [Bhavana]

Critical Overview
• Summary
• Success stories
• Datasets, tasks, softwares
• Exciting active research
• Future research directions

Why do we need Knowledge graphs?
• Humans can explore large database in intuitive ways
• AI agents get access to human common sense knowledge

Knowledge graph construction
• Who are the entities (nodes) in the graph?
• What are their attributes and types (labels)?
• How are they related (edges)?

Knowledge Graph Construction
Text -> Knowledge Extraction -> Extraction graph -> Graph Construction -> Knowledge graph

Two perspectives (extraction graph vs. knowledge graph)
• Who are the entities? (nodes) Extraction graph: Named Entity Recognition, Entity Coreference. Knowledge graph: Entity Linking, Entity Resolution.
• What are their attributes? (labels) Extraction graph: Entity Typing. Knowledge graph: Collective classification.
• How are they related? (edges) Extraction graph: Semantic role labeling, Relation Extraction. Knowledge graph: Link prediction.

Knowledge Extraction
Text: "John was born in Liverpool, to Julia and Alfred Lennon."
NLP annotations: John (Person), Liverpool (Location), Julia (Person), Alfred Lennon (Person)
Mentions to resolve: "Lennon", "Mrs. Lennon", "his father", "John Lennon", "the Pool", "his mother", "he", "Alfred"
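The three construction questions from the slides (entities as nodes, types as labels, relations as edges) map directly onto a small data structure. The sketch below is only an illustration built on the example sentence from the slides: the class, its methods, and the relation names `born_in` and `child_of` are hypothetical and do not come from the tutorial.

```python
from dataclasses import dataclass, field


@dataclass
class KnowledgeGraph:
    """Toy knowledge graph: typed nodes plus directed, named edges."""
    labels: dict = field(default_factory=dict)  # entity name -> type label
    edges: set = field(default_factory=set)     # (subject, relation, object)

    def add_entity(self, name, label):
        self.labels[name] = label

    def add_edge(self, subject, relation, obj):
        # Only allow edges between entities that already exist as nodes.
        for name in (subject, obj):
            if name not in self.labels:
                raise KeyError(f"unknown entity: {name}")
        self.edges.add((subject, relation, obj))

    def neighbors(self, name):
        """Outgoing (relation, object) pairs of an entity, sorted."""
        return sorted((r, o) for s, r, o in self.edges if s == name)


kg = KnowledgeGraph()
# Nodes and labels, following the Person/Location types on the slide.
kg.add_entity("John Lennon", "Person")
kg.add_entity("Liverpool", "Location")
kg.add_entity("Julia Lennon", "Person")
kg.add_entity("Alfred Lennon", "Person")
# Edges with hypothetical relation names.
kg.add_edge("John Lennon", "born_in", "Liverpool")
kg.add_edge("John Lennon", "child_of", "Julia Lennon")
kg.add_edge("John Lennon", "child_of", "Alfred Lennon")

print(kg.neighbors("John Lennon"))
```

Storing edges as a set of triples makes duplicate extractions collapse automatically, which is a convenient property for a toy example; real systems usually also keep extraction confidences and provenance per edge.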