A Framework for Distributed Parallel Execution of Transactions of Blocks

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


EVMPatch: Timely and Automated Patching of Ethereum Smart Contracts

EVMPatch: Timely and Automated Patching of Ethereum Smart Contracts. Michael Rodler (University of Duisburg-Essen), Wenting Li (NEC Laboratories Europe), Ghassan O. Karame (NEC Laboratories Europe), Lucas Davi (University of Duisburg-Essen). Abstract: Recent attacks exploiting errors in smart contract code had devastating consequences thereby questioning the benefits of this technology. It is currently highly challenging to fix errors and deploy a patched contract in time. Instant patching is especially important since smart contracts are always online due to the distributed nature of blockchain systems. They also manage considerable amounts of assets, which are at risk and often beyond recovery after an attack. Existing solutions to upgrade smart contracts depend on manual and error-prone processes. This paper presents a framework, called EVMPATCH, to instantly and automatically patch faulty smart contracts. EVMPATCH features a bytecode rewriting engine for the popular Ethereum blockchain, and transparently/automatically [...] some of these contracts hold, smart contracts have become an appealing target for attacks. Programming errors in smart contract code can have devastating consequences as an attacker can exploit these bugs to steal cryptocurrency or tokens. Recently, the blockchain community has witnessed several incidents due to smart contract errors [7, 39]. One especially infamous incident is the “TheDAO” reentrancy attack, which resulted in a loss of over 50 million US Dollars worth of Ether [31]. This led to a highly debated hard-fork of the Ethereum blockchain. Several proposals demonstrated how to defend against reentrancy vulnerabilities either by means of offline analysis at development time or by performing run-time validation [16, 23, 32, 42]. Another infamous incident is the parity wallet attack [39]. -

Wyoming Decentralized Autonomous Organization Supplement

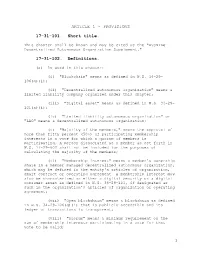

ARTICLE 1 - PROVISIONS 17-31-101. Short title. This chapter shall be known and may be cited as the "Wyoming Decentralized Autonomous Organization Supplement." 17-31-102. Definitions. (a) As used in this chapter: (i) "Blockchain" means as defined in W.S. 34-29-106(g)(i); (ii) "Decentralized autonomous organization" means a limited liability company organized under this chapter; (iii) "Digital asset" means as defined in W.S. 34-29-101(a)(i); (iv) "Limited liability autonomous organization" or "LAO" means a decentralized autonomous organization; (v) "Majority of the members," means the approval of more than fifty percent (50%) of participating membership interests in a vote for which a quorum of members is participating. A person dissociated as a member as set forth in W.S. 17-29-602 shall not be included for the purposes of calculating the majority of the members; (vi) "Membership interest" means a member's ownership share in a member managed decentralized autonomous organization, which may be defined in the entity's articles of organization, smart contract or operating agreement. A membership interest may also be characterized as either a digital security or a digital consumer asset as defined in W.S. 34-29-101, if designated as such in the organization's articles of organization or operating agreement; (vii) "Open blockchain" means a blockchain as defined in W.S. 34-29-106(g)(i) that is publicly accessible and its ledger of transactions is transparent; (viii) "Quorum" means a minimum requirement on the sum of membership interests participating in a vote for that vote to be valid; (ix) "Smart contract" means an automated transaction, as defined in W.S. -

Blockchain & Cryptocurrency Regulation

Blockchain & Cryptocurrency Regulation Third Edition Contributing Editor: Josias N. Dewey Global Legal Insights Blockchain & Cryptocurrency Regulation 2021, Third Edition Contributing Editor: Josias N. Dewey Published by Global Legal Group GLOBAL LEGAL INSIGHTS – BLOCKCHAIN & CRYPTOCURRENCY REGULATION 2021, THIRD EDITION Contributing Editor Josias N. Dewey, Holland & Knight LLP Head of Production Suzie Levy Senior Editor Sam Friend Sub Editor Megan Hylton Consulting Group Publisher Rory Smith Chief Media Officer Fraser Allan We are extremely grateful for all contributions to this edition. Special thanks are reserved for Josias N. Dewey of Holland & Knight LLP for all of his assistance. Published by Global Legal Group Ltd. 59 Tanner Street, London SE1 3PL, United Kingdom Tel: +44 207 367 0720 / URL: www.glgroup.co.uk Copyright © 2020 Global Legal Group Ltd. All rights reserved No photocopying ISBN 978-1-83918-077-4 ISSN 2631-2999 This publication is for general information purposes only. It does not purport to provide comprehensive full legal or other advice. Global Legal Group Ltd. and the contributors accept no responsibility for losses that may arise from reliance upon information contained in this publication. This publication is intended to give an indication of legal issues upon which you may need advice. Full legal advice should be taken from a qualified professional when dealing with specific situations. The information contained herein is accurate as of the date of publication. Printed and bound by TJ International, Trecerus Industrial Estate, Padstow, Cornwall, PL28 8RW October 2020 PREFACE Another year has passed and virtual currency and other blockchain-based digital assets continue to attract the attention of policymakers across the globe. -

Article Friis Glaser

International Journal of Community Currency Research 2018 VOLUME 22 (SUMMER) EXTENDING BLOCKCHAIN TECHNOLOGY TO HOST CUSTOMIZABLE AND INTEROPERABLE COMMUNITY CURRENCIES Gustav R.B. Friis* and Florian Glaser** * Brainbot Technologies AG, Mainz ** Karlsruhe Institute of Technology (KIT), Karlsruhe ABSTRACT The goal of this paper is to propose an open platform for secure and interoperable virtual community currencies. We follow the established information systems design-science approach to develop a prototype that aims to combine best practices for building mutual-credit community currencies with the unique features of blockchain technology. The result is a specification of an open Internet platform that enables users to join and to host customized community currencies. The hosted currencies can be classified as credit-based future type of money with decentralized issuance. Furthermore, we describe how the transparency, security and interoperability properties of blockchain technology offer a solution to the inherent problems of existing, centrally operated community currency software. The characteristics of the prototype and its ability to fulfil the design-objectives are examined by a relative evaluation against existing payment and currency systems like Bitcoin, LETS and M-Pesa. KEYWORDS Virtual community currencies; blockchain technology; mutual-credit; LETS; Trustlines Network To cite this article: Friis, Gustav R.B. and Glaser, Florian (2018) ‘Extending Blockchain Technology to host Customizable and Interoperable Community Currencies’ International Journal of Community Currency Research 2018 Volume 22 (Summer) 71-84 <www.ijccr.net> ISSN 1325-9547. DOI http://dx.doi.org/10.15133/j.ijccr.2018.017 INTERNATIONAL JOURNAL OF COMMUNITY CURRENCY RESEARCH 2018 VOLUME 22 (SUMMER) 71-84 FRIIS & GLASER 1. -

More Legal Aspects of Smart Contract Applications

More Legal Aspects of Smart Contract Applications Token Sales, Capital Markets, Supply Chain Management, Government and Smart Cities, Real Estate Registries, and Enabling Self-Sovereign Identity J. DAX HANSEN | PARTNER LAURIE ROSINI | ASSOCIATE CARLA L. REYES | ASSISTANT PROFESSOR OF LAW +1.206.359.6324 +1.206.359.3052 Director of Legal RnD - Michigan State University College of Law [email protected] [email protected] Faculty Associate - Berkman Klein Center for Internet & Society at Harvard University [email protected] PerkinsCoie.com/Blockchain Perkins Coie LLP | October 2018 Table of Contents: INTRODUCTION; I. A (VERY) BRIEF INTRODUCTION TO SMART CONTRACTS (THE ORIGINS OF SMART CONTRACTS; SMART CONTRACTS IN A DISTRIBUTED LEDGER TECHNOLOGY WORLD); II. CURRENT ACADEMIC LITERATURE AND INDUSTRY INITIATIVES RELATING TO SMART CONTRACTS (SMART CONTRACTS AND CONTRACT LAW) -

ISDA Legal Guidelines for Smart Derivatives Contracts: Foreign Exchange Derivatives

ISDA Legal Guidelines for Smart Derivatives Contracts: Foreign Exchange Derivatives Contents: Disclaimer; Introduction; The Over-the-Counter FX Market; Types of FX; Building the foundation for Smart Derivatives Contracts; Constructing Smart Derivatives Contracts for FX; Valuations and Calculations; Issues for technology developers to consider; Settlement; Clearing; Reporting -

Blockchain Implementation Method for Interoperability Between CBDCs

future internet Article Blockchain Implementation Method for Interoperability between CBDCs Hyunjun Jung and Dongwon Jeong * Department of Software Convergence Engineering, Kunsan National University, Gunsan 54150, Korea; [email protected] * Correspondence: [email protected]; Tel.: +82-(063)-469-8912 Abstract: Central Bank Digital Currency (CBDC) is a digital currency issued by a central bank. Motivated by the financial crisis and prospect of a cashless society, countries are researching CBDC. Recently, global consideration has been given to paying basic income to avoid consumer sentiment shrinkage and recession due to epidemics. CBDC is coming into the spotlight as the way to manage the public finance policy of nations comprehensively. CBDC is studied by many countries. The bank of the Bahamas released Sand Dollar. Each country’s central bank should consider the situation in which CBDCs are exchanged. The transaction of the CBDC is open data. Transaction registers CBDC exchange information of the central bank in the blockchain. Open data on currency exchange between countries will provide information on the flow of money between countries. This paper proposes a blockchain system and management method based on the ISO/IEC 11179 metadata registry for exchange between CBDCs that records transactions between registered CBDCs. Each country’s CBDC will have a different implementation and time of publication. We implement the blockchain system and experiment with the operation method, measuring the block generation time of blockchains using the proposed method. Keywords: CBDC; CBDC exchange; ISO/IEC 11179; CBDC interoperability blockchain system Citation: Jung, H.; Jeong, D. Blockchain Implementation Method for Interoperability between CBDCs. Future Internet 2021, 13, 133. -

An Organized Repository of Ethereum Smart Contracts' Source Codes and Metrics

Article An Organized Repository of Ethereum Smart Contracts’ Source Codes and Metrics Giuseppe Antonio Pierro 1,* , Roberto Tonelli 2,* and Michele Marchesi 2 1 Inria Lille-Nord Europe Centre, 59650 Villeneuve d’Ascq, France 2 Department of Mathematics and Computer Science, University of Cagliari, 09124 Cagliari, Italy; [email protected] * Correspondence: [email protected] (G.A.P.); [email protected] (R.T.) Received: 31 October 2020; Accepted: 11 November 2020; Published: 15 November 2020 Abstract: Many empirical software engineering studies show that there is a need for repositories where source codes are acquired, filtered and classified. During the last few years, Ethereum block explorer services have emerged as a popular project to explore and search for Ethereum blockchain data such as transactions, addresses, tokens, smart contracts’ source codes, prices and other activities taking place on the Ethereum blockchain. Despite the availability of this kind of service, retrieving specific information useful to empirical software engineering studies, such as the study of smart contracts’ software metrics, might require many subtasks, such as searching for specific transactions in a block, parsing files in HTML format, and filtering the smart contracts to remove duplicated code or unused smart contracts. In this paper, we afford this problem by creating Smart Corpus, a corpus of smart contracts in an organized, reasoned and up-to-date repository where Solidity source code and other metadata about Ethereum smart contracts can easily and systematically be retrieved. We present Smart Corpus’s design and its initial implementation, and we show how the data set of smart contracts’ source codes in a variety of programming languages can be queried and processed to get useful information on smart contracts and their software metrics. -

Security Analysis Methods on Ethereum Smart Contract Vulnerabilities — a Survey

Security Analysis Methods on Ethereum Smart Contract Vulnerabilities — A Survey. Purathani Praitheeshan*, Lei Pan*, Jiangshan Yu†, Joseph Liu†, and Robin Doss*. Abstract—Smart contracts are software programs featuring both traditional applications and distributed data storage on blockchains. Ethereum is a prominent blockchain platform with the support of smart contracts. The smart contracts act as autonomous agents in critical decentralized applications and hold a significant amount of cryptocurrency to perform trusted transactions and agreements. Millions of dollars as part of the assets held by the smart contracts were stolen or frozen through the notorious attacks just between 2016 and 2018, such as the DAO attack, Parity Multi-Sig Wallet attack, and the integer underflow/overflow attacks. These attacks were caused by a combination of technical flaws in designing and implementing software codes. However, many more vulnerabilities of less severity are to be discovered because of the scripting natures of the Solidity language and the non-updateable feature of blockchains. [...] user [4]. In consequence of these issues of the traditional financial systems, the technology advances in peer to peer network and decentralized data management were headed up as the way of mitigation. In recent years, the blockchain technology is being the prominent mechanism which uses distributed ledger technology (DLT) to implement digitalized and decentralized public ledger to keep all cryptocurrency transactions [1], [5], [6], [7], [8]. Blockchain is a public electronic ledger equivalent to a distributed database. It can be openly shared among the disparate users to create an immutable record of their transactions [7], [9], [10], [11], [12], [13]. Since all the committed records and transactions -

Leveraged Trading on Blockchain Technology

LEVERAGED TRADING ON BLOCKCHAIN TECHNOLOGY Research in Progress Johannes Rude Jensen Victor von Wachter University of Copenhagen University of Copenhagen eToroX Labs [email protected] [email protected] Omri Ross University of Copenhagen eToroX Labs [email protected] Abstract We document an ongoing research process towards the implementation and integration of a digital artefact, executing the lifecycle of a leveraged trade with permissionless blockchain technology. By employing core functions of the ‘Dai Stablecoin system’ deployed on the Ethereum blockchain, we produce the equivalent exposure of a leveraged position while deterministically automating the monitoring and liquidation processes. We demonstrate the implementation and early integration of the artefact into a hardened exchange environment through a microservice utilizing standardized API calls. The early results presented in this paper were produced in collaboration with a team of stakeholders at a hosting organization, a multi-national online brokerage and cryptocurrency exchange. We utilize the design science research methodology (DSR) guiding the design, development, and evaluation of the artefact. Our findings indicate that, while it is feasible to implement the lifecycle of a leveraged trade on the blockchain, the integration of the artefact into a traditional exchange environment involves multiple compromises and drawbacks. Generalizing the tentative findings presented in this paper, we introduce three propositions on the implementation, integration, -

A Survey of Smart Contract Formal Specification and Verification

A Survey of Smart Contract Formal Specification and Verification PALINA TOLMACH, Nanyang Technological University, Singapore and Institute of High Performance Computing (A*STAR), Singapore YI LI, SHANG-WEI LIN, and YANG LIU, Nanyang Technological University, Singapore ZENGXIANG LI, Institute of High Performance Computing (A*STAR), Singapore A smart contract is a computer program which allows users to automate their actions on the blockchain platform. Given the significance of smart contracts in supporting important activities across industry sectors including supply chain, finance, legal and medical services, there is a strong demand for verification and validation techniques. Yet, the vast majority of smart contracts lack any kind of formal specification, which is essential for establishing their correctness. In this survey, we investigate formal models and specifications of smart contracts presented in the literature and present a systematic overview in order to understand the common trends. We also discuss the current approaches used in verifying such property specifications and identify gaps with the hope to recognize promising directions for future work. Additional Key Words and Phrases: Smart contract, formal verification, formal specification, properties 1 INTRODUCTION A proposal to store timestamped tamper-resistant information in cryptographically secure chained blocks has gradually evolved from 1991 [100] into what we now know as blockchain: a distributed ledger shared between nodes of a peer-to-peer network following a certain consensus protocol, and one of the fastest-growing technologies of the modern time. The blockchain technology owes its initial popularity to Bitcoin [155], which appeared in 2008. Later, Ethereum [220] started the era of Blockchain 2.0 and expanded the capabilities and applications of blockchain by introducing Turing-complete smart contracts. -

4 Key Tax Considerations for Cryptocurrency Contracts by Amy Lee Rosen

Portfolio Media. Inc. | 111 West 19th Street, 5th Floor | New York, NY 10011 | www.law360.com Phone: +1 646 783 7100 | Fax: +1 646 783 7161 | [email protected] 4 Key Tax Considerations For Cryptocurrency Contracts By Amy Lee Rosen Law360 (June 18, 2021, 5:01 PM EDT) -- When deciding whether to commit to a cryptocurrency contract, token holders should be aware of how they will monitor profits and losses, what tax forms they'll receive or need to provide, and growing government enforcement efforts in the space. Here, Law360 explores four important items to think about when evaluating whether and how to create a contract that uses cryptocurrency. Tracking Profits, Losses A contract that involves cryptocurrency requires the normal contractual components of an offer, consideration and acceptance. However, token holders also need to understand that the transfer, sale, purchase or exchange of cryptocurrency also has tax consequences that need to be reported, according to Edward L. Froelich, of counsel at Morrison & Foerster LLP. The first guidance released by the Internal Revenue Service on cryptocurrency was a 2014 notice that said any capital gain or loss from the sale or exchange of cryptocurrency has to be reported the same way as any other gain or loss on the sale or exchange of property. The notice said someone who has received cryptocurrency as payment for goods or services must calculate their gross income by including the fair market value of the token on the date it was received. If a contract uses cryptocurrency as payment for a service or a good, then the holder would have to make sure they knew the basis in the token when they received it, then subtract that basis from the value of the good or service to determine the gain or loss that must be reported, Froelich said.