Choosing Effective Talent Assessments to Strengthen Your Organization

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Supply Chain Talent Our Most Important Resource

SUPPLY CHAIN TALENT OUR MOST IMPORTANT RESOURCE A REPORT BY THE SUPPLY CHAIN MANAGEMENT FACULTY AT THE UNIVERSITY OF TENNESSEE APRIL 2015 GLOBAL SUPPLY CHAIN INSTITUTE Sponsored by NUMBER SIX IN THE SERIES | GAME-CHANGING TRENDS IN SUPPLY CHAIN MANAGING RISK IN THE GLOBAL SUPPLY CHAIN TABLE OF CONTENTS Executive Summary; Introduction; Developing a Talent Management Strategy; Talent Management Industry Research Results and Recommendations; Eight Talent Management Best Practices; Case Studies in Hourly Supply Chain Talent Management: The Great Driver Shortage, Supply Chain Technician and Warehouse Personnel Challenges; Summary; Supply Chain Talent Skill Matrix and Development Plan Tool THE SIXTH IN THE GAME-CHANGING TRENDS SERIES OF UNIVERSITY OF TENNESSEE APRIL 2015 AUTHORS: SHAY SCOTT, PhD MIKE BURNETTE PAUL DITTMANN, PhD TED STANK, PhD CHAD AUTRY, PhD AT THE UNIVERSITY OF TENNESSEE HASLAM COLLEGE OF BUSINESS The Game-Changers Series of University of Tennessee Supply Chain Management White Papers: BENDING THE CHAIN: The Surprising Challenge of Integrating Purchasing and Logistics, A REPORT BY THE SUPPLY CHAIN MANAGEMENT FACULTY AT THE UNIVERSITY OF TENNESSEE, SPRING 2014; GAME-CHANGING TRENDS IN SUPPLY CHAIN, FIRST ANNUAL REPORT BY THE SUPPLY CHAIN MANAGEMENT FACULTY AT THE UNIVERSITY OF TENNESSEE, SPRING 2013. These University of Tennessee Supply Chain Management white papers can be downloaded by going to the Publications section at gsci.utk.edu. -

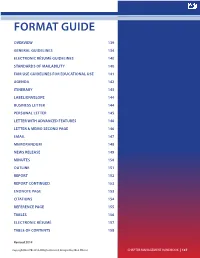

Format Guide

FORMAT GUIDE: OVERVIEW; GENERAL GUIDELINES; ELECTRONIC RÉSUMÉ GUIDELINES; STANDARDS OF MAILABILITY; FAIR USE GUIDELINES FOR EDUCATIONAL USE; AGENDA; ITINERARY; LABEL/ENVELOPE; BUSINESS LETTER; PERSONAL LETTER; LETTER WITH ADVANCED FEATURES; LETTER & MEMO SECOND PAGE; EMAIL; MEMORANDUM; NEWS RELEASE; MINUTES; OUTLINE; REPORT; REPORT CONTINUED; ENDNOTE PAGE; CITATIONS; REFERENCE PAGE; TABLES; ELECTRONIC RÉSUMÉ; TABLE OF CONTENTS. Revised 2014 Copyright FBLA-PBL 2014. All Rights Reserved. Designed by: FBLA-PBL, Inc OVERVIEW In today’s business world, communication is consistently expressed through writing. Successful businesses require a consistent message throughout the organization. A foundation of this strategy is the use of a format guide, which enables a corporation to maintain a uniform image through all its communications. Use this guide to prepare for Computer Applications and Word Processing skill events. GENERAL GUIDELINES Font Size: 11 or 12. Font Style: Times New Roman, Arial, Calibri, or Cambria. Spacing: 1 space after punctuation ending a sentence (stay consistent within the document); 1 space after a semicolon; 1 space after a comma; 1 space after a colon (stay consistent within the document); 1 space between state abbreviation and zip code. Letters: Block Style with Open Punctuation. Top Margin: 2 inches. Side and Bottom Margins: 1 inch. Bulleted Lists: Single space individual items; double space between items -

AN EXPERIENTIAL EXERCISE in PERSONNEL SELECTION “Be

AN EXPERIENTIAL EXERCISE IN PERSONNEL SELECTION “Be careful what you wish for.” An experiential exercise in personnel selection Submitted to the Experiential Learning Association Eastern Academy of Management Abstract Personnel selection is a key topic in Human Resource Management (HRM) courses. This exercise intends to help students in HRM courses understand fundamental tasks in the selection process. Groups of students act as management teams to determine the suitability of applicants for a job posting for the position of instructor for a future offering of an HRM course. At the start of the exercise, the tasks include determining desirable qualifications and developing and ranking selection criteria based on the job posting and discussions among team members. Subsequently, each group reviews three resumes of fictitious candidates and ranks them based on the selection criteria. A group reflection and plenary discussion follow. Teaching notes, examples of classroom use and student responses are provided. Keywords: Personnel selection, HRM, experiential exercise Selection is a key topic in Human Resource Management (HRM) courses and yet, selection exercises that can engage students in learning about the selection process are not abundant. Part of the challenge lies in providing students with a context to which they can relate. Many selection exercises focus on management situations that are unfamiliar for students in introductory HRM courses. Consequently, this exercise uses a context in which students have some knowledge – what they consider a good candidate for the position of sessional instructor for a future HRM course. -

Assessment Methods in Recruitment, Selection & Performance

ASSESSMENT METHODS IN RECRUITMENT, SELECTION & PERFORMANCE A manager’s guide to psychometric testing, interviews and assessment centres Robert Edenborough London and Sterling, VA To all the people whom I have studied, assessed and counselled over the last 40 years Publisher’s note Every possible effort has been made to ensure that the information contained in this book is accurate at the time of going to press, and the publisher and author cannot accept responsibility for any errors or omissions, however caused. No responsibility for loss or damage occasioned to any person acting, or refraining from action, as a result of the material in this publication can be accepted by the editor, the publisher or the author. First published in Great Britain and the United States in 2005 by Kogan Page Limited Apart from any fair dealing for the purposes of research or private study, or criticism or review, as permitted under the Copyright, Designs and Patents Act 1988, this publication may only be reproduced, stored or transmitted, in any form or by any means, with the prior permission in writing of the publishers, or in the case of reprographic reproduction in accordance with the terms and licences issued by the CLA. Enquiries concerning reproduction outside these terms should be sent to the publishers at the undermentioned addresses: 120 Pentonville Road, London N1 9JN, United Kingdom; 22883 Quicksilver Drive, Sterling VA 20166-2012, USA www.kogan-page.co.uk © Robert Edenborough, 2005 The right of Robert Edenborough to be identified as the author of this work has been asserted by him in accordance with the Copyright, Designs and Patents Act 1988. -

Radio 4 Listings for 2 – 8 May 2020

Radio 4 Listings for 2 – 8 May 2020 SATURDAY 02 MAY 2020 SAT 00:00 Midnight News (m000hq2x) The latest news and weather forecast from BBC Radio 4. SAT 00:30 Intrigue (m0009t2b) Tunnel 29 10: The Shoes “I started dancing with Eveline.” A final twist in the final chapter. Thirty years after the fall of the Berlin Wall, Helena Merriman tells the extraordinary true story of a man who dug a tunnel into the East, right under the feet of border guards, to help friends, family and strangers escape. Professor Martin Ashley, Consultant in Restorative Dentistry at the University Dental Hospital of Manchester, is on hand to separate the science fact from the science fiction. Presenter: Greg Foot Producer: Beth Eastwood SAT 06:00 News and Papers (m000htmx) The latest news headlines. Including the weather and a look at the papers. SAT 06:07 Open Country (m000hpdg) Closed Country: A Spring Audio-Diary with Brett Westwood It seems hard to believe, when so many of us are coping with panel of culinary experts from their kitchens at home - Tim Anderson, Andi Oliver, Jeremy Pang and Dr Zoe Laughlin answer questions sent in via email and social media. This week, the panellists discuss the perfect fry-up, including whether or not the tomato has a place on the plate, and recommend uses for tinned tuna (that aren't a pasta bake). Producer: Hannah Newton Assistant Producer: Rosie Merotra A Somethin' Else production for BBC Radio 4 SAT 11:00 The Week in Westminster (m000j0kg) Radio 4's assessment of developments at Westminster -

Digitalization in Supply Chain Management and Logistics: Smart and Digital Solutions for an Industry 4.0 Environment

EconStor: Make Your Publications Visible. A Service of ZBW, Leibniz-Informationszentrum Wirtschaft (Leibniz Information Centre for Economics). Kersten, Wolfgang (Ed.); Blecker, Thorsten (Ed.); Ringle, Christian M. (Ed.) Proceedings Digitalization in Supply Chain Management and Logistics: Smart and Digital Solutions for an Industry 4.0 Environment Proceedings of the Hamburg International Conference of Logistics (HICL), No. 23 Provided in Cooperation with: Hamburg University of Technology (TUHH), Institute of Business Logistics and General Management Suggested Citation: Kersten, Wolfgang (Ed.); Blecker, Thorsten (Ed.); Ringle, Christian M. (Ed.) (2017) : Digitalization in Supply Chain Management and Logistics: Smart and Digital Solutions for an Industry 4.0 Environment, Proceedings of the Hamburg International Conference of Logistics (HICL), No. 23, ISBN 978-3-7450-4328-0, epubli GmbH, Berlin, http://dx.doi.org/10.15480/882.1442 This Version is available at: http://hdl.handle.net/10419/209192 Terms of use: Documents in EconStor may be saved and copied for your personal and scholarly purposes. You are not to copy documents for public or commercial purposes, to exhibit the documents publicly, to make them publicly available on the internet, or to distribute or otherwise use the documents in public. If the documents have been made available under an Open Content Licence (especially Creative Commons Licences), the usage rights granted in the licence named there apply in place of these terms of use. -

NRECA Electric Cooperative Employee Competencies 1.24.2020

The knowledge, skills, and abilities that support successful performance for ALL cooperative employees, regardless of the individual’s role or expertise. BUSINESS ACUMEN: Integrates business, organizational and industry knowledge to one’s own job performance. Electric Cooperative Business Fundamentals: Integrates knowledge of internal and external cooperative business and industry principles, structures and processes into daily practice. Technical Credibility: Keeps current in area of expertise and demonstrates competency within areas of functional responsibility. Political Savvy: Understands the impacts of internal and external political dynamics. Resource Management: Uses resources to accomplish objectives and goals. Service and Community Orientation: Anticipates and meets the needs of internal and external customers and stakeholders. Technology Management: Keeps current on developments and leverages technology to meet goals. PERSONAL EFFECTIVENESS: Demonstrates a professional presence and a commitment to effective job performance. Accountability and Dependability: Takes personal responsibility for the quality and timeliness of work and achieves results with little oversight. Business Etiquette: Maintains a professional presence in business settings. Ethics and Integrity: Adheres to professional standards and acts in an honest, fair and trustworthy manner. Safety Focus: Adheres to all occupational safety laws, regulations, standards, and practices. Self-Management: Manages own time, priorities, and resources to achieve goals. Self-Awareness / Continual Learning: Displays an ongoing commitment to learning and self-improvement. INTERACTIONS WITH OTHERS: Builds constructive working relationships characterized by a high level of acceptance, cooperation, and mutual respect. Collaboration/Engagement: Develops networks and alliances to build strategic relationships and achieve common goals. Interpersonal Skills: Treats others with courtesy, sensitivity, and respect. -

ASPPB 2016 JTA Report

Association of State and Provincial Psychology Boards Examination for Professional Practice in Psychology (EPPP) Job Task Analysis Report May 19, 2016–September 30, 2016 Prepared by: Pearson VUE November 2016 Copyright © 2016 NCS Pearson, Inc. All rights reserved. PEARSON logo is a trademark in the U.S. and/or other countries. Table of Contents: Scope of Work; Glossary; Executive Summary; Job Task Analysis; Expert Panel Meeting; Developing the Survey; Piloting the Survey; Administering the Survey; Analyzing the Survey; Test Specifications Meeting -

Talent Management Strategies for Public Procurement Professionals in Global Organizations

TALENT MANAGEMENT STRATEGIES FOR PUBLIC PROCUREMENT PROFESSIONALS IN GLOBAL ORGANIZATIONS Denise Bailey Clark* ABSTRACT. The purpose of this paper is to examine the talent management strategies used for public procurement professionals in global organizations. Components of talent management are discussed, including talent acquisition, strategic workforce planning, competitive total rewards, professional development, performance management, and succession planning. This paper will also survey how the global labor supply is affected by shifting demographics and how these shifts will influence the ability of multinational enterprises (MNEs) to recruit for talent in the emerging global markets. The current shift in demographics plays a critical role in the ability to find procurement talent, therefore increasing the need to attract, develop, and retain appropriate talent. Department of Defense (DOD) procurement credentialing is used as a case study to gather "sustainability" data that can be applied across all organizations, both public and private. This study used a systematic review of current literature to gather information that identifies strategies organizations can use to develop the skills of procurement professionals. The key finding is that it is imperative to the survival of any business to understand how talent management strategies influence the ability to attract and sustain qualified procurement professionals. * Denise Bailey Clark is currently a University of Maryland University College (UMUC) Doctorate of Management (DM) candidate (expected 2012). She holds a Master of Arts degree from Bowie State University in Human Resources Development and a Bachelor of Arts degree from Towson State University in Business Administration with a minor in Psychology. Ms. Bailey Clark is the Associate Vice President of Human Resources for the College of Southern Maryland (CSM). -

Compensation and Talent Management Committee Charter

COMPENSATION AND TALENT MANAGEMENT COMMITTEE CHARTER Purpose The Compensation and Talent Management Committee (“Committee”) shall assist the Board of Directors (the “Board”) of La-Z-Boy Incorporated (the “Company”) in fulfilling its responsibility to oversee the adoption and administration of fair and competitive executive and outside director compensation programs and leadership and talent management. Governance 1. Membership: The Committee shall be composed of not fewer than three members of the Board, each of whom shall serve until such member’s successor is duly elected and qualified or until such member resigns or is removed. Each member of the Committee shall (A) satisfy the independence and other applicable requirements of the New York Stock Exchange listing standards, the Securities Exchange Act of 1934 (the “Exchange Act”) and the rules and regulations of the Securities and Exchange Commission (“SEC”), as may be in effect from time to time, (B) be a "non-employee director" as that term is defined under Rule 16b-3 promulgated under the Exchange Act, and (C) be an "outside director" as that term is defined for purposes of the Internal Revenue Code. The Board of Directors shall determine the "independence" of directors for this purpose, as evidenced by its appointment of such Committee members. Based on the recommendations of the Nominating and Corporate Governance Committee, the Board shall review the composition of the Committee annually and fill vacancies. The Board shall elect the Chairman of the Committee, who shall chair the Committee’s meetings. Members of the Committee may be removed, with or without cause, by a majority vote of the Board. -

Personnel Selection

Journal of Occupational and Organizational Psychology (2001), 74, 441–472 Printed in Great Britain © 2001 The British Psychological Society Personnel selection Ivan T. Robertson* and Mike Smith Manchester School of Management, UMIST, UK The main elements in the design and validation of personnel selection procedures have been in place for many years. The role of job analysis, contemporary models of work performance and criteria are reviewed critically. After identifying some important issues and reviewing research work on attracting applicants, including applicant perceptions of personnel selection processes, the research on major personnel selection methods is reviewed. Recent work on cognitive ability has confirmed the good criterion-related validity, but problems of adverse impact remain. Work on personality is progressing beyond studies designed simply to explore the criterion-related validity of personality. Interview and assessment centre research is reviewed, and recent studies indicating the key constructs measured by both are discussed. In both cases, one of the key constructs measured seems to be generally cognitive ability. Biodata validity and the processes used to develop biodata instruments are also critically reviewed. The article concludes with a critical evaluation of the processes for obtaining validity evidence (primarily from meta-analyses) and the limitations of the current state of the art. Speculative future prospects are briefly reviewed. This article focuses on personnel selection research. Much contemporary practice within personnel selection has been influenced by the -

To Make Individual

SEMESTER EXAMS WILL BE GIVEN JANUARY 18-27 REGISTRATION FOR NEXT TERM WILL BEGIN JANUARY 17 The Davidsonian Alenda Lux Ubi Orta Libertas Vol. XXI DAVIDSON COLLEGE, DAVIDSON, N. C., JANUARY 10, 1934 No. 14 Zamsky Will Return Coach Praised Large Percentage Soph. Houseparty Examinations Nine Davidson Men Mid-semester examinations To Make Individual Of Students Make Proves Itself To will begin on Thursday, January Represent College 18. Recess for the examination Pledges period will begin on Wednesday, Tryout Year-Book Photos Gift Fund Be Great Success the day before. The examination At Rhodes period will close on Saturday, Jointly Many Students Express Desire to North and South Lead Tommy Tucker and His Cali- January 27. Barnett of U. N. C., and Lassiter Made Percentage fornians The second semes- Have Photographs Dormitories in Provide Music for ter will officially begin on Sun- of Yale Are North Carolina During This Visit of Contributions Week-end Dec. 15 day, January 28, at 11 o'clock Representatives a. m. WILL COME IN FEBRUARY RUMPLE COMES SECOND LARGE ATTENDANCE DR. VOWLES ATTENDS College work after the Christ- Class Pictures to Be Sent to Pub- Money Will Go for Relief of Armory Auditorium Decorated mas recess was resumed at Da- Barnett, Gordon, Booth, and Pol- lishers Soon Davidson Unity Church for Pre-Xmas Dances vidson Thursday, January 4, at lack Are Winners which time registration fees for Pledges toward the annual Y. M. The class held its an- the second semester were paid at Williams, of the 1934 Jack editor C. A. fund, which this year goes to nual house party this year the week- the During the holidays nine Davidson year definitely announced treasurer's office.