"TL;DR:" Out-Of-Context Adversarial Text Summarization and Hashtag Recommendation

Recommended publications

-

Ronald Reagan Presidential Library Digital Library Collections This

Ronald Reagan Presidential Library Digital Library Collections. This is a PDF of a folder from our textual collections. Collection: Cohen, Benedict S.: Files. Folder Title: Robert Bork – White House Study, Joseph Biden Critique (3 of 3). Box: CFOA 1339. To see more digitized collections visit: https://www.reaganlibrary.gov/archives/digitized-textual-material To see all Ronald Reagan Presidential Library inventories visit: https://www.reaganlibrary.gov/archives/white-house-inventories Contact a reference archivist at: [email protected] Citation Guidelines: https://reaganlibrary.gov/archives/research-support/citation-guide National Archives Catalogue: https://catalog.archives.gov/ ERRORS AND OMISSIONS IN THE "RESPONSE PREPARED TO WHITE HOUSE ANALYSIS OF JUDGE BORK'S RECORD" September 8, 1987 HIGHLIGHTS On Thursday, September 3, Senator Biden issued a report prepared at his request reviewing Judge Bork's record. The report criticized an earlier White House document performing a similar review on the ground that it "contain[ed] a number of inaccuracies" and that "the picture it paint[ed] of Judge Bork is a distortion of his record." It claimed that it would undertake "to depict Judge Bork's record more fully and accurately." In fact, however, the Biden Report is a highly partisan and incomplete portrayal of the nominee. If it were a brief filed on the question, its misleading assertions and omissions go far enough beyond the limits of zealous advocacy to warrant Rule 11 sanctions. It contains at least 81 clear distortions and mischaracterizations. Among the most significant: The Report claims that Judge Bork's perfect record of nonreversal by the Supreme Court in the more than 400 majority opinions he has written or joined is "uninformative" -- flippantly dismissing the clearest, most extensive, and most recent evidence of Judge Bork's views. -

Eric Johnson Producer of Recode Decode with Kara Swisher Vox Media

FIRESIDE Q&A How podcasting can take social media storytelling ‘to infinity and beyond’ Eric Johnson Producer of Recode Decode with Kara Swisher Vox Media Rebecca Reed Host Off the Assembly Line Podcast 1. Let’s start out by introducing yourself—and telling us about your favorite podcast episode or interview. What made it great? Eric Johnson: My name is Eric Johnson and I'm the producer of Recode Decode with Kara Swisher, which is part of the Vox Media Podcast Network. My favorite episode of the show is the interview Kara conducted with Facebook CEO Mark Zuckerberg — an in-depth, probing discussion about how the company was doing after the Cambridge Analytica scandal and the extent of its power. In particular, I really liked the way Kara pressed Zuckerberg to explain how he felt about the possibility that his company's software was enabling genocide in Myanmar; his inability to respond emotionally to the tragedy was revealing, and because this was a podcast and not a TV interview she was able to patiently keep asking the question, rather than moving on to the next topic. Rebecca Reed: My name is Rebecca Reed and I’m an Education Consultant and Host of the Off the Assembly Line podcast. Off the Assembly Line is your weekly dose of possibility-sparking conversation with the educators and entrepreneurs bringing the future to education. My favorite episode was an interview with Julia Freeland Fisher, author of Who You Know. We talked about the power of student social capital, and the little known role it plays in the so-called “achievement gap” (or disparity in test scores from one school/district to the next). -

Tech That Reality Check Making Money from News

NEWS IN THE DIGITAL AGE, November 2018. REALITY CHECK: Using technology to combat misinformation. CONTINENTAL SHIFT: NBC News International’s Deborah Turness on covering a divided Europe. MAKING MONEY FROM NEWS: Industry leaders across Europe share their views. TECH THAT: Check out the smart tools reshaping reporting. Paid Post by Google. This content was produced by the advertising department of the Financial Times, in collaboration with Google. Digital News Innovation Fund: 30 European countries, 559 projects, €115M in funding. g.co/newsinitiative GoogleNewsInitiative.ft.com Foreword: THE FUTURE OF NEWS. In 2015, Google launched the Digital News Innovation Fund (DNI Fund) to stimulate innovation across the European news industry. The DNI Fund supports ambitious projects in digital journalism across a range of areas – from creating open-source technology that improves revenue streams to investing in quality, data-driven investigative journalism. Google asked a dozen leaders from the industry to allocate a total of €150m to projects submitted by media companies and start-ups – no strings attached: all intellectual property remains with the companies themselves. To date, we’ve selected 559 projects across 30 countries, supporting them with more than €115m. But it’s not just about the money. The DNI Fund provides space and opportunity to take risks and experiment. In the media industry, many players don’t compete with each other across borders. We are also proud to have fostered publishers working together to tackle their common challenges, through technological collaboration. Ludovic Blecher, Head of the Digital News Innovation Fund. Veit Dengler, Executive board member, -

Trumpism on College Campuses

UC San Diego Previously Published Works. Title: Trumpism on College Campuses. Permalink: https://escholarship.org/uc/item/1d51s5hk Journal: QUALITATIVE SOCIOLOGY, 43(2). ISSN: 0162-0436. Authors: Kidder, Jeffrey L; Binder, Amy J. Publication Date: 2020-06-01. DOI: 10.1007/s11133-020-09446-z Peer reviewed. eScholarship.org, powered by the California Digital Library, University of California. Qualitative Sociology (2020) 43:145–163 https://doi.org/10.1007/s11133-020-09446-z Trumpism on College Campuses. Jeffrey L. Kidder & Amy J. Binder. Published online: 1 February 2020. © Springer Science+Business Media, LLC, part of Springer Nature 2020. Abstract: In this paper, we report data from interviews with members of conservative political clubs at four flagship public universities. First, we categorize these students into three analytically distinct orientations regarding Donald Trump and his presidency (or what we call Trumpism). There are principled rejecters, true believers, and satisficed partisans. We argue that Trumpism is a disunifying symbol in our respondents’ self-narratives. Specifically, right-leaning collegians use Trumpism to draw distinctions over the appropriate meaning of conservatism. Second, we show how political clubs sort and shape orientations to Trumpism. As such, our work reveals how student-led groups can play a significant role in making different political discourses available on campuses and shaping the types of activism pursued by club members, both of which have potentially serious implications for the content and character of American democracy moving forward. Keywords: American politics · Conservatism · Culture · Higher education · Identity · Organizations. Introduction: Donald Trump, first as a candidate and now as the president, has been an exceptionally divisive force in American politics, even among conservatives who typically vote Republican. -

Netflix and the Development of the Internet Television Network

Syracuse University SURFACE. Dissertations - ALL. May 2016. Netflix and the Development of the Internet Television Network. Laura Osur, Syracuse University. Follow this and additional works at: https://surface.syr.edu/etd Part of the Social and Behavioral Sciences Commons. Recommended Citation: Osur, Laura, "Netflix and the Development of the Internet Television Network" (2016). Dissertations - ALL. 448. https://surface.syr.edu/etd/448 This Dissertation is brought to you for free and open access by the SURFACE at SURFACE. It has been accepted for inclusion in Dissertations - ALL by an authorized administrator of SURFACE. For more information, please contact [email protected]. Abstract: When Netflix launched in April 1998, Internet video was in its infancy. Eighteen years later, Netflix has developed into the first truly global Internet TV network. Many books have been written about the five broadcast networks – NBC, CBS, ABC, Fox, and the CW – and many about the major cable networks – HBO, CNN, MTV, Nickelodeon, just to name a few – and this is the fitting time to undertake a detailed analysis of how Netflix, as the preeminent Internet TV network, has come to be. This book, then, combines historical, industrial, and textual analysis to investigate, contextualize, and historicize Netflix's development as an Internet TV network. The book is split into four chapters. The first explores the ways in which Netflix's development during its early years as a DVD-by-mail company – 1998-2007, a period I am calling "Netflix as Rental Company" – laid the foundations for the company's future iterations and successes. During this period, Netflix adapted DVD distribution to the Internet, revolutionizing the way viewers receive, watch, and choose content, and built a brand reputation on consumer-centric innovation. -

Vox Media Turns to Cloudian Hyperstore to Meet Growing Archive Demand

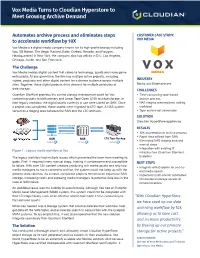

Vox Media Turns to Cloudian Hyperstore to Meet Growing Archive Demand. Automates archive process and eliminates steps to accelerate workflow by 10X. CUSTOMER CASE STUDY: VOX MEDIA. Vox Media is a digital media company known for its high-profile brands including Vox, SB Nation, The Verge, Racked, Eater, Curbed, Recode, and Polygon. Headquartered in New York, the company also has offices in D.C, Los Angeles, Chicago, Austin, and San Francisco. The Challenge: Vox Media creates digital content that caters to technology, sports and video game enthusiasts. At any given time, the firm has multiple active projects, including videos, podcasts and other digital content for a diverse audience across multiple sites. Together, these digital projects drive demand for multiple petabytes of data storage. Quantum StorNext provides the central storage management point for Vox, connecting users to both primary and Linear Tape Open (LTO) archival storage. In their legacy workflow, the digital assets currently in use were stored on SAN. Once a project was completed, those assets were migrated to LTO tape. A NAS system served as a staging area between the SAN and the LTO archives. Figure 1: Legacy media workflow at Vox (SAN, NAS, LTO Tape Backup; project complete, project retrieval). The legacy workflow had multiple issues which prevented the team from meeting its goals. INDUSTRY: Media and Entertainment. CHALLENGES: Time-consuming tape-based archive process; NAS staging area required, adding workload; Tape archive not searchable. SOLUTION: Cloudian HyperStore appliances. RESULTS: 10X acceleration of archive process; Rapid data offload from SAN; Eliminated NAS staging area and manual steps; Integration with existing IT infrastructure (Quantum StorNext, Evolphin). -

Social Media Immunity in 2021 and Beyond: Will Platforms Continue to Avoid Litigation Exposure Faced by Offline Counterparts

Social Media Immunity in 2021 and Beyond: Will Platforms Continue to Avoid Litigation Exposure Faced by Offline Counterparts. By: Peter J. Pizzi. Peter J. Pizzi, CIPPE/US, is a business trial lawyer with decades of experience in commercial litigation, internal investigations, technology litigation, eDiscovery, and labor and employment law. A Certified Civil Trial Attorney, Peter represents corporate clients in a broad array of industries, including healthcare, pharmaceuticals, financial services, information technology, cosmetics, industrial equipment, food service and others. Recent highlights include a federal court jury verdict on a contract claim arising from an acquisition and pre-discovery dismissals of consumer class actions in the convenience store retail and private aviation industries. A member of the New Jersey, New York and Pennsylvania bars, Peter is former Chair of IADC’s Cyber Security, Data Privacy and Technology Committee. During the 2020 election cycle, both the United States presidential candidates called for changes to Section 230 of the Communications Decency Act of 1996, which protects online service providers like social media companies from being held liable for transmitting or taking down user-generated content (UGC). Twice in 2020, the Senate held hearings, with both sides demanding change to the statute and to platform moderation practices.1 Then, following the January 6, 2021 Capitol Hill riot, Donald Trump and others within his circle were de-platformed by Twitter, Facebook, and YouTube. I. Origins. Defamation actions brought in 1 Cat Zakrzewski and Rachel Lerman, The election was a chance for Facebook and Twitter to show they could control misinformation. Now lawmakers are grilling them on it, WASH. POST (Nov. 17, 2020), https://www.washingtonpost.com/techno DEFENSE COUNSEL JOURNAL | JULY 2021 -

Vox: a New Far Right in Spain?

VOX: A NEW FAR RIGHT IN SPAIN? By Vicente Rubio-Pueyo
Table of Contents
Confronting the Far Right
VOX: A New Far Right in Spain? By Vicente Rubio-Pueyo
A Politico-Cultural Genealogy
The Neocon Shift and (Spanish) Constitutional Patriotism
New Methods, New Media
The Catalonian Crisis
Organizational Trajectories within the Spanish Right
International Connections
VOX, PP and Ciudadanos: Effects within the Right’s Political Field
Populist or Neoliberal Far Right? VOX’s Platform
The “Living Spain”: VOX’s Discourse and Its Enemies
“Make Spain Great Again”: VOX Historical Vision
-

Britain in Psychological Distress: the EU Referendum and the Psychological Operations of the Two Opposing Sides

SCHOOL OF SOCIAL SCIENCES, HUMANITIES AND ARTS. DEPARTMENT OF INTERNATIONAL AND EUROPEAN STUDIES. MASTER’S DEGREE OF INTERNATIONAL PUBLIC ADMINISTRATION. Britain in psychological distress: The EU referendum and the psychological operations of the two opposing sides. By: Eleni Mokka. Professor: Spyridon Litsas. MIPA, Thessaloniki, 2019.
TABLE OF CONTENTS
Summary
INTRODUCTION
CHAPTER ONE: OVERVIEW OF PSYCHOLOGICAL OPERATIONS
A. Definition and Analysis
B. Propaganda: Techniques involving Language Manipulation
1. Basic Propaganda Devices
2. Logical Fallacies
C. Propaganda: Non-Verbal Techniques
1. Opinion Polls
2. Statistics
CHAPTER TWO: BRITAIN'S EU REFERENDUM
A. Euroscepticism in Britain since 70's
B. Brexit vs. Bremain: Methods, Techniques and Rhetoric
1. Membership, Designation and Campaigns' Strategy
1.a. 'Leave' Campaign
1.b. 'Remain' Campaign
1.c. Labour In for Britain
1.d. Conservatives for Britain
2. The Deal
3. Project Fear
4. Trade and Security; Barack Obama's visit
5. Budget and Economic Arguments
6. Ad Hominem
-

Response Opposing Committee to Bridge the Gap 820920 Motion To

DOCKETED USNRC. UNITED STATES OF AMERICA NUCLEAR REGULATORY COMMISSION BEFORE THE ATOMIC SAFETY AND LICENSING BOARD. In the Matter of THE REGENTS OF THE UNIVERSITY OF CALIFORNIA (UCLA Research Reactor). Docket No. 50-142 (Proposed Renewal of Facility License Number R-71). October 8, 1982. UNIVERSITY'S RESPONSE TO CBG'S MOTION OF SEPTEMBER 20, 1982. DONALD L. REIDHAAR, GLENN R. WOODS, CHRISTINE HELWICK, 590 University Hall, 2200 University Avenue, Berkeley, California 94720. Telephone: (415) 642-2822. Attorneys for Applicant THE REGENTS OF THE UNIVERSITY OF CALIFORNIA. 8210150456 821008 PDR ADOCK 05000142 O PDR. I. INTRODUCTION. In its Order dated September 28, 1982 the Board suspended the schedule for responses to summary disposition motions and directed the parties to respond to CBG's "Motion to Summarily Dismiss Staff and Applicant Motions for Summary Disposition, or Alternative Relief as to Same," (the Motion) dated September 20, 1982. The parties were also directed to respond to the City of Santa Monica's letter of September 20, 1982 requesting an extension of time to respond to the summary disposition motions. The Board requested that the parties' responses be received by October 8, 1982. University objects to all manner of specific relief requested by CBG in its Motion as unwarranted under the Commission's Rules of Practice considered in light of the particular circumstances of this proceeding. Properly construed the Commission's rules preclude the summary dismissal of the Staff and University motions since those motions have not been submitted shortly before a hearing that is set to commence or which has commenced, but instead according to the schedule specifically set by the Board for the filing of such motions. -

On “Parler Vrai” Reflection Over the Political Languages of the (Dis)Trust in Le Bon Gouvernement by Pierre Rosanvallon

SYMPOSIUM: LE BON GOUVERNEMENT. ON “PARLER VRAI”: REFLECTION OVER THE POLITICAL LANGUAGES OF THE (DIS)TRUST IN LE BON GOUVERNEMENT BY PIERRE ROSANVALLON. BY ERASMO SILVIO STORACE. © 2017 – Philosophy and Public Issues (New Series), Vol. 7, No. 3 (2017): 97-122, Luiss University Press, E-ISSN 2240-7987 | P-ISSN 1591-0660. There will be good governance only when philosophers become kings or the kings become philosophers [Plato]. Having devoted a large part of his own research to the history of democracy, and in particular focusing on the French political model, Pierre Rosanvallon developed, among the basic ideas of his thought, the concept for which between citizens and political class there was an increasingly unbearable distance, thus producing the loss of trust that ruled people could pin on leaders, and creating a wide space of “active distrust”. Hence, first of all, the idea of establishing a “counter-democracy”, founded on new ideals on which the daily work of “counter-democracy” citizens should be based: “monitoring”, “prevention” and “judging”. (As evidenced from one of his own reference texts, Counterdemocracy1, dated 2006, in which the idea of current democracies citizens abandoning the streets in order to take refuge in the sphere of private life, for example, is reconsidered, etc.). In addition to that, it is necessary to reflect on 1 P. Rosanvallon, La contre-démocratie. La politique à l'âge de la défiance (Paris: Seuil, 2006). -

The New York Times 2014 Innovation Report

Innovation. March 24, 2014. Executive Summary. Introduction. The New York Times is winning at journalism. Of all the challenges facing a media company in the digital age, producing great journalism is the hardest. Our daily report is deep, broad, smart and engaging, and we’ve got a huge lead over the competition. At the same time, we are falling behind in a second critical area: the art and science of getting our journalism to readers. We have always cared about the reach and impact of our work, but we haven’t done enough to crack that code in the digital era. This is where our competitors are pushing ahead of us. The Washington Post and The Wall Street Journal have announced aggressive moves in recent months to remake themselves for this age. First Look Media and Vox Media are creating newsrooms custom-built for digital. … and Flipboard often get more traffic from Times journalism than we do. In contrast, over the last year The Times has watched readership fall significantly. Not only is the audience on our website shrinking but our audience on our smartphone apps has dipped, an extremely worrying sign on a growing platform. Our core mission remains producing the world’s best journalism. But with the endless upheaval in technology, reader habits and the entire business model, The Times needs to pursue smart new strategies for growing our audience. The urgency is only growing because digital media is getting more crowded, better funded and far more innovative. The first section of this report explores in detail the need for the newsroom to take the lead in getting more readers to spend more time reading more