Where Are the Semantics in the Semantic Web?



Recommended publications


Panel: Large Knowledge Bases

From: AAAI Technical Report SS-02-06. Compilation copyright © 2002, AAAI (www.aaai.org). All rights reserved. Panel: Large Knowledge Bases. Adam Pease (Teknowledge, chair), Chris Welty (Vassar College), Pat Hayes (U. West Florida), Anthony G. Cohn (U. Leeds), Ken Murray (SRI). Abstract: It is estimated that 1-2 exabytes of data is now being generated each year, almost all of it in purely digital form (Lyman et al., 2000). Properly structured, this information could form a global knowledge base. Currently, however, this information exists in many different forms, many of which are only suitable for human consumption, and which are largely opaque to computer-based understanding. Major efforts to build large formal ontologies or address issues in their construction have been undertaken, funded by the government in the US, such as the DARPA Knowledge Sharing Effort (Patil et al., 1992), High Performance Knowledge Bases (Cohen et al., 1998), and Rapid Knowledge Formation (RKF, 2002), and in Europe, including Advanced Knowledge Technologies (Shadbolt, 2001) and OntoWeb (OntoWeb, 2002), as well as international standards efforts such as the IEEE Standard Upper Ontology (Niles & Pease, 2001) [...]

References: Fellbaum, C. (1998). WordNet, An Electronic Lexical Database. MIT Press. Lenat, D. (1995). "Cyc: A Large-Scale Investment in Knowledge Infrastructure". Communications of the ACM 38, no. 11, November. See also http://www.cyc.com. Lyman, P., Varian, H., Dunn, J., Strygin, A., Swearingen, K. (2000). How Much Information? University of California, Berkeley. http://www.sims.berkeley.edu/research/projects/how-much-info. Niles, I., & Pease, A. (2001). Toward a Standard Upper Ontology. In Proceedings of the 2nd International Conference on Formal Ontology in Information Systems (FOIS-2001). See also http://ontology.teknowledge.com and http://suo.ieee.org. OntoWeb (2002). [...]

A Critique of Pure Reason

A critique of pure reason. Drew McDermott, Yale University, New Haven, CT 06520, U.S.A. Comput. Intell. 3, 151-160 (1987). In 1978, Patrick Hayes promulgated the Naive Physics Manifesto. (It finally appeared as an “official” publication in Hobbs and Moore 1985.) In this paper, he proposed that an all-out effort be mounted to formalize commonsense knowledge, using first-order logic as a notation. This effort had its roots in earlier research, especially the work of John McCarthy, but the scope of Hayes’s proposal was new and ambitious. He suggested that the use of Tarskian semantics could allow us to study a large volume of knowledge-representation problems free from the confines of computer programs. The suggestion inspired a small community of people to actually try to write down all (or most) of commonsense knowledge in predicate [...] the knowledge that programs must have before we write the programs themselves. We know what this knowledge is; it’s what everybody knows, about physics, about time and space, about human relationships and behavior. If we attempt to write the programs first, experience shows that the knowledge will be shortchanged. The tendency will be to oversimplify what people actually know in order to get a program that works. On the other hand, if we free ourselves from the exigencies of hacking, then we can focus on the actual knowledge in all its complexity. Once we have a rich theory of the commonsense world, we can try to embody it in programs. This theory will become an indispensable aid to writing those programs.

Semantic Integration and Knowledge Discovery for Environmental Research

Journal of Database Management, 18(1), 43-67, January-March 2007. Semantic Integration and Knowledge Discovery for Environmental Research. Zhiyuan Chen, University of Maryland, Baltimore County (UMBC), USA; Aryya Gangopadhyay, University of Maryland, Baltimore County (UMBC), USA; George Karabatis, University of Maryland, Baltimore County (UMBC), USA; Michael McGuire, University of Maryland, Baltimore County (UMBC), USA; Claire Welty, University of Maryland, Baltimore County (UMBC), USA. ABSTRACT Environmental research and knowledge discovery both require extensive use of data stored in various sources and created in different ways for diverse purposes. We describe a new metadata approach to elicit semantic information from environmental data and implement semantics-based techniques to assist users in integrating, navigating, and mining multiple environmental data sources. Our system contains specifications of various environmental data sources and the relationships that are formed among them. User requests are augmented with semantically related data sources and automatically presented as a visual semantic network. In addition, we present a methodology for data navigation and pattern discovery using multi-resolution browsing and data mining. The data semantics are captured and utilized in terms of their patterns and trends at multiple levels of resolution. We present the efficacy of our methodology through experimental results. Keywords: environmental research, knowledge discovery and navigation, semantic integration, semantic networks,

Ontologies and Semantic Web for the Internet of Things - a Survey

Conference Paper · October 2016. DOI: 10.1109/IECON.2016.7793744. Ontologies and Semantic Web for the Internet of Things – A Survey. Ioan Szilagyi, Patrice Wira, MIPS Laboratory, University of Haute-Alsace, Mulhouse, France, {ioan.szilagyi; patrice.wira}@uha.fr. Abstract: The reality of Internet of Things (IoT), with its growing number of devices and their diversity, is challenging current approaches and technologies for a smarter integration of their data, applications and services. While the Web is seen as a convenient platform for integrating things, the Semantic Web can further improve its capacity to understand things’ data and facilitate their interoperability. In this paper we present an overview of some of the Semantic Web technologies used in IoT [...] one of the most important tasks in an IoT system [6]. Providing interoperability among the things is “one of the most fundamental requirements to support object addressing, tracking and discovery as well as information representation, storage, and exchange” [4]. There is consensus that Semantic Technologies is the appropriate tool to address the diversity of Things [4], [7]–[9].

RDFa in XHTML: Syntax and Processing

RDFa in XHTML: Syntax and Processing. A collection of attributes and processing rules for extending XHTML to support RDF. W3C Recommendation 14 October 2008. This version: http://www.w3.org/TR/2008/REC-rdfa-syntax-20081014 Latest version: http://www.w3.org/TR/rdfa-syntax Previous version: http://www.w3.org/TR/2008/PR-rdfa-syntax-20080904 Diff from previous version: rdfa-syntax-diff.html Editors: Ben Adida, Creative Commons; Mark Birbeck, webBackplane; Shane McCarron, Applied Testing and Technology, Inc.; Steven Pemberton, CWI. Please refer to the errata for this document, which may include some normative corrections. This document is also available in these non-normative formats: PostScript version, PDF version, ZIP archive, and Gzip’d TAR archive. The English version of this specification is the only normative version. Non-normative translations may also be available. Copyright © 2007-2008 W3C® (MIT, ERCIM, Keio), All Rights Reserved. W3C liability, trademark and document use rules apply. Abstract: The current Web is primarily made up of an enormous number of documents that have been created using HTML. These documents contain significant amounts of structured data, which is largely unavailable to tools and applications. When publishers can express this data more completely, and when tools can read it, a new world of user functionality becomes available, letting users transfer structured data between applications and web sites, and allowing browsing applications to improve the user experience: an event on a web page can be directly imported into a user’s desktop calendar; a license on a document can be detected so that users can be informed of their rights automatically; a photo’s creator, camera setting information, resolution, location and topic can be published as easily as the original photo itself, enabling structured search and sharing.

Semantic Integration Across Heterogeneous Databases: Finding Data Correspondences Using Agglomerative Hierarchical Clustering and Artificial Neural Networks

DEGREE PROJECT IN COMPUTER SCIENCE AND ENGINEERING, SECOND CYCLE, 30 CREDITS. STOCKHOLM, SWEDEN 2018. Semantic Integration across Heterogeneous Databases: Finding Data Correspondences using Agglomerative Hierarchical Clustering and Artificial Neural Networks. MARK HOBRO. KTH ROYAL INSTITUTE OF TECHNOLOGY, SCHOOL OF ELECTRICAL ENGINEERING AND COMPUTER SCIENCE. Master in Computer Science. Date: April 11, 2018. Supervisor: John Folkesson. Examiner: Hedvig Kjellström. Swedish title: Semantisk integrering mellan heterogena databaser: Hitta datakopplingar med hjälp av hierarkisk klustring och artificiella neuronnät. School of Computer Science and Communication. Abstract: The process of data integration is an important part of the database field when it comes to database migrations and the merging of data. The research in the area has grown with the addition of machine learning approaches in the last 20 years. Due to the complexity of the research field, no go-to solutions have appeared. Instead, a wide variety of ways of enhancing database migrations have emerged. This thesis examines how well a learning-based solution performs for the semantic integration problem in database migrations. Two algorithms are implemented. One is based on information retrieval theory, with the goal of yielding a matching result that can be used as a benchmark for measuring the performance of the machine learning algorithm. The machine learning approach is based on grouping data with agglomerative hierarchical clustering and then training a neural network to recognize patterns in the data. This allows making predictions about potential data correspondences across two databases.

Is It an Agent, or Just a Program?: A Taxonomy for Autonomous Agents

Agent or Program http://www.msci.memphis.edu/~franklin/AgentProg.html Is it an Agent, or just a Program?: A Taxonomy for Autonomous Agents. Stan Franklin and Art Graesser, Institute for Intelligent Systems, University of Memphis. Proceedings of the Third International Workshop on Agent Theories, Architectures, and Languages, Springer-Verlag, 1996. Abstract: The advent of software agents gave rise to much discussion of just what such an agent is, and of how they differ from programs in general. Here we propose a formal definition of an autonomous agent which clearly distinguishes a software agent from just any program. We also offer the beginnings of a natural kinds taxonomy of autonomous agents, and discuss possibilities for further classification. Finally, we discuss subagents and multiagent systems. Introduction: On meeting a friend or colleague that we haven't seen for a while, or a new acquaintance, some version of the following conversation often ensues: What are you working on these days? Control structures for autonomous agents. Autonomous agents? What do you mean by that? A brief explanation is then followed by: But agents sound just like computer programs. How are they different? This elicits a more satisfying explanation that distinguishes between agent and program. The nature of this "more satisfying explanation" motivates this essay. After a review of some of the many ways the term "agent" has been used within the context of autonomous agents, we'll propose and defend a notion of agent that is clearly distinct from a program. This discussion will lead us to a discussion of possible classifications for autonomous agents.

The Application of Semantic Web Technologies to Content Analysis in Sociology

The Application of Semantic Web Technologies to Content Analysis in Sociology. Master thesis. Tabea Tietz, student ID (Matrikelnummer): 749153. Faculty of Economics and Social Science, University of Potsdam. First reviewer: Alexander Knoth, M.A. Second reviewer: Prof. Dr. rer. nat. Harald Sack. Potsdam, August 2018. Tabea Tietz: The Application of Semantic Web Technologies to Content Analysis in Sociology, © August 2018. ABSTRACT In sociology, texts are understood as social phenomena and provide means to analyze social reality. Throughout the years, a broad range of techniques evolved to perform such analysis: qualitative and quantitative approaches as well as completely manual analyses and computer-assisted methods. The development of the World Wide Web and social media as well as technical developments like optical character recognition and automated speech recognition contributed to the enormous increase of text available for analysis. This also led sociologists to rely more on computer-assisted approaches for their text analysis and included statistical Natural Language Processing (NLP) techniques. A variety of techniques, tools and use cases developed, which lack an overall uniform way of standardizing these approaches. Furthermore, this problem is coupled with a lack of standards for reporting studies with regard to text analysis in sociology. Semantic Web and Linked Data provide a variety of standards to represent information and knowledge. Numerous applications make use of these standards, including possibilities to publish data and to perform Named Entity Linking, a specific branch of NLP. This thesis attempts to discuss the question of to what extent the standards and tools provided by the Semantic Web and Linked Data community may support computer-assisted text analysis in sociology. First, these tools and standards will be briefly introduced and then applied to the use case of constitutional texts of the Netherlands from 1884 to 2016.

The Semantic Web: The Origins of Artificial Intelligence Redux

The Semantic Web: The Origins of Artificial Intelligence Redux. Harry Halpin, ICCS, School of Informatics, University of Edinburgh, 2 Buccleuch Place, Edinburgh EH8 9LW, Scotland, UK. Fax: +44 (0) 131 650 458. Corresponding author is Harry Halpin. For further information please contact him. This is the tear-off page. To facilitate blind review. Title: The Semantic Web: The Origins of AI Redux. Submission for HPLMC-04. 1 Introduction: The World Wide Web is considered by many to be the most significant computational phenomenon yet, although even by the standards of computer science its development has been chaotic. While the promise of artificial intelligence to give us machines capable of genuine human-level intelligence seems nearly as distant as it was during the heyday of the field, the ubiquity of the World Wide Web is unquestionable. If anything it is the Web, not artificial intelligence as traditionally [...] working process managed to both halt the fragmentation of the Web and create accepted Web standards through its consensus process and its own research team. The W3C set three long-term goals for itself: universal access, Semantic Web, and a web of trust, and since its creation these three goals have driven a large portion of development of the Web (W3C, 1999). One comparable program is the Hilbert Program in mathematics, which set out to prove all of mathematics follows from a finite system of axioms and that such an axiom system is consistent (Hilbert, 1922). It was through both force of personality and merit as a mathematician that Hilbert was able to set the research program and his challenge led many of the greatest mathematical minds to work.

Usage of Ontologies and Software Agents for Knowledge-Based Design of Mechatronic Systems

INTERNATIONAL CONFERENCE ON ENGINEERING DESIGN, ICED’07, 28-31 AUGUST 2007, CITE DES SCIENCES ET DE L'INDUSTRIE, PARIS, FRANCE. USAGE OF ONTOLOGIES AND SOFTWARE AGENTS FOR KNOWLEDGE-BASED DESIGN OF MECHATRONIC SYSTEMS. Ewald G. Welp (1), Patrick Labenda (1) and Christian Bludau (2). (1) Ruhr-University Bochum, Germany; (2) Behr-Hella Thermocontrol GmbH, Lippstadt, Germany. ABSTRACT The newly developed Semantic Web Service Platform SEMEC (SEMantic and MEChatronics) has already been introduced and explained in [1, 2 and 3]. It forms an interconnection between semantic web, semantic web services and software agents, offering tools and methods for a knowledge-based design of mechatronic systems. Their development is complex and demands a great deal of information and knowledge from the engineers involved in it. Most of the tools available today cannot meet this need to an adequate degree and in the demanded quality. The developed platform focuses on the design of mechatronic products supported by Semantic web services, using the Semantic web as a dynamic and natural language knowledge base. The platform itself can also be deployed for the development of homogeneous, i.e. mechanical and electronic, systems. Of special scientific interest is the connection to the internet and semantic web, respectively, and its utilization within a development process. The platform can be used to support interdisciplinary design teams at an early phase in the development process by offering context-sensitive knowledge and thereby to concretize as well as improve mechatronic concepts [1]. Essential components of this platform are a design environment, a domain ontology for mechatronics, and a software agent.

Conceptualization and Visualization of Tagging and Folksonomies

Conceptualization and Visualization of Tagging and Folksonomies. Dissertation approved by the Faculty of Engineering, Department of Computer Science and Applied Cognitive Science, of the University of Duisburg-Essen for the academic degree of Doctor of Engineering (Dr.-Ing.), by Steffen Lohmann from Hamburg. First reviewer: Prof. Dr. Maria Paloma Díaz Pérez. Second reviewer: Prof. Dr.-Ing. Jürgen Ziegler. Date of oral examination: 27.11.2013. Note: This dissertation was written as part of a binational doctoral procedure (cotutelle) in cooperation with the Universidad Carlos III de Madrid. Abstract: Tagging has become a popular indexing method for interactive systems in the past decade. It offers a simple yet effective way for users to organize an ever increasing amount of digital information for themselves and/or others. The linked user vocabulary resulting from tagging is known as folksonomy and provides a valuable source for the retrieval and exploration of digital resources. Although several models and representations of tagging have been proposed, there is no coherent conceptualization that provides a comprehensive and precise description of the concepts and relationships in the domain. Furthermore, there is little systematic research in the area of folksonomy visualization, and so folksonomies are still mainly depicted as simple tag clouds. Both problems are related, as a well-defined conceptualization is an important prerequisite for the interoperable use and visualization of folksonomies. The thesis addresses these shortcomings by developing a coherent conceptualization of tagging and visualizations for the interactive exploration of folksonomies. It gives an overview and comparison of tagging models and defines key concepts of the domain. After a comprehensive review of existing tagging ontologies, a unified and coherent conceptualization is presented that incorporates the best parts of the reviewed ontologies.

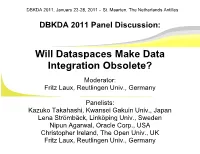

Will Dataspaces Make Data Integration Obsolete?

DBKDA 2011, January 23-28, 2011 – St. Maarten, The Netherlands Antilles

DBKDA 2011 Panel Discussion: Will Dataspaces Make Data Integration Obsolete?

Moderator: Fritz Laux, Reutlingen Univ., Germany

Panelists: Kazuko Takahashi, Kwansei Gakuin Univ., Japan; Lena Strömbäck, Linköping Univ., Sweden; Nipun Agarwal, Oracle Corp., USA; Christopher Ireland, The Open Univ., UK; Fritz Laux, Reutlingen Univ., Germany

The Dataspace Idea

[Figure: "Space of Data Management" and "Scalable Functionality and Costs"; axis labels include administrative proximity (near to far), semantic integration (low to high), functionality, and time and cost; adopted from Franklin, Halevy, Maier, 2005]

Dataspaces (DS) [Franklin, Halevy, Maier, 2005] is a new abstraction for Information Management

● DS are [paraphrasing and commenting Franklin, 2009]
– Inclusive
● Deal with all the data of interest, in whatever form => but semantics matters
● We need access to the metadata!
● derive schema from instances?
● Discovering new data sources => The Münchhausen bootstrap problem? [Illustration credit: Theodor Hosemann (1807-1875)]
– Co-existence