Stanford Typed Dependencies Manual

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

arXiv:1908.07448v1

Evaluating Contextualized Embeddings on 54 Languages in POS Tagging, Lemmatization and Dependency Parsing. Milan Straka and Jana Straková and Jan Hajič, Charles University, Faculty of Mathematics and Physics, Institute of Formal and Applied Linguistics. {strakova,straka,hajic}@ufal.mff.cuni.cz

Abstract: We present an extensive evaluation of three recently proposed methods for contextualized embeddings on 89 corpora in 54 languages of the Universal Dependencies 2.3 in three tasks: POS tagging, lemmatization, and dependency parsing. Employing the BERT, Flair and ELMo as pretrained embedding inputs in a strong baseline of UDPipe 2.0, one of the best-performing systems of the CoNLL 2018 Shared Task and an overall winner of the EPE 2018, we present a one-to-one comparison of the three contextualized word embedding methods, as well as a comparison with word2vec-like pretrained embeddings and with end-to-end character-level word embeddings. We report state-of-the-art results in all three tasks as compared to results

Shared Task (Zeman et al., 2018). • We report our best results on UD 2.3. The addition of contextualized embeddings improvements range from 25% relative error reduction for English treebanks, through 20% relative error reduction for high resource languages, to 10% relative error reduction for all UD 2.3 languages which have a training set.

2 Related Work: A new type of deep contextualized word representation was introduced by Peters et al. (2018). The proposed embeddings, called ELMo, were obtained from internal states of deep bidirectional language model, pretrained on a large text corpus. Akbik et al.

Extended and Enhanced Polish Dependency Bank in Universal Dependencies Format

Extended and Enhanced Polish Dependency Bank in Universal Dependencies Format. Alina Wróblewska, Institute of Computer Science, Polish Academy of Sciences, ul. Jana Kazimierza 5, 01-248 Warsaw, Poland. [email protected]

Abstract: The paper presents the largest Polish Dependency Bank in Universal Dependencies format – PDBUD – with 22K trees and 352K tokens. PDBUD builds on its previous version, i.e. the Polish UD treebank (PL-SZ), and contains all 8K PL-SZ trees. The PL-SZ trees are checked and possibly corrected in the current edition of PDBUD. Further 14K trees are automatically converted from a new version of Polish Dependency Bank. The PDBUD trees are expanded with the enhanced edges encoding the shared dependents and the shared governors of the coordinated conjuncts and with the semantic roles of some dependents. The conducted evaluation experiments show that PDBUD is large enough for training a high-quality graph-based dependency parser for Polish.

even for languages with rich morphology and relatively free word order, such as Polish. The supervised learning methods require gold-standard training data, whose creation is a time-consuming and expensive process. Nevertheless, dependency treebanks have been created for many languages, in particular within the Universal Dependencies initiative (UD, Nivre et al., 2016). The UD leaders aim at developing a cross-linguistically consistent tree annotation schema and at building a large multilingual collection of dependency treebanks annotated according to this schema. Polish is also represented in the Universal Dependencies collection. There are two Polish treebanks in UD: the Polish UD treebank (PL-SZ) converted from Składnica zależnościowa and

Universal Dependencies According to BERT: Both More Specific and More General

Universal Dependencies According to BERT: Both More Specific and More General. Tomasz Limisiewicz and David Mareček and Rudolf Rosa, Institute of Formal and Applied Linguistics, Faculty of Mathematics and Physics, Charles University, Prague, Czech Republic. {limisiewicz,rosa,marecek}@ufal.mff.cuni.cz

Abstract: This work focuses on analyzing the form and extent of syntactic abstraction captured by BERT by extracting labeled dependency trees from self-attentions. Previous work showed that individual BERT heads tend to encode particular dependency relation types. We extend these findings by explicitly comparing BERT relations to Universal Dependencies (UD) annotations, showing that they often do not match one-to-one. We suggest a method for relation identification and syntactic tree construction. Our approach produces significantly more consistent dependency trees than previous work, showing that it better explains the syntactic abstractions in BERT. At the same time, it can be successfully applied with only a minimal amount of supervision and generalizes well across languages.

former based systems particular heads tend to capture specific dependency relation types (e.g. in one head the attention at the predicate is usually focused on the nominal subject). We extend understanding of syntax in BERT by examining the ways in which it systematically diverges from standard annotation (UD). We attempt to bridge the gap between them in three ways:
• We modify the UD annotation of three linguistic phenomena to better match the BERT syntax (§3)
• We introduce a head ensemble method, combining multiple heads which capture the same dependency relation label (§4)
• We observe and analyze multipurpose heads, containing multiple syntactic functions (§7)

Compound Word Formation

Snyder, William (in press) Compound word formation. In Jeffrey Lidz, William Snyder, and Joseph Pater (eds.) The Oxford Handbook of Developmental Linguistics. Oxford: Oxford University Press.

Chapter 6: Compound Word Formation. William Snyder.

Languages differ in the mechanisms they provide for combining existing words into new, “compound” words. This chapter will focus on two major types of compound: synthetic -ER compounds, like English dishwasher (for either a human or a machine that washes dishes), where “-ER” stands for the crosslinguistic counterparts to agentive and instrumental -er in English; and endocentric bare-stem compounds, like English flower book, which could refer to a book about flowers, a book used to store pressed flowers, or many other types of book, as long as there is a salient connection to flowers. With both types of compounding we find systematic cross-linguistic variation, and a literature that addresses some of the resulting questions for child language acquisition. In addition to these two varieties of compounding, a few others will be mentioned that look like promising areas for coordinated research on cross-linguistic variation and language acquisition.

6.1 Compounding: A Selective Review. 6.1.1 Terminology. The first step will be defining some key terms. An unfortunate aspect of the linguistic literature on morphology is a remarkable lack of consistency in what the “basic” terms are taken to mean. Strictly speaking one should begin with the very term “word,” but as Spencer (1991: 453) puts it, “One of the key unresolved questions in morphology is, ‘What is a word?’.” Setting this grander question to one side, a word will be called a “compound” if it is composed of two or more other words, and has approximately the same privileges of occurrence within a sentence as do other word-level members of its syntactic category (N, V, A, or P).

Hunspell – the Free Spelling Checker

Hunspell – The free spelling checker

About Hunspell: Hunspell is a spell checker and morphological analyzer library and program designed for languages with rich morphology and complex word compounding or character encoding. Hunspell interfaces: Ispell-like terminal interface using Curses library, Ispell pipe interface, OpenOffice.org UNO module.

Main features of Hunspell spell checker and morphological analyzer:
- Unicode support (affix rules work only with the first 65535 Unicode characters)
- Morphological analysis (in custom item and arrangement style) and stemming
- Max. 65535 affix classes and twofold affix stripping (for agglutinative languages, like Azeri, Basque, Estonian, Finnish, Hungarian, Turkish, etc.)
- Support complex compoundings (for example, Hungarian and German)
- Support language specific features (for example, special casing of Azeri and Turkish dotted i, or German sharp s)
- Handle conditional affixes, circumfixes, fogemorphemes, forbidden words, pseudoroots and homonyms.
- Free software (LGPL, GPL, MPL tri-license)

Usage: The src/tools directory contains ten executables after compiling (or some of them are in the src/win_api):
- affixcompress: dictionary generation from large (millions of words) vocabularies
- analyze: example of spell checking, stemming and morphological analysis
- chmorph: example of automatic morphological generation and conversion
- example: example of spell checking and suggestion
- hunspell: main program for spell checking and others (see manual)
- hunzip: decompressor of hzip format
- hzip: compressor of

Building a Treebank for French

Building a treebank for French. Anne Abeillé, Lionel Clément, Alexandra Kinyon. TALaNa, Université Paris 7, 75251 Paris cedex 05, France; University of Pennsylvania, Philadelphia, USA. abeille, clement, [email protected]

Abstract: Very few gold standard annotated corpora are currently available for French. We present an ongoing project to build a reference treebank for French starting with a tagged newspaper corpus of 1 Million words (Abeillé et al., 1998), (Abeillé and Clément, 1999). Similarly to the Penn TreeBank (Marcus et al., 1993), we distinguish an automatic parsing phase followed by a second phase of systematic manual validation and correction. Similarly to the Prague treebank (Hajicova et al., 1998), we rely on several types of morphosyntactic and syntactic annotations for which we define extensive guidelines. Our goal is to provide a theory neutral, surface oriented, error free treebank for French. Similarly to the Negra project (Brants et al., 1999), we annotate both constituents and functional relations.

1. The tagged corpus: As reported in (Abeillé and Clément, 1999), we present the general methodology, the automatic tagging phase, the human validation phase and the final state of the tagged corpus. 1.1. Methodology 1.1.1.

pronoun (= him) or a weak clitic pronoun (= to him or to her), plus can either be a negative adverb (= not any more) or a simple adverb (= more). Inflectional morphology also has to be annotated since morphological endings are important for gathering constituents (based on agreement marks) and also because lots of forms in French are ambiguous with respect to mode, person, number or gender. For exam-

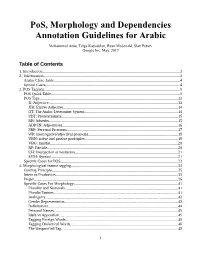

Pos, Morphology and Dependencies Annotation Guidelines for Arabic

PoS, Morphology and Dependencies Annotation Guidelines for Arabic. Mohammed Attia, Tolga Kayadelen, Ryan Mcdonald, Slav Petrov. Google Inc. May, 2017.

Table of Contents
1. Introduction
2. Tokenization
   - Arabic Clitic Table
   - Special Cases
3. POS Tagging
   - POS Quick Table
   - POS Tags
     - JJ: Adjective
     - JJR: Elative Adjective
     - DT: The Arabic Determiner System

Universal Dependencies for Japanese

Universal Dependencies for Japanese. Takaaki Tanaka∗, Yusuke Miyao†, Masayuki Asahara♦, Sumire Uematsu†, Hiroshi Kanayama♠, Shinsuke Mori♣, Yuji Matsumoto‡. ∗NTT Communication Science Laboratories, †National Institute of Informatics, ♦National Institute for Japanese Language and Linguistics, ♠IBM Research - Tokyo, ♣Kyoto University, ‡Nara Institute of Science and Technology. [email protected], [email protected], [email protected], [email protected], [email protected], [email protected], [email protected]

Abstract: We present an attempt to port the international syntactic annotation scheme, Universal Dependencies, to the Japanese language in this paper. Since the Japanese syntactic structure is usually annotated on the basis of unique chunk-based dependencies, we first introduce word-based dependencies by using a word unit called the Short Unit Word, which usually corresponds to an entry in the lexicon UniDic. Porting is done by mapping the part-of-speech tagset in UniDic to the universal part-of-speech tagset, and converting a constituent-based treebank to a typed dependency tree. The conversion is not straightforward, and we discuss the problems that arose in the conversion and the current solutions. A treebank consisting of 10,000 sentences was built by converting the existent resources and currently released to the public.

Keywords: typed dependencies, Short Unit Word, multiword expression, UniDic

1. Introduction: The Universal Dependencies (UD) project has been developing cross-linguistically consistent treebank annota-

2. Word unit: The definition of a word unit is indispensable in UD annotation, which is not a trivial question for Japanese, since a sentence is not segmented into words or morphemes by white space in its orthography.

Universal Dependencies for Persian

Universal Dependencies for Persian. *Mojgan Seraji, **Filip Ginter, *Joakim Nivre. *Uppsala University, Department of Linguistics and Philology, Sweden; **University of Turku, Department of Information Technology, Finland. *firstname.lastname@lingfil.uu.se **figint@utu.fi

Abstract: The Persian Universal Dependency Treebank (Persian UD) is a recent effort of treebanking Persian with Universal Dependencies (UD), an ongoing project that designs unified and cross-linguistically valid grammatical representations including part-of-speech tags, morphological features, and dependency relations. The Persian UD is the converted version of the Uppsala Persian Dependency Treebank (UPDT) to the universal dependencies framework and consists of nearly 6,000 sentences and 152,871 word tokens with an average sentence length of 25 words. In addition to the universal dependencies syntactic annotation guidelines, the two treebanks differ in tokenization. All words containing unsegmented clitics (pronominal and copula clitics) annotated with complex labels in the UPDT have been separated from the clitics and appear with distinct labels in the Persian UD. The treebank has its original syntactic annotation scheme based on Stanford Typed Dependencies. In this paper, we present the approaches taken in the development of the Persian UD.

Keywords: Universal Dependencies, Persian, Treebank

1. Introduction: In the past decade, the development of numerous dependency parsers for different languages has frequently been benefited by the use of syntactically annotated resources, or treebanks (Böhmová et al., 2003; Haverinen et al., 2010; Kromann, 2003; Foth et al., 2014; Seraji et al., 2015; Vincze et al., 2010).

this paper, we present how we adapt the Universal Dependencies to Persian by converting the Uppsala Persian Dependency Treebank (UPDT) (Seraji, 2015) to the Persian Universal Dependencies (Persian UD). First, we briefly describe the Universal Dependencies and then we present the morphosyntactic annotations used in the extended version

Language Technology Meets Documentary Linguistics: What We Have to Tell Each Other

Language technology meets documentary linguistics: What we have to tell each other. Trond Trosterud, Giellatekno, Centre for Saami Language Technology, http://giellatekno.uit.no/. February 15, 2018.

Contents: Introduction; Language technology for the documentary linguist; Language technology for the language society; Conclusion.

Introduction
- Giellatekno: started in 2001 (UiT). Research group for language technology on Saami and other northern languages. Gramm. modelling, dictionaries, ICALL, corpus analysis, MT, ...
  - Trond Trosterud, Lene Antonsen, Ciprian Gerstenberger, Chiara Argese
- Divvun: Started in 2005 (UiT < Min. of Local Government). Infrastructure, proofing tools, synthetic speech, terminology
  - Sjur Moshagen, Thomas Omma, Maja Kappfjell, Børre Gaup, Tomi Pieski, Elena Paulsen, Linda Wiechetek

The most important languages we work on

Language technology for documentary linguistics ... what’s in it for the language community I work for?
- Let’s pretend there are two types of language communities:
  1. Language communities without plans for revitalisation or use in domains other than oral use
  2. Language communities with such plans

Language communities without such plans
- Gather empirical material and do your linguistic analysis
- (The triplet: Grammar, text collection and dictionary)
- ..

On Compounds, Noun Phrases and Domains Gisli R

University of Connecticut, OpenCommons@UConn. Doctoral Dissertations, University of Connecticut Graduate School. 6-13-2017. Cycling Through Grammar: On Compounds, Noun Phrases and Domains. Gisli R. Hardarson, University of Connecticut, [email protected]

Follow this and additional works at: https://opencommons.uconn.edu/dissertations

Recommended Citation: Hardarson, Gisli R., "Cycling Through Grammar: On Compounds, Noun Phrases and Domains" (2017). Doctoral Dissertations. 1570. https://opencommons.uconn.edu/dissertations/1570

Cycling Through Grammar: On Compounds, Noun Phrases and Domains. Gísli Rúnar Harðarson, PhD, University of Connecticut, 2017.

In this dissertation, I address the question of domains within grammar: i.e. how domains are defined, whether different components of grammar make references to the same boundaries (or at least boundary definers), and whether these boundaries are uniform with respect to different processes. I address these questions in two case studies. First, I explore compound nouns in Icelandic and restrictions on their composition, where inflected non-head elements are structurally peripheral to uninflected ones. I argue that these effects are due to a matching condition which requires elements within compounds to match their attachment site in terms of size/type. Following that I explore how morphophonology is regulated by the structure of the compound. I argue for a contextual definition of the domain of morphophonology, where the highest functional morpheme in the extended projection of the root marks the boundary. Under this approach a morphophonological domain can contain smaller domains analogous to phases in syntax. This allows for the morphosyntactic structure to be mapped directly to phonology while giving the impression of two contradicting structures.

Compounds and Word Trees

Compounds and word trees. LING 481/581, Winter 2011.

Organization
• Compounds
  – heads
  – types of compounds
• Word trees

Compounding
• [root] [root]
  – machine gun
  – land line
  – snail mail
  – top-heavy
  – fieldwork
• Notice variability in punctuation (to ignore)

Compound stress
• Green Lake
• bluebird
• fast lane
• Bigfoot
• bad boy
• old school
• hotline
• Myspace

Compositionality
• (Extent to which) meanings of words, phrases determined by
  – morpheme meaning
  – structure
• Predictability of meaning in compounds
  – ’roid rage (< 1987; ‘road rage’ < 1988)
  – football (< 1486; ‘American football’ 1879)
  – soap opera (< 1939)

Head
• ‘A word in a syntactic construction or a morpheme in a morphological one that determines the grammatical function or meaning of the construction as a whole. For example, house is the head of the noun phrase the red house...’ Aronoff and Fudeman 2011

Righthand head rule
• In English (and most languages), morphological heads are the rightmost non-inflectional morpheme in the word
  – Lexical category of entire compound = lexical category of rightmost member
  – Not Spanish (HS example p. 139)
• English be-
  – devil, bedevil

Compounding and lexical category
noun verb adjective noun machine friend request skin-deep gun foot bail rage quit verb thinktank stir-fry(?) ? adjective high school dry-clean(?) red-hot low-ball
‘the V+N pattern is unproductive and limited to a few lexically listed items, and the N+V pattern is not really productive either.’ HS: 138

Heads of words
• Morphosyntactic head: determines lexical category
  – syntactic distribution
    • That thinktank is overrated.
  – morphological characteristics; e.g. inflection
    • plurality: highschool highschools
    • tense on V: dry-clean dry-cleaned
    • case on N
      – Sahaptin wáyxti- “run, race” wayxtitpamá “pertaining to racing” wayxtitpamá k'úsi ‘race horse’ Máytski=ish á-shapaxwnawiinknik-xa-na wayxtitpamá k'úsi-nan..