Solo Trombone with Electronic Accompaniment: an Analysis and Performance Guide for Three Recent Compositions

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Recommended Solos and Ensembles Tenor Trombone Solos Sång Till

Recommended Solos and Ensembles Tenor Trombone Solos Sång till Lotta, Jan Sandström. Edition Tarrodi: Stockholm, Sweden, 1991. Trombone and piano. Requires modest range (F – g flat1), well-developed lyricism, and musicianship. There are two versions of this piece, this and another that is scored a minor third higher. Written dynamics are minimal. Although phrases and slurs are not indicated, it is a SONG…encourage legato tonguing! Stephan Schulz, bass trombonist of the Berlin Philharmonic, gives a great performance of this work on YouTube - http://www.youtube.com/watch?v=Mn8569oTBg8. A Winter’s Night, Kevin McKee, 2011. Available from the composer, www.kevinmckeemusic.com. Trombone and piano. Explores the relative minor of three keys, easy rhythms, keys, range (A – g1, ossia to b flat1). There is a fine recording of this work on his web site. Trombone Sonata, Gordon Jacob. Emerson Edition: Yorkshire, England, 1979. Trombone and piano. There are no real difficult rhythms or technical considerations in this work, which lasts about 7 minutes. There is tenor clef used throughout the second movement, and it switches between bass and tenor in the last movement. Range is F – b flat1. Recorded by Dr. Ron Babcock on his CD Trombone Treasures, and available at Hickey’s Music, www.hickeys.com. Divertimento, Edward Gregson. Chappell Music: London, 1968. Trombone and piano. Three movements, range is modest (G-g#1, ossia a1), bass clef throughout. Some mixed meter. Requires a mute, glissandi, and ad. lib. flutter tonguing. Recorded by Brett Baker on his CD The World of Trombone, volume 1, and can be purchased at http://www.brettbaker.co.uk/downloads/product=download-world-of-the- trombone-volume-1-brett-baker. -

Delia Derbyshire

www.delia-derbyshire.org Delia Derbyshire Delia Derbyshire was born in Coventry, England, in 1937. Educated at Coventry Grammar School and Girton College, Cambridge, where she was awarded a degree in mathematics and music. In 1959, on approaching Decca records, Delia was told that the company DID NOT employ women in their recording studios, so she went to work for the UN in Geneva before returning to London to work for music publishers Boosey & Hawkes. In 1960 Delia joined the BBC as a trainee studio manager. She excelled in this field, but when it became apparent that the fledgling Radiophonic Workshop was under the same operational umbrella, she asked for an attachment there - an unheard of request, but one which was, nonetheless, granted. Delia remained 'temporarily attached' for years, regularly deputising for the Head, and influencing many of her trainee colleagues. To begin with Delia thought she had found her own private paradise where she could combine her interests in the theory and perception of sound; modes and tunings, and the communication of moods using purely electronic sources. Within a matter of months she had created her recording of Ron Grainer's Doctor Who theme, one of the most famous and instantly recognisable TV themes ever. On first hearing it Grainer was tickled pink: "Did I really write this?" he asked. "Most of it," replied Derbyshire. Thus began what is still referred to as the Golden Age of the Radiophonic Workshop. Initially set up as a service department for Radio Drama, it had always been run by someone with a drama background. -

Making Musical Magic Live

Making Musical Magic Live Inventing modern production technology for human-centric music performance Benjamin Arthur Philips Bloomberg Bachelor of Science in Computer Science and Engineering Massachusetts Institute of Technology, 2012 Master of Sciences in Media Arts and Sciences Massachusetts Institute of Technology, 2014 Submitted to the Program in Media Arts and Sciences, School of Architecture and Planning, in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Media Arts and Sciences at the Massachusetts Institute of Technology February 2020 © 2020 Massachusetts Institute of Technology. All Rights Reserved. Signature of Author: Benjamin Arthur Philips Bloomberg Program in Media Arts and Sciences 17 January 2020 Certified by: Tod Machover Muriel R. Cooper Professor of Music and Media Thesis Supervisor, Program in Media Arts and Sciences Accepted by: Tod Machover Muriel R. Cooper Professor of Music and Media Academic Head, Program in Media Arts and Sciences Making Musical Magic Live Inventing modern production technology for human-centric music performance Benjamin Arthur Philips Bloomberg Submitted to the Program in Media Arts and Sciences, School of Architecture and Planning, on January 17 2020, in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Media Arts and Sciences at the Massachusetts Institute of Technology Abstract Fifty-two years ago, Sergeant Pepper’s Lonely Hearts Club Band redefined what it meant to make a record album. The Beatles revolution- ized the recording process using technology to achieve completely unprecedented sounds and arrangements. Until then, popular music recordings were simply faithful reproductions of a live performance. Over the past fifty years, recording and production techniques have advanced so far that another challenge has arisen: it is now very difficult for performing artists to give a live performance that has the same impact, complexity and nuance as a produced studio recording. -

Gardner • Even Orpheus Needs a Synthi Edit No Proof

James Gardner Even Orpheus Needs a Synthi Since his return to active service a few years ago1, Peter Zinovieff has appeared quite frequently in interviews in the mainstream press and online outlets2 talking not only about his recent sonic art projects but also about the work he did in the 1960s and 70s at his own pioneering computer electronic music studio in Putney. And no such interview would be complete without referring to EMS, the synthesiser company he co-founded in 1969, or namechecking the many rock celebrities who used its products, such as the VCS3 and Synthi AKS synthesisers. Before this Indian summer (he is now 82) there had been a gap of some 30 years in his compositional activity since the demise of his studio. I say ‘compositional’ activity, but in the 60s and 70s he saw himself as more animateur than composer and it is perhaps in that capacity that his unique contribution to British electronic music during those two decades is best understood. In this article I will discuss just some of the work that was done at Zinovieff’s studio during its relatively brief existence and consider two recent contributions to the documentation and contextualization of that work: Tom Hall’s chapter3 on Harrison Birtwistle’s electronic music collaborations with Zinovieff; and the double CD Electronic Calendar: The EMS Tapes,4 which presents a substantial sampling of the studio’s output between 1966 and 1979. Electronic Calendar, a handsome package to be sure, consists of two CDs and a lavishly-illustrated booklet with lengthy texts. -

Composers' Bridge!

Composers’ Bridge Workbook Contents Notation Orchestration Graphic notation 4 Orchestral families 43 My graphic notation 8 Winds 45 Clefs 9 Brass 50 Percussion 53 Note lengths Strings 54 Musical equations 10 String instrument special techniques 59 Rhythm Voice: text setting 61 My rhythm 12 Voice: timbre 67 Rhythmic dictation 13 Tips for writing for voice 68 Record a rhythm and notate it 15 Ideas for instruments 70 Rhythm salad 16 Discovering instruments Rhythm fun 17 from around the world 71 Pitch Articulation and dynamics Pitch-shape game 19 Articulation 72 Name the pitches – part one 20 Dynamics 73 Name the pitches – part two 21 Score reading Accidentals Muddling through your music 74 Piano key activity 22 Accidental practice 24 Making scores and parts Enharmonics 25 The score 78 Parts 78 Intervals Common notational errors Fantasy intervals 26 and how to catch them 79 Natural half steps 27 Program notes 80 Interval number 28 Score template 82 Interval quality 29 Interval quality identification 30 Form Interval quality practice 32 Form analysis 84 Melody Rehearsal and concert My melody 33 Presenting your music in front Emotion melodies 34 of an audience 85 Listening to melodies 36 Working with performers 87 Variation and development Using the computer Things you can do with a Computer notation: Noteflight 89 musical idea 37 Sound exploration Harmony My favorite sounds 92 Harmony basics 39 Music in words and sentences 93 Ear fantasy 40 Word painting 95 Found sound improvisation 96 Counterpoint Found sound composition 97 This way and that 41 Listening journal 98 Chord game 42 Glossary 99 Welcome Dear Student and family Welcome to the Composers' Bridge! The fact that you are being given this book means that we already value you as a composer and a creative artist-in-training. -

Instruments of the Orchestra

INSTRUMENTS OF THE ORCHESTRA String Family WHAT: Wooden, hollow-bodied instruments strung with metal strings across a bridge. WHERE: Find this family in the front of the orchestra and along the right side. HOW: Sound is produced by a vibrating string that is bowed with a bow made of horse tail hair. The air then resonates in the hollow body. Other playing techniques include pizzicato (plucking the strings), col legno (playing with the wooden part of the bow), and double-stopping (bowing two strings at once). WHY: Composers use these instruments for their singing quality and depth of sound. HOW MANY: There are four sizes of stringed instruments: violin, viola, cello and bass. A total of forty-four are used in full orchestras. The string family is the largest family in the orchestra, accounting for over half of the total number of musicians on stage. The string instruments all have carved, hollow, wooden bodies with four strings running from top to bottom. The instruments have basically the same shape but vary in size, from the smaller VIOLINS and VIOLAS, which are played by being held firmly under the chin and either bowed or plucked, to the larger CELLOS and BASSES, which stand on the floor, supported by a long rod called an end pin. The cello is always played in a seated position, while the bass is so large that a musician must stand or sit on a very high stool in order to play it. These stringed instruments developed from an older instrument called the viol, which had six strings. -

Instrument Descriptions

RENAISSANCE INSTRUMENTS Shawm and Bagpipes The shawm is a member of a double reed tradition traceable back to ancient Egypt and prominent in many cultures (the Turkish zurna, Chinese so- na, Javanese sruni, Hindu shehnai). In Europe it was combined with brass instruments to form the principal ensemble of the wind band in the 15th and 16th centuries and gave rise in the 1660’s to the Baroque oboe. The reed of the shawm is manipulated directly by the player’s lips, allowing an extended range. The concept of inserting a reed into an airtight bag above a simple pipe is an old one, used in ancient Sumeria and Greece, and found in almost every culture. The bag acts as a reservoir for air, allowing for continuous sound. Many civic and court wind bands of the 15th and early 16th centuries include listings for bagpipes, but later they became the provenance of peasants, used for dances and festivities. Dulcian The dulcian, or bajón, as it was known in Spain, was developed somewhere in the second quarter of the 16th century, an attempt to create a bass reed instrument with a wide range but without the length of a bass shawm. This was accomplished by drilling a bore that doubled back on itself in the same piece of wood, producing an instrument effectively twice as long as the piece of wood that housed it and resulting in a sweeter and softer sound with greater dynamic flexibility. The dulcian provided the bass for brass and reed ensembles throughout its existence. During the 17th century, it became an important solo and continuo instrument and was played into the early 18th century, alongside the jointed bassoon which eventually displaced it. -

Delia Derbyshire Sound and Music for the BBC Radiophonic Workshop, 1962-1973

Delia Derbyshire Sound and Music For The BBC Radiophonic Workshop, 1962-1973 Teresa Winter PhD University of York Music June 2015 2 Abstract This thesis explores the electronic music and sound created by Delia Derbyshire in the BBC’s Radiophonic Workshop between 1962 and 1973. After her resignation from the BBC in the early 1970s, the scope and breadth of her musical work there became obscured, and so this research is primarily presented as an open-ended enquiry into that work. During the course of my enquiries, I found a much wider variety of music than the popular perception of Derbyshire suggests: it ranged from theme tunes to children’s television programmes to concrete poetry to intricate experimental soundscapes of synthesis. While her most famous work, the theme to the science fiction television programme Doctor Who (1963) has been discussed many times, because of the popularity of the show, most of the pieces here have not previously received detailed attention. Some are not widely available at all and so are practically unknown and unexplored. Despite being the first institutional electronic music studio in Britain, the Workshop’s role in broadcasting, rather than autonomous music, has resulted in it being overlooked in historical accounts of electronic music, and very little research has been undertaken to discover more about the contents of its extensive archived back catalogue. Conversely, largely because of her role in the creation of its most recognised work, the previously mentioned Doctor Who theme tune, Derbyshire is often positioned as a pioneer in the medium for bringing electronic music to a large audience. -

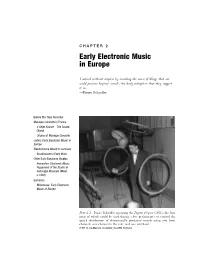

Holmes Electronic and Experimental Music

C H A P T E R 2 Early Electronic Music in Europe I noticed without surprise by recording the noise of things that one could perceive beyond sounds, the daily metaphors that they suggest to us. —Pierre Schaeffer Before the Tape Recorder Musique Concrète in France L’Objet Sonore—The Sound Object Origins of Musique Concrète Listen: Early Electronic Music in Europe Elektronische Musik in Germany Stockhausen’s Early Work Other Early European Studios Innovation: Electronic Music Equipment of the Studio di Fonologia Musicale (Milan, c.1960) Summary Milestones: Early Electronic Music of Europe Plate 2.1 Pierre Schaeffer operating the Pupitre d’espace (1951), the four rings of which could be used during a live performance to control the spatial distribution of electronically produced sounds using two front channels: one channel in the rear, and one overhead. (1951 © Ina/Maurice Lecardent, Ina GRM Archives) 42 EARLY HISTORY – PREDECESSORS AND PIONEERS A convergence of new technologies and a general cultural backlash against Old World arts and values made conditions favorable for the rise of electronic music in the years following World War II. Musical ideas that met with punishing repression and indiffer- ence prior to the war became less odious to a new generation of listeners who embraced futuristic advances of the atomic age. Prior to World War II, electronic music was anchored down by a reliance on live performance. Only a few composers—Varèse and Cage among them—anticipated the importance of the recording medium to the growth of electronic music. This chapter traces a technological transition from the turntable to the magnetic tape recorder as well as the transformation of electronic music from a medium of live performance to that of recorded media. -

Dr. Davidson's Recommendations for Trombones

Dr. Davidson’s Recommendations for Trombones, Mouthpieces, and Accessories Disclaimer - I’m not under contract with any instrument manufacturers discussed below. I play an M&W 322 or a Greenhoe Optimized Bach 42BG for my tenor work, and a Courtois 131R alto trombone. I think the M&W and Greenhoe trombones are the best instruments for me. Disclaimer aside, here are some possible recommendations for you. Large Bore Tenors (.547 bore) • Bach 42BO: This is an open-wrap F-attachment horn. I’d get the extra light slide, which I believe is a bit more durable, and, if possible, a gold brass bell. The Bach 42B was and is “the gold standard.” Read more here: http://www.conn-selmer.com/en-us/our-instruments/band- instruments/trombones/42bo/ • M&W Custom Trombones: Two former Greenhoe craftsman/professional trombonists formed their own company after Gary Greenhoe retired and closed his store. The M&W Trombones are amazing works of art, and are arguably the finest trombones made. The 322 or 322-T (T is for “Tuning-in Slide”) models are the tenor designations. I’m really a fan of one of the craftsman, Mike McLemore – he’s as good as they come. Consider this. These horns will be in-line, price-wise, with the Greenhoe/Edwards/Shires horns – they’re custom made, and will take a while to make and for you to receive them. That said, they’re VERY well-made. www.customtrombones.com • Yamaha 882OR: The “R” in the model number is important here – this is the instrument designed by Larry Zalkind, professor at Eastman, and former principal trombonist of the Utah Symphony. -

Loop Station – Tvorba Skladby

Lauderova MŠ, ZŠ a gymnázium při židovské obci v Praze Loop station – tvorba skladby Marie Čechová Vedoucí: Tereza Rejšková Ročník: 6. O 2017/18 Abstrakt Tato práce se věnuje podrobnému popisu tvorby autorské skladby, která byla vytvořena za pomoci hlasových stop na loop station. V první části poukazuje na vývoj této techniky napříč historií, přičemž zmiňuje i různé důležité události, které se jí týkají a interprety využívající loop station. Dále vysvětluje, jak přístroj funguje a jaké funkce nabízí. Jedna z dalších částí se zabývá pouze interprety, a jakým způsobem pracují s loop station. Seminární práce se často odkazuje na autorské rozhovory, které autorka vedla s hudebními interprety, kteří loop station využívají. Klíčová slova: loop station, smyčka, interpret, rozhovor, skladba 2 Obsah Úvod ......................................................................................................................................... 4 1 Teoretická část ................................................................................................................. 5 1.1 Historie – loop station .............................................................................................. 5 1.2 Funkce – loop station ............................................................................................... 6 1.3 Vybraní interpreti ..................................................................................................... 7 1.4 Rozbor skladby ........................................................................................................ -

Harpsichord and Its Discourses

Popular Music and Instrument Technology in an Electronic Age, 1960-1969 Farley Miller Schulich School of Music McGill University, Montréal April 2018 A thesis submitted to McGill University in partial fulfilment of the requirements of the degree of Ph.D. in Musicology © Farley Miller 2018 Table of Contents Abstract ................................................................................................................... iv Résumé ..................................................................................................................... v Acknowledgements ................................................................................................ vi Introduction | Popular Music and Instrument Technology in an Electronic Age ............................................................................................................................ 1 0.1: Project Overview .................................................................................................................. 1 0.1.1: Going Electric ................................................................................................................ 6 0.1.2: Encountering and Categorizing Technology .................................................................. 9 0.2: Literature Review and Theoretical Concerns ..................................................................... 16 0.2.1: Writing About Music and Technology ........................................................................ 16 0.2.2: The Theory of Affordances .........................................................................................