Trinity University Campus-Wide Deep Dive


Recommended publications


Memory, Ritual and Place in Africa TWIN CITIES AFRICANIST SYMPOSIUM

Sacred Ground: Memory, Ritual and Place in Africa TWIN CITIES AFRICANIST SYMPOSIUM Carleton College February 21-22, 2003 Events Schedule Friday, February 21 Great Hall, 4 to 9 p.m. Welcoming Remarks Allen Isaacman, University of Minnesota Keynote Lecture “The Politics and Poetics of Sacred Sites” Sandra Greene, Professor of History, Cornell University 4 to 6 p.m. Reception with African Food, Live Music Musical performance by Jalibah Kuyateh and the Mandingo Griot Ensemble 6 to 9 p.m. Saturday, February 22 Alumni Guest House Meeting Room Morning panel: 9 to 10:30 a.m. Theme: Sacred Ground: Memory, Ritual and Place in Africa Chair: Sandra Greene, Cornell University William Moseley, Department of Geography, Macalester College, “Leaving Hallowed Practices for Hollow Ground: Wealth, Poverty and Cotton Production in Southern Mali” Kathryn Linn Geurts, Department of Anthropology, Hamline University, “Migration Myths, Landscape, and Cultural Memory in Southeastern Ghana” Jamie Monson, Department of History, Carleton College, “From Protective Lions to Angry Spirits: Local Discourses of Land Degradation in Tanzania” Cynthia Becker, Department of Art History, University of St. Thomas, “Zaouia: Sacred Space, Sufism and Slavery in the Trans-Sahara Caravan Trade” Coffee Break Mid-Morning panel: 11 a.m. to 12:30 p.m. Theme: Memory, Ritual and Performance in Africa Chair: Dianna Shandy, Macalester College Michele Wagner, Department of History, University of Minnesota, “Reburial in Rwanda: Ritual of Healing or Ritual of Revenge?” Tommie Jackson, Department of English, St. Cloud State University, “‘Fences’ in the drama by August Wilson and ‘Sizwe Bansi is Dead,’ by Athol Fugard” Helena Pohlandt-McCormick, Department of History, University of Minnesota, “Memory and Violence in Soweto” Pamela Feldman-Savelsberg, Department of Anthropology, Carleton College, “Remembering the Troubles: Collective Memory and Reproduction in Cameroon” Break 12:30 to 2 p.m. -

Lawrence University (1-1, 0-0 MWC North) at Beloit College (1-1, 0-0

Lawrence University (1-1, 0-0 MWC North) at Beloit College (1-1, 0-0 MWC North) Saturday, September 19, 2015, 1 p.m., Strong Stadium, Beloit, Wisconsin

Webcast: A free video webcast is available at: http://portal.stretchinternet.com/lawrence/.

The Series: Lawrence holds a 58-36-5 edge in a series that dates all the way back to 1899. This year marks the 100th game in the series, which is the second-longest rivalry for Lawrence. The Vikings have played 114 games against Ripon, and that series dates to 1893. Lawrence has won three of the last

making his first start, was 23-for-36 for 274 yards and three touchdowns. Mandich, a senior receiver from Green Bay, had a career-high eight catches for 130 yards and a touchdown for the Vikings. The Lawrence defense limited Beloit to 266 yards and made a key stop late in the game to preserve the victory. Linebacker Brandon Taylor paced the Lawrence defense with 14 tackles and two pass breakups. Beloit was down by eight but got an interception on a tipped ball and

ing possession and moved 75 yards in 12 plays for the game’s first touchdown. Byrd hit freshman receiver and Appleton native Cole Erickson with an eight-yard touchdown pass to complete the drive and give Lawrence a 7-3 lead. The Vikings then put together another long scoring drive early in the second quarter. Lawrence went 80 yards in eight plays and Byrd found Trevor Spina with a 24-yard touchdown pass for a 14-3 Lawrence lead with 11:53 left in the first half.

2005 Alumni Association Award Recipients

2005 Alumni Association Award Recipients 'C' CLUB HALL OF FAME The Carleton College Alumni ‘C’ Club inducted four new members into its Hall of Fame during Reunion weekend Bill Hendren ’50, a four-year letter winner in cross-country and indoor and outdoor track and field, holds the rare distinction of serving as a team captain in all three sports. He graduated as the Carleton record-holder in the indoor half-mile and mile runs and the outdoor mile run. He won the Minnesota state cross-country meet as a freshman and helped set new Carleton Relays records in the sprint and two-mile relays, and the mile. Hendren served as president of the ‘C’ Club his senior year, helping athletics become more visible on the Carleton campus. He continues to be a leader in the running world, having founded and coached a youth track club in Ventura, California. Lydia Neilsen ’95 was Carleton’s first female NCAA individual swimming champion, capturing the 1995 100-yard breaststroke title and becoming the first Division III female to break 1:05.00 in the event. She earned three All-America citations in the 100-yard breaststroke and was an All-America honorable mention in the 200-yard breaststroke. She captured the MIAC title in the 100- and 200-yard breaststroke in 1995 and helped the 200-yard medley relay team to victory as well. She was a four-time all-conference performer and set three school records. She earned an NCAA Postgraduate Scholarship and was an Academic All-America selection as well as the first recipient of the Pat Lamb Award as Carleton’s top female student-athlete of the year. -

2014 Football Guide

2014 FOOTBALL GUIDE WWW.GAC.EDU/ATHLETICS/FB ABOUT GUSTAVUS ADOLPHUS COLLEGE For over 150 years, Gustavus Adolphus College has been educating tomorrow’s leaders to reason and act responsibly through its educational quality, accessible facilities, spiritual identity, and economic value. Quality is a word that finds its way into many colleges’ vocabularies. At Gustavus, quality is evident in its students, faculty, facilities, and programs, leading to recognition by a host of impartial critics. Forbes.com ranked Gustavus among the top 100 institutions in the country in its college report based upon several factors, including the average amount of student debt and the four-year graduation rate. The College is also included in other national guides and rankings, for example the Fiske Guide, U.S. News & World Report’s Best College Guide, and the Princeton Review, which in addition has ranked the Gustavus Dining Service among the best in the nation in each of the past two years. Gustavus students typically rank in the top third of their high school graduating class, and over 37 percent rank in the upper 10 percent of their class. Continuing their achievement at Gustavus, students find a challenging academic atmosphere where professors—98 percent of tenured faculty members have earned the highest degree in their field—teach all classes (there are no graduate assistants) and serve as academic advisers. Collaborative learning is the norm, with exceptionally motivated students invited to conduct research KING GUSTAV II ADOLF: THE NAMESAKE OF GUSTAVUS -

Selective College Admissions Five Important Tips

Selective College Admissions Five Important Tips Webinar: August 31, 2020 A few important notes: • Our priority in the college counseling program at Minnetonka High School – helping the student to find the right fit! • Our hope – to focus on going to a college that is a good match, not to focus on getting in. • Every student is encouraged to follow this application guideline – “You apply for admission to a college because you would like to go there!” Categories of selectivity – What does “selective” mean? • Most selective – acceptance rate below 15% (Harvard, Princeton, University of Chicago, Yale) • Highly selective – acceptance rate of 15-30% (Boston University, Carleton, Colgate, Emory, Georgetown) • Moderately selective – acceptances of 31-60% (Denison, Denver, Macalester, Providence, St. Olaf) • Selective – acceptances of 61-90% (Drake, Hamline, Iowa State, Marquette, Nebraska, St. Thomas) Most selective • University of Chicago – freshman class of 1,726 Applicants 34,641 Admitted 2,137 (6%) ACT mid-50th 33-35 Top 10% 99% % Graduating 95% Highly selective • Carleton College – freshman class of 525 Applicants 7,324 Admitted 1,401 (19%) ACT mid-50th 31-34 Top 10% 71% % Graduating 92% Highly selective • Boston University – freshman class of 3,156 Applicants 62,224 Admitted 11,786 (20%) ACT mid-50th 30-33 Top 10% 64% % Graduating 88% Moderately selective • University of Denver – freshman class of 1,351 Applicants 21,028 Admitted 12,345 (58%) ACT mid-50th 26-31 Top 10% 39% % Graduating 77% Selective • Drake University – freshman class of 782 Applicants 6,944 Admitted 4,697 (68%) ACT mid-50th 24-30 Top 10% 39% % Graduating 82% Early application options • Early Decision – students make a binding commitment to a first-choice institution where, if admitted, they must enroll. -

2021 Academic Catalog

Virginia Wesleyan University 2020 - 2021 Academic Catalog Undergraduate Academic Catalog 2020 - 2021 Statement of Non-Discrimination Virginia Wesleyan University is an Equal Opportunity Employer. Applicants are considered on the basis of skills, experience, and qualifications without regard to race, religion, color, creed, gender, national and ethnic origin, age, marital status, covered veteran status, sexual orientation, gender identity and expression, the presence of non-job-related medical disability, or any other legally protected status. Complaints relevant to Title IX are managed by the University’s Title IX Coordinator, Karla Rasmussen, 757.455.3316 or by emailing [email protected]. Complaints may also be reported directly to the Office for Civil Rights. This catalog is published by Virginia Wesleyan University and contains information concerning campus life, academic policies, program and course offerings, and career preparation. Students are expected to familiarize themselves with the academic policies contained in the catalog. Failure to do so does not excuse students from the requirements and regulations described herein. Disclaimer: The catalog is offered as a guide, not as a contract. It is not intended to and does not contain all policies and regulations that relate to students. The University reserves the right to make alterations in programs, course offerings, policies, and fees without prior notice. For the Online Degree Completion and Graduate Programs Catalog, please visit: vwu.edu/gradonline Virginia Wesleyan -

Report of the Working Group on Williams in The

DRAFT Report of the Working Group Williams in the World Working Group Members: Jackson Ennis, Class of 2020; Jim Kolesar ’72, Office of the President; Colin Ovitsky, Center for Learning in Action; Noah Sandstrom, Department of Psychology and Neuroscience Program; Sharifa Wright ’03, Alumni Relations. February 2020 Table of Contents: Background; Our Work; Themes; Aspirations for the next decade; Guiding Principles; Recommendations; To Close. Appendices: 1: Williams in the World charge; 2: Summary of Outreach; 3: Tactical and Tangible Ideas That Arose From Outreach; 4: Centers for Engaged Learning or Scholarship at Several Peer Schools. Background The story of Williams’s engagement in the world is long and interesting. We have space here only to summarize it. For most of its life, Williams understood itself as a “college on a hill.” Students withdrew here to contemplate higher things before heading back into the “real world.” The vocation of faculty was to pass on that knowledge, while staff supported the operation by managing the day-to-day. Over time, however, all of these lines blurred. The beginning may have come in the early 1960s, when students formed the Lehman Service Council to organize their projects in the local community. Two student-initiated programs, the Williamstown Youth Center and the Berkshire Food Project, still thrive. In the way that the student-formed Lyceum of Natural History, some of whose interactions with other cultures we now question, eventually led to the introduction of science into the curriculum, so too in time did the engagement seed germinated in the Lehman Council disperse widely through the college. -

St. Olaf College

National Institute for Learning Outcomes Assessment September 2020 Assessment in Motion: Steps Toward a More Integrated Model Susan Canon, Kelsey Thompson, and Mary Walczak St. Olaf College Foreword By Pat Hutchings As part of an ongoing effort to track and explore developments in student learning outcomes assessment, the National Institute for Learning Outcomes Assessment (NILOA) has published a number of institutional case studies which are housed on the website. We are now revisiting and updating some of those earlier examples in order to understand how campus assessment practices evolve over time—through lessons learned from local experience but also as a result of changes in institutional priorities, the launch of new initiatives, leadership transitions, and trends in the larger assessment movement. This report on St. Olaf College is an update of the original 2012 case study by Natasha Jankowski. Founded in 1874 by Norwegian Lutheran immigrants, St. Olaf College is a nationally ranked residential liberal arts college of the Evangelical Lutheran Church in America (ELCA) located in Northfield, Minnesota. St. Olaf challenges students to excel in the liberal arts, examine faith and values, and explore meaningful vocation in an inclusive, globally engaged community nourished by Lutheran tradition. St. Olaf has roughly 3,000 students, offers 49 majors and 20 concentrations (minors), and has a robust study-abroad program, with more than two-thirds of students studying abroad before graduating. St. Olaf has a long history with assessment, having participated in many different assessment initiatives over the years including a Teagle-funded project with Carleton College and Macalester College focused on using assessment findings to improve specific learning outcomes, and the Associated Colleges of the Midwest-Teagle Collegium on Student Learning exploring how students learn and acquire the knowledge and skills of a liberal education. -

The Trinity Reporter, Fall 2015

FALL 2015 The Trinity REPORTER WHEN IN ROME … Trinity campus celebrates 45 years INSIDE: BRINGING TEDx TO TRINITY: Student spearheads successful effort; THIS IS HER ‘FIGHT SONG’: Rachel Platten ’03 soars with pop music hit; BANTAMS AT THEIR BEST: Women’s varsity-eight crew wins fourth national title. CONTENTS FEATURES: This is her ‘Fight Song’: Rachel Platten ’03 soars with pop music hit; When in Rome: Trinity campus celebrates 45 years; Gender balance makes good STEM: Taking a look at Trinity and beyond. DEPARTMENTS: ALONG THE WALK; VOLUNTEER SPOTLIGHT; AROUND HARTFORD; TRINITY TREASURE; ATHLETICS; CLASS NOTES; IN MEMORY; ALUMNI EVENTS; ENDNOTE. The Trinity Reporter Vol. 46, No. 1, Fall 2015 Published by the Office of Communications, Trinity College, Hartford, CT 06106. Postage paid at Hartford, Connecticut, and additional mailing offices. The Trinity Reporter is mailed to alumni, parents, faculty, staff, and friends of Trinity College without charge. All publication rights reserved, and contents may be reproduced or reprinted only by written permission of the editor. Opinions expressed are those of the editor or contributors and do not reflect the official position of Trinity College. Postmaster: Send address changes to The Trinity Reporter, Trinity College, Hartford, CT 06106 The editor welcomes your questions and comments: Sonya Adams, Office of Communications, Trinity College, 300 Summit Street, Hartford, CT 06106 or [email protected]. www.trincoll.edu ON THE COVER Livio Pestilli, bottom right, longtime director of the Trinity College Rome Campus, meets with his “Bernini and His World” seminar class in the sacristy of the church of Santa Maria in Vallicella, also known as Chiesa Nuova. -

Gustavus Adolphus College 2013-14 Women's Basketball

Gustavus Adolphus College 2013-14 Women’s Basketball

GUSTAVUS ADOLPHUS COLLEGE “Golden Gusties” (5-2, 2-2 MIAC) at ST. OLAF COLLEGE “Oles” (5-2, 3-1 MIAC)

Game 8 Game Information
DATE: Saturday, January 4
TIME: 1:00 p.m.
SITE: Northfield, Minnesota
VENUE: Skoglund Center: Bob Gelle Court
WEB VIDEO: http://client.stretchinternet.com/client/stolaf.portal
LIVE STATS: http://client.stretchinternet.com/client/stolaf.portal

2013-14 SCHEDULE/RESULTS (DATE, OPPONENT, TIME/RESULT)
11/20 UW-RIVER FALLS, W 77-56
11/23 UM-Morris, W 70-38
11/30 UNIVERSITY OF NORTHWESTERN, W 75-53
12/4 HAMLINE UNIVERSITY*, W 75-66
12/7 CONCORDIA COLLEGE*, L 47-70
12/11 COLLEGE OF SAINT BENEDICT*, L 73-76
12/21 Augsburg College*, W 66-51
1/4 St. Olaf College*, 3:00 p.m.
1/6 MACALESTER COLLEGE*, 7:30 p.m.
1/8 Bethel University*, 7:30 p.m.
1/11 CARLETON COLLEGE* (Alumnae Day), 1:00 p.m.
1/15 UNIVERSITY OF ST. THOMAS*, 7:30 p.m.
1/18 Saint Mary’s University*, 1:30 p.m.
1/20 ST. CATHERINE UNIVERSITY*, 7:30 p.m.
1/22 Hamline University*, 7:30 p.m.
1/25 Concordia College*, 1:30 p.m.

SETTING THE SCENE
• The Gustavus Adolphus women’s basketball team will kick off 2014 with a road test against the Oles of St. Olaf College on Saturday afternoon at 1:00 p.m. on Bob Gelle Court. Gustavus enters Saturday’s game at 5-2 overall and 2-2 in the MIAC, while St.

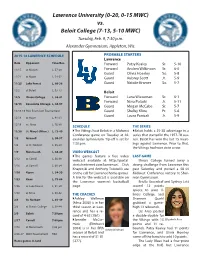

Lawrence University (0-20, 0-15 MWC) Vs. Beloit College (7-13, 5-10 MWC) Tuesday, Feb

Lawrence University (0-20, 0-15 MWC) vs. Beloit College (7-13, 5-10 MWC) Tuesday, Feb. 9, 7:30 p.m. Alexander Gymnasium, Appleton, Wis.

PROBABLE STARTERS
Lawrence: Forward Patsy Kealey, Sr., 5-10; Forward Andrea Wilkinson, Sr., 6-0; Guard Olivia Hoesley, So., 5-8; Guard Aubrey Scott, Jr., 5-9; Guard Natalie Kramer, So., 5-7
Beloit: Forward Lana Wieseman, Sr., 6-1; Forward Nora Polaski, Jr., 5-11; Guard Megan McCabe, Sr., 5-7; Guard Shelby Kline, Fr., 5-6; Guard Laura Panicali, Jr., 5-9

2015-16 LAWRENCE SCHEDULE (Date, Opponent, Time/Res.)
11/17 at Marian, L, 57-28
11/21 at Ripon, L, 74-57
11/25 Lake Forest, L, 60-36
12/2 at Beloit, L, 93-73
12/5 Illinois College, L, 56-41
12/15 Concordia Chicago, L, 68-57
12/18-19 Post Exam Jam Tournament
12/18 at Hope, L, 91-43
12/19 vs. Alma, L, 76-30
12/30 St. Mary’s (Minn.), L, 73-49
1/2 Grinnell, L, 50-37
1/6 at St. Norbert, L, 85-29
1/9 Monmouth, L, 68-49
1/12 at Carroll, L, 56-30

SCHEDULE
The Vikings host Beloit in a Midwest Conference game on Tuesday at Alexander Gymnasium. Tip-off is set for 7:30 p.m.

THE SERIES
Beloit holds a 35-28 advantage in a series that started in the 1977-78 season. Beloit has won the last 15 meetings against Lawrence. Prior to that, the Vikings had won six in a row.

VIDEO WEBCAST
The games feature a free video webcast available at: http://portal.

LAST GAME

Colleges & Universities

Bishop Watterson High School Students Have Been Accepted at These Colleges and Universities: Art Institute of Chicago; Fordham University; Adrian College; University of Cincinnati; Franciscan University of Steubenville; University of Akron; Cincinnati Art Institute; Franklin and Marshall College; University of Alabama; The Citadel; Franklin University; Albion College; Claremont McKenna College; Furman University; Albertus Magnus College; Clemson University; Gannon University; Allegheny College; Cleveland Inst. Of Art; George Mason University; Alma College; Cleveland State University; George Washington University; American Academy of Dramatic Arts; Coastal Carolina University; Georgetown University; American University; College of Charleston; Georgia Southern University; Amherst College; University of Colorado at Boulder; Georgia Institute of Technology; Anderson University (IN); Colorado College; University of Georgia; Antioch College; Colorado State University; Gettysburg College; Arizona State University; Colorado School of Mines; Goshen College; University of Arizona; Columbia College (Chicago); Grinnell College (IA); University of Arkansas; Columbia University; Hampshire College (MA); Art Academy of Cincinnati; Columbus College of Art & Design; Hamilton College; The Art Institute of California-Hollywood; Columbus State Community College; Hampton University; Ashland University; Converse College (SC); Hanover College (IN); Assumption College; Cornell University; Hamilton College; Augustana College; Creighton University; Harvard University; Aurora University; University of the Cumberlands; Haverford