UvA-DARE (Digital Academic Repository)

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

What Is XKeyscore, and Can It 'Eavesdrop on Everyone, Everywhere'? (+Video) - CSMonitor.com

8/3/13 What is XKeyscore, and can it 'eavesdrop on everyone, everywhere'? (+video) - CSMonitor.com The Christian Science Monitor XKeyscore is apparently a tool the NSA uses to sift through massive amounts of data. Critics say it allows the NSA to dip into people's 'most private thoughts' – a claim key lawmakers reject. This photo shows an aerial view of the NSA's Utah Data Center in Bluffdale, Utah. The long, squat buildings span 1.5 million square feet, and are filled with super powered computers designed to store massive amounts of information gathered secretly from phone calls and emails. (Rick Bowmer/AP/File) By Mark Clayton, Staff writer / August 1, 2013 at 9:38 pm EDT Top-secret documents leaked to The Guardian newspaper have set off a new round of debate over National Security Agency surveillance of electronic communications, with some cyber experts saying the trove reveals new and more dangerous means of digital snooping, while some members of Congress suggested that interpretation was incorrect. The NSA's collection of "metadata" – basic call logs of phone numbers, time of the call, and duration of calls – is now well-known, with the Senate holding a hearing on the subject this week. But the tools discussed in the new Guardian documents apparently go beyond mere collection, allowing the agency to sift through the haystack of digital global communications to find the needle of terrorist activity. (www.csmonitor.com/layout/set/print/USA/2013/0801/What-is-XKeyscore-and-can-it-eavesdrop-on-everyone-everywhere-video) -

Advocating for Basic Constitutional Search Protections to Apply to Cell Phones from Eavesdropping and Tracking by Government and Corporate Entities

University of Central Florida STARS HIM 1990-2015 2013 Brave New World Reloaded: Advocating for Basic Constitutional Search Protections to Apply to Cell Phones from Eavesdropping and Tracking by Government and Corporate Entities Mark Berrios-Ayala University of Central Florida Part of the Legal Studies Commons Find similar works at: https://stars.library.ucf.edu/honorstheses1990-2015 University of Central Florida Libraries http://library.ucf.edu This Open Access is brought to you for free and open access by STARS. It has been accepted for inclusion in HIM 1990-2015 by an authorized administrator of STARS. For more information, please contact [email protected]. Recommended Citation Berrios-Ayala, Mark, "Brave New World Reloaded: Advocating for Basic Constitutional Search Protections to Apply to Cell Phones from Eavesdropping and Tracking by Government and Corporate Entities" (2013). HIM 1990-2015. 1519. https://stars.library.ucf.edu/honorstheses1990-2015/1519 BRAVE NEW WORLD RELOADED: ADVOCATING FOR BASIC CONSTITUTIONAL SEARCH PROTECTIONS TO APPLY TO CELL PHONES FROM EAVESDROPPING AND TRACKING BY THE GOVERNMENT AND CORPORATE ENTITIES by MARK KENNETH BERRIOS-AYALA A thesis submitted in partial fulfillment of the requirements for the Honors in the Major Program in Legal Studies in the College of Health and Public Affairs and in The Burnett Honors College at the University of Central Florida Orlando, Florida Fall Term 2013 Thesis Chair: Dr. Abby Milon ABSTRACT Imagine a world where someone’s personal information is constantly compromised, where federal government entities AKA Big Brother always knows what anyone is Googling, who an individual is texting, and their emoticons on Twitter. -

The NSA's PRISM Program and the New EU Privacy Regulation: Why U.S. Companies With a Presence in the EU Could Be in Trouble

American University Business Law Review Volume 3 | Issue 2 Article 5 2013 The NSA's PRISM Program And The New EU Privacy Regulation: Why U.S. Companies With A Presence In The EU Could Be In Trouble Juhi Tariq American University Washington College of Law Follow this and additional works at: http://digitalcommons.wcl.american.edu/aublr Part of the International Law Commons, and the Internet Law Commons Recommended Citation Tariq, Juhi "The NSA's PRISM Program And The New EU Privacy Regulation: Why U.S. Companies With A Presence In The EU Could Be In Trouble," American University Business Law Review, Vol. 3, No. 2 (2018). Available at: http://digitalcommons.wcl.american.edu/aublr/vol3/iss2/5 This Note is brought to you for free and open access by the Washington College of Law Journals & Law Reviews at Digital Commons @ American University Washington College of Law. It has been accepted for inclusion in American University Business Law Review by an authorized editor of Digital Commons @ American University Washington College of Law. For more information, please contact [email protected]. NOTE THE NSA'S PRISM PROGRAM AND THE NEW EU PRIVACY REGULATION: WHY U.S. COMPANIES WITH A PRESENCE IN THE EU COULD BE IN TROUBLE JUHI TARIQ* Recent revelations about a clandestine data surveillance program operated by the NSA, Planning Tool for Resource Integration, Synchronization, and Management ("PRISM"), and a stringent proposed European Union ("EU") data protection regulation, will place U.S. companies with a business presence in EU member states in a problematic juxtaposition. The EU Proposed General Data Protection Regulation stipulates that a company can be fined up to two percent of its global revenue for misuse of users' data and requires the consent of data subjects prior to access. -

Ashley Deeks*

ARTICLE An International Legal Framework for Surveillance ASHLEY DEEKS* Edward Snowden’s leaks laid bare the scope and breadth of the electronic surveillance that the U.S. National Security Agency and its foreign counterparts conduct. Suddenly, foreign surveillance is understood as personal and pervasive, capturing the communications not only of foreign leaders but also of private citizens. Yet to the chagrin of many state leaders, academics, and foreign citizens, international law has had little to say about foreign surveillance. Until recently, no court, treaty body, or government had suggested that international law, including basic privacy protections in human rights treaties, applied to purely foreign intelligence collection. This is now changing: Several UN bodies, judicial tribunals, U.S. corporations, and individuals subject to foreign surveillance are pressuring states to bring that surveillance under tighter legal control. This Article tackles three key, interrelated puzzles associated with this sudden transformation. First, it explores why international law has had so little to say about how, when, and where governments may spy on other states’ nationals. Second, it draws on international relations theory to argue that the development of new international norms regarding surveillance is both likely and essential. Third, it identifies six process-driven norms that states can and should adopt to ensure meaningful privacy restrictions on international surveillance without unduly harming their legitimate national security interests. These norms, which include limits on the use of collected data, periodic reviews of surveillance authorizations, and active oversight by neutral bodies, will increase the transparency, accountability, and legitimacy of foreign surveillance. 
This procedural approach challenges the limited emerging scholarship on surveillance, which urges states to apply existing — but vague and contested — substantive human rights norms to complicated, clandestine practices. -

Mass Surveillance

Mass Surveillance What are the risks for the citizens and the opportunities for the European Information Society? What are the possible mitigation strategies? Part 1 - Risks and opportunities raised by the current generation of network services and applications Study IP/G/STOA/FWC-2013-1/LOT 9/C5/SC1 January 2015 PE 527.409 STOA - Science and Technology Options Assessment The STOA project “Mass Surveillance Part 1 – Risks, Opportunities and Mitigation Strategies” was carried out by TECNALIA Research and Investigation in Spain. AUTHORS Arkaitz Gamino Garcia Concepción Cortes Velasco Eider Iturbe Zamalloa Erkuden Rios Velasco Iñaki Eguía Elejabarrieta Javier Herrera Lotero Jason Mansell (Linguistic Review) José Javier Larrañeta Ibañez Stefan Schuster (Editor) The authors acknowledge and would like to thank the following experts for their contributions to this report: Prof. Nigel Smart, University of Bristol; Matteo E. Bonfanti PhD, Research Fellow in International Law and Security, Scuola Superiore Sant’Anna Pisa; Prof. Fred Piper, University of London; Caspar Bowden, independent privacy researcher; Maria Pilar Torres Bruna, Head of Cybersecurity, Everis Aerospace, Defense and Security; Prof. Kenny Paterson, University of London; Agustín Martin and Luis Hernández Encinas, Tenured Scientists, Department of Information Processing and Cryptography (Cryptology and Information Security Group), CSIC; Alessandro Zanasi, Zanasi & Partners; Fernando Acero, Expert on Open Source Software; Luigi Coppolino, Università degli Studi di Napoli; Marcello Antonucci, EZNESS srl; Rachel Oldroyd, Managing Editor of The Bureau of Investigative Journalism; Peter Kruse, Founder of CSIS Security Group A/S; Ryan Gallagher, investigative Reporter of The Intercept; Capitán Alberto Redondo, Guardia Civil; Prof. Bart Preneel, KU Leuven; Raoul Chiesa, Security Brokers SCpA, CyberDefcon Ltd.; Prof. -

PRIVACY INTERNATIONAL Claimant

IN THE INVESTIGATORY POWERS TRIBUNAL BETWEEN: PRIVACY INTERNATIONAL Claimant -and- (1) SECRETARY OF STATE FOR FOREIGN AND COMMONWEALTH AFFAIRS (2) GOVERNMENT COMMUNICATION HEADQUARTERS Defendants AMENDED STATEMENT OF GROUNDS INTRODUCTION 1. Privacy International is a leading UK charity working on the right to privacy at an international level. It focuses, in particular, on challenging unlawful acts of surveillance. 2. The Secretary of State for the Foreign and Commonwealth Office is the minister responsible for oversight of the Government Communication Headquarters (“GCHQ”), the UK’s signals intelligence agency. 3. These proceedings concern the infection by GCHQ of individuals’ computers and mobile devices on a widespread scale to gain access either to the functions of those devices – for instance activating a camera or microphone without the user’s consent – or to obtain stored data. Recently-disclosed documents suggest GCHQ has developed technology to infect individual devices, and in conjunction with the United States National Security Agency (“NSA”), has the capability to deploy that technology to potentially millions of computers by using malicious software (“malware”). GCHQ has also developed malware, known as “WARRIOR PRIDE”, specifically for infecting mobile phones. 4. The use of such techniques is potentially far more intrusive than any other current surveillance technique, including the interception of communications. At a basic level, the profile information supplied by a user in registering a device for various purposes may include details of his location, age, gender, marital status, income, ethnicity, sexual orientation, education, and family.
More fundamentally, access to stored content (such as documents, photos, videos, web history, or address books), not to mention the logging of keystrokes or the covert and unauthorised photography or recording of the user and those around him, will produce further such information, as will the ability to track the precise location of a user of a mobile device. -

The US National Security Agency (NSA) Surveillance Programmes (PRISM) and Foreign Intelligence Surveillance Act (FISA) Activities and Their Impact on EU Citizens' Fundamental Rights

DIRECTORATE GENERAL FOR INTERNAL POLICIES POLICY DEPARTMENT C: CITIZENS' RIGHTS AND CONSTITUTIONAL AFFAIRS The US National Security Agency (NSA) surveillance programmes (PRISM) and Foreign Intelligence Surveillance Act (FISA) activities and their impact on EU citizens' fundamental rights NOTE Abstract In light of the recent PRISM-related revelations, this briefing note analyzes the impact of US surveillance programmes on European citizens’ rights. The note explores the scope of surveillance that can be carried out under the US FISA Amendment Act 2008, and related practices of the US authorities which have very strong implications for EU data sovereignty and the protection of European citizens’ rights. PE xxx.xxx EN AUTHOR(S) Mr Caspar BOWDEN (Independent Privacy Researcher) Introduction by Prof. Didier BIGO (King’s College London / Director of the Centre d’Etudes sur les Conflits, Liberté et Sécurité – CCLS, Paris, France). Copy-Editing: Dr. Amandine SCHERRER (Centre d’Etudes sur les Conflits, Liberté et Sécurité – CCLS, Paris, France) Bibliographical assistance : Wendy Grossman RESPONSIBLE ADMINISTRATOR Mr Alessandro DAVOLI Policy Department Citizens' Rights and Constitutional Affairs European Parliament B-1047 Brussels E-mail: [email protected] LINGUISTIC VERSIONS Original: EN ABOUT THE EDITOR To contact the Policy Department or to subscribe to its monthly newsletter please write to: [email protected] Manuscript completed in MMMMM 200X. Brussels, © European Parliament, 200X. This document is available on the Internet at: http://www.europarl.europa.eu/studies DISCLAIMER The opinions expressed in this document are the sole responsibility of the author and do not necessarily represent the official position of the European Parliament. -

Citizenfour Jimena Reyes Jimena Reyes, a Lawyer from the Paris Bar, Has Been FIDH's Director for the Americas Since June 2003

Citizenfour Jimena Reyes Jimena Reyes, a lawyer from the Paris Bar, has been FIDH's director for the Americas since June 2003. Since then, she has investigated human rights violations and public policies of 17 countries in the Americas, and she has also contributed to the drafting of more than 30 human rights reports. In 2009, she investigated the scandal of illegal activities of the Colombian secret services that eventually led to its closing in 2011. Ms. Reyes has also coordinated a case related to illegal interception of NGO communication in Belgium. She has been very active in the promotion of a new UN mandate on the right to privacy following the events of the Edward Snowden scandal. Ferran Josep Lloveras Ferran J. Lloveras, political scientist and international development expert, joined OHCHR in August 2014, where he works on external relations and cooperation, as well as focussing on civil and political rights. Previously he worked for the European Commission, on Education in development cooperation. Before that he held several positions in UNESCO, where he worked on Education in Emergencies in Palestine (2011-2012), and on Strategic Planning, Education and UN reform at the Headquarters in Paris and on several field assignments (2005-2011). He also worked for the General Secretariat for Youth of the government of Catalonia (2002-2005), and for the European Bureau for Conscientious Objection, to defend the human right to conscientious objection to military service (1999-2002). Afsané Bassir-Pour Director of the United Nations Regional Information Centre for Western Europe (UNRIC) in Brussels since 2006, Ms Bassir-Pour was the diplomatic correspondent of the French daily Le Monde from 1988 to 2006. -

Citizenfour Discussion Guide

www.influencefilmclub.com Citizenfour Discussion Guide Director: Laura Poitras Year: 2015 Time: 114 min You might know this director from: The Oath (2010) My Country, My Country (2006) Flag Wars (2003) FILM SUMMARY Director Laura Poitras was in the middle of her third documentary on post-9/11 America when she received an email from “Citizenfour,” an individual claiming to possess insider information on the wide-scale surveillance schemes of the U.S. government. Rather than brushing the message off as bogus, she pursued this mysterious mailer, embroiling herself in one of the greatest global shockwaves of this millennium thus far. Teaming up with journalist Glenn Greenwald of “The Guardian,” Poitras began her journey. The two travel to Hong Kong, where they meet “Citizenfour,” an infrastructure analyst named Edward Snowden working inside the National Security Agency. Joined by fellow Guardian colleague Ewen MacAskill, the four set up camp in Snowden’s hotel room, where over the course of the following eight days he reveals a shocking protocol in effect that will uproot the way citizens across the world view their leaders in the days to come. Snowden does not wish to remain anonymous, and yet he insists “I’m not the story.” In his mind the issues of liberty, justice, freedom, and democracy are at stake, and who he is has very little bearing on the intense implications of the mass surveillance in effect worldwide. Snowden reminds us, “It’s not science fiction. This is happening right now,” and at times the extent of information is just too massive to grasp, especially for the journalists involved. -

NSA ANT Catalog PDF from EFF.Org

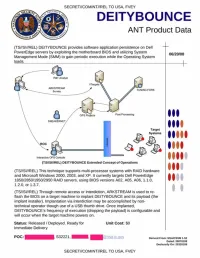

SECRET//COMINT//REL TO USA, FVEY DEITYBOUNCE ANT Product Data (TS//SI//REL) DEITYBOUNCE provides software application persistence on Dell PowerEdge servers by exploiting the motherboard BIOS and utilizing System Management Mode (SMM) to gain periodic execution while the Operating System loads. [Diagram; legible labels: TUMI KG FORK, Post Processing, Target Systems] (TS//SI//REL) DEITYBOUNCE Extended Concept of Operations (TS//SI//REL) This technique supports multi-processor systems with RAID hardware and Microsoft Windows 2000, 2003, and XP. It currently targets Dell PowerEdge 1850/2850/1950/2950 RAID servers, using BIOS versions A02, A05, A06, 1.1.0, 1.2.0, or 1.3.7. (TS//SI//REL) Through remote access or interdiction, ARKSTREAM is used to re-flash the BIOS on a target machine to implant DEITYBOUNCE and its payload (the implant installer). Implantation via interdiction may be accomplished by a non-technical operator through use of a USB thumb drive. Once implanted, DEITYBOUNCE's frequency of execution (dropping the payload) is configurable and will occur when the target machine powers on. Status: Released / Deployed. Ready for Immediate Delivery. Unit Cost: $0. POC: S32221. Derived From: NSA/CSSM 1-52 Dated: 20070108 Declassify On: 20320108 SECRET//COMINT//REL TO USA, FVEY
TOP SECRET//COMINT//REL TO USA, FVEY IRONCHEF ANT Product Data 07/14/08 (TS//SI//REL) IRONCHEF provides access persistence to target systems by exploiting the motherboard BIOS and utilizing System Management Mode (SMM) to communicate with a hardware implant that provides two-way RF communication. [Diagram; legible labels: CRUMPET Covert Network (CCN), Closed Network, STRAITBIZARRE nodes, UNITEDRAKE computer and server nodes, CCN server and computer nodes] (TS//SI//REL) IRONCHEF Extended Concept of Operations (TS//SI//REL) This technique supports the HP Proliant 380DL G5 server, onto which a hardware implant has been installed that communicates over the I2C Interface (WAGONBED). -

Download Legal Document

Case 1:15-cv-00662-TSE Document 168-33 Filed 12/18/18 Page 1 of 15 Wikimedia Foundation v. NSA No. 15-cv-0062-TSE (D. Md.) Plaintiff’s Exhibit 29 12/16/2018 XKEYSCORE: NSA’s Google for the World’s Private Communications Morgan Marquis-Boire, Glenn Greenwald, Micah Lee July 1 2015, 10:49 a.m. One of the National Security Agency’s most powerful tools of mass surveillance makes tracking someone’s Internet usage as easy as entering an email address, and provides no built-in technology to prevent abuse. Today, The Intercept is publishing 48 top-secret and other classified documents about XKEYSCORE dated up to 2013, which shed new light on the breadth, depth and functionality of this critical spy system — one of the largest releases yet of documents provided by NSA whistleblower Edward Snowden. The NSA’s XKEYSCORE program, first revealed by The Guardian, sweeps up countless people’s Internet searches, emails, documents, usernames and passwords, and other private communications. XKEYSCORE is fed a constant flow of Internet traffic from fiber optic cables that make up the backbone of the world’s communication network, among other sources, for processing. As of 2008, the surveillance system boasted approximately 150 field sites in the United States, Mexico, Brazil, United Kingdom, Spain, Russia, Nigeria, Somalia, Pakistan, Japan, Australia, as well as many other countries, consisting of over 700 servers. -

Whistle-Blowing in the Wind

ISSN (Online) - 2349-8846 Whistle-blowing in the Wind SRINIVASAN RAMANI, STANLY JOHNY Vol. 48, Issue No. 28, 13 Jul, 2013 Srinivasan Ramani ([email protected]) is Senior Assistant Editor with the Economic and Political Weekly. Stanly Johny ([email protected]) is a journalist based in New Delhi. Only the small nations of Latin America, committed to “Socialism of the 21st century”, stand up to the might of the United States, by offering to provide asylum to whistleblower Edward Snowden. The rest of the world’s state establishments show their inability to defy the US despite great sympathy for Snowden among their citizens (including within the US). In an ideal world, a whistleblower like Edward Snowden – an insider who threw light on the dystopian mass surveillance conducted by the US’ secretive security agencies – would have been regarded as the truth-teller he wanted to be known as. He would have been provided sanctuary by any government that would have been outraged at the US’ intrusive surveillance apparatus. The world’s other nations would have offered sympathy and demanded that the US roll back the programme, and Snowden would have been hailed for his catalytic efforts. In the real world that is clearly dominated by a lone superpower to which every other nation regardless of standing or power has some entwined interest-related relationship, Snowden is a headache for the state establishments. With the US cancelling his passport, pressing criminal charges and seeking to imprison Snowden, the state establishments elsewhere – irrespective of being the US’ formal allies (which are invariably targets of the NSA’s surveillance) – have been coy with regard to the question of his asylum.