Conjugate Priors

Recommended publications

The Exponential Family 1 Definition

The Exponential Family. David M. Blei, Columbia University, November 9, 2016. The exponential family is a class of densities (Brown, 1986). It encompasses many familiar forms of likelihoods, such as the Gaussian, Poisson, multinomial, and Bernoulli. It also encompasses their conjugate priors, such as the Gamma, Dirichlet, and beta.

1 Definition. A probability density in the exponential family has this form

$$p(x \mid \eta) = h(x) \exp\{\eta^\top t(x) - a(\eta)\}, \quad (1)$$

where $\eta$ is the natural parameter; $t(x)$ are sufficient statistics; $h(x)$ is the "base measure;" $a(\eta)$ is the log normalizer. Examples of exponential family distributions include Gaussian, gamma, Poisson, Bernoulli, multinomial, Markov models. Examples of distributions that are not in this family include student-t, mixtures, and hidden Markov models. (We are considering these families as distributions of data. The latent variables are implicitly marginalized out.) The statistic $t(x)$ is called sufficient because the probability as a function of $\eta$ only depends on $x$ through $t(x)$. The exponential family has fundamental connections to the world of graphical models (Wainwright and Jordan, 2008). For our purposes, we'll use exponential families as components in directed graphical models, e.g., in the mixtures of Gaussians. The log normalizer ensures that the density integrates to 1,

$$a(\eta) = \log \int h(x) \exp\{\eta^\top t(x)\} \, d\nu(x). \quad (2)$$

This is the negative logarithm of the normalizing constant. The function $h(x)$ can be a source of confusion. One way to interpret $h(x)$ is the (unnormalized) distribution of $x$ when $\eta = 0$. It might involve statistics of $x$ that are not in $t(x)$, i.e., that do not vary with the natural parameter.
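As an illustration of the density form in equation (1) and the log normalizer in equation (2), the following minimal sketch writes the Poisson distribution in exponential-family form. The choices $\eta = \log \lambda$, $t(x) = x$, $h(x) = 1/x!$, and $a(\eta) = e^{\eta}$ are the standard Poisson parameterization rather than something taken from the excerpt, and the function names are hypothetical.

```python
import math

def poisson_pmf(x, lam):
    """Textbook Poisson pmf with rate lam."""
    return math.exp(-lam) * lam**x / math.factorial(x)

def expfam_pmf(x, eta):
    """Same pmf written as h(x) * exp(eta * t(x) - a(eta)).

    Assumed parameterization: eta = log(lam), t(x) = x,
    h(x) = 1 / x!, a(eta) = exp(eta).
    """
    h = 1.0 / math.factorial(x)
    t = x
    a = math.exp(eta)
    return h * math.exp(eta * t - a)

def log_normalizer_numeric(eta, x_max=100):
    """Equation (2) with the integral replaced by a truncated sum over x."""
    total = sum(math.exp(eta * x) / math.factorial(x) for x in range(x_max + 1))
    return math.log(total)

lam = 3.5
eta = math.log(lam)
max_pmf_gap = max(abs(poisson_pmf(x, lam) - expfam_pmf(x, eta)) for x in range(20))
a_numeric = log_normalizer_numeric(eta)
a_closed_form = math.exp(eta)
```

Under these assumptions the two pmf functions should agree up to floating-point error, and the truncated sum should approximate the closed-form log normalizer $e^{\eta} = \lambda$.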

Machine Learning Conjugate Priors and Monte Carlo Methods

CPSC 540: Machine Learning. Conjugate Priors and Monte Carlo Methods. Mark Schmidt, University of British Columbia, Winter 2016. Sections: Hierarchical Bayes for Non-IID Data; Conjugate Priors; Monte Carlo Methods.

Admin: Nothing exciting?

Last Time: Bayesian Statistics. In Bayesian statistics we work with posterior over parameters,

$$p(\theta \mid x, \alpha, \beta) = \frac{p(x \mid \theta)\, p(\theta \mid \alpha, \beta)}{p(x \mid \alpha, \beta)}.$$

We discussed empirical Bayes, where you optimize prior using marginal likelihood,

$$\operatorname*{argmax}_{\alpha,\beta} p(x \mid \alpha, \beta) = \operatorname*{argmax}_{\alpha,\beta} \int_{\theta} p(x \mid \theta)\, p(\theta \mid \alpha, \beta)\, d\theta.$$

Can be used to optimize $\lambda_j$, polynomial degree, RBF $\sigma_i$, polynomial vs. RBF, etc. We also considered hierarchical Bayes, where you put a prior on the prior,

$$p(\alpha, \beta \mid x, \gamma) = \frac{p(x \mid \alpha, \beta)\, p(\alpha, \beta \mid \gamma)}{p(x \mid \gamma)}.$$

But is the hyper-prior really needed?

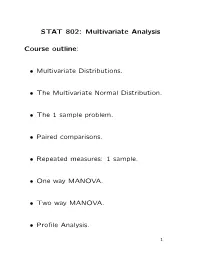

STAT 802: Multivariate Analysis Course Outline

STAT 802: Multivariate Analysis. Course outline: Multivariate Distributions; The Multivariate Normal Distribution; The 1 sample problem; Paired comparisons; Repeated measures: 1 sample; One way MANOVA; Two way MANOVA; Profile Analysis; Multivariate Multiple Regression; Discriminant Analysis; Clustering; Principal Components; Factor analysis; Canonical Correlations.

Basic structure of typical multivariate data set: Case by variables: data in matrix. Each row is a case, each column is a variable. Example: Fisher's iris data: 5 rows of 150 by 5 matrix:

| Case # | Variety | Sepal Length | Sepal Width | Petal Length | Petal Width |
|---|---|---|---|---|---|
| 1 | Setosa | 5.1 | 3.5 | 1.4 | 0.2 |
| 2 | Setosa | 4.9 | 3.0 | 1.4 | 0.2 |
| ... | | | | | |
| 51 | Versicolor | 7.0 | 3.2 | 4.7 | 1.4 |
| ... | | | | | |

Usual model: rows of data matrix are independent random variables. Vector valued random variable: function $X : \Omega \to \mathbb{R}^p$ such that, writing $X = (X_1, \ldots, X_p)^T$, $P(X_1 \le x_1, \ldots, X_p \le x_p)$ is defined for any constants $(x_1, \ldots, x_p)$. Cumulative Distribution Function (CDF) of $X$: function $F_X$ on $\mathbb{R}^p$ defined by

$$F_X(x_1, \ldots, x_p) = P(X_1 \le x_1, \ldots, X_p \le x_p).$$

Defn: Distribution of rv $X$ is absolutely continuous if there is a function $f$ such that

$$P(X \in A) = \int_A f(x)\, dx \quad (1)$$

for any (Borel) set $A$. This is a $p$ dimensional integral in general. Equivalently

$$F(x_1, \ldots, x_p) = \int_{-\infty}^{x_1} \cdots \int_{-\infty}^{x_p} f(y_1, \ldots, y_p)\, dy_p \cdots dy_1.$$

Defn: Any $f$ satisfying (1) is a density of $X$. For most $x$, $F$ is differentiable at $x$ and

$$\frac{\partial^p F(x)}{\partial x_1 \cdots \partial x_p} = f(x).$$

Building Multivariate Models. Basic tactic: specify density of $X = (X_1, \ldots, X_p)^T$. Tools: marginal densities, conditional densities, independence, transformation.

Polynomial Singular Value Decompositions of a Family of Source-Channel Models

Polynomial Singular Value Decompositions of a Family of Source-Channel Models. Citation: Makur, Anuran and Lizhong Zheng. "Polynomial Singular Value Decompositions of a Family of Source-Channel Models." IEEE Transactions on Information Theory 63, 12 (December 2017): 7716 - 7728. © 2017 IEEE. As Published: http://dx.doi.org/10.1109/tit.2017.2760626. Publisher: Institute of Electrical and Electronics Engineers (IEEE). Version: Author's final manuscript. Citable link: https://hdl.handle.net/1721.1/131019. Terms of Use: Creative Commons Attribution-Noncommercial-Share Alike. Detailed Terms: http://creativecommons.org/licenses/by-nc-sa/4.0/

Anuran Makur, Student Member, IEEE, and Lizhong Zheng, Fellow, IEEE.

Abstract: In this paper, we show that the conditional expectation operators corresponding to a family of source-channel models, defined by natural exponential families with quadratic variance functions and their conjugate priors, have orthonormal polynomials as singular vectors. These models include the Gaussian channel with Gaussian source, the Poisson channel with gamma source, and the binomial channel with beta source. To derive the singular vectors of these models, we prove and employ the equivalent condition that their conditional moments are strictly degree preserving polynomials.

… interested in a particular subclass of one-parameter exponential families known as natural exponential families with quadratic variance functions (NEFQVF). So, we define natural exponential families next.

Definition 1 (Natural Exponential Family). Given a measurable space $(\mathcal{Y}, \mathcal{B}(\mathcal{Y}))$ with a σ-finite measure $\mu$, where $\mathcal{Y} \subseteq \mathbb{R}$ and $\mathcal{B}(\mathcal{Y})$ denotes the Borel σ-algebra on $\mathcal{Y}$, the parametrized …

A Compendium of Conjugate Priors

A Compendium of Conjugate Priors. Daniel Fink, Environmental Statistics Group, Department of Biology, Montana State University, Bozeman, MT 59717. May 1997.

Abstract: This report reviews conjugate priors and priors closed under sampling for a variety of data generating processes where the prior distributions are univariate, bivariate, and multivariate. The effects of transformations on conjugate prior relationships are considered and cases where conjugate prior relationships can be applied under transformations are identified. Univariate and bivariate prior relationships are verified using Monte Carlo methods.

1 Introduction. Experimenters are often in the position of having collected some data from which they desire to make inferences about the process that produced that data. Bayes' theorem provides an appealing approach to solving such inference problems. Bayes' theorem,

$$g(\theta \mid x_1, \ldots, x_n) = \frac{\pi(\theta)\, L(\theta \mid x_1, \ldots, x_n)}{\int \pi(\theta)\, L(\theta \mid x_1, \ldots, x_n)\, d\theta}, \quad (1)$$

is commonly interpreted in the following way. We want to make some sort of inference on the unknown parameter(s), $\theta$, based on our prior knowledge of $\theta$ and the data collected, $x_1, \ldots, x_n$. Our prior knowledge is encapsulated by the probability distribution on $\theta$, $\pi(\theta)$. The data that has been collected is combined with our prior through the likelihood function, $L(\theta \mid x_1, \ldots, x_n)$. The normalized product of these two components yields a probability distribution of $\theta$ conditional on the data. This distribution, $g(\theta \mid x_1, \ldots, x_n)$, is known as the posterior distribution of $\theta$. Bayes' theorem is easily extended to cases where $\theta$ is multivariate, a vector of parameters.
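To make the "normalized product" reading of Bayes' theorem concrete, here is a small sketch that normalizes prior times likelihood on a grid and compares the result with a conjugate closed form. The Beta prior, the binomial-type likelihood, the specific numbers, and all function names are assumptions chosen for illustration; they are not taken from the report.

```python
import math

def beta_pdf(theta, a, b):
    """Density of Beta(a, b) at theta in (0, 1)."""
    log_norm = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
    return math.exp(log_norm + (a - 1) * math.log(theta) + (b - 1) * math.log(1 - theta))

def grid_posterior_mean(a, b, k, n, m=20000):
    """Posterior mean of theta from prior * likelihood on a midpoint grid."""
    thetas = [(i + 0.5) / m for i in range(m)]
    # Unnormalized posterior: Beta prior density times the likelihood of
    # k successes in n trials (the binomial coefficient cancels on normalizing).
    weights = [beta_pdf(t, a, b) * t**k * (1 - t) ** (n - k) for t in thetas]
    total = sum(weights)  # proportional to the denominator integral
    return sum(t * w for t, w in zip(thetas, weights)) / total

def conjugate_posterior(a, b, k, n):
    """Closed-form update: Beta(a, b) prior gives Beta(a + k, b + n - k)."""
    return a + k, b + n - k

a, b, k, n = 2.0, 3.0, 7, 10  # hypothetical prior and data
a_post, b_post = conjugate_posterior(a, b, k, n)
closed_form_mean = a_post / (a_post + b_post)
numeric_mean = grid_posterior_mean(a, b, k, n)
```

The point of the comparison is that, for a conjugate pair, the integral in the denominator never has to be computed: the posterior stays in the prior's family and only the hyperparameters change.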

Multivariate Skew-Symmetric Distributions

Applied Mathematics Letters 16 (2003) 643-646, Pergamon, www.elsevier.com/locate/aml. Multivariate Skew-Symmetric Distributions. A. K. Gupta, Department of Mathematics and Statistics, Bowling Green State University, Bowling Green, OH 43403, U.S.A., [email protected]. F.-C. Chang, Department of Applied Mathematics, National Sun Yat-sen University, Kaohsiung, Taiwan 804, R.O.C., [email protected]. (Received November 2001; revised and accepted October 2002)

Abstract: In this paper, a class of multivariate skew distributions has been explored. Then its properties are derived. The relationship between the multivariate skew normal and the Wishart distribution is also studied. © 2003 Elsevier Science Ltd. All rights reserved.

Keywords: Skew normal distribution, Wishart distribution, Moment generating function, Moments, Skewness.

1. INTRODUCTION. The multivariate skew normal distribution has been studied by Gupta and Kollo [1] and Azzalini and Dalla Valle [2], and its applications are given in [3]. This class of distributions includes the normal distribution and has some properties like the normal and yet is skew. It is useful in studying robustness. Following Gupta and Kollo [1], the random vector $X$ ($p \times 1$) is said to have a multivariate skew normal distribution if it is continuous and its density function (p.d.f.) is given by

$$f_X(x) = 2\,\phi_p(x; \Sigma)\,\Phi(\alpha' x), \quad x \in \mathbb{R}^p, \quad (1.1)$$

where $\Sigma > 0$, $\alpha \in \mathbb{R}^p$, and $\phi_p(x, \Sigma)$ is the $p$-dimensional normal density with zero mean vector and covariance matrix $\Sigma$, and $\Phi(\cdot)$ is the standard normal distribution function (c.d.f.).

Bayesian Filtering: from Kalman Filters to Particle Filters, and Beyond ZHE CHEN

Bayesian Filtering: From Kalman Filters to Particle Filters, and Beyond. Zhe Chen. (Manuscript.)

Abstract: In this self-contained survey/review paper, we systematically investigate the roots of Bayesian filtering as well as its rich leaves in the literature. Stochastic filtering theory is briefly reviewed with emphasis on nonlinear and non-Gaussian filtering. Following the Bayesian statistics, different Bayesian filtering techniques are developed given different scenarios. Under linear quadratic Gaussian circumstance, the celebrated Kalman filter can be derived within the Bayesian framework. Optimal/suboptimal nonlinear filtering techniques are extensively investigated. In particular, we focus our attention on the Bayesian filtering approach based on sequential Monte Carlo sampling, the so-called particle filters. Many variants of the particle filter as well as their features (strengths and weaknesses) are discussed. Related theoretical and practical issues are addressed in detail. In addition, some other (new) directions on Bayesian filtering …

Contents (excerpt): IV Bayesian Optimal Filtering; IV-A Optimal Filtering; IV-B Kalman Filtering; IV-C Optimum Nonlinear Filtering; IV-C.1 Finite-dimensional Filters; V Numerical Approximation Methods; V-A Gaussian/Laplace Approximation; V-B Iterative Quadrature; V-C Multigrid Method and Point-Mass Approximation; V-D Moment Approximation; V-E Gaussian Sum Approximation; V-F Deterministic …

Bayesian Inference Via Approximation of Log-Likelihood for Priors in Exponential Family

Bayesian Inference via Approximation of Log-likelihood for Priors in Exponential Family. Tohid Ardeshiri, Umut Orguner, and Fredrik Gustafsson.

Abstract: In this paper, a Bayesian inference technique based on Taylor series approximation of the logarithm of the likelihood function is presented. The proposed approximation is devised for the case, where the prior distribution belongs to the exponential family of distributions. The logarithm of the likelihood function is linearized with respect to the sufficient statistic of the prior distribution in exponential family such that the posterior obtains the same exponential family form as the prior. Similarities between the proposed method and the extended Kalman filter for nonlinear filtering are illustrated. Furthermore, an extended target measurement update for target models where the target extent is represented by a random matrix having an inverse Wishart distribution is derived. The approximate update covers the important case where the spread of measurement is due to the target extent as well as the measurement noise in the sensor.

Index Terms: Approximate Bayesian inference, exponential family, Bayesian graphical models, extended Kalman filter, extended target tracking, group target tracking, random matrices, …

Figure 1: A probabilistic graphical model for stochastic dynamical system with latent state $x_k$ and measurements $y_k$ (states $x_1, x_2, x_3, \ldots, x_T$; measurements $y_1, y_2, y_3, \ldots, y_T$).

… (HMMs) whose probabilistic graphical model is presented in Fig. 1. In such filtering problems, the posterior to the last processed measurement is in the same form as the prior distribution before the measurement update. Thus, the same inference algorithm can be used in a recursive manner.

36-463/663: Hierarchical Linear Models

36-463/663: Hierarchical Linear Models. Taste of MCMC / Bayes for 3 or more “levels”. Brian Junker, 132E Baker Hall, [email protected]. 11/3/2016.

Outline: Practical Bayes; Mastery Learning Example; A brief taste of JAGS and RUBE; Hierarchical Form of Bayesian Models; Extending the “Levels” and the “Slogan”; Mastery Learning: Distribution of “mastery” (to be continued…).

Practical Bayesian Statistics: (posterior) ∝ (likelihood) × (prior). We typically want quantities like: point estimate (posterior mean, median, mode); uncertainty (SE, IQR, or other measure of ‘spread’); credible interval (CI): $(\hat\theta - 2SE, \hat\theta + 2SE)$, (θ. , θ. ); other aspects of the “shape” of the posterior distribution. Aside: If (prior) ∝ 1, then (posterior) ∝ (likelihood), so posterior mode = mle, posterior SE = $1/I(\theta)^{1/2}$, etc.

Obtaining posterior point estimates, credible intervals: Easy if we recognize the posterior distribution and we have formulae for means, variances, etc. Whether or not we have such formulae, we can get similar information by simulating from the posterior distribution. Key idea: where $\theta_1, \theta_2, \ldots, \theta_M$ is a sample from $f(\theta \mid \text{data})$.

Example: Mastery Learning. Some computer-based tutoring systems declare that you have “mastered” a skill if you can perform it successfully $r$ times. The number of times $x$ that you erroneously perform the skill before the $r$th success is a measure of how likely you are to perform the skill correctly (how well you know the skill). The distribution for the number of failures $x$ before the $r$th success is the negative binomial distribution.
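The simulation idea in the slides (summarize the posterior from draws $\theta_1, \ldots, \theta_M$) can be sketched for the mastery learning setting. This sketch assumes a Beta(a, b) prior on the per-attempt success probability, which the excerpt does not specify; under that assumption the negative binomial likelihood, proportional to $p^r (1-p)^x$, gives a Beta(a + r, b + x) posterior. The function names and the numbers are hypothetical.

```python
import random
import statistics

def posterior_draws(r, x, a=1.0, b=1.0, m=20000, seed=0):
    """Draw m samples of the success probability p from Beta(a + r, b + x).

    r: number of successes required for "mastery"
    x: observed failures before the r-th success
    a, b: assumed Beta prior hyperparameters (not from the slides)
    """
    rng = random.Random(seed)
    return [rng.betavariate(a + r, b + x) for _ in range(m)]

def summarize(draws):
    """Monte Carlo point estimate, spread, and a 95% equal-tailed interval."""
    s = sorted(draws)
    m = len(s)
    return {
        "mean": statistics.fmean(s),
        "sd": statistics.stdev(s),
        "interval": (s[int(0.025 * m)], s[int(0.975 * m)]),
    }

draws = posterior_draws(r=3, x=2)
summary = summarize(draws)
exact_mean = (1.0 + 3) / (1.0 + 3 + 1.0 + 2)  # mean of Beta(4, 3)
```

Here the posterior is recognizable, so the exact mean is available for comparison; the same `summarize` step would apply unchanged to draws produced by an MCMC sampler when no closed form exists.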

A Geometric View of Conjugate Priors

Proceedings of the Twenty-Second International Joint Conference on Artificial Intelligence. A Geometric View of Conjugate Priors. Arvind Agarwal, Department of Computer Science, University of Maryland, College Park, Maryland USA, [email protected]. Hal Daumé III, Department of Computer Science, University of Maryland, College Park, Maryland USA, [email protected].

Abstract: In Bayesian machine learning, conjugate priors are popular, mostly due to mathematical convenience. In this paper, we show that there are deeper reasons for choosing a conjugate prior. Specifically, we formulate the conjugate prior in the form of Bregman divergence and show that it is the inherent geometry of conjugate priors that makes them appropriate and intuitive. This geometric interpretation allows one to view the hyperparameters of conjugate priors as the effective sample points, thus providing additional intuition. We use this geometric understanding of conjugate priors to derive the hyperpa- …

… Using the same geometry also gives the closed-form solution for the maximum-a-posteriori (MAP) problem. We then analyze the prior using concepts borrowed from the information geometry. We show that this geometry induces the Fisher information metric and 1-connection, which are respectively, the natural metric and connection for the exponential family (Section 5). One important outcome of this analysis is that it allows us to treat the hyperparameters of the conjugate prior as the effective sample points drawn from the distribution under consideration. We finally extend this geometric interpretation of conjugate priors to analyze the hybrid model given by [7] in a purely geometric setting, and justify the argument presented in [1] (i.e. a coupling prior should be conjugate) using a much simpler analysis (Section 6).

Interpolation of the Wishart and Non Central Wishart Distributions

Interpolation of the Wishart and non central Wishart distributions. Gérard Letac (Laboratoire de Statistique et Probabilités, Université Paul Sabatier, 31062 Toulouse, France). Angers, June 2016.

Contents: I Introduction; II Wishart distributions in the classical sense; III Wishart distributions on symmetric cones; IV Wishart distributions as natural exponential families; V Properties of the Wishart distributions; VI The one dimensional non central $\chi^2$ distributions and their interpolation; VII The classical non central Wishart distribution; VIII The non central Wishart exists only if $p$ is in the Gindikin set; IX The rank problem for the non central Wishart and the Mayerhofer conjecture; X Reduction of the problem: the measures $m(2p, k, d)$; XI Computation of $m(1, 2, 2)$; XII Computation of the measure $m(d-1, d, d)$ (XII.1 Zonal functions; XII.2 Three properties of zonal functions; XII.3 The calculation of $m(d-1, d, d)$; XII.4 Example: $m(2, 3, 3)$); XIII Convolution lemmas in the cone $P_d$; XIV $m(d-2, d-1, d)$ and $m(d-2, d, d)$ do not exist for $d \ge 3$; XV References.

I Introduction. The aim of these notes is to present with the most natural generality the Wishart and non central Wishart distributions on symmetric real matrices, on Hermitian matrices and sometimes on a symmetric cone. While the classical $\chi^2$ distributions are familiar to the statisticians, they quickly learn that these $\chi^2$ laws, as parameterized by $n$, their degree of freedom, can be interpolated by the gamma distributions with a continuous shape parameter.

Gibbs Sampling, Exponential Families and Orthogonal Polynomials

Statistical Science 2008, Vol. 23, No. 2, 151–178. DOI: 10.1214/07-STS252. © Institute of Mathematical Statistics, 2008. Gibbs Sampling, Exponential Families and Orthogonal Polynomials. Persi Diaconis, Kshitij Khare and Laurent Saloff-Coste.

Abstract. We give families of examples where sharp rates of convergence to stationarity of the widely used Gibbs sampler are available. The examples involve standard exponential families and their conjugate priors. In each case, the transition operator is explicitly diagonalizable with classical orthogonal polynomials as eigenfunctions.

Key words and phrases: Gibbs sampler, running time analyses, exponential families, conjugate priors, location families, orthogonal polynomials, singular value decomposition.

1. INTRODUCTION. The Gibbs sampler, also known as Glauber dynamics or the heat-bath algorithm, is a mainstay of scientific computing. It provides a way to draw samples from a multivariate probability density $f(x_1, x_2, \ldots, x_p)$, perhaps only known up to a normalizing constant, by a sequence of one-dimensional sampling problems. From $(X_1, \ldots, X_p)$ proceed to $(X_1', X_2, \ldots, X_p)$, then $(X_1', X_2', X_3, \ldots, X_p)$, ..., $(X_1', X_2', \ldots, X_p')$ where at the $i$th stage, the coordinate is sampled … which has $f$ as stationary density under mild conditions discussed in [4, 102].

The algorithm was introduced in 1963 by Glauber [49] to do simulations for Ising models, and independently by Turcin [103]. It is still a standard tool of statistical physics, both for practical simulation (e.g., [88]) and as a natural dynamics (e.g., [10]). The basic Dobrushin uniqueness theorem showing existence of Gibbs measures was proved based on this dynamics (e.g., [54]). It was introduced as a base for image analysis by Geman and Geman [46].
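The coordinate-by-coordinate update described in the introduction can be sketched on a two-component example built from an exponential family and its conjugate prior. This sketch assumes a binomial likelihood with a Beta prior, so that $x \mid \theta \sim \text{Binomial}(n, \theta)$ and $\theta \mid x \sim \text{Beta}(x + \alpha, n - x + \beta)$; the specific values, the starting point, and the function name are illustrative assumptions, not details from the excerpt.

```python
import random

def gibbs_beta_binomial(n, alpha, beta, steps, seed=0):
    """Systematic-scan Gibbs sampler on the pair (x, theta).

    Each sweep resamples one coordinate at a time from its conditional:
      x | theta ~ Binomial(n, theta)
      theta | x ~ Beta(x + alpha, n - x + beta)
    Returns the sequence of x values visited.
    """
    rng = random.Random(seed)
    theta = 0.5  # arbitrary starting point
    xs = []
    for _ in range(steps):
        # Binomial draw as a sum of n Bernoulli(theta) trials.
        x = sum(rng.random() < theta for _ in range(n))
        theta = rng.betavariate(x + alpha, n - x + beta)
        xs.append(x)
    return xs

n, alpha, beta = 10, 1.0, 1.0
xs = gibbs_beta_binomial(n, alpha, beta, steps=20000)
mean_x = sum(xs) / len(xs)
expected_mean = n * alpha / (alpha + beta)  # beta-binomial marginal mean
```

With $\alpha = \beta = 1$ the stationary marginal of $x$ is uniform on $\{0, \ldots, n\}$, so the long-run average of the visited $x$ values should settle near $n/2$, up to Monte Carlo error from the correlated draws.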