Research Workshop on Challenges for Trusted Computing 3Rd

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Graphical Product-Line Configuration of Nesc-Based Sensor Network Applications Using Feature Models

GRAPHICAL PRODUCT-LINE CONFIGURATION OF NESC-BASED SENSOR NETWORK APPLICATIONS USING FEATURE MODELS by MATTHIAS NIEDERHAUSEN Diplom, University of Applied Sciences, Frankfurt, Germany, 2007 A THESIS submitted in partial fulfillment of the requirements for the degree MASTER OF SCIENCE Department of Computing and Information Sciences College of Engineering KANSAS STATE UNIVERSITY Manhattan, Kansas 2008 Approved by: Major Professor John Hatcliff Copyright Matthias Niederhausen 2008 Abstract Developing a wireless sensor network application includes a variety of tasks, such as coding of the implementation, designing the architecture and assessing availability of hardware components, that provide necessary capabilities. Before compiling an application, the developer has to con- figure the selection of hardware components and set up required parameters. One has to choose from among a variety of configurations regarding communication parameters, such as frequency, channel, subnet identifier, transmission power, etc.. This configuration step also includes setting up parameters for the selection of hardware components, such as a specific hardware platform, which sensor boards and programmer boards to be used or the use of optional services and more. Reasoning about a proper selection of configuration parameters is often very difficult, since there are a lot of dependencies among these parameters which may rule out some other options. The developer has to know about all these constraints in order to pick a valid configuration. Unfor- tunately, the existing makefile approach that comes with nesC is poorly organized and does not capture important compatibility constraints. The configuration of a particular nesC application is distributed in multiple makefiles. There- fore a developer has to look at multiple files to make sure all necessary parameter are set up correctly for compiling a specific application. -

Also Includes Slides and Contents From

The Compilation Toolchain Cross-Compilation for Embedded Systems Prof. Andrea Marongiu ([email protected]) Toolchain The toolchain is a set of development tools used in association with source code or binaries generated from the source code • Enables development in a programming language (e.g., C/C++) • It is used for a lot of operations such as a) Compilation b) Preparing Libraries Most common toolchain is the c) Reading a binary file (or part of it) GNU toolchain which is part of d) Debugging the GNU project • Normally it contains a) Compiler : Generate object files from source code files b) Linker: Link object files together to build a binary file c) Library Archiver: To group a set of object files into a library file d) Debugger: To debug the binary file while running e) And other tools The GNU Toolchain GNU (GNU’s Not Unix) The GNU toolchain has played a vital role in the development of the Linux kernel, BSD, and software for embedded systems. The GNU project produced a set of programming tools. Parts of the toolchain we will use are: -gcc: (GNU Compiler Collection): suite of compilers for many programming languages -binutils: Suite of tools including linker (ld), assembler (gas) -gdb: Code debugging tool -libc: Subset of standard C library (assuming a C compiler). -bash: free Unix shell (Bourne-again shell). Default shell on GNU/Linux systems and Mac OSX. Also ported to Microsoft Windows. -make: automation tool for compilation and build Program development tools The process of converting source code to an executable binary image requires several steps, each with its own tool. -

FPGA BASED RECONFIGURABLE BODY AREA NETWORK USING Nios II and Uclinux

FPGA BASED RECONFIGURABLE BODY AREA NETWORK USING Nios II AND uClinux A Thesis Submitted to the College of Graduate Studies and Research in Partial Fulfillment of the Requirements for the Degree of Master of Science in the Department of Electrical and Computer Engineering University of Saskatchewan by Anthony Voykin Saskatoon, Saskatchewan, Canada c Copyright Anthony Voykin, April 2013. All rights reserved. Permission to Use In presenting this thesis in partial fulfillment of the requirements for a Postgraduate degree from the University of Saskatchewan, it is agreed that the Libraries of this University may make it freely available for inspection. Permission for copying of this thesis in any manner, in whole or in part, for scholarly purposes may be granted by the professors who supervised this thesis work or, in their absence, by the Head of the Department of Electrical and Computer Engineering or the Dean of the College of Graduate Studies and Research at the University of Saskatchewan. Any copying, publication, or use of this thesis, or parts thereof, for financial gain without the written permission of the author is strictly prohibited. Proper recognition shall be given to the author and to the University of Saskatchewan in any scholarly use which may be made of any material in this thesis. Request for permission to copy or to make any other use of material in this thesis in whole or in part should be addressed to: Head of the Department of Electrical and Computer Engineering 57 Campus Drive University of Saskatchewan Saskatoon, Saskatchewan, Canada S7N 5A9 i Acknowledgments I would like to thank my advisors Professor Ron Bolton and Professor Francis Bui for providing me with guidance and support necessary to complete my thesis work. -

Embedded System Setting up Development Environment

Embedded System Setting up Development Environment Tool chain installation GCC tool chain includes gcc compiler,assembler, linker, and other related utilities. (root@host)#rpm –ivh armtools-2.95.3- 5.i386.rpm Add /usr/local/gnu-2.95.3/bin to the searching path. (root@host)# export PATH=/usr/local/gnu- 2.95.3/bin:$PATH Setting up Development Environment Installing uClibc (root@host)#rpm –ivh uClibc-0.9.5- 1.i386.rpm The uClibc will be installed in /usr/local/uClibc-0.9.5 Building busybox BusyBox: The Swiss Army Knife of Embedded Linux Official site: http://busybox.net Install the BusyBox Configure the function of busybox Modify Makefile make Configure the function of busybox Config.h // This file defines the feature set to be compiled into busybox. // When you turn things off here, they won’t be compiled in at all. // //// This file is parsed by sed. You MUST use single line comments. // i.e., //#define BB_BLAH // // BusyBox Applications //#define BB_ADJTIMEX //#define BB_AR #define BB_ASH … # If you are running a cross compiler, you may want to set this # to something more interesting, like “powerpc-linux-“. CROSS = arm-elf- CC = $(CROSS)gcc … CROSS_CFLAGS+= -nostdinc –I$(LIBCDIR)/include –I$(GCCINCDIR) #Add below LIBCDIR=/usr/local/uClibc-0.9.5/linux-2.0.x GCCINCDIR=/usr/local/gnu-2.95.3/lib/gcc-lib/arm-elf/2.95/include Modify Makefile # If you are running a cross compiler, you may want to set this # to something more interesting, like “powerpc-linux-“. CROSS = arm-elf- CC = $(CROSS)gcc … CROSS_CFLAGS+= -nostdinc –I$(LIBCDIR)/include -

Atomicity and Visibility in Tiny Embedded Systems

Atomicity and Visibility in Tiny Embedded Systems John Regehr Nathan Cooprider David Gay University of Utah, School of Computing Intel Research Berkeley {regehr, coop}@cs.utah.edu [email protected] Abstract bool flag = false; // interrupt handler Visibility is a property of a programming language’s void __vector_5 (void) memory model that determines when values stored by { one concurrent computation become visible to other atomic flag = true; computations. Our work exploits the insight that in } nesC, a C-like language with explicit atomicity, the traditional way of ensuring timely visibility—volatile void wait_for_interrupt(void) variables—can be entirely avoided. This is advan- { tageous because the volatile qualifier is a notorious bool done = false; do { source of programming errors and misunderstandings. atomic if (!flag) done = true; Furthermore, the volatile qualifier hurts performance } while (!done); by inhibiting many more optimizations than are neces- } sary to ensure visibility. In this paper we extend the semantics of nesC’s atomic statements to include a visi- bility guarantee, we show two ways that these semantics Figure 1. nesC code containing a visibility er- can be implemented, and we also show that our better ror implementation has no drawbacks in terms of resource usage. Until now, nesC has left it to programmers to en- 1. Introduction sure visibility by judicious use of C’s volatile qual- ifier. In this paper we investigate the idea of strength- ening nesC’s memory model such that the semantics of The nesC [5] language is a C dialect designed for pro- atomic are augmented with a visibility guarantee. This gramming embedded wireless sensor network nodes. -

Operating Systems and Applications for Embedded Systems >>> Toolchains

>>> Operating Systems And Applications For Embedded Systems >>> Toolchains Name: Mariusz Naumowicz Date: 31 sierpnia 2018 [~]$ _ [1/19] >>> Plan 1. Toolchain Toolchain Main component of GNU toolchain C library Finding a toolchain 2. crosstool-NG crosstool-NG Installing Anatomy of a toolchain Information about cross-compiler Configruation Most interesting features Sysroot Other tools POSIX functions AP [~]$ _ [2/19] >>> Toolchain A toolchain is the set of tools that compiles source code into executables that can run on your target device, and includes a compiler, a linker, and run-time libraries. [1. Toolchain]$ _ [3/19] >>> Main component of GNU toolchain * Binutils: A set of binary utilities including the assembler, and the linker, ld. It is available at http://www.gnu.org/software/binutils/. * GNU Compiler Collection (GCC): These are the compilers for C and other languages which, depending on the version of GCC, include C++, Objective-C, Objective-C++, Java, Fortran, Ada, and Go. They all use a common back-end which produces assembler code which is fed to the GNU assembler. It is available at http://gcc.gnu.org/. * C library: A standardized API based on the POSIX specification which is the principle interface to the operating system kernel from applications. There are several C libraries to consider, see the following section. [1. Toolchain]$ _ [4/19] >>> C library * glibc: Available at http://www.gnu.org/software/libc. It is the standard GNU C library. It is big and, until recently, not very configurable, but it is the most complete implementation of the POSIX API. * eglibc: Available at http://www.eglibc.org/home. -

A Review on Elliptic Curve Cryptography for Embedded Systems

International Journal of Computer Science & Information Technology (IJCSIT), Vol 3, No 3, June 2011 A REVIEW ON ELLIPTIC CURVE CRYPTOGRAPHY FOR EMBEDDED SYSTEMS Rahat Afreen 1 and S.C. Mehrotra 2 1Tom Patrick Institute of Computer & I.T, Dr. Rafiq Zakaria Campus, Rauza Bagh, Aurangabad. (Maharashtra) INDIA [email protected] 2Department of C.S. & I.T., Dr. B.A.M. University, Aurangabad. (Maharashtra) INDIA [email protected] ABSTRACT Importance of Elliptic Curves in Cryptography was independently proposed by Neal Koblitz and Victor Miller in 1985.Since then, Elliptic curve cryptography or ECC has evolved as a vast field for public key cryptography (PKC) systems. In PKC system, we use separate keys to encode and decode the data. Since one of the keys is distributed publicly in PKC systems, the strength of security depends on large key size. The mathematical problems of prime factorization and discrete logarithm are previously used in PKC systems. ECC has proved to provide same level of security with relatively small key sizes. The research in the field of ECC is mostly focused on its implementation on application specific systems. Such systems have restricted resources like storage, processing speed and domain specific CPU architecture. KEYWORDS Elliptic curve cryptography Public Key Cryptography, embedded systems, Elliptic Curve Digital Signature Algorithm ( ECDSA), Elliptic Curve Diffie Hellman Key Exchange (ECDH) 1. INTRODUCTION The changing global scenario shows an elegant merging of computing and communication in such a way that computers with wired communication are being rapidly replaced to smaller handheld embedded computers using wireless communication in almost every field. This has increased data privacy and security requirements. -

Porting Musl to the M3 Microkernel TU Dresden

Porting Musl to the M3 microkernel TU Dresden Sherif Abdalazim, Nils Asmussen May 8, 2018 Contents 1 Abstract 2 2 Introduction 3 2.1 Background.............................. 3 2.2 M3................................... 4 3 Picking a C library 5 3.1 C libraries design factors . 5 3.2 Alternative C libraries . 5 4 Porting Musl 7 4.1 M3andMuslbuildsystems ..................... 7 4.1.1 Scons ............................. 7 4.1.2 GNUAutotools........................ 7 4.1.3 Integrating Autotools with Scons . 8 4.2 Repositoryconfiguration. 8 4.3 Compilation.............................. 8 4.4 Testing ................................ 9 4.4.1 Syscalls ............................ 9 5 Evaluation 10 5.1 PortingBusyboxcoreutils . 10 6 Conclusion 12 1 Chapter 1 Abstract Today’s processing workloads require the usage of heterogeneous multiproces- sors to utilize the benefits of specialized processors and accelerators. This has, in turn, motivated new Operating System (OS) designs to manage these het- erogeneous processors and accelerators systematically. M3 [9] is an OS following the microkernel approach. M3 uses a hardware/- software co-design to exploit the heterogeneous systems in a seamless and effi- cient form. It achieves that by abstracting the heterogeneity of the cores via a Data Transfer Unit (DTU). The DTU abstracts the heterogeneity of the cores and accelerators so that they can communicate systematically. I have been working to enhance the programming environment in M3 by porting a C library to M3. I have evaluated different C library implementations like the GNU C Library (glibc), Musl, and uClibc. I decided to port Musl as it has a relatively small code base with fewer configurations. It is simpler to port, and it started to gain more ground in embedded systems which are also a perfect match for M3 applications. -

Aicesis Presidency

20 YEARS 1999 2019 INTERNATIONAL ASSOCIATION OF ECONOMIC AND SOCIAL COUNCILS AND SIMILAR INSTITUTIONS President Iacob Baciu AICESIS PRESIDENCY SEPTEMBER 2017-OCTOBER 2019 President Iacob Baciu AICESIS PRESIDENCY – SEPTEMBER 2017-OCTOBER 2019 BOARD AND GENERAL ASSEMBLY INTERNATIONAL CONFERENCE ILO-AICESIS-ESC OF ROMANIA 9 – 11 OCTOBER 2019, BUCHAREST, ROMANIA AICESIS PRESIDENCY BETWEEN SEPTEMBER 2017 AND OCTOBER 2019 President Iacob Baciu I had the privilege and honour of being the President of the International Asso- ciation of Economic and Social Councils and Similar Institutions (AICESIS) for a two-year term, from September 2017 to October 2019, and the main theme of my mandate was the digital revolution. Industry 4.0, as named by Chancellor Angela Merkel in 2011, represents the fourth stage of the Industrial Revolution and has brought major changes in the economy, labour relations, labour market, education, significantly influencing social life. The next industrial revolution finds itself on an unknown horizon and is difficult to predict. Humanity has come a long way in seeking its solutions to the problems it faces; history has recorded all the achievements, hesitations, mistakes, conflicts and progress made. Only if we learn from experience will we be able to avoid repeating the mistakes made. The first industrial revolution began at the end of the eighteenth century and in the first decades of the nineteenth century first in England, which managed to remain the world’s first industrial power until the end of the nineteenth century. In France, the industrial revolution evolved slowly, its beginnings dating back to the 1830s. The low demand for industrial products under a predominantly agrarian population meant that the true industrial era began only in 1890. -

MANAGING a REAL-TIME EMBEDDED LINUX PLATFORM with BUILDROOT John Diamond, Kevin Martin Fermi National Accelerator Laboratory, Batavia, IL 60510

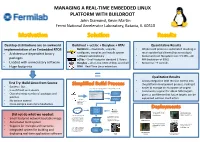

MANAGING A REAL-TIME EMBEDDED LINUX PLATFORM WITH BUILDROOT John Diamond, Kevin Martin Fermi National Accelerator Laboratory, Batavia, IL 60510 Desktop distributions are an awkward Buildroot + ucLibc + Busybox + RTAI Quantitative Results implementation of an Embedded RTOS Buildroot – downloads, unpacks, • Whole build process is automated resulting in • Architecture-dependent binary configures, compiles and installs system much quicker build times (hours not days) software automatically • Kernel and root filesystem size: 3.5 MB – 20 packages uClibc – Small-footprint standard C library MB (reduction of 99%) • Loaded with unnecessary software Busybox – all-in-one UNIX utilities and shell • Boot-time: ~9 seconds • Huge footprints RTAI – Real-Time Linux extensions = Qualitative Results • Allows integration with revision control into First Try: Build Linux from Source the platform development process, making it • Success! But.. 2. Buildroot’s menuconfig generates a package configuration file easier to manage an ecosystem of targets • Is as difficult as it sounds and kernel configuration file • Community support for x86 & ARM targets Linux Kernel • Overwhelming number of packages and Configuration gives us confidence that future targets can be patches Package supported without much effort 1. Developer Configuration • No version control configures build via Buildroot’s • Cross-compile even more headaches menuconfig Internet Build Process Power Supply Control Quench Protection Git / CVS / SVN and Regulation for the System for Tevatron Did not do what we needed: Fermilab Linac Electron Lens (TEL II) 3. The build process pulls 4. The output from the software packages from build process is a kernel • Small-footprint network bootable image the internet and custom bzImage bzImage file with an softare packages from a integrated root filesystem ARM Cortex A-9 source code repository file PC/104 AMD • Automated build system Geode SBC Beam Position Monitor Power Supply Control prototype for Fermilab and Regulation for • Support for multiple architectures 5. -

Anatomy of Cross-Compilation Toolchains

Embedded Linux Conference Europe 2016 Anatomy of cross-compilation toolchains Thomas Petazzoni free electrons [email protected] Artwork and Photography by Jason Freeny free electrons - Embedded Linux, kernel, drivers - Development, consulting, training and support. http://free-electrons.com 1/1 Thomas Petazzoni I CTO and Embedded Linux engineer at Free Electrons I Embedded Linux specialists. I Development, consulting and training. I http://free-electrons.com I Contributions I Kernel support for the Marvell Armada ARM SoCs from Marvell I Major contributor to Buildroot, an open-source, simple and fast embedded Linux build system I Living in Toulouse, south west of France Drawing from Frank Tizzoni, at Kernel Recipes 2016 free electrons - Embedded Linux, kernel, drivers - Development, consulting, training and support. http://free-electrons.com 2/1 Disclaimer I I am not a toolchain developer. Not pretending to know everything about toolchains. I Experience gained from building simple toolchains in the context of Buildroot I Purpose of the talk is to give an introduction, not in-depth information. I Focused on simple gcc-based toolchains, and for a number of examples, on ARM specific details. I Will not cover advanced use cases, such as LTO, GRAPHITE optimizations, etc. I Will not cover LLVM free electrons - Embedded Linux, kernel, drivers - Development, consulting, training and support. http://free-electrons.com 3/1 What is a cross-compiling toolchain? I A set of tools that allows to build source code into binary code for -

NASA Engineering and Safety Center Technical Assessment Report

Version: NASA Engineering and Safety Center 1.0 Technical Assessment Report Title: Page #: National Highway Traffic Safety Administration 1 of 177 Toyota Unintended Acceleration Investigation Technical Support to the National Highway Traffic Safety Administration (NHTSA) on the Reported Toyota Motor Corporation (TMC) Unintended Acceleration (UA) Investigation January 18, 2011 NESC Assessment #: TI-10-00618 NASA Engineering and Safety Center Version: 1.0 Technical Assessment Report Title: Page #: National Highway Traffic Safety Administration 2 of 177 Toyota Unintended Acceleration Investigation Report Approval and Revision History Approval and Document Revision History NOTE: This document was approved at the January 13, 2011, NRB. This document was submitted to the NESC Director on January 19, 2011, for configuration control. Approved Original Signature on File 1/19/11 Version: 1.0 NESC Director Date Office of Primary Version Description of Revision Effective Date Responsibility 1.0 Initial Release Michael T. Kirsch, 1/13/11 NESC Principal Engineer, LaRC REDACTION NOTE Since public release of this report on February 8, 2011, the Agency has revised its redactions to the document to release certain material previously deemed confidential under U.S.C. § 30167. This document, which was posted April 15, 2011 to NHTSA’s web site, replaces the one posted previously and contains the Agency’s revised redactions. NESC Assessment #: TI-10-00618 Version: NASA Engineering and Safety Center 1.0 Technical Assessment Report Title: Page #: National Highway