Memoryless Determinacy of Parity and Mean Payoff Games

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Frequently Asked Questions in Mathematics

Frequently Asked Questions in Mathematics. The Sci.Math FAQ Team. Editor: Alex López-Ortiz.

Contents:

1 Introduction: 1.1 Why a list of Frequently Asked Questions?; 1.2 Frequently Asked Questions in Mathematics?

2 Fundamentals: 2.1 Algebraic structures (2.1.1 Monoids and Groups; 2.1.2 Rings; 2.1.3 Fields; 2.1.4 Ordering); 2.2 What are numbers? (2.2.1 Introduction; 2.2.2 Construction of the Number System; 2.2.3 Construction of N; 2.2.4 Construction of Z; 2.2.5 Construction of Q; 2.2.6 Construction of R; 2.2.7 Construction of C; 2.2.8 Rounding things up; 2.2.9 What's next?)

3 Number Theory: 3.1 Fermat's Last Theorem (3.1.1 History of Fermat's Last Theorem; 3.1.2 What is the current status of FLT?; 3.1.3 Related Conjectures; 3.1.4 Did Fermat prove this theorem?); 3.2 Prime Numbers (3.2.1 Largest known Mersenne prime; 3.2.2 Largest known prime; 3.2.3 Largest known twin primes; 3.2.4 Largest Fermat number with known factorization; 3.2.5 Algorithms to factor integer numbers; 3.2.6 Primality Testing; 3.2.7 List of record numbers; 3.2.8 What is the current status on Mersenne primes?)

Subgame-Perfect ε-Equilibria in Perfect Information Games With

Subgame-Perfect ε-Equilibria in Perfect Information Games with Common Preferences at the Limit. Citation for published version (APA): Flesch, J., & Predtetchinski, A. (2016). Subgame-Perfect ε-Equilibria in Perfect Information Games with Common Preferences at the Limit. Mathematics of Operations Research, 41(4), 1208-1221. https://doi.org/10.1287/moor.2015.0774 Document status and date: Published: 01/11/2016. DOI: 10.1287/moor.2015.0774. Document Version: Publisher's PDF, also known as Version of record. Document license: Taverne.

Alpha-Beta Pruning

Alpha-Beta Pruning. Carl Felstiner. May 9, 2019.

Abstract: This paper serves as an introduction to the ways computers are built to play games. We implement the basic minimax algorithm and expand on it by finding ways to reduce the portion of the game tree that must be generated to find the best move. We tested our algorithms on ordinary Tic-Tac-Toe, Hex, and 3-D Tic-Tac-Toe. With our algorithms, we were able to find the best opening move in Tic-Tac-Toe by only generating 0.34% of the nodes in the game tree. We also explored some mathematical features of Hex and provided proofs of them.

1 Introduction: Building computers to play board games has been a focus for mathematicians ever since computers were invented. The first computer to beat a human opponent in chess was built in 1956, and towards the late 1960s, computers were already beating chess players of low-medium skill.[1] Now, it is generally recognized that computers can beat even the most accomplished grandmaster. Computers build a tree of different possibilities for the game and then work backwards to find the move that will give the computer the best outcome. Although computers can evaluate board positions very quickly, in a game like chess, where there are over $10^{120}$ possible board configurations, it is impossible for a computer to search through the entire tree. The challenge for the computer is then to find ways to avoid searching in certain parts of the tree. Humans also look ahead a certain number of moves when playing a game, but experienced players already know of certain theories and strategies that tell them which parts of the tree to look at.
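The idea of skipping parts of the game tree can be illustrated with a minimal sketch of minimax with alpha-beta pruning on ordinary Tic-Tac-Toe. This is not the paper's implementation: the board encoding (a list of nine cells), the function names `winner` and `alphabeta`, and the scoring convention (+1, 0, -1 from X's point of view) are all assumptions made for illustration.

```python
from typing import List, Optional

# The eight winning lines of a 3x3 board, cells indexed 0..8 row by row.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board: List[str]) -> Optional[str]:
    """Return "X" or "O" if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def alphabeta(board: List[str], player: str, alpha: int = -2, beta: int = 2) -> int:
    """Value of the position for X (+1 win, 0 draw, -1 loss), `player` to move."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    moves = [i for i, cell in enumerate(board) if cell == " "]
    if not moves:
        return 0  # board full, nobody won: draw
    if player == "X":  # maximizing player
        best = -2
        for m in moves:
            board[m] = "X"
            best = max(best, alphabeta(board, "O", alpha, beta))
            board[m] = " "
            alpha = max(alpha, best)
            if alpha >= beta:  # O already has a better option elsewhere: prune
                break
        return best
    best = 2  # minimizing player
    for m in moves:
        board[m] = "O"
        best = min(best, alphabeta(board, "X", alpha, beta))
        board[m] = " "
        beta = min(beta, best)
        if alpha >= beta:  # X already has a better option elsewhere: prune
            break
    return best


if __name__ == "__main__":
    print(alphabeta(list(" " * 9), "X"))  # value of the empty board
```

The `break` statements are the pruning step: once a branch cannot change the decision higher up the tree, its remaining children are never generated.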

Game Theory with Translucent Players

Game Theory with Translucent Players. Joseph Y. Halpern and Rafael Pass∗, Cornell University, Department of Computer Science, Ithaca, NY, 14853, U.S.A. November 27, 2012.

Abstract: A traditional assumption in game theory is that players are opaque to one another: if a player changes strategies, then this change in strategies does not affect the choice of other players' strategies. In many situations this is an unrealistic assumption. We develop a framework for reasoning about games where the players may be translucent to one another; in particular, a player may believe that if she were to change strategies, then the other player would also change strategies. Translucent players may achieve significantly more efficient outcomes than opaque ones. Our main result is a characterization of strategies consistent with appropriate analogues of common belief of rationality. Common Counterfactual Belief of Rationality (CCBR) holds if (1) everyone is rational, (2) everyone counterfactually believes that everyone else is rational (i.e., all players $i$ believe that everyone else would still be rational even if $i$ were to switch strategies), (3) everyone counterfactually believes that everyone else is rational, and counterfactually believes that everyone else is rational, and so on. CCBR characterizes the set of strategies surviving iterated removal of minimax dominated strategies: a strategy $\sigma_i$ is minimax dominated for $i$ if there exists a strategy $\sigma'_i$ for $i$ such that $\min_{\mu'_{-i}} u_i(\sigma'_i, \mu'_{-i}) > \max_{\mu_{-i}} u_i(\sigma_i, \mu_{-i})$.

∗Halpern is supported in part by NSF grants IIS-0812045, IIS-0911036, and CCF-1214844, by AFOSR grant FA9550-08-1-0266, and by ARO grant W911NF-09-1-0281.
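The minimax domination condition can be checked mechanically in a small two-player matrix game. The sketch below is a hedged illustration, not code from the paper: it assumes only pure strategies for player $i$ are compared, it performs a single round of the check rather than the iterated removal, and the payoff numbers and the function name `minimax_dominated` are hypothetical. Because expected payoff is linear in the opponent's mixed strategy, taking the minimum and maximum over the opponent's pure strategies (the columns) is enough.

```python
from typing import List


def minimax_dominated(payoffs: List[List[float]]) -> List[int]:
    """Indices of rows that are minimax dominated by some other row.

    payoffs[r][c] is player i's payoff for own pure strategy r against
    opponent pure strategy c. Row r is minimax dominated if another row's
    worst-case payoff strictly exceeds row r's best-case payoff.
    """
    dominated = []
    for r, row in enumerate(payoffs):
        best_case = max(row)
        if any(min(other) > best_case
               for q, other in enumerate(payoffs) if q != r):
            dominated.append(r)
    return dominated


if __name__ == "__main__":
    # Hypothetical Prisoner's Dilemma payoffs for the row player:
    # row 0 = cooperate, row 1 = defect; columns = opponent cooperates/defects.
    prisoners_dilemma = [[3, 0], [5, 1]]
    print(minimax_dominated(prisoners_dilemma))  # [] (cooperation survives)

    # Hypothetical game in which row 1 is better than row 0 in every case.
    lopsided = [[1, 2], [3, 4]]
    print(minimax_dominated(lopsided))  # [0]
```

In the Prisoner's Dilemma example, defecting guarantees at least 1 but cooperating can yield 3, so cooperation is not minimax dominated even though it is dominated in the ordinary sense.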

Tic-Tac-Toe Is Not Very Interesting to Play, Because If Both Players Are Familiar with the Game the Result Is Always a Draw

CMPSCI 250: Introduction to Computation. Lecture #27: Games and Adversary Search. David Mix Barrington, 4 November 2013.

Games and Adversary Search: • Review: A* Search • Modeling Two-Player Games • When There is a Game Tree • The Determinacy Theorem • Searching a Game Tree • Examples of Games

Review: A* Search: • The A* Search depends on a heuristic function, which is a lower bound on the distance to the goal. • If x is a node, and g is the nearest goal node to x, the admissibility condition on h is that 0 ≤ h(x) ≤ d(x, g).

[Figure: a path of length 23 from the start s to a node x, with edges of length 2 and 4 leaving x; h(y) = 6, h(z) = 3; p(y) = 23 + 2 + 6 = 31; p(z) = 23 + 4 + 3 = 30.]

Review: A* Search: • Suppose we have taken y off of the open list. The best-path distance from the start s to the goal g through y is d(s, y) + d(y, g), and this cannot be less than d(s, y) + h(y). • Thus when we find a path of length k from s to y, we put y onto the open list with priority k + h(y). We still record the distance d(s, y) when we take y off of the open list.

Review: A* Search: • The advantage of A* over uniform-cost search is that we do not consider entries x in the closed list for which d(s, x) + h(x) is greater than the actual best-path distance from s to g.
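The priority rule described in the A* review (a node reached by a path of length k goes on the open list with priority k + h) can be sketched as follows. This is a minimal illustration, not the lecture's code: the function name `a_star`, the adjacency-list encoding, and the edges from y and z to the goal g are assumptions added to make the example complete, and the sketch further assumes the heuristic is consistent so that a node never needs to be reopened once it is closed.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Graph = Dict[str, List[Tuple[str, int]]]


def a_star(graph: Graph, h: Dict[str, int], start: str, goal: str) -> Optional[int]:
    """Return the best-path distance from start to goal, or None if unreachable."""
    # Open list entries are (priority, distance so far, node),
    # where priority = distance so far + heuristic estimate.
    open_list = [(h[start], 0, start)]
    closed: Dict[str, int] = {}  # node -> recorded distance d(start, node)
    while open_list:
        _priority, dist, node = heapq.heappop(open_list)
        if node in closed:
            continue  # already taken off the open list with a shorter path
        closed[node] = dist
        if node == goal:
            return dist
        for neighbor, weight in graph.get(node, []):
            if neighbor not in closed:
                k = dist + weight
                heapq.heappush(open_list, (k + h[neighbor], k, neighbor))
    return None


if __name__ == "__main__":
    # s -> x has length 23, x -> y has length 2, x -> z has length 4 (as in the
    # figure); the edges into the goal g are hypothetical.
    graph = {"s": [("x", 23)], "x": [("y", 2), ("z", 4)],
             "y": [("g", 6)], "z": [("g", 3)]}
    h = {"s": 0, "x": 0, "y": 6, "z": 3, "g": 0}
    print(a_star(graph, h, "s", "g"))  # 30, via z
```

With these numbers y enters the open list with priority 31 and z with priority 30, matching p(y) and p(z) in the figure, so z is expanded first.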

The Effective Minimax Value of Asynchronously Repeated Games*

Int J Game Theory (2003) 32: 431–442. DOI 10.1007/s001820300161. The effective minimax value of asynchronously repeated games*. Kiho Yoon, Department of Economics, Korea University, Anam-dong, Sungbuk-gu, Seoul, Korea 136-701. Received: October 2001.

Abstract. We study the effect of asynchronous choice structure on the possibility of cooperation in repeated strategic situations. We model the strategic situations as asynchronously repeated games, and define two notions of effective minimax value. We show that the order of players' moves generally affects the effective minimax value of the asynchronously repeated game in significant ways, but the order of moves becomes irrelevant when the stage game satisfies the non-equivalent utilities (NEU) condition. We then prove the Folk Theorem that a payoff vector can be supported as a subgame perfect equilibrium outcome with correlation device if and only if it dominates the effective minimax value. These results, in particular, imply both Lagunoff and Matsui's (1997) result and Yoon (2001)'s result on asynchronously repeated games.

Key words: Effective minimax value, folk theorem, asynchronously repeated games

1. Introduction. Asynchronous choice structure in repeated strategic situations may affect the possibility of cooperation in significant ways. When players in a repeated game make asynchronous choices, that is, when players cannot always change their actions simultaneously in each period, it is quite plausible that they can coordinate on some particular actions via short-run commitment or inertia to render unfavorable outcomes infeasible. Indeed, Lagunoff and Matsui (1997) showed that, when a pure coordination game is repeated in a way that only

*I thank three anonymous referees as well as an associate editor for many helpful comments and suggestions.

570: Minimax Sample Complexity for Turn-Based Stochastic Game

Minimax Sample Complexity for Turn-based Stochastic Game. Qiwen Cui (School of Mathematical Sciences, Peking University) and Lin F. Yang (Electrical and Computer Engineering Department, University of California, Los Angeles).

Abstract: The empirical success of multi-agent reinforcement learning is encouraging, while few theoretical guarantees have been revealed. In this work, we prove that the plug-in solver approach, probably the most natural reinforcement learning algorithm, achieves minimax sample complexity for turn-based stochastic game (TBSG). Specifically, we perform planning in an empirical TBSG by utilizing a 'simulator' that allows sampling from arbitrary state-action pair. We show that the empirical Nash equilibrium strategy is an approximate Nash equilibrium strategy in the true TBSG and give both problem-dependent and problem-independent bound.

[Introduction, excerpt:] guarantees are rather rare due to complex interaction between agents that makes the problem considerably harder than single agent reinforcement learning. This is also known as non-stationarity in MARL, which means when multiple agents alter their strategies based on samples collected from previous strategy, the system becomes non-stationary for each agent and the improvement can not be guaranteed. One fundamental question in MBRL is that how to design efficient algorithms to overcome non-stationarity.

Two-players turn-based stochastic game (TBSG) is a two-agents generalization of Markov decision process (MDP), where two agents choose actions in turn and one agent wants to maximize the total reward while the other wants to minimize it. As a zero-sum game, TBSG is known to have Nash equilibrium strategy [Shapley, 1953], which means

Constructing Stationary Sunspot Equilibria in a Continuous Time Model*

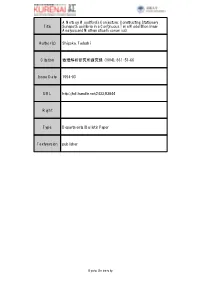

Title: A Note on Woodford's Conjecture: Constructing Stationary Sunspot Equilibria in a Continuous Time Model (Nonlinear Analysis and Mathematical Economics). Author(s): Shigoka, Tadashi. Citation: RIMS Kôkyûroku (数理解析研究所講究録) (1994), 861: 51-66. Issue Date: 1994-03. URL: http://hdl.handle.net/2433/83844. Type: Departmental Bulletin Paper. Textversion: publisher. Kyoto University.

A Note on Woodford's Conjecture: Constructing Stationary Sunspot Equilibria in a Continuous Time Model*. Tadashi Shigoka, Kyoto Institute of Economic Research, Kyoto University, Yoshidamachi Sakyoku Kyoto 606 Japan.

Abstract: We show how to construct stationary sunspot equilibria in a continuous time model, where equilibrium is indeterminate near either a steady state or a closed orbit. Woodford's conjecture that the indeterminacy of equilibrium implies the existence of stationary sunspot equilibria remains valid in a continuous time model.

Introduction: If for given equilibrium dynamics there exist a continuum of non-stationary perfect foresight equilibria all converging asymptotically to a steady state (a deterministic cycle resp.), we say the equilibrium dynamics is indeterminate near the steady state (the deterministic cycle resp.). Suppose that the fundamental characteristics of an economy are deterministic, but that economic agents believe nevertheless that equilibrium dynamics is affected by random factors apparently irrelevant to the fundamental characteristics (sunspots). This prophecy could be self-fulfilling, and one will get a sunspot equilibrium, if the resulting equilibrium dynamics is subject to a nontrivial stochastic process and confirms the agents' belief. See Shell [19], and Cass-Shell [3]. Woodford [23] suggested that there exists a close relation between the indeterminacy of equilibrium near a deterministic steady state and the existence of stationary sunspot equilibria in the immediate vicinity of it.

Games with Hidden Information

Games with Hidden Information. R&N Chapter 6, R&N Section 17.6.

• Assumptions so far: – Two-player game: Player A and B. – Perfect information: Both players see all the states and decisions. Each decision is made sequentially. – Zero-sum: Player A's gain is exactly equal to Player B's loss.

• We are going to eliminate these constraints. We will eliminate first the assumption of "perfect information", leading to far more realistic models. – Some more game-theoretic definitions: matrix games – Minimax results for perfect information games – Minimax results for hidden information games

[Figure: game tree. Player A moves at node 1 (L or R), Player B moves at node 2 or node 3 (L or R), and Player A moves again at node 4 (L or R); the leaves carry Player A's payoffs.]

Extensive form of game: Represent the game by a tree. A pure strategy for a player defines the move that the player would make for every possible state that the player would see.

Pure strategies for A: Strategy I: (1 L, 4 L); Strategy II: (1 L, 4 R); Strategy III: (1 R, 4 L); Strategy IV: (1 R, 4 R).

Pure strategies for B: Strategy I: (2 L, 3 L); Strategy II: (2 L, 3 R); Strategy III: (2 R, 3 L); Strategy IV: (2 R, 3 R).

In general: If N states and B moves, how many pure strategies exist?

Matrix form of games. Rows are pure strategies for Player A, columns are pure strategies for Player B, and each entry is Player A's payoff:

| A \ B | I | II | III | IV |
|---|---|---|---|---|
| I | -1 | -1 | +2 | +2 |
| II | +4 | +4 | +2 | +2 |
| III | +5 | +1 | +5 | +1 |
| IV | +5 | +1 | +5 | +1 |

For example, the entry in row I, column III (+2) is Player A's payoff if the game is played with strategy I by Player A and strategy III by Player B.

• Matrix normal form of games: The table contains the payoffs for all the possible combinations of pure strategies for Player A and Player B. • The table characterizes the game completely; there is no need for any additional information about rules, etc.
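The passage from the extensive form to the matrix form can be sketched in a few lines of code. The example below is an illustration under stated assumptions, not material from the slides: the tree encoding, the names `TREE`, `pure_strategies`, and `play`, and in particular the leaf payoffs are reconstructed from the payoff matrix rather than read from the figure.

```python
from itertools import product
from typing import Dict, List, Union

Node = Union[str, int]  # a string names an internal node, an int is a leaf payoff

# Assumed tree: node -> (player to move, {move: child}). Leaves are Player A's payoffs.
TREE: Dict[str, tuple] = {
    "1": ("A", {"L": "2", "R": "3"}),
    "2": ("B", {"L": "4", "R": 2}),
    "3": ("B", {"L": 5, "R": 1}),
    "4": ("A", {"L": -1, "R": 4}),
}


def pure_strategies(player: str) -> List[Dict[str, str]]:
    """Every assignment of a move (L or R) to each node where `player` moves."""
    nodes = sorted(n for n, (p, _) in TREE.items() if p == player)
    return [dict(zip(nodes, moves)) for moves in product("LR", repeat=len(nodes))]


def play(strategy_a: Dict[str, str], strategy_b: Dict[str, str]) -> int:
    """Follow both strategies from the root and return Player A's payoff."""
    node: Node = "1"
    while isinstance(node, str):
        player, children = TREE[node]
        move = (strategy_a if player == "A" else strategy_b)[node]
        node = children[move]
    return node


A_STRATEGIES = pure_strategies("A")  # I..IV in the order used in the slides
B_STRATEGIES = pure_strategies("B")
MATRIX = [[play(a, b) for b in B_STRATEGIES] for a in A_STRATEGIES]

if __name__ == "__main__":
    for row in MATRIX:
        print(row)
    maximin = max(min(row) for row in MATRIX)        # best guaranteed payoff for A
    minimax = min(max(col) for col in zip(*MATRIX))  # best cap B can enforce
    print(maximin, minimax)
```

Under the assumed leaf payoffs, the generated matrix matches the table row by row, and the maximin and minimax values over pure strategies coincide, as one expects for a perfect information game.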

Combinatorial Games

Stat155 Game Theory. Lecture 11: Midterm 1 Review. Peter Bartlett. September 29, 2016.

Midterm 1: "This is an open book exam: you can use any printed or written material, but you cannot use a laptop, tablet, or phone (or any device that can communicate). There are three questions, each consisting of three parts. Each part of each question carries equal weight. Answer each question in the space provided."

Topics: Combinatorial games: positions, moves, terminal positions, impartial/partisan, progressively bounded; progressively bounded impartial and partisan games; the sets N and P; Theorem: Someone can win; Examples: Subtraction, Chomp, Nim, Rims, Staircase Nim, Hex. Zero sum games: payoff matrices, pure and mixed strategies, safety strategies; Von Neumann's minimax theorem; solving two player zero-sum games (saddle points; equalizing strategies; solving $2 \times 2$ games; dominated strategies; $2 \times n$ and $m \times 2$ games; principle of indifference; symmetry: invariance under permutations).

Definitions: Combinatorial games. A combinatorial game has: two players, Player I and Player II; a set of positions $X$; for each player, a set of legal moves between positions, that is, a set of ordered pairs (current position, next position): $M_I, M_{II} \subset X \times X$. Players alternately choose moves. Play continues until some player cannot move. Normal play: the player that cannot move loses the game.

Definitions: Combinatorial games. Terminology: An impartial game has the same set of legal moves for both players: $M_I = M_{II}$. A partisan game has different sets of legal moves for the players. A terminal position for a player has no legal move to another position.

Impartial combinatorial games and winning strategies. Theorem: In a progressively bounded impartial
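The sets N and P listed among the topics can be computed directly for a small impartial game under normal play. The sketch below is an illustration, not part of the lecture: it uses the standard convention that a position is in P when the previous player (the one who just moved) can force a win and in N when the next player can, and the function name `p_positions` and the choice of subtraction set {1, 2, 3} are assumptions.

```python
from typing import List, Set


def p_positions(max_chips: int, subtraction_set: Set[int]) -> List[int]:
    """P-positions of a subtraction game with 0..max_chips chips, normal play.

    A move removes s chips for some s in subtraction_set. The player who
    cannot move loses, so a position with no legal move is a P-position.
    """
    is_p: List[bool] = []
    for n in range(max_chips + 1):
        reachable = [n - s for s in subtraction_set if s <= n]
        # P-position: every legal move leads to an N-position
        # (vacuously true when there is no legal move).
        is_p.append(all(not is_p[m] for m in reachable))
    return [n for n, p in enumerate(is_p) if p]


if __name__ == "__main__":
    print(p_positions(12, {1, 2, 3}))  # [0, 4, 8, 12]
```

Every position is classified as either N or P because the chip count strictly decreases with each move, which is the progressively bounded property in this example.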

Strategies in Games: a Logic-Automata Study

Strategies in games: a logic-automata study. S. Ghosh (Indian Statistical Institute, SETS Campus, Chennai 600 113, India) and R. Ramanujam (The Institute of Mathematical Sciences, C.I.T. Campus, Chennai 600 113, India).

1 Introduction

Overview. There is now a growing body of research on formal algorithmic models of social procedures and interactions between rational agents. These models attempt to identify logical elements in our day-to-day social activities. When interactions are modeled as games, reasoning involves analysis of agents' long-term powers for influencing outcomes. Agents devise their respective strategies on how to interact so as to ensure maximal gain. In recent years, researchers have tried to devise logics and models in which strategies are "first class citizens", rather than unspecified means to ensure outcomes. Yet, these cover only basic models, leaving open a range of interesting issues, e.g. communication and coordination between players, especially in games of imperfect information. Game models are also relevant in the context of system design and verification. In this article we will discuss research on logic and automata-theoretic models of games and strategic reasoning in multi-agent systems. We will get acquainted with the basic tools and techniques for this emerging area, and provide pointers to the exciting questions it offers.

Content. This article consists of 5 sections apart from the introduction. An outline of the contents of the other sections is given below. 1. Section 2 (Exploring structure in strategies): A short introduction to games in extensive form, and strategies.

Recursion & the Minimax Algorithm Winning Tic-Tac-Toe How to Pass The

Recursion & the Minimax Algorithm (CompSci 4, Recursion & Minimax).

Key to Acing Computer Science: If you understand everything, ace your computer science course. Otherwise, study & program something you don't understand, then see Key to Acing Computer Science.

Winning Tic-tac-toe: Anticipate the implications of your move. Avoid moves which could enable your opponent to win. Attempt moves which would force your opponent to lose, so you win.

How to pass the buck: recursion! Imagine this: You have all sorts of friends who are willing to help you do your work with two caveats: they won't do all of your work, and they will only do the type of work you have to do. Example: How would you do your homework consisting of 100 calculus problems? Easy – do the first problem yourself and ask your friend to do the rest.

How could this approach work? What actually happens is that your friends doing most of your work have friends of their own. Everyone eventually does a small part of the work and pieces it back together to do the work in its entirety. In the last example, 100 total people would be working on the homework, each person doing only one problem and passing on the rest. Important: the person to do the last homework problem does it entirely without help.

The Parts of Recursion: Base Case – this is the simplest form of the problem which can be solved directly.

Example: Finding the Maximum: Imagine you are given a stack of 1000 unsorted papers
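The "do the first one yourself and pass on the rest" idea can be sketched for the maximum-finding example. This is a minimal illustration, not the slides' code: the function name `recursive_max` and the sample numbers are hypothetical, and a short list is used because each paper adds one level of recursion.

```python
from typing import List


def recursive_max(papers: List[int]) -> int:
    """Largest value in a non-empty list, found by passing the buck."""
    if not papers:
        raise ValueError("need at least one paper")
    # Base case: a single paper is the maximum, solved without any help.
    if len(papers) == 1:
        return papers[0]
    # Recursive case: keep the first paper and hand the rest to a "friend".
    first, rest = papers[0], papers[1:]
    best_of_rest = recursive_max(rest)
    # Piece the answer back together: compare your paper with the friend's result.
    return first if first > best_of_rest else best_of_rest


if __name__ == "__main__":
    print(recursive_max([3, 9, 2, 7]))  # 9
```

The last call in the chain handles a one-element list directly, which mirrors the person who does the final homework problem entirely without help.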