Wesleyan University Electronic Music Studios Report



Recommended publications


Delia Derbyshire

www.delia-derbyshire.org

Delia Derbyshire was born in Coventry, England, in 1937. She was educated at Coventry Grammar School and Girton College, Cambridge, where she was awarded a degree in mathematics and music. In 1959, on approaching Decca Records, Delia was told that the company did not employ women in its recording studios, so she went to work for the UN in Geneva before returning to London to work for the music publishers Boosey & Hawkes. In 1960 Delia joined the BBC as a trainee studio manager. She excelled in this field, but when it became apparent that the fledgling Radiophonic Workshop was under the same operational umbrella, she asked for an attachment there: an unheard-of request, but one which was, nonetheless, granted. Delia remained 'temporarily attached' for years, regularly deputising for the Head and influencing many of her trainee colleagues. To begin with, Delia thought she had found her own private paradise, where she could combine her interests in the theory and perception of sound, modes and tunings, and the communication of moods using purely electronic sources. Within a matter of months she had created her recording of Ron Grainer's Doctor Who theme, one of the most famous and instantly recognisable TV themes ever. On first hearing it Grainer was tickled pink: "Did I really write this?" he asked. "Most of it," replied Derbyshire. Thus began what is still referred to as the Golden Age of the Radiophonic Workshop. Initially set up as a service department for Radio Drama, it had always been run by someone with a drama background.

Synchronous Programming in Audio Processing Karim Barkati, Pierre Jouvelot

To cite this version: Karim Barkati, Pierre Jouvelot. Synchronous programming in audio processing. ACM Computing Surveys, Association for Computing Machinery, 2013, 46 (2), pp.24. 10.1145/2543581.2543591. hal-01540047

HAL Id: hal-01540047, https://hal-mines-paristech.archives-ouvertes.fr/hal-01540047, submitted on 15 Jun 2017.

Synchronous Programming in Audio Processing: A Lookup Table Oscillator Case Study

KARIM BARKATI and PIERRE JOUVELOT, CRI, Mathématiques et systèmes, MINES ParisTech, France

The adequacy of a programming language to a given software project or application domain is often considered a key factor of success in software development and engineering, even though little theoretical or practical information is readily available to help make an informed decision. In this paper, we address a particular version of this issue by comparing the adequacy of general-purpose synchronous programming languages to more domain-specific …

Gardner • Even Orpheus Needs a Synthi

James Gardner: Even Orpheus Needs a Synthi

Since his return to active service a few years ago, Peter Zinovieff has appeared quite frequently in interviews in the mainstream press and online outlets, talking not only about his recent sonic art projects but also about the work he did in the 1960s and 70s at his own pioneering computer electronic music studio in Putney. And no such interview would be complete without referring to EMS, the synthesiser company he co-founded in 1969, or namechecking the many rock celebrities who used its products, such as the VCS3 and Synthi AKS synthesisers. Before this Indian summer (he is now 82) there had been a gap of some 30 years in his compositional activity since the demise of his studio. I say ‘compositional’ activity, but in the 60s and 70s he saw himself as more animateur than composer and it is perhaps in that capacity that his unique contribution to British electronic music during those two decades is best understood. In this article I will discuss just some of the work that was done at Zinovieff’s studio during its relatively brief existence and consider two recent contributions to the documentation and contextualization of that work: Tom Hall’s chapter on Harrison Birtwistle’s electronic music collaborations with Zinovieff; and the double CD Electronic Calendar: The EMS Tapes, which presents a substantial sampling of the studio’s output between 1966 and 1979. Electronic Calendar, a handsome package to be sure, consists of two CDs and a lavishly-illustrated booklet with lengthy texts.

Peter Blasser CV

Peter Blasser – 410 362 8364

Experience

Ten years running a synthesizer business, ciat-lonbarde, with a focus on touch, gesture, and spatial expression into audio. All the while, documenting inventions and creations in digital video, audio, and still image, and disseminating this information via HTML web page design and YouTube. Leading workshops at various skill levels, exploring through manual labor how synthesizers work hand in hand with acoustics, culminating in a montage of participants’ pieces. Performance as touring musician, conceptual lecturer, or anything in between. As an undergraduate, served as apprentice to guild pipe organ builders. Experience as racquetball coach. Low brass wind instrumentalist. Fluent in Java, Max/MSP, SuperCollider, Csound, Pro Tools, C++, SketchUp, Osmond PCB, Dreamweaver, and JavaScript.

Education/Awards

• 2002 Oberlin College, BA in Chinese, BM in TIMARA (Technology in Music and Related Arts), minors in Computer Science and Classics.
• 2004 Fondation Daniel Langlois, Art and Technology Grant for the project “Shinths”
• 2007 Baltimore City Grant for Artists, Craft Category
• 2008 Baltimore City Grant for Community Arts Projects, Urban Gardening

List of Appearances

• "Visiting Professor, TIMARA dep't, Environmental Studies dep't", Oberlin College, Oberlin, Ohio, Spring 2011.
• “Babier, piece for Dancer, Elasticity Transducer, and Max/MSP”, High Zero Festival of Experimental Improvised Music, Theatre Project, Baltimore, September 2010.
• "Sejayno:Cezanno (Opera)", CEZANNE FAST FORWARD, Baltimore Museum of Art, May 21, 2010.
• “Deerhorn Tapestry Installation”, Curators Incubator, 2009. MAP Maryland Art Place, September 15 – October 24, 2009. Curated by Shelly Blake-Pock, teachpaperless.blogspot.com
• “Deerhorn Micro-Cottage and Radionic Fish Drier”, Electro-Music Gathering, New Jersey, October 28-29, 2009.

ChucK: A Strongly Timed Computer Music Language

Ge Wang,∗ Perry R. Cook,† and Spencer Salazar∗

ChucK: A Strongly Timed Computer Music Language

∗Center for Computer Research in Music and Acoustics (CCRMA), Stanford University, 660 Lomita Drive, Stanford, California 94306, USA, {ge, spencer}@ccrma.stanford.edu
†Department of Computer Science, Princeton University, 35 Olden Street, Princeton, New Jersey 08540, USA

Abstract: ChucK is a programming language designed for computer music. It aims to be expressive and straightforward to read and write with respect to time and concurrency, and to provide a platform for precise audio synthesis and analysis and for rapid experimentation in computer music. In particular, ChucK defines the notion of a strongly timed audio programming language, comprising a versatile time-based programming model that allows programmers to flexibly and precisely control the flow of time in code and use the keyword now as a time-aware control construct, and gives programmers the ability to use the timing mechanism to realize sample-accurate concurrent programming. Several case studies are presented that illustrate the workings, properties, and personality of the language. We also discuss applications of ChucK in laptop orchestras, computer music pedagogy, and mobile music instruments. Properties and affordances of the language and its future directions are outlined.

What Is ChucK?

ChucK (Wang 2008) is a computer music programming language. First released in 2003, it is designed to support a wide array of real-time and interactive tasks such as sound synthesis, physical modeling, gesture mapping, algorithmic composition, sonification, audio analysis, and live performance. … form the notion of a strongly timed computer music programming language.

Two Observations about Audio Programming

Time is intimately connected with sound and is …
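The idea of code that explicitly controls the flow of logical time can be illustrated with a small Python sketch. This is not ChucK code and makes no claim about how ChucK is implemented: the class `VirtualMachine`, the function `ticker`, and the use of generators are all assumptions made for illustration. Each concurrent routine yields the number of samples it wants to wait, and a scheduler advances a shared `now` value only in those steps, so the interleaving is deterministic.

```python
import heapq


class VirtualMachine:
    """Toy scheduler with a logical clock (hypothetical, not ChucK's VM)."""

    def __init__(self):
        self.now = 0        # logical time, counted in samples
        self._queue = []    # heap of (wake_time, spawn_order, generator)
        self._spawned = 0

    def spork(self, routine):
        """Register a generator-based routine to start at the current time."""
        heapq.heappush(self._queue, (self.now, self._spawned, routine))
        self._spawned += 1

    def run(self):
        """Resume routines in wake-time order until all have finished."""
        while self._queue:
            wake, order, routine = heapq.heappop(self._queue)
            self.now = wake                 # time advances only here
            try:
                duration = next(routine)    # routine runs, then asks to wait
            except StopIteration:
                continue                    # routine finished
            heapq.heappush(self._queue, (wake + duration, order, routine))


def ticker(vm, name, period, count, log):
    """Record the logical time, then wait `period` samples; repeat `count` times."""
    for _ in range(count):
        log.append((vm.now, name))
        yield period


vm = VirtualMachine()
events = []
vm.spork(ticker(vm, "a", 3, 3, events))
vm.spork(ticker(vm, "b", 2, 4, events))
vm.run()
# events == [(0, 'a'), (0, 'b'), (2, 'b'), (3, 'a'), (4, 'b'), (6, 'a'), (6, 'b')]
```

Because the clock is logical rather than wall-clock, the recorded times do not depend on how long each routine takes to execute, which is the property the sketch is meant to show.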

Implementing Stochastic Synthesis for SuperCollider and iPhone

Implementing stochastic synthesis for SuperCollider and iPhone

Nick Collins, Department of Informatics, University of Sussex, UK. N [dot] Collins ]at[ sussex [dot] ac [dot] uk - http://www.cogs.susx.ac.uk/users/nc81/index.html

Proceedings of the Xenakis International Symposium, Southbank Centre, London, 1-3 April 2011 - www.gold.ac.uk/ccmc/xenakis-international-symposium

This article reflects on Xenakis' contribution to sound synthesis, and explores practical tools for music making touched by his ideas on stochastic waveform generation. Implementations of the GENDYN algorithm for the SuperCollider audio programming language and in an iPhone app will be discussed. Some technical specifics will be reported without overburdening the exposition, including original directions in computer music research inspired by his ideas. The mass exposure of the iGendyn iPhone app in particular has provided a chance to reach a wider audience.

Stochastic construction in music can apply at many timescales, and Xenakis was intrigued by the possibility of compositional unification through simultaneous engagement at multiple levels. In General Dynamic Stochastic Synthesis Xenakis found a potent way to extend stochastic music to the sample level in digital sound synthesis (Xenakis 1992, Serra 1993, Roads 1996, Hoffmann 2000, Harley 2004, Brown 2005, Luque 2006, Collins 2008, Luque 2009). In the central algorithm, samples are specified as a result of breakpoint interpolation synthesis (Roads 1996), where breakpoint positions in time and amplitude are subject to probabilistic perturbation. Random walks (up to second order) are followed with respect to various probability distributions for perturbation size. Figure 1 illustrates this for a single breakpoint; a full GENDYN implementation would allow a set of breakpoints, with each breakpoint in the set updated by individual perturbations each cycle.
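The breakpoint scheme described above can be sketched in a few lines of Python. This is a deliberately simplified illustration, not the GENDYN algorithm as implemented by Xenakis or in SuperCollider: it uses first-order random walks only, uniform perturbations, and reflection at fixed bounds to keep values in range, all of which are assumptions. The names `gendyn_like` and `mirror` and every parameter value are hypothetical.

```python
import random


def mirror(value, lo, hi):
    """Reflect a value back into [lo, hi] (assumed way of bounding the walk)."""
    while value < lo or value > hi:
        if value < lo:
            value = 2 * lo - value
        if value > hi:
            value = 2 * hi - value
    return value


def gendyn_like(num_breakpoints=8, cycles=50, amp_step=0.1,
                dur_step=2.0, min_dur=4.0, max_dur=40.0, seed=0):
    """Return samples from a perturbed-breakpoint waveform (simplified sketch)."""
    rng = random.Random(seed)
    amps = [0.0] * num_breakpoints    # breakpoint amplitudes in [-1, 1]
    durs = [20.0] * num_breakpoints   # segment lengths in samples
    samples = []
    prev_amp = 0.0
    for _ in range(cycles):
        for i in range(num_breakpoints):
            # First-order random walk: perturb each breakpoint once per cycle.
            amps[i] = mirror(amps[i] + rng.uniform(-amp_step, amp_step), -1.0, 1.0)
            durs[i] = mirror(durs[i] + rng.uniform(-dur_step, dur_step),
                             min_dur, max_dur)
            # Linear interpolation from the previous breakpoint to this one.
            n = max(1, round(durs[i]))
            for k in range(n):
                samples.append(prev_amp + (amps[i] - prev_amp) * (k / n))
            prev_amp = amps[i]
    return samples


wave = gendyn_like(seed=1)
```

Since the segment durations drift along with the amplitudes, both the pitch and the timbre of the resulting waveform wander from cycle to cycle; a second-order walk would perturb the step sizes themselves rather than the positions directly.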

iSuperColliderKit: A Toolkit for iOS Using an Internal SuperCollider Server as a Sound Engine

ICMC 2015 – Sept. 25 - Oct. 1, 2015 – CEMI, University of North Texas

iSuperColliderKit: A Toolkit for iOS Using an Internal SuperCollider Server as a Sound Engine

Akinori Ito (Tokyo University of Technology), Kengo Watanabe (Watanabe-DENKI Inc.), Genki Kuroda (Tokyo University of Technology), Ken’ichiro Ito (Tokyo University of Technology)

ABSTRACT

iSuperColliderKit is a toolkit for iOS using an internal SuperCollider Server as a sound engine. Through this research, we have adapted the existing SuperCollider source code for iOS to the latest environment. Further, we attempted to detach the UI from the sound engine so that native iOS visual objects built in Objective-C or Swift can send any user interaction events to the internal SuperCollider server. As a result, iSuperColliderKit makes it possible to utilize the vast resources of dynamic real-time changing musical elements or algorithmic composition on SuperCol…

… The editor client sends the OSC code fragments to its server. Because it adopts this model, SuperCollider lets a programmer dynamically change musical elements such as phrases, rhythms, scales and so on. This real-time interactivity is used mainly in the live-coding field. If iOS developers want to build an application on the “sound-server” model, using SuperCollider seems to be a reasonable choice. However, the porting situation is not so good. SonicPi[5] is one musical programming environment that has a SuperCollider server internally. However, it is only for Raspberry Pi, Windows and OSX.

The Viability of the Web Browser As a Computer Music Platform

Lonce Wyse and Srikumar Subramanian

The Viability of the Web Browser as a Computer Music Platform

Communications and New Media Department, National University of Singapore, Blk AS6, #03-41, 11 Computing Drive, Singapore 117416

Abstract: The computer music community has historically pushed the boundaries of technologies for music-making, using and developing cutting-edge computing, communication, and interfaces in a wide variety of creative practices to meet exacting standards of quality. Several separate systems and protocols have been developed to serve this community, such as Max/MSP and Pd for synthesis and teaching, JackTrip for networked audio, MIDI/OSC for communication, as well as Max/MSP and TouchOSC for interface design, to name a few. With the still-nascent Web Audio API standard and related technologies, we are now, more than ever, seeing an increase in these capabilities and their integration in a single ubiquitous platform: the Web browser. In this article, we examine the suitability of the Web browser as a computer music platform in critical aspects of audio synthesis, timing, I/O, and communication. We focus on the new Web Audio API and situate it in the context of associated technologies to understand how well they together can be expected to meet the musical, computational, and development needs of the computer music community. We identify timing and extensibility as two key areas that still need work in order to meet those needs.

To date, despite the work of a few intrepid musical explorers, the Web browser platform has not been widely considered as a viable platform for the development of computer music.

Why would musicians care about working in the browser, a platform not specifically designed for computer music? Max/MSP is an example of a …

Delia Derbyshire Sound and Music for the BBC Radiophonic Workshop, 1962-1973

Delia Derbyshire: Sound and Music for the BBC Radiophonic Workshop, 1962-1973

Teresa Winter, PhD, University of York, Music, June 2015

Abstract

This thesis explores the electronic music and sound created by Delia Derbyshire in the BBC’s Radiophonic Workshop between 1962 and 1973. After her resignation from the BBC in the early 1970s, the scope and breadth of her musical work there became obscured, and so this research is primarily presented as an open-ended enquiry into that work. During the course of my enquiries, I found a much wider variety of music than the popular perception of Derbyshire suggests: it ranged from theme tunes to children’s television programmes to concrete poetry to intricate experimental soundscapes of synthesis. While her most famous work, the theme to the science fiction television programme Doctor Who (1963), has been discussed many times because of the popularity of the show, most of the pieces here have not previously received detailed attention. Some are not widely available at all and so are practically unknown and unexplored. Despite being the first institutional electronic music studio in Britain, the Workshop’s role in broadcasting, rather than autonomous music, has resulted in it being overlooked in historical accounts of electronic music, and very little research has been undertaken to discover more about the contents of its extensive archived back catalogue. Conversely, largely because of her role in the creation of its most recognised work, the previously mentioned Doctor Who theme tune, Derbyshire is often positioned as a pioneer in the medium for bringing electronic music to a large audience.

Rock Around Sonic Pi: Sam Aaron, Live Coding, and Music Education

Rock around Sonic Pi: Sam Aaron, live coding, and music education

Roberto Agostini, IC9 Bologna, Servizio Marconi TSI (USR-ER)

1. "Hands-On" training with Sam Aaron

“Imagine if the only allowed use of reading and writing was to make legal documents. Would that be a nice world? Now, today’s programmers are all like that.” This enigmatic and somewhat provocative image, which Sam Aaron offered early in a recent talk, encapsulates an original vision of programming that is rich in implications, and not only for music education. But first things first: on 14 February 2020, at the Opificio Golinelli in Bologna, Sam Aaron, creator of the musical live-coding software Sonic Pi, was the protagonist of a packed afternoon devoted to computational thinking in music, titled “Rock around Sonic Pi”. The initiative was part of the “Formazione Hands-On” training program promoted by the Future Lab of the Istituto Comprensivo di Ozzano dell’Emilia in collaboration with the Servizio Marconi TSI of the USR-ER. In addition to the talk, which was open to all, Sam Aaron led a three-hour, limited-enrollment workshop for school teachers.

● Presentation and detailed program of the “Rock Around Sonic Pi” day (presentation by Rosa Maria Caffio).
● Full recording of Sam Aaron’s talk (20.2.2020) (recording by the staff of the Opificio Golinelli).

A SuperCollider Environment for Synthesis-Oriented Live Coding

Colliding: a SuperCollider environment for synthesis-oriented live coding

Gerard Roma, CVSSP, University of Surrey, Guildford, United Kingdom

Abstract. One of the motivations for live coding is the freedom and flexibility that a programming language puts in the hands of the performer. At the same time, systems with explicit constraints facilitate learning and often boost creativity in unexpected ways. Some simplified languages and environments for music live coding have been developed during the last few years, most often focusing on musical events, patterns and sequences. This paper describes a constrained environment aimed at exploring the creation and modification of sound synthesis and processing networks in real time, using a subset of the SuperCollider programming language. The system has been used in educational and concert settings, two common applications of live coding that benefit from the lower cognitive load.

Keywords: Live coding, sound synthesis, live interfaces

Introduction

The world of specialized music creation programming languages has been generally dominated by the Music-N paradigm pioneered by Max Mathews (Mathews 1963). Programming languages like CSound or SuperCollider embrace the division of the music production task in two separate levels: a signal processing level, which is used to define instruments as networks of unit generators, and a compositional level that is used to assemble and control the instruments. An exception to this is Faust, which is exclusively concerned with signal processing. Max and Pure Data use a different type of connection for signals and events, although under a similar interaction paradigm. Similarly, ChucK has specific features for connecting unit generators and controlling them along time.

Holmes Electronic and Experimental Music

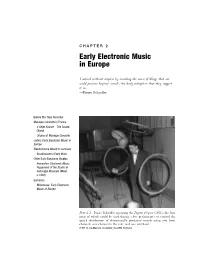

CHAPTER 2: Early Electronic Music in Europe

"I noticed without surprise by recording the noise of things that one could perceive beyond sounds, the daily metaphors that they suggest to us." – Pierre Schaeffer

Before the Tape Recorder
Musique Concrète in France
L’Objet Sonore – The Sound Object
Origins of Musique Concrète
Listen: Early Electronic Music in Europe
Elektronische Musik in Germany
Stockhausen’s Early Work
Other Early European Studios
Innovation: Electronic Music Equipment of the Studio di Fonologia Musicale (Milan, c.1960)
Summary
Milestones: Early Electronic Music of Europe

Plate 2.1 Pierre Schaeffer operating the Pupitre d’espace (1951), the four rings of which could be used during a live performance to control the spatial distribution of electronically produced sounds using two front channels: one channel in the rear, and one overhead. (1951 © Ina/Maurice Lecardent, Ina GRM Archives)

EARLY HISTORY – PREDECESSORS AND PIONEERS

A convergence of new technologies and a general cultural backlash against Old World arts and values made conditions favorable for the rise of electronic music in the years following World War II. Musical ideas that met with punishing repression and indifference prior to the war became less odious to a new generation of listeners who embraced futuristic advances of the atomic age. Prior to World War II, electronic music was anchored down by a reliance on live performance. Only a few composers, Varèse and Cage among them, anticipated the importance of the recording medium to the growth of electronic music. This chapter traces a technological transition from the turntable to the magnetic tape recorder as well as the transformation of electronic music from a medium of live performance to that of recorded media.