Theories of Fairness and Reciprocity - Evidence and Economic Applications∗

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Herbert Gintis – Samuel Bowles – Robert Boyd – Ernst Fehr (Eds.): Moral [...] Their Distribution Preferences, and That They Do So Differently in Different Situations

Sociologický časopis/Czech Sociological Review, 2008, Vol. 44, No. 6

[...] social capital theory, which shows that social collaboration is built on social networks that underlie norms of reciprocity and trustworthiness. The development of these prosocial dispositions is in turn enabled in societies that further extra-familial ties and disregard or transcend purely ‘amoral familist’ interactions [Banfield 1958].

This research project nevertheless leaves several unresolved problems. First, there is the problem of causality, which derives from a major theoretical dilemma in the social sciences. To what extent are prosocial dispositions the result of structural constraints, such as market integration, or rather an active element in structuring these constraints [Giddens 1997]? Joseph Henrich (Chapter 2) discusses this problem on a theoretical level by explaining the different mechanisms through which the structure of interaction affects preferences. Yet only future longitudinal research will [...]

[...] the face of the evolutionary logic in which material advantages can be achieved by adopting self-interested preferences?

Clara Sabbagh
University of Haifa
[email protected]

References
Banfield, Edward C. 1958. The Moral Basis of a Backward Society. Glencoe, IL: Free Press.
Camerer, Colin F. 2003. Behavioral Game Theory. New York: Russell Sage.
Deutsch, Morton. 1985. Distributive Justice. New Haven: Yale University Press.
Giddens, Anthony. 1997. Sociology. Cambridge, UK: Polity Press.
Putnam, Robert D. 1993. Making Democracy Work. Civic Traditions in Modern Italy. Princeton, NJ: Princeton University Press.
Sabbagh, Clara and Deborah Golden. 2007. ‘Juxtaposing Etic and Emic Perspectives: A Reflection on Three Studies on Distributive Justice.’ Social Justice Research 20: 372–387.

A Monetary History of the United States, 1867-1960

Journal of Monetary Economics 34 (1994) 5-16 (Elsevier)

Review of Milton Friedman and Anna J. Schwartz’s ‘A monetary history of the United States, 1867-1960’

Robert E. Lucas, Jr., Department of Economics, University of Chicago, Chicago, IL 60637, USA

(Received October 1993; final version received December 1993)

Key words: Monetary history; Monetary policy
JEL classification: E5; B22

A contribution to monetary economics reviewed again after 30 years - quite an occasion! Keynes’s General Theory has certainly had reappraisals on many anniversaries, and perhaps Patinkin’s Money, Interest and Prices. I cannot think of any others. Milton Friedman and Anna Schwartz’s A Monetary History of the United States has become a classic. People are even beginning to quote from it out of context in support of views entirely different from any advanced in the book, echoing the compliment - if that is what it is - so often paid to Keynes.

Why do people still read and cite A Monetary History? One reason, certainly, is its beautiful time series on the money supply and its components, extended back to 1867, painstakingly documented and conveniently presented. Such a gift to the profession merits a long life, perhaps even immortality. But I think it is clear that A Monetary History is much more than a collection of useful time series. The book played an important - perhaps even decisive - role in the 1960s’ debates over stabilization policy between Keynesians and monetarists. It organized nearly a century of U.S. macroeconomic evidence in a way that has had great influence on subsequent statistical and theoretical research.

Surviving the Titanic Disaster: Economic, Natural and Social Determinants

econstor: Make Your Publications Visible. A Service of ZBW, Leibniz-Informationszentrum Wirtschaft (Leibniz Information Centre for Economics).

Frey, Bruno S.; Savage, David A.; Torgler, Benno

Working Paper
Surviving the Titanic Disaster: Economic, Natural and Social Determinants

CREMA Working Paper, No. 2009-03

Provided in Cooperation with: CREMA - Center for Research in Economics, Management and the Arts, Zürich

Suggested Citation: Frey, Bruno S.; Savage, David A.; Torgler, Benno (2009) : Surviving the Titanic Disaster: Economic, Natural and Social Determinants, CREMA Working Paper, No. 2009-03, Center for Research in Economics, Management and the Arts (CREMA), Basel

This Version is available at: http://hdl.handle.net/10419/214430

Terms of use: Documents in EconStor may be saved and copied for your personal and scholarly purposes. You are not to copy documents for public or commercial purposes, to exhibit the documents publicly, to make them publicly available on the internet, or to distribute or otherwise use the documents in public. If the documents have been made available under an Open Content Licence (especially Creative Commons Licences), you may exercise further usage rights as specified in the indicated licence.

www.econstor.eu

CREMA Center for Research in Economics, Management and the Arts
Surviving the Titanic Disaster: Economic, Natural and Social Determinants
Bruno S.

Prof. Dr. Armin Falk Biographical Sketch 1998 Phd, University Of

Prof. Dr. Armin Falk

Biographical Sketch
1998: PhD, University of Zurich
1998 - 2003: Assistant professor, University of Zurich
2003 - 2005: Lecturer, Central European University (Budapest)
2003 - today: Research Director, Institute for the Study of Labor (IZA)
2003 - today: Professor of Economics, University of Bonn
Affiliations with CESifo, CEPR

Research Interests
Labor economics, behavioral and experimental economics

Selected Journal Publications
“Fairness Perceptions and Reservation Wages - The Behavioral Effects of Minimum Wage Laws” (with Ernst Fehr and Christian Zehnder), Quarterly Journal of Economics, forthcoming.
“Distrust - The Hidden Cost of Control” (with Michael Kosfeld), American Economic Review, forthcoming.
“Clean Evidence on Peer Effects” (with Andrea Ichino), Journal of Labor Economics, forthcoming.
“A Theory of Reciprocity” (with Urs Fischbacher), Games and Economic Behavior 54 (2), 2006, 293-315.
“Driving Forces Behind Informal Sanctions” (with Ernst Fehr and Urs Fischbacher), Econometrica 73 (6), 2005, 2017-2030.
“The Success of Job Applications: A New Approach to Program Evaluation” (with Rafael Lalive and Josef Zweimüller), Labour Economics 12 (6), 2005, 739-748.
“Choosing the Joneses: Endogenous Goals and Reference Standards” (with Markus Knell), Scandinavian Journal of Economics 106 (3), 2004, 417-435.
“Relational Contracts and the Nature of Market Interactions” (with Martin Brown and Ernst Fehr), Econometrica 72, 2004, 747-780.
“On the Nature of Fair Behavior” (with Ernst Fehr and Urs Fischbacher), Economic Inquiry 41 (1), 2003, 20-26.
“Reasons for Conflict - Lessons From Bargaining Experiments” (with Ernst Fehr and Urs Fischbacher), Journal of Institutional and Theoretical Economics 159 (1), 2003, 171-187.
“Why Labour Market Experiments?” (with Ernst Fehr), Labour Economics 10, 2003, 399-406.

Strong Reciprocity and Human Sociality∗

Strong Reciprocity and Human Sociality∗

Herbert Gintis
Department of Economics, University of Massachusetts, Amherst
Phone: 413-586-7756
Fax: 413-586-6014
Email: [email protected]
Web: http://www-unix.oit.umass.edu/~gintis

Running Head: Strong Reciprocity and Human Sociality

March 11, 2000

Abstract

Human groups maintain a high level of sociality despite a low level of relatedness among group members. The behavioral basis of this sociality remains in doubt. This paper reviews the evidence for an empirically identifiable form of prosocial behavior in humans, which we call ‘strong reciprocity,’ that may in part explain human sociality. A strong reciprocator is predisposed to cooperate with others and punish non-cooperators, even when this behavior cannot be justified in terms of extended kinship or reciprocal altruism. We present a simple model, stylized but plausible, of the evolutionary emergence of strong reciprocity.

1 Introduction

Human groups maintain a high level of sociality despite a low level of relatedness among group members. Three types of explanation have been offered for this phenomenon: reciprocal altruism (Trivers 1971, Axelrod and Hamilton 1981), cultural group selection (Cavalli-Sforza and Feldman 1981, Boyd and Richerson 1985) and genetically-based altruism (Lumsden and Wilson 1981, Simon 1993, Wilson and Dugatkin 1997). These approaches are of course not incompatible. Reciprocal [...]

∗ I would like to thank Lee Alan Dugatkin, Ernst Fehr, David Sloan Wilson, and the referees of this Journal for helpful comments, Samuel Bowles and Robert Boyd for many extended discussions of these issues, and the MacArthur Foundation for financial support. This paper is dedicated to the memory of W.

Why Social Preferences Matter – the Impact of Non-Selfish Motives on Competition, Cooperation and Incentives Ernst Fehr and Urs Fischbacher

Institute for Empirical Research in Economics, University of Zurich
Working Paper Series, ISSN 1424-0459
Working Paper No. 84
Forthcoming in: Economic Journal 2002

Why Social Preferences Matter – The Impact of Non-Selfish Motives on Competition, Cooperation and Incentives

Ernst Fehr a) (University of Zurich, CESifo and CEPR) and Urs Fischbacher b) (University of Zürich)

January 2002

Frank Hahn Lecture, Annual Conference of the Royal Economic Society 2001

Abstract: A substantial number of people exhibit social preferences, which means they are not solely motivated by material self-interest but also care positively or negatively for the material payoffs of relevant reference agents. We show empirically that economists fail to understand fundamental economic questions when they disregard social preferences, in particular, that without taking social preferences into account, it is not possible to understand adequately (i) the effects of competition on market outcomes, (ii) laws governing cooperation and collective action, (iii) effects and the determinants of material incentives, (iv) which contracts and property rights arrangements are optimal, and (v) important forces shaping social norms and market failures.

a) Ernst Fehr, Institute for Empirical Research in Economics, University of Zurich, Bluemlisalpstrasse 10, CH-8006 Zurich, Switzerland, email: [email protected].
b) Urs Fischbacher, Institute for Empirical Research in Economics, University of Zurich, Bluemlisalpstrasse 10, CH-8006 Zurich, Switzerland, email: [email protected].

Contents
1 Introduction
2 The Nature of Social Preferences
2.1 Positive and Negative Reciprocity: Two Examples

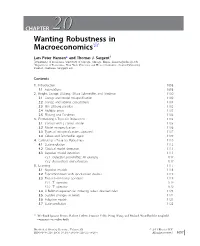

Chapter 20: Wanting Robustness in Macroeconomics

CHAPTER 20
Wanting Robustness in Macroeconomics☆

Lars Peter Hansen* and Thomas J. Sargent†
* Department of Economics, University of Chicago, Chicago, Illinois. [email protected]
† Department of Economics, New York University and Hoover Institution, Stanford University, Stanford, California. [email protected]

Contents
1. Introduction
1.1 Foundations
2. Knight, Savage, Ellsberg, Gilboa-Schmeidler, and Friedman
2.1 Savage and model misspecification
2.2 Savage and rational expectations
2.3 The Ellsberg paradox
2.4 Multiple priors
2.5 Ellsberg and Friedman
3. Formalizing a Taste for Robustness
3.1 Control with a correct model
3.2 Model misspecification
3.3 Types of misspecifications captured
3.4 Gilboa and Schmeidler again
4. Calibrating a Taste for Robustness
4.1 State evolution
4.2 Classical model detection
4.3 Bayesian model detection
4.3.1 Detection probabilities: An example
4.3.2 Reservations and extensions
5. Learning
5.1 Bayesian models
5.2 Experimentation with specification doubts
5.3 Two risk-sensitivity operators
5.3.1 T1 operator
5.3.2 T2 operator
5.4 A Bellman equation for inducing robust decision rules
5.5 Sudden changes in beliefs
5.6 Adaptive models
5.7 State prediction

☆ We thank Ignacio Presno, Robert Tetlow, François Velde, Neng Wang, and Michael Woodford for insightful comments on earlier drafts.

Handbook of Monetary Economics, Volume 3B. © 2011 Elsevier B.V. All rights reserved. ISSN 0169-7218, DOI: 10.1016/S0169-7218(11)03026-7

Walrasian Economics in Retrospect

econstor: Make Your Publications Visible. A Service of ZBW, Leibniz-Informationszentrum Wirtschaft (Leibniz Information Centre for Economics).

Bowles, Samuel; Gintis, Herbert

Working Paper
Walrasian Economics in Retrospect

Working Paper, No. 2000-04

Provided in Cooperation with: Department of Economics, University of Massachusetts

Suggested Citation: Bowles, Samuel; Gintis, Herbert (2000) : Walrasian Economics in Retrospect, Working Paper, No. 2000-04, University of Massachusetts, Department of Economics, Amherst, MA

This Version is available at: http://hdl.handle.net/10419/105719

Terms of use: Documents in EconStor may be saved and copied for your personal and scholarly purposes. You are not to copy documents for public or commercial purposes, to exhibit the documents publicly, to make them publicly available on the internet, or to distribute or otherwise use the documents in public. If the documents have been made available under an Open Content Licence (especially Creative Commons Licences), you may exercise further usage rights as specified in the indicated licence.

www.econstor.eu

WALRASIAN ECONOMICS IN RETROSPECT∗

Department of Economics, University of Massachusetts, Amherst, Massachusetts, 01003

Samuel Bowles and Herbert Gintis

February 4, 2000

Abstract

Two basic tenets of the Walrasian model, behavior based on self-interested exogenous preferences and complete and costless contracting, have recently come under critical scrutiny.

Pricing Uncertainty Induced by Climate Change∗

Pricing Uncertainty Induced by Climate Change∗

Michael Barnett (Arizona State University), William Brock (University of Wisconsin and University of Missouri), Lars Peter Hansen (University of Chicago)

January 30, 2020

Abstract

Geophysicists examine and document the repercussions for the earth’s climate induced by alternative emission scenarios and model specifications. Using simplified approximations, they produce tractable characterizations of the associated uncertainty. Meanwhile, economists write highly stylized damage functions to speculate about how climate change alters macroeconomic and growth opportunities. How can we assess both climate and emissions impacts, as well as uncertainty in the broadest sense, in social decision-making? We provide a framework for answering this question by embracing recent decision theory and tools from asset pricing, and we apply this structure with its interacting components to a revealing quantitative illustration. (JEL D81, E61, G12, G18, Q51, Q54)

∗ We thank James Franke, Elisabeth Moyer, and Michael Stein of RDCEP for the help they have given us on this paper. Comments, suggestions and encouragement from Harrison Hong and Jose Scheinkman are most appreciated. We gratefully acknowledge Diana Petrova and Grace Tsiang for their assistance in preparing this manuscript, Erik Chavez for his comments, and Jiaming Wang, John Wilson, Han Xu, and especially Jieyao Wang for computational assistance. More extensive results and python scripts are available at http://github.com/lphansen/Climate. Financial support for this project was provided by the Alfred P. Sloan Foundation [grant G-2018-11113] and computational support was provided by the Research Computing Center at the University of Chicago. Send correspondence to Lars Peter Hansen, University of Chicago, 1126 E.

Dear Workshop Participants, This Paper Reports Results of a Vignette

Dear Workshop Participants,

This paper reports results of a vignette study in which subjects tell us how they believe they would respond when faced with various (hypothetical) scenarios, along with a closely related experiment in which subjects engage in real interactions and face monetary consequences for their (and others’) decisions. The latter experiment is a pilot study on a subject pool of MTurk workers, which we conducted just last week. We wanted to make sure we were able to replicate the results of the vignette in a setting with real interactions and monetary incentives before conducting a more expensive, full-scale version of the second experiment on student participants in the lab. We will be able to make changes to the design prior to running the full version of that experiment. Thus, your feedback on all aspects of the paper, especially the design of the second experiment, will be particularly valuable to us going forward.

Rebecca

Norm-Based Enforcement of Promises∗

Nathan Atkinson† (ETH Zurich), Rebecca Stone‡ (UCLA), Alexander Stremitzer§ (ETH Zurich)

January 22, 2020

Abstract

Considerable evidence suggests that people are internally motivated to keep their promises. But it is unclear whether promises alone create a meaningful level of commitment in many economically relevant situations where the stakes are high. We experimentally study the behavior of third-party observers of an interaction between a potential promisor and potential promisee who have the opportunity to punish, at a cost to themselves, non-cooperative behavior by the potential promisor. Our results suggest that third parties' motivations to punish promise breakers have the same structure as the moral motivations of those deciding whether or not to keep their promises.

Theories of the Policy Process

Theories of the Policy Process

Edited by Paul A. Sabatier, University of California, Davis

Westview Press, A Member of the Perseus Books Group

Copyright © 2007 by Westview Press. Published by Westview Press, A Member of the Perseus Books Group. All rights reserved. Printed in the United States of America. No part of this book may be reproduced in any manner whatsoever without written permission except in the case of brief quotations embodied in critical articles and reviews. For information, address Westview Press, 5500 Central Avenue, Boulder, Colorado 80301-2877. Find us on the World Wide Web at www.westviewpress.com.

Westview Press books are available at special discounts for bulk purchases in the United States by corporations, institutions, and other organizations. For more information, please contact the Special Markets Department at the Perseus Books Group, 11 Cambridge Center, Cambridge MA 02142, or call (617) 252-5298 or (800) 255-1514, or e-mail [email protected].

Library of Congress Cataloging-in-Publication Data
Theories of the policy process / edited by Paul A. Sabatier. p. cm. Includes bibliographical references and index.
ISBN-13: 978-0-8133-4359-4 (pbk. : alk. paper)
ISBN-10: 0-8133-4359-3 (pbk. : alk. paper)
1. Policy sciences. 2. Political planning. I. Sabatier, Paul A.
H97.T475 2007 320.6—dc22 2006036952

10 9 8 7 6 5 4 3 2

Contents
Part I Introduction
1 The Need for Better Theories, Paul A.

ΒΙΒΛΙΟΓΡΑΦΙΑ / Bibliography

Issue 53, October-December 2019

Bibliography: Nobel Prize in Economics

All issues are published online at the Bank’s website.
Greek address: https://www.bankofgreece.gr/trapeza/kepoet/h-vivliothhkh-ths-tte/e-ekdoseis-kai-anakoinwseis
English address: https://www.bankofgreece.gr/en/the-bank/culture/library/e-publications-and-announcements

Bank of Greece. Centre for Culture, Research and Documentation, Library Section
21 El. Venizelos Ave., 102 50 Athens, [email protected], Tel. +30-210-3202446, 3202396, 3203129

Bibliography, issue 53, Oct.-Dec. 2019, Nobel Prize in Economics
Contributors: A. Nadali, E. Semertzaki, G. Tsouri

Table of contents
Introduction
2019: Abhijit Banerjee, Esther Duflo and Michael Kremer
Monographs
Working papers