MPEG-H Immersive Sound for Broadcast, Streaming, and Music

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Ts 103 190-2 V1.2.1 (2018-02)

ETSI TS 103 190-2 V1.2.1 (2018-02) TECHNICAL SPECIFICATION Digital Audio Compression (AC-4) Standard; Part 2: Immersive and personalized audio Reference RTS/JTC-043-2 Keywords audio, broadcasting, codec, content, digital, distribution, object audio, personalization ETSI 650 Route des Lucioles F-06921 Sophia Antipolis Cedex - FRANCE Tel.: +33 4 92 94 42 00 Fax: +33 4 93 65 47 16 Siret N° 348 623 562 00017 - NAF 742 C Association à but non lucratif enregistrée à la Sous-Préfecture de Grasse (06) N° 7803/88 Important notice The present document can be downloaded from: http://www.etsi.org/standards-search The present document may be made available in electronic versions and/or in print. The content of any electronic and/or print versions of the present document shall not be modified without the prior written authorization of ETSI. In case of any existing or perceived difference in contents between such versions and/or in print, the only prevailing document is the print of the Portable Document Format (PDF) version kept on a specific network drive within ETSI Secretariat. Users of the present document should be aware that the document may be subject to revision or change of status. Information on the current status of this and other ETSI documents is available at https://portal.etsi.org/TB/ETSIDeliverableStatus.aspx If you find errors in the present document, please send your comment to one of the following services: https://portal.etsi.org/People/CommiteeSupportStaff.aspx Copyright Notification No part may be reproduced or utilized in any form or by any means, electronic or mechanical, including photocopying and microfilm except as authorized by written permission of ETSI. -

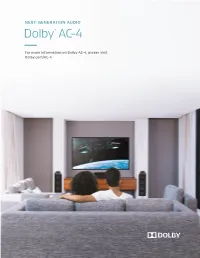

Dolby AC-4, Please Visit Dolby.Com/AC-4

NEXT-GENERATION AUDIO Dolby® AC-4 For more information on Dolby AC-4, please visit Dolby.com/AC-4. Integrating Personalized and Immersive Programming into Broadcast Operations The diagram below outlines a representative end-to-end signal flow for live and post-produced content through the broadcast ecosystem. [Diagram: end-to-end signal flow through production, outside broadcast, postproduction, broadcaster, pay TV operator, and in-the-home stages] IMMERSIVENESS Dolby AC-4 natively supports immersive audio to move sound around the audience in three-dimensional space. Both channel-based and object-based immersive audio can be delivered to the home and played back on speakers or headphones. -

Virtualization of Audio-Visual Services

Software Defined Media: Virtualization of Audio-Visual Services Manabu Tsukada∗, Keiko Ogawa†, Masahiro Ikeda‡, Takuro Sone‡, Kenta Niwa§, Shoichiro Saito§, Takashi Kasuya¶, Hideki Sunahara†, and Hiroshi Esaki∗ ∗Graduate School of Information Science and Technology, The University of Tokyo Email: [email protected], [email protected] †Graduate School of Media Design, Keio University / Email: [email protected], [email protected] ‡Yamaha Corporation / Email: fmasahiro.ikeda, [email protected] §NTT Media Intelligence Laboratories / Email: fniwa.kenta, [email protected] ¶Takenaka Corporation / Email: [email protected] Abstract: Internet-native audio-visual services are witnessing rapid development. Among these services, object-based audio-visual services are gaining importance. In 2014, we established the Software Defined Media (SDM) consortium to target new research areas and markets involving object-based digital media and Internet-by-design audio-visual environments. In this paper, we introduce the SDM architecture that virtualizes networked audio-visual services along with the development of smart buildings and smart cities using Internet of Things (IoT) devices and smart building facilities. Moreover, we design the SDM architecture as a layered architecture to promote the development [...] We believe that innovative applications will emerge from the fusion of object-based audio and video systems, including new interactive education systems and public viewing systems. In 2014, we established the Software Defined Media (SDM) consortium to target new research areas and markets involving object-based digital media and Internet-by-design audio-visual environments. We design SDM along with the development of smart buildings and smart cities using Internet of Things (IoT) devices and smart building facilities. -

DVD850 DVD Video Player with Jog/Shuttle Remote

DVD Video Player DVD850 DVD Video Player with Jog/Shuttle Remote • DVD-Video, Video CD, and Audio CD Compatible • Advanced DVD-Video technology, including 10-bit video DAC and 24-bit audio DAC • Dual-lens optical pickup for optimum signal readout from both CD and DVD • Built-in Dolby Digital™ audio decoder with delay and balance controls • Digital output for Dolby Digital™ (AC-3), DTS, and PCM • 6-channel analog output for Dolby Digital™, Dolby Pro Logic™, and stereo • Choice of up to 8 audio languages • Choice of up to 32 subtitle languages • 3-dimensional virtual surround sound • Multiangle • Digital zoom (play & still) • Graphic bit rate display • Remote Locator™ DVD850RC Remote Control DVD Video Player DVD850 Feature Highlights: Component Video Out: In addition to supporting traditional TV formats, Philips Magnavox DVD supports the latest high resolution TVs. Component video output offers superb color purity, crisp color detail, and reduced color noise, surpassing even that of S-Video! Today’s DVD is already prepared to work with tomorrow’s technology. Gold-Plated Digital Coaxial Cables: These gold-plated cables provide the clearest connection possible with high data capacity delivering maximum transmission efficiency. Digital Optical: For optimum flexibility, DVD offers digital optical connection which delivers better, more dynamic sound reproduction. Analog 6-Channel Built-in AC3 Decoder: Dolby® Digital Sound (AC3) gives you dynamic theater-quality sound while sharply filtering coding noise and reducing data consumption. Technical Specifications: Playback System: DVD Video, Video CD, CD, DVD-R. TV Standard: Number of Lines: 525; Playback: NTSC/60Hz. Video Format: Signal: Digital; Signal Handling: Components; Digital Compression: MPEG2 for DVD, MPEG1 for VCD. -

An Introduction to MPEG Transport Streams

An introduction to MPEG-TS: all you should know before using TSDuck (Version 8) Topics • MPEG transport streams • packets, sections, tables, PES, demux • DVB SimulCrypt • architecture, synchronization, ECM, EMM, scrambling • Standards • MPEG, DVB, others Transport streams: packets and packetization Standard key terms • Service / Program • DVB term: service • MPEG term: program • TV channel (video and / or audio) • data service (software download, application data) • Transport stream • also known as « TS », « multiplex », « transponder » • continuous bitstream • modulated and transmitted using one given frequency • aggregates several services • Signalization • set of data structures in a transport stream • describes the structure of transport streams and services MPEG-2 transport stream • Structure of MPEG-2 TS defined in ISO/IEC 13818-1 • One operator uses several TS • TS = synchronous stream of 188-byte TS packets • 4-byte header • optional « adaptation field », a kind of extended header • payload, up to 184 bytes • Multiplex of up to 8192 independent elementary streams (ES) • each ES is identified by a Packet Identifier (PID) • each TS packet belongs to a PID, 13-bit PID in packet header • smooth muxing is complex, demuxing is trivial • Two types of ES content • PES, Packetized Elementary Stream: audio, video, subtitles, teletext • sections: data structures Multiplex of elementary streams • A transport stream is a multiplex of elementary streams • elementary stream = sequence of TS packets with same PID value in header • one set of elementary -
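The packet layout described in the excerpt above (188-byte packets, a 4-byte header, a 13-bit PID) can be illustrated with a minimal Python sketch. The bit positions follow the header syntax of ISO/IEC 13818-1; the sync byte value 0x47 also comes from that standard rather than from the excerpt. The names `TsHeader`, `parse_ts_header`, and `demux_by_pid` are hypothetical and are not part of TSDuck or any other library.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List

TS_PACKET_SIZE = 188  # fixed TS packet length in bytes
SYNC_BYTE = 0x47      # first header byte per ISO/IEC 13818-1 (not stated in the excerpt)


@dataclass
class TsHeader:
    """Fields of the 4-byte TS packet header (hypothetical container type)."""
    transport_error: bool
    payload_unit_start: bool
    pid: int                    # 13-bit Packet Identifier, 0..8191
    scrambling_control: int     # 2 bits
    has_adaptation_field: bool
    has_payload: bool
    continuity_counter: int     # 4 bits


def parse_ts_header(packet: bytes) -> TsHeader:
    """Decode the 4-byte header of one 188-byte TS packet."""
    if len(packet) != TS_PACKET_SIZE:
        raise ValueError("a TS packet must be exactly 188 bytes")
    if packet[0] != SYNC_BYTE:
        raise ValueError("missing sync byte 0x47")
    b1, b2, b3 = packet[1], packet[2], packet[3]
    return TsHeader(
        transport_error=bool(b1 & 0x80),
        payload_unit_start=bool(b1 & 0x40),
        pid=((b1 & 0x1F) << 8) | b2,  # low 5 bits of byte 1 plus all of byte 2
        scrambling_control=(b3 >> 6) & 0x03,
        has_adaptation_field=bool(b3 & 0x20),
        has_payload=bool(b3 & 0x10),
        continuity_counter=b3 & 0x0F,
    )


def demux_by_pid(stream: bytes) -> Dict[int, List[bytes]]:
    """Group whole packets by PID; trailing bytes shorter than a packet are ignored."""
    by_pid: Dict[int, List[bytes]] = defaultdict(list)
    for offset in range(0, len(stream) - TS_PACKET_SIZE + 1, TS_PACKET_SIZE):
        packet = stream[offset:offset + TS_PACKET_SIZE]
        by_pid[parse_ts_header(packet).pid].append(packet)
    return dict(by_pid)
```

Grouping packets by the PID field is all that basic demultiplexing requires, which matches the excerpt's remark that demuxing is trivial. The sketch assumes the input is already aligned on packet boundaries; a real demultiplexer would also need to resynchronize on the sync byte and parse adaptation fields.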

Implementing Object-Based Audio in Radio Broadcasting

Object-based Audio in Radio Broadcast Implementing Object-based audio in radio broadcasting Diploma thesis submitted for the purpose of obtaining the academic degree Dipl.-Ing. for technical and scientific professions in the master's programme Digitale Medientechnologien at Fachhochschule St. Pölten, master class Audio Design, by: Baran Vlad DM161567 Supervisor and first assessor: FH-Prof. Dipl.-Ing Franz Zotlöterer Second assessor: FH Lektor. Dipl.-Ing Stefan Lainer [Vienna, 09.09.2019] I Declaration of honour I affirm that - I wrote this thesis independently, used no sources or aids other than those stated, and made use of no other unauthorised help. - I have not previously submitted this topic, in Austria or abroad, to an assessor for evaluation or in any form as an examination paper. This thesis is identical to the one evaluated by the assessor. .................................................. ................................................ Place, date Signature II Abstract The science of object-based audio production deals with a new way of conveying spatial information that moves away from channel-based systems toward an approach that processes sound independently of the device on which it is rendered. These object-based systems treat sound elements as objects linked to metadata that describe their behaviour. So far, this research has predominantly -

BDP9100/05 Philips Blu-Ray Disc Player

Philips Blu-ray Disc player BDP9100 True cinema experience in 21:9 ultra-widescreen The perfect companion for the Cinema 21:9 TV. Unlike normal Blu-ray players, the BDP9100 is the only Blu-ray player allowing you to shift subtitles in the 21:9 screen aspect ratio, to retain the subtitles without the black bars. See more • 21:9 movie aspect ratio, no black bars bottom and top • Blu-ray Disc playback for sharp images in full HD 1080p • 1080p at 24 fps for cinema-like images • DVD video upscaling to 1080p via HDMI for near-HD images • DivX® Ultra for enhanced playback of DivX® media files • x.v.Colour brings more natural colours to HD camcorder videos Hear more • Dolby TrueHD and DTS-HD MA for HD 7.1 surround sound Engage more • BD-Live (Profile 2.0) to enjoy online Blu-ray bonus content • AVC HD to enjoy high definition camcorder recordings on DVD • Hi-Speed USB 2.0 Link plays video/music from USB flash drives • Enjoy all your movies and music from CDs and DVDs • EasyLink controls all EasyLink products with a single remote Blu-ray Disc player BDP9100/05 Highlights 21:9 movie aspect ratio: Be blown away by movies the way they are [...] BD-Live (Profile 2.0): BD-Live opens up your world of high definition [...] resolution - ensuring more details and more true-to-life pictures. Progressive Scan (represented by "p" in "1080p") eliminates the line structure prevalent on TV screens, again ensuring relentlessly sharp images. To top it off, HDMI makes a direct digital connection that can carry uncompressed digital HD video as well as digital multi-channel audio, without conversions to analogue - delivering perfect picture and sound quality, completely free from noise. -

Audio Coding for Digital Broadcasting

Recommendation ITU-R BS.1196-7 (01/2019) Audio coding for digital broadcasting BS Series Broadcasting service (sound) Foreword The role of the Radiocommunication Sector is to ensure the rational, equitable, efficient and economical use of the radio-frequency spectrum by all radiocommunication services, including satellite services, and carry out studies without limit of frequency range on the basis of which Recommendations are adopted. The regulatory and policy functions of the Radiocommunication Sector are performed by World and Regional Radiocommunication Conferences and Radiocommunication Assemblies supported by Study Groups. Policy on Intellectual Property Right (IPR) ITU-R policy on IPR is described in the Common Patent Policy for ITU-T/ITU-R/ISO/IEC referenced in Resolution ITU-R 1. Forms to be used for the submission of patent statements and licensing declarations by patent holders are available from http://www.itu.int/ITU-R/go/patents/en where the Guidelines for Implementation of the Common Patent Policy for ITU-T/ITU-R/ISO/IEC and the ITU-R patent information database can also be found. Series of ITU-R Recommendations (Also available online at http://www.itu.int/publ/R-REC/en) Series: Title. BO: Satellite delivery; BR: Recording for production, archival and play-out; film for television; BS: Broadcasting service (sound); BT: Broadcasting service (television); F: Fixed service; M: Mobile, radiodetermination, amateur and related satellite services; P: Radiowave propagation; RA: Radio astronomy; RS: Remote sensing systems; S: Fixed-satellite service; SA: Space applications and meteorology; SF: Frequency sharing and coordination between fixed-satellite and fixed service systems; SM: Spectrum management; SNG: Satellite news gathering; TF: Time signals and frequency standards emissions; V: Vocabulary and related subjects. Note: This ITU-R Recommendation was approved in English under the procedure detailed in Resolution ITU-R 1. -

Surround Sound Processed by Opus Codec: a Perceptual Quality Assessment

28. Konferenz Elektronische Sprachsignalverarbeitung 2017, Saarbrücken SURROUND SOUND PROCESSED BY OPUS CODEC: A PERCEPTUAL QUALITY ASSESSMENT Franziska Trojahn, Martin Meszaros, Michael Maruschke and Oliver Jokisch Hochschule für Telekommunikation Leipzig, Germany [email protected] Abstract: The article describes the first perceptual quality study of 5.1 surround sound that has been processed by the Opus codec standardised by the Internet Engineering Task Force (IETF). All listening sessions with up to five subjects took place in a slightly sound absorbing laboratory, simulating living room conditions. For the assessment we conducted a Degradation Category Rating (DCR) listening-opinion test according to ITU-T P.800 recommendation with stimuli for six channels at total bitrates between 96 kbit/s and 192 kbit/s as well as hidden references. A group of 27 naive listeners compared a total of 20 sound samples. The differences between uncompressed and degraded sound samples were rated on a five-point degradation category scale resulting in Degradation Mean Opinion Score (DMOS). The overall results show that the average quality correlates with the bitrates. The quality diverges for the individual test stimuli depending on the music characteristics. Under most circumstances, a bitrate of 128 kbit/s is sufficient to achieve acceptable quality. 1 Introduction Nowadays, a high number of different speech and audio codecs are implemented in several kinds of multimedia applications, including audio / video entertainment, broadcasting and gaming. In recent years the demand for low delay and high quality audio applications, such as remote real-time jamming and cloud gaming, has been increasing. Therefore, current research objectives do not only include close to natural speech or audio quality, but also the requirements of low bitrates and a minimum latency. -

Comprehensive Video/Audio Analysis to Reduce Debug Cycle

VEGA H264 Content Readiness - From Creation to Distribution Comprehensive Video/Audio Analysis to Reduce Debug Cycle Addressing the needs of media professionals to debug and optimize digital video products, Interra’s Vega H264 provides detailed analysis of video and audio streams. Reducing development time and costs, and increasing productivity, Vega H264 enables media professionals to quickly bring to market high quality, standard-compliant digital video products. Vega H264 is an ideal tool for media professionals who need to: • Verify a stream’s compliance with the defined standard • Debug an encoded stream, or optimize a stream's buffer requirements • Evaluate and compare the performance and quality of video compression/decompression tools • Optimize and refine video compression CODEC • Check interoperability issues New • Multicore support for video stream analysis • Hierarchical view of SVC Layers • Audio Video synchronization check • Additional standard support: H.264 SVC, AVS, ISDB-T, Teletext, Subtitles, Close Captions, PCAP, HDV, MJPEG 2000, and more... Key Product Benefits • Extensible architecture to support other audio, video, and system formats • Powerful debug capabilities to [...] Standards Supported Video: H.264, H.264 SVC, H.263, H.263+, MPEG-4, MPEG-2, MPEG-1, AVS Audio: AAC, Dolby AC-3, Dolby Digital Plus, AMR, MP3, PCM, LPCM, MPEG-1/MPEG-2 Audio System Streams: MPEG-2 Transport, MPEG-2 Program/DVD VOB, QuickTime, MPEG-1 Systems, MP4, 3GPP/3GPP2, AVC, AVI, HDV Broadcast Standards: ATSC, DVB, DVB-T/DVB-H, TR 101 290 Priority 1,2 -

HTS3450/77 Philips DVD Home Theater System with Video

Philips DVD home theater system with Video Upscaling up to 1080i DivX Ultra HTS3450 Turn up your experience with HDMI and video upscaling This stylish High Definition digital home entertainment system plays practically any disc in high quality Dolby and DTS multi-channel surround sound. So just relax and fully immerse yourself in movies and music at home. Great audio and video performance • HDMI digital output for easy connection with only one cable • Video Upscaling for improved resolution of up to 1080i • High definition JPEG playback for images in true resolution • DTS, Dolby Digital and Pro Logic II surround sound • Progressive Scan component video for optimized image quality Play it all • Movies: DVD, DVD+R/RW, DVD-R/RW, (S)VCD, DivX • Music: CD, MP3-CD, CD-R/RW & Windows Media™ Audio • DivX Ultra Certified for enhanced playback of DivX videos • Picture CD (JPEG) with music (MP3) playback Quick and easy set-up • Easy-fit™ connectors with color-coding for a simple set-up DVD home theater system with Video Upscaling up to 1080i HTS3450/77 DivX Ultra Highlights HDMI for simple AV connection: HDMI stands for High Definition Multimedia Interface. It is a direct digital connection that can carry digital HD video as well as digital multichannel audio. By eliminating the conversion to analog signals it delivers perfect picture and sound quality, completely free [...] High definition JPEG playback: High definition JPEG playback lets you view your pictures on your television in resolutions as high as two megapixels. Now you can view your digital pictures in absolute clarity, without loss of quality or detail - and share them with friends and family in the comfort of your living [...] Progressive Scan: Progressive Scan doubles the vertical resolution of the image resulting in a noticeably sharper picture. Instead of sending a field comprising the odd lines to the screen first, followed by the field with the even lines, both fields are written at one time. -

Operating Instructions Blu-Ray Disc Home Theater Sound System SC

Operating Instructions Blu-ray Disc Home Theater Sound System Model No. SC-BT303 SC-BT300 SC-BT203 SC-BT200 The illustration shows the image of the unit SC-BT300 with “P” indicated on the packaging. Dear customer Thank you for purchasing this product. For optimum performance and safety, please read these instructions carefully. Before connecting, operating or adjusting this product, please read the instructions completely. Please keep this manual for future reference. To update the firmware of this unit, refer to page 32. Region management information BD-Video Example: This unit plays BD-Video marked with labels containing the region code A. DVD-Video Example: This unit plays DVD-Video marked with labels containing the region number “1” or “ALL”. For the U.S.A. and Canada: If you have any questions contact U.S.A. and Puerto Rico: 1-800-211-PANA(7262) Canada: 1-800-561-5505 As an ENERGY STAR® Partner, Panasonic has determined that this product meets the ENERGY STAR® guidelines for energy efficiency. For Canada only: The word “Participant” is used in place of the word “Partner”. Only for U.S.A. and Puerto Rico: The warranty can be found on page 58. Canada: The warranty can be found on page 59. P PC PX RQT9508-1P 2009/7/08 These operating instructions are applicable to models SC-BT300, SC-BT303, SC-BT200 and SC-BT203 for a variety of regions. System: SC-BT300 SC-BT303 SC-BT200 SC-BT203 Main unit: SA-BT300 SA-BT300 SA-BT200 SA-BT203 Getting started